---

license: apache-2.0

pipeline_tag: text-to-image

---

# Work in progress: training is ongoing!

⚡️Waifu: efficient high-resolution waifu synthesis

Waifu is a free text-to-image model that efficiently generates images from prompts in 80 languages. Our goal is to build a small model without compromising on quality.

## Core design

(1) [**AuraDiffusion/16ch-vae**](https://huggingface.co/AuraDiffusion/16ch-vae): a fully open-source 16-channel VAE, natively trained in fp16.
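To make the channel count concrete, here is a small sketch of the latent size a 16-channel VAE produces. It assumes an 8× spatial downsampling factor, which is common for 16-channel VAEs but is not stated in this card; `latent_shape` is a hypothetical helper, not part of any library.

```python
def latent_shape(height, width, channels=16, downsample=8):
    """Return (C, H/f, W/f) for a VAE latent; downsample=8 is an assumption."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (channels, height // downsample, width // downsample)

# A 512x512 RGB image (the model's current resolution)
c, h, w = latent_shape(512, 512)
pixel_values = 3 * 512 * 512
print((c, h, w))                   # (16, 64, 64)
print(pixel_values / (c * h * w))  # 12.0: 12x fewer values than the RGB image
print(pixel_values / (4 * h * w))  # 48.0: a 4-channel VAE at the same factor compresses 4x harder
```

Under this assumption, the 16-channel latent keeps four times as many values per spatial position as a classic 4-channel VAE, which generally helps it reconstruct fine detail.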

(2) [**Linear DiT**](https://github.com/NVlabs/Sana): a 1.6B-parameter DiT (diffusion transformer) with linear attention.
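Why linear attention keeps a DiT cheap can be sketched in a few lines of NumPy. This assumes the ReLU-kernel linear attention described for Sana; the exact block used in waifu (heads, normalization, extra convolutions) may differ, and `linear_attention` / `quadratic_reference` are illustrative names. Because keys and values are first summarized into a d × d matrix, cost grows linearly with the number of image tokens N instead of quadratically.

```python
import numpy as np

def linear_attention(q, k, v, eps=1e-6):
    """ReLU-kernel linear attention in O(N * d^2) time.

    q, k: (N, d) queries and keys; v: (N, d_v) values.
    """
    phi_q, phi_k = np.maximum(q, 0.0), np.maximum(k, 0.0)  # non-negative feature maps
    kv = phi_k.T @ v           # (d, d_v): summarize all keys/values once
    k_sum = phi_k.sum(axis=0)  # (d,): shared normalizer
    num = phi_q @ kv           # (N, d_v)
    den = phi_q @ k_sum + eps  # (N,)
    return num / den[:, None]

def quadratic_reference(q, k, v, eps=1e-6):
    """Same kernel computed naively through an explicit N x N score matrix."""
    phi_q, phi_k = np.maximum(q, 0.0), np.maximum(k, 0.0)
    scores = phi_q @ phi_k.T   # (N, N): this is what linear attention avoids
    return (scores @ v) / (scores.sum(axis=1, keepdims=True) + eps)

rng = np.random.default_rng(0)
N, d = 64, 16
q, k, v = (rng.standard_normal((N, d)) for _ in range(3))
out = linear_attention(q, k, v)
print(out.shape)                                       # (64, 16)
print(np.allclose(out, quadratic_reference(q, k, v)))  # True
```

Both functions compute the same result; only the order of the matrix products differs, which is what removes the N × N term.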

(3) [**MEXMA-SigLIP**](https://huggingface.co/visheratin/mexma-siglip): MEXMA-SigLIP combines the [MEXMA](https://huggingface.co/facebook/MEXMA) multilingual text encoder with the image encoder from the [SigLIP](https://huggingface.co/timm/ViT-SO400M-14-SigLIP-384) model, giving us a high-performance CLIP-style model for 80 languages.

(4) Other: we use the Flow-Euler sampler, the Adafactor-Fused optimizer, and bf16 precision for training, and we accelerate convergence by combining efficient caption labeling (MoonDream, CogVLM, human annotation, GPT models) with Danbooru tags.
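A Flow-Euler sampler integrates the velocity field predicted by a flow-matching model with plain Euler steps. The sketch below assumes the common convention that t = 1 is pure noise and t = 0 is data, with a uniform timestep schedule (real samplers, including Sana's, often shift the schedule). The toy `oracle_velocity` stands in for the DiT and is hypothetical.

```python
import numpy as np

def flow_euler_sample(velocity_fn, x_noise, num_steps=20):
    """Integrate dx/dt = v(x, t) from t=1 (noise) to t=0 (data) with Euler steps."""
    ts = np.linspace(1.0, 0.0, num_steps + 1)  # uniform schedule (assumption)
    x = x_noise.copy()
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        v = velocity_fn(x, t_cur)     # model prediction (here: a toy oracle)
        x = x + (t_next - t_cur) * v  # t_next < t_cur, so this steps toward the data
    return x

# Toy "model": the exact velocity of the straight path x_t = (1 - t) * x0 + t * noise
# toward a single target x0, i.e. v = noise - x0 = (x_t - x0) / t.
x0 = np.array([0.5, -1.0, 2.0])

def oracle_velocity(x, t):
    return (x - x0) / t

rng = np.random.default_rng(0)
noise = rng.standard_normal(3)
sample = flow_euler_sample(oracle_velocity, noise, num_steps=10)
print(np.allclose(sample, x0))  # True
```

Because the toy path is a straight line, even a single Euler step lands exactly on `x0`; a trained model's velocity field is only approximately straight, which is why more steps are used in practice.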

## Pros

- Small model that can be trained on a common consumer GPU, with fast training.

- Supports multiple languages and demonstrates good prompt adherence.

- Uses a strong, fully open 16-channel VAE (variational autoencoder).

## Cons

- Trained on only 2 million images (low-budget model, approximately $3,000).

- Training dataset consists primarily of anime and illustrations (only about 1% realistic images).

- Low resolution only for now (512 px).

## Example

```bash
# First, install the latest diffusers from source
pip install git+https://github.com/huggingface/diffusers
```

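```py
import torch
from diffusers import DiffusionPipeline
# from pipeline_waifu import WaifuPipeline

pipe_id = "AiArtLab/waifu-2b"
variant = "fp16"

# Load the pipeline; trust_remote_code lets diffusers run the repo's pipeline code from the Hub
pipeline = DiffusionPipeline.from_pretrained(
    pipe_id,
    variant=variant,
    trust_remote_code=True,
).to("cuda")
# print(pipeline)

# Mixed-language prompt: Russian ("anime girl"), Arabic ("smiling seductively"),
# French ("against the backdrop of the Eiffel Tower")
prompt = 'аниме девушка, waifu, يبتسم جنسيا , sur le fond de la tour Eiffel'

# Fixed seed for reproducible results
generator = torch.Generator(device="cuda").manual_seed(42)

# The first element of the pipeline output is the list of generated images
images = pipeline(
    prompt=prompt,
    negative_prompt="",
    generator=generator,
)[0]

# Give each image its own file name so later images do not overwrite earlier ones
for i, img in enumerate(images):
    img.show()
    img.save(f"waifu_{i}.png")
```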

## Donations

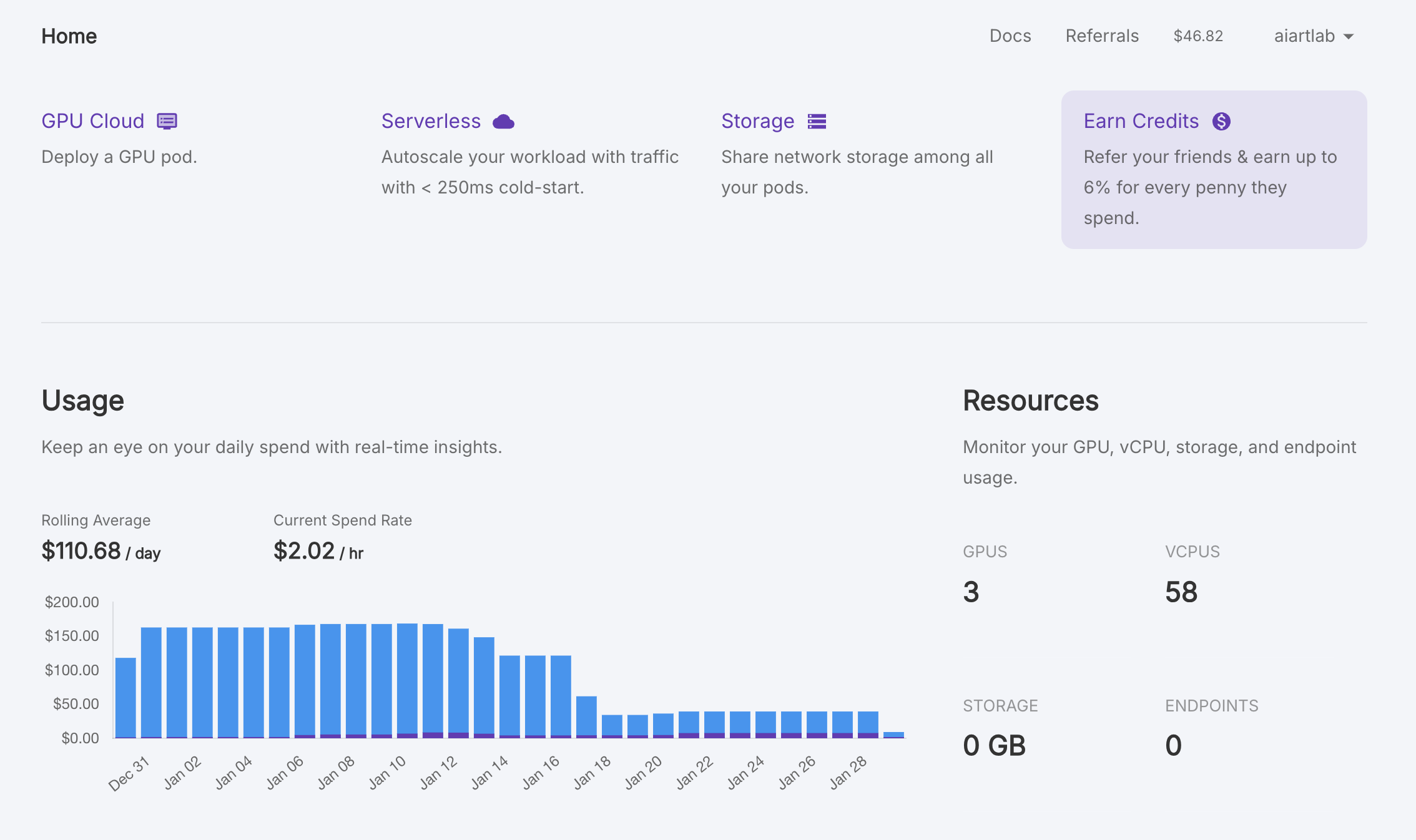

We are a small, GPU-poor group of enthusiasts (current training budget: ~$3k).

Please contact us if you can provide GPUs for training.

DOGE: DEw2DR8C7BnF8GgcrfTzUjSnGkuMeJhg83

## Contacts

[recoilme](https://t.me/recoilme)

## How to cite

```bibtex
@misc{Waifu,
  url = {https://huggingface.co/AiArtLab/waifu-2b},
  title = {waifu-2b},
  author = {recoilme and muinez and femboysLover}
}
```