FANG DAI

committed on

Upload 42 files

- .gitattributes +1 -0

- LICENSE.txt +201 -0

- ResNet_main.sh +61 -0

- Resent/main_train.py +113 -0

- Resent/scripts/config.py +29 -0

- Resent/scripts/dataset.py +80 -0

- Resent/scripts/model.py +152 -0

- Resent/scripts/multiAUC.py +138 -0

- Resent/testmodel.py +139 -0

- Tiger-Coarse.sh +23 -0

- Tiger-Fine.sh +21 -0

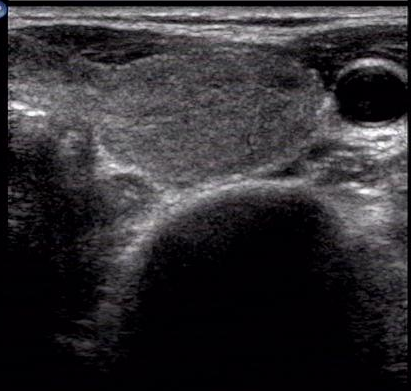

- dataset/Figure1.png +3 -0

- dataset/Resnet training data/PTC/test/0/0.txt +1 -0

- dataset/Resnet training data/PTC/test/1/1.txt +1 -0

- dataset/Resnet training data/PTC/train/0/fi.txt +1 -0

- dataset/Resnet training data/PTC/train/1/figure1.txt +1 -0

- dataset/Resnet training data/PTC/valid/0/0.txt +1 -0

- dataset/Resnet training data/PTC/valid/1/1.txt +1 -0

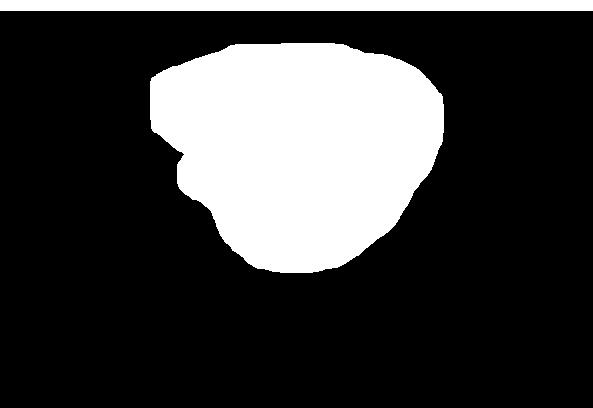

- dataset/training data/condition_BG/20191101_094946_8.png +0 -0

- dataset/training data/condition_BG/20191101_111608_16.png +0 -0

- dataset/training data/condition_BG/20191101_111809_22.png +0 -0

- dataset/training data/condition_BG/20191104_140920_45.png +0 -0

- dataset/training data/condition_BG/634878812500937500.png +0 -0

- dataset/training data/condition_BG/635348002413906250.png +0 -0

- dataset/training data/condition_BG/635501726176562500.png +0 -0

- dataset/training data/condition_FG/20191101_094946_8.png +0 -0

- dataset/training data/condition_FG/20191101_111608_16.png +0 -0

- dataset/training data/condition_FG/20191101_111809_22.png +0 -0

- dataset/training data/condition_FG/20191104_140920_45.png +0 -0

- dataset/training data/condition_FG/634878812500937500.png +0 -0

- dataset/training data/condition_FG/635348002413906250.png +0 -0

- dataset/training data/condition_FG/635501726176562500.png +0 -0

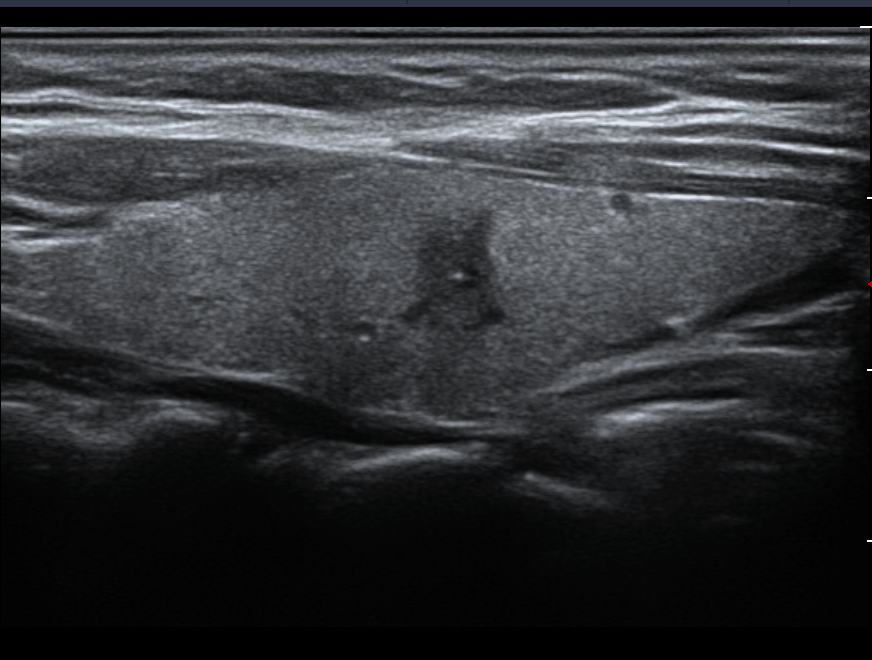

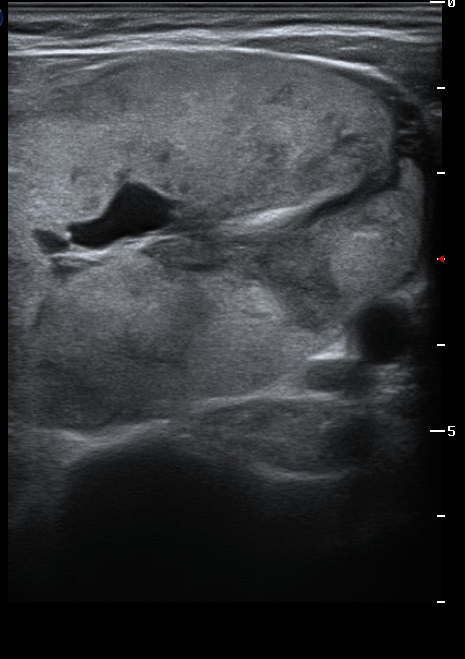

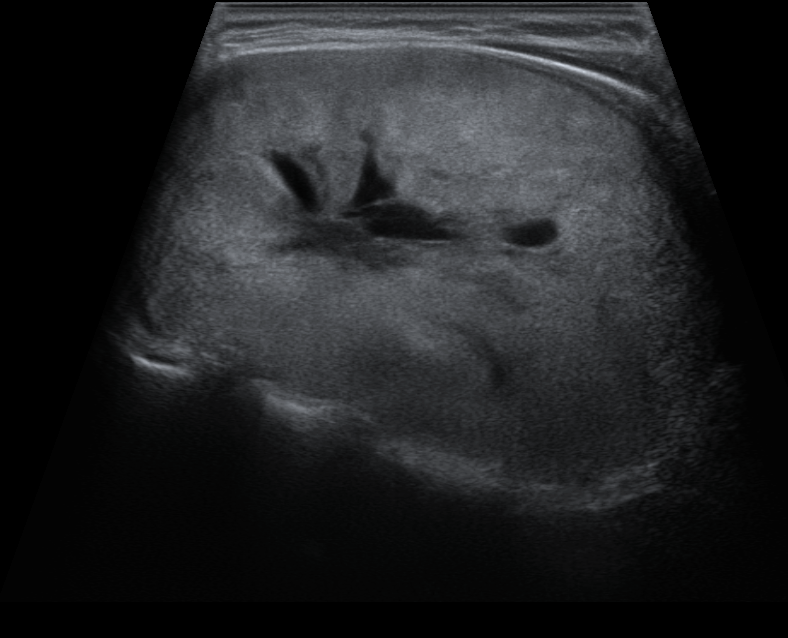

- dataset/training data/init_image/20191101_094946_8.png +0 -0

- dataset/training data/init_image/20191101_111608_16.png +0 -0

- dataset/training data/init_image/20191101_111809_22.png +0 -0

- dataset/training data/init_image/20191104_140920_45.png +0 -0

- dataset/training data/init_image/634878812500937500.png +0 -0

- dataset/training data/init_image/635348002413906250.png +0 -0

- dataset/training data/init_image/635501726176562500.jpg +0 -0

- dataset/training data/init_image/metadata.jsonl +7 -0

- model/pretrain/thyroid_stable_diffusion/1.txt +1 -0

- modelsaved/readme.md +16 -0

- requirements.txt +367 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+dataset/Figure1.png filter=lfs diff=lfs merge=lfs -text

LICENSE.txt

ADDED

@@ -0,0 +1,201 @@
+                                 Apache License
+                           Version 2.0, January 2004
+                        http://www.apache.org/licenses/
+
+   TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+   1. Definitions.
+
+      "License" shall mean the terms and conditions for use, reproduction,
+      and distribution as defined by Sections 1 through 9 of this document.
+
+      "Licensor" shall mean the copyright owner or entity authorized by
+      the copyright owner that is granting the License.
+
+      "Legal Entity" shall mean the union of the acting entity and all
+      other entities that control, are controlled by, or are under common
+      control with that entity. For the purposes of this definition,
+      "control" means (i) the power, direct or indirect, to cause the
+      direction or management of such entity, whether by contract or
+      otherwise, or (ii) ownership of fifty percent (50%) or more of the
+      outstanding shares, or (iii) beneficial ownership of such entity.
+
+      "You" (or "Your") shall mean an individual or Legal Entity
+      exercising permissions granted by this License.
+
+      "Source" form shall mean the preferred form for making modifications,
+      including but not limited to software source code, documentation
+      source, and configuration files.
+
+      "Object" form shall mean any form resulting from mechanical
+      transformation or translation of a Source form, including but
+      not limited to compiled object code, generated documentation,
+      and conversions to other media types.
+
+      "Work" shall mean the work of authorship, whether in Source or
+      Object form, made available under the License, as indicated by a
+      copyright notice that is included in or attached to the work
+      (an example is provided in the Appendix below).
+
+      "Derivative Works" shall mean any work, whether in Source or Object
+      form, that is based on (or derived from) the Work and for which the
+      editorial revisions, annotations, elaborations, or other modifications
+      represent, as a whole, an original work of authorship. For the purposes
+      of this License, Derivative Works shall not include works that remain
+      separable from, or merely link (or bind by name) to the interfaces of,
+      the Work and Derivative Works thereof.
+
+      "Contribution" shall mean any work of authorship, including
+      the original version of the Work and any modifications or additions
+      to that Work or Derivative Works thereof, that is intentionally
+      submitted to Licensor for inclusion in the Work by the copyright owner
+      or by an individual or Legal Entity authorized to submit on behalf of
+      the copyright owner. For the purposes of this definition, "submitted"
+      means any form of electronic, verbal, or written communication sent
+      to the Licensor or its representatives, including but not limited to
+      communication on electronic mailing lists, source code control systems,
+      and issue tracking systems that are managed by, or on behalf of, the
+      Licensor for the purpose of discussing and improving the Work, but
+      excluding communication that is conspicuously marked or otherwise
+      designated in writing by the copyright owner as "Not a Contribution."
+
+      "Contributor" shall mean Licensor and any individual or Legal Entity
+      on behalf of whom a Contribution has been received by Licensor and
+      subsequently incorporated within the Work.
+
+   2. Grant of Copyright License. Subject to the terms and conditions of
+      this License, each Contributor hereby grants to You a perpetual,
+      worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+      copyright license to reproduce, prepare Derivative Works of,
+      publicly display, publicly perform, sublicense, and distribute the
+      Work and such Derivative Works in Source or Object form.
+
+   3. Grant of Patent License. Subject to the terms and conditions of
+      this License, each Contributor hereby grants to You a perpetual,
+      worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+      (except as stated in this section) patent license to make, have made,
+      use, offer to sell, sell, import, and otherwise transfer the Work,
+      where such license applies only to those patent claims licensable
+      by such Contributor that are necessarily infringed by their
+      Contribution(s) alone or by combination of their Contribution(s)
+      with the Work to which such Contribution(s) was submitted. If You
+      institute patent litigation against any entity (including a
+      cross-claim or counterclaim in a lawsuit) alleging that the Work
+      or a Contribution incorporated within the Work constitutes direct
+      or contributory patent infringement, then any patent licenses
+      granted to You under this License for that Work shall terminate
+      as of the date such litigation is filed.
+
+   4. Redistribution. You may reproduce and distribute copies of the
+      Work or Derivative Works thereof in any medium, with or without
+      modifications, and in Source or Object form, provided that You
+      meet the following conditions:
+
+      (a) You must give any other recipients of the Work or
+          Derivative Works a copy of this License; and
+
+      (b) You must cause any modified files to carry prominent notices
+          stating that You changed the files; and
+
+      (c) You must retain, in the Source form of any Derivative Works
+          that You distribute, all copyright, patent, trademark, and
+          attribution notices from the Source form of the Work,
+          excluding those notices that do not pertain to any part of
+          the Derivative Works; and
+
+      (d) If the Work includes a "NOTICE" text file as part of its
+          distribution, then any Derivative Works that You distribute must
+          include a readable copy of the attribution notices contained
+          within such NOTICE file, excluding those notices that do not
+          pertain to any part of the Derivative Works, in at least one
+          of the following places: within a NOTICE text file distributed
+          as part of the Derivative Works; within the Source form or
+          documentation, if provided along with the Derivative Works; or,
+          within a display generated by the Derivative Works, if and
+          wherever such third-party notices normally appear. The contents
+          of the NOTICE file are for informational purposes only and
+          do not modify the License. You may add Your own attribution
+          notices within Derivative Works that You distribute, alongside
+          or as an addendum to the NOTICE text from the Work, provided
+          that such additional attribution notices cannot be construed
+          as modifying the License.
+
+      You may add Your own copyright statement to Your modifications and
+      may provide additional or different license terms and conditions
+      for use, reproduction, or distribution of Your modifications, or
+      for any such Derivative Works as a whole, provided Your use,
+      reproduction, and distribution of the Work otherwise complies with
+      the conditions stated in this License.
+
+   5. Submission of Contributions. Unless You explicitly state otherwise,
+      any Contribution intentionally submitted for inclusion in the Work
+      by You to the Licensor shall be under the terms and conditions of
+      this License, without any additional terms or conditions.
+      Notwithstanding the above, nothing herein shall supersede or modify
+      the terms of any separate license agreement you may have executed
+      with Licensor regarding such Contributions.
+
+   6. Trademarks. This License does not grant permission to use the trade
+      names, trademarks, service marks, or product names of the Licensor,
+      except as required for reasonable and customary use in describing the
+      origin of the Work and reproducing the content of the NOTICE file.
+
+   7. Disclaimer of Warranty. Unless required by applicable law or
+      agreed to in writing, Licensor provides the Work (and each
+      Contributor provides its Contributions) on an "AS IS" BASIS,
+      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+      implied, including, without limitation, any warranties or conditions
+      of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+      PARTICULAR PURPOSE. You are solely responsible for determining the
+      appropriateness of using or redistributing the Work and assume any
+      risks associated with Your exercise of permissions under this License.
+
+   8. Limitation of Liability. In no event and under no legal theory,
+      whether in tort (including negligence), contract, or otherwise,
+      unless required by applicable law (such as deliberate and grossly
+      negligent acts) or agreed to in writing, shall any Contributor be
+      liable to You for damages, including any direct, indirect, special,
+      incidental, or consequential damages of any character arising as a
+      result of this License or out of the use or inability to use the
+      Work (including but not limited to damages for loss of goodwill,
+      work stoppage, computer failure or malfunction, or any and all
+      other commercial damages or losses), even if such Contributor
+      has been advised of the possibility of such damages.
+
+   9. Accepting Warranty or Additional Liability. While redistributing
+      the Work or Derivative Works thereof, You may choose to offer,
+      and charge a fee for, acceptance of support, warranty, indemnity,
+      or other liability obligations and/or rights consistent with this
+      License. However, in accepting such obligations, You may act only
+      on Your own behalf and on Your sole responsibility, not on behalf
+      of any other Contributor, and only if You agree to indemnify,
+      defend, and hold each Contributor harmless for any liability
+      incurred by, or claims asserted against, such Contributor by reason
+      of your accepting any such warranty or additional liability.
+
+   END OF TERMS AND CONDITIONS
+
+   APPENDIX: How to apply the Apache License to your work.
+
+      To apply the Apache License to your work, attach the following
+      boilerplate notice, with the fields enclosed by brackets "[]"
+      replaced with your own identifying information. (Don't include
+      the brackets!) The text should be enclosed in the appropriate
+      comment syntax for the file format. We also recommend that a
+      file or class name and description of purpose be included on the
+      same "printed page" as the copyright notice for easier
+      identification within third-party archives.
+
+   Copyright 2024 Hui Lu, Fang Dai, Siqiong Yao, Shanghai jiao tong university
+
+   Licensed under the Apache License, Version 2.0 (the "License");
+   you may not use this file except in compliance with the License.
+   You may obtain a copy of the License at
+
+       http://www.apache.org/licenses/LICENSE-2.0
+
+   Unless required by applicable law or agreed to in writing, software
+   distributed under the License is distributed on an "AS IS" BASIS,
+   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+   See the License for the specific language governing permissions and
+   limitations under the License.

ResNet_main.sh

ADDED

@@ -0,0 +1,61 @@
+export MODELNAME="Thyroid_Benign&PTC"
+export ARCH="resnet"
+export IMAGEPATH="./dataset"
+export TRAIN="Benign&PTC_train"
+export VALID="Benign&PTC_valid"
+export TEST="Benign&PTC_test"
+export BATCH=64
+
+python main_train.py \
+    --modelname "$MODELNAME" \
+    --architecture "$ARCH" \
+    --imagepath "$IMAGEPATH" \
+    --train_data "$TRAIN" \
+    --valid_data "$VALID" \
+    --test_data "$TEST" \
+    --learning_rate 0.0005 \
+    --batch_size "$BATCH" \
+    --num_epochs 200 \
+    --Class 2
+
+
+
+export MODELNAME="Thyroid_Benign&FTC"
+export ARCH="resnet"
+export IMAGEPATH="./dataset"
+export TRAIN="Benign&FTC_train"
+export VALID="Benign&FTC_valid"
+export TEST="Benign&FTC_test"
+export BATCH=64
+
+python main_train.py \
+    --modelname "$MODELNAME" \
+    --architecture "$ARCH" \
+    --imagepath "$IMAGEPATH" \
+    --train_data "$TRAIN" \
+    --valid_data "$VALID" \
+    --test_data "$TEST" \
+    --learning_rate 0.0005 \
+    --batch_size "$BATCH" \
+    --num_epochs 200 \
+    --Class 2
+
+export MODELNAME="Thyroid_Benign&MTC"
+export ARCH="resnet"
+export IMAGEPATH="./dataset"
+export TRAIN="Benign&MTC_train"
+export VALID="Benign&MTC_valid"
+export TEST="Benign&MTC_test"
+export BATCH=64
+
+python main_train.py \
+    --modelname "$MODELNAME" \
+    --architecture "$ARCH" \
+    --imagepath "$IMAGEPATH" \
+    --train_data "$TRAIN" \
+    --valid_data "$VALID" \
+    --test_data "$TEST" \
+    --learning_rate 0.0005 \
+    --batch_size "$BATCH" \
+    --num_epochs 200 \
+    --Class 2
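
ResNet_main.sh repeats one invocation of main_train.py for three subtype pairs (PTC, FTC, MTC), changing only the names derived from the pair. A minimal sketch of generating the same three commands in a loop is shown here; `build_command` and `SUBTYPES` are hypothetical names that are not part of the commit, and the flag spellings follow the argparse definitions in main_train.py.

```python
import shlex

SUBTYPES = ["PTC", "FTC", "MTC"]  # the three pairs trained by ResNet_main.sh


def build_command(subtype, batch=64, lr=0.0005, epochs=200):
    """Return the argument list for one training run (hypothetical helper)."""
    pair = f"Benign&{subtype}"
    return [
        "python", "main_train.py",
        "--modelname", f"Thyroid_{pair}",
        "--architecture", "resnet",
        "--imagepath", "./dataset",
        "--train_data", f"{pair}_train",
        "--valid_data", f"{pair}_valid",
        "--test_data", f"{pair}_test",
        "--learning_rate", str(lr),
        "--batch_size", str(batch),
        "--num_epochs", str(epochs),
        "--Class", "2",
    ]


commands = [build_command(s) for s in SUBTYPES]
for cmd in commands:
    # shlex.join quotes arguments containing '&', so each printed line
    # can be pasted into a POSIX shell without being split at the '&'.
    print(shlex.join(cmd))
```

Passing the list form directly to `subprocess.run` would avoid shell quoting altogether; the printed form is only meant for inspection.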

Resent/main_train.py

ADDED

@@ -0,0 +1,113 @@
+import os
+import torch
+from torchvision import models
+import torch.nn as nn
+import torch.optim as optim
+import time
+import copy
+import sys
+import pandas as pd
+import matplotlib.pyplot as plt
+import numpy as np
+from sklearn.metrics import roc_auc_score
+from scripts.model import train_model
+import scripts.dataset as DATA
+import scripts.config as config
+import argparse
+from PIL import ImageFile
+ImageFile.LOAD_TRUNCATED_IMAGES = True
+
+
+def main(args):
+    modelname = args.modelname
+    imagepath = args.imagepath
+    label_num, subgroup_num = config.THYROID()
+    Datasets = DATA.Thyroid_Datasets
+
+    if args.architecture == 'resnet':
+        net = models.resnet18(pretrained=True)
+        features = net.fc.in_features
+        net.fc = nn.Sequential(
+            nn.Linear(features, args.Class))
+
+
+    if not os.path.exists('./modelsaved/%s' % modelname):
+        os.makedirs('./modelsaved/%s' % modelname)
+    if not os.path.exists('./result/%s' % modelname):
+        os.makedirs('./result/%s' % modelname)
+
+
+    data_transforms = config.Transforms(modelname)
+
+    print("%s Initializing Datasets and Dataloaders..." % modelname)
+
+    transformed_datasets = {}
+    transformed_datasets['train'] = Datasets(
+        path_to_images=imagepath,
+        fold=args.train_data,
+        PRED_LABEL=label_num,
+        transform=data_transforms['train'])
+    transformed_datasets['valid'] = Datasets(
+        path_to_images=imagepath,
+        fold=args.valid_data,
+        PRED_LABEL=label_num,
+        transform=data_transforms['valid'])
+    transformed_datasets['test'] = Datasets(
+        path_to_images=imagepath,
+        fold=args.test_data,
+        PRED_LABEL=label_num,
+        transform=data_transforms['valid'])
+
+    dataloaders = {}
+    dataloaders['train'] = torch.utils.data.DataLoader(
+        transformed_datasets['train'],
+        batch_size=args.batch_size,
+        shuffle=True,
+        num_workers=24)
+    dataloaders['valid'] = torch.utils.data.DataLoader(
+        transformed_datasets['valid'],
+        batch_size=1,
+        shuffle=False,
+        num_workers=24)
+    dataloaders['test'] = torch.utils.data.DataLoader(
+        transformed_datasets['test'],
+        batch_size=1,
+        shuffle=False,
+        num_workers=24)
+
+
+    if args.modelload_path:
+        net.load_state_dict(torch.load('%s' % args.modelload_path, map_location=lambda storage, loc: storage), strict=False)
+
+    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+
+    net = net.to(device)
+    optimizer = optim.Adam(filter(lambda p: p.requires_grad, net.parameters()), lr=args.learning_rate, betas=(0.9, 0.99), weight_decay=0.03)
+
+
+    if args.Class > 2:
+        criterion = nn.BCELoss()
+    else:
+        criterion = nn.CrossEntropyLoss()
+
+    train_model(net, dataloaders, criterion, optimizer, args.num_epochs, modelname, device)
+
+
+
+if __name__ == '__main__':
+    parser = argparse.ArgumentParser()
+    parser.add_argument("--modelname", type=str, default="Thyroid")
+    parser.add_argument("--architecture", type=str, choices=["resnet", "densnet", "efficientnet"], default="resnet")
+    parser.add_argument("--modelload_path", type=str, default=None)
+    parser.add_argument("--imagepath", type=str, default="./dataset/")
+    parser.add_argument("--train_data", type=str, default='thyroid_train')
+    parser.add_argument("--valid_data", type=str, default='thyroid_valid')
+    parser.add_argument("--test_data", type=str, default='thyroid_test')
+    parser.add_argument("--learning_rate", type=float, default=0.00005)
+    parser.add_argument("--batch_size", type=int, default=64)
+    parser.add_argument("--num_epochs", type=int, default=100)
+    parser.add_argument("--Class", type=int, default=2)
+    args = parser.parse_args()
+    main(args)
+
+
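
The parser in main_train.py defines `--learning_rate`. By default `argparse` accepts any unambiguous prefix of a long option, so a shortened spelling such as `--learning_rat` still resolves to `learning_rate` instead of raising an error. The sketch below reproduces only two of the arguments to show this; `make_parser` is a hypothetical helper, and `allow_abbrev=False` is one possible way to reject such spellings, not something the commit does.

```python
import argparse


def make_parser(allow_abbrev=True):
    """Build a reduced copy of the main_train.py parser (hypothetical helper)."""
    parser = argparse.ArgumentParser(allow_abbrev=allow_abbrev)
    parser.add_argument("--learning_rate", type=float, default=0.00005)
    parser.add_argument("--batch_size", type=int, default=64)
    return parser


# Default behaviour: the unambiguous prefix is accepted.
args = make_parser().parse_args(["--learning_rat", "0.0005"])
print(args.learning_rate)  # prints 0.0005

# With abbreviations disabled, the same command line is rejected:
# argparse reports the error on stderr and raises SystemExit.
strict = make_parser(allow_abbrev=False)
try:
    strict.parse_args(["--learning_rat", "0.0005"])
    rejected = False
except SystemExit:
    rejected = True
print(rejected)  # prints True
```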

Resent/scripts/config.py

ADDED

@@ -0,0 +1,29 @@
+from torchvision import transforms
+
+THYROID_LABEL = ['Benign', 'Malignant']
+THYROID_SUBGROUP = [['Papillary', 'Follicular', 'Medullary']]
+
+
+def THYROID():
+    return (THYROID_LABEL, THYROID_SUBGROUP[0])
+
+
+def Transforms(name):
+    # if name in ["Thyroid"]:
+    data_transforms_ONE = {
+        'valid': transforms.Compose([
+            transforms.CenterCrop(512),
+            transforms.Resize(224),
+            transforms.ToTensor(),
+            transforms.Normalize([.5, .5, .5], [.5, .5, .5])
+        ]),
+        'train': transforms.Compose([
+            transforms.CenterCrop(512),
+            transforms.RandomCrop(256),
+            transforms.Resize(224),
+            transforms.ToTensor(),
+            transforms.Normalize([.5, .5, .5], [.5, .5, .5])
+        ])
+    }
+    return data_transforms_ONE
+
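
Both pipelines in config.py end with `ToTensor()` followed by `Normalize([.5, .5, .5], [.5, .5, .5])`. `ToTensor()` scales 8-bit pixel values to the range [0, 1], and `Normalize` then applies `(value - mean) / std` per channel, so the network sees inputs in [-1, 1]. The sketch below works through that arithmetic in plain Python; `normalize` is a hypothetical stand-in for the per-channel computation, not the torchvision implementation.

```python
def normalize(value, mean=0.5, std=0.5):
    """Per-channel arithmetic of Normalize: (value - mean) / std."""
    return (value - mean) / std


# Black, mid-grey, and white pixels after ToTensor() are 0.0, 0.5, and 1.0.
scaled = [normalize(v) for v in (0.0, 0.5, 1.0)]
print(scaled)  # prints [-1.0, 0.0, 1.0]
```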

Resent/scripts/dataset.py

ADDED

|

@@ -0,0 +1,80 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pandas as pd

|

| 2 |

+

import numpy as np

|

| 3 |

+

from torch.utils.data import Dataset

|

| 4 |

+

import os

|

| 5 |

+

from PIL import Image

|

| 6 |

+

|

| 7 |

+

class Thyroid_Datasets(Dataset):

|

| 8 |

+

def __init__(

|

| 9 |

+

self,

|

| 10 |

+

path_to_images,

|

| 11 |

+

fold,

|

| 12 |

+

PRED_LABEL,

|

| 13 |

+

transform=None,

|

| 14 |

+

sample=0,

|

| 15 |

+

finding="any"):

|

| 16 |

+

self.transform = transform

|

| 17 |

+

self.path_to_images = path_to_images

|

| 18 |

+

self.PRED_LABEL = PRED_LABEL

|

| 19 |

+

self.df = pd.read_csv("%s/CSV/%s.csv" % (path_to_images,fold))

|

| 20 |

+

print("%s/CSV/%s.csv " % (path_to_images,fold), "num of images: %s" % len(self.df))

|

| 21 |

+

|

| 22 |

+

if(sample > 0 and sample < len(self.df)):

|

| 23 |

+

self.df = self.df.sample(sample)

|

| 24 |

+

self.df = self.df.dropna(subset = ['Path'])

|

| 25 |

+

self.df = self.df.set_index("Path")

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

def __len__(self):

|

| 29 |

+

return len(self.df)

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

def __getitem__(self, idx):

|

| 33 |

+

X = self.df.index[idx]

|

| 34 |

+

if str(X) is not None:

|

| 35 |

+

image = Image.open(os.path.join(self.path_to_images,str(X)))

|

| 36 |

+

image = image.convert('RGB')

|

| 37 |

+

label = self.df["label".strip()].iloc[idx].astype('int')

|

| 38 |

+

subg = self.df["subtype".strip()].iloc[idx]

|

| 39 |

+

if self.transform:

|

| 40 |

+

image = self.transform(image)

|

| 41 |

+

|

| 42 |

+

return (image, label, subg)

|

| 43 |

+

|

| 44 |

+

class Multi_Thyroid_Datasets(Dataset):

|

| 45 |

+

def __init__(

|

| 46 |

+

self,

|

| 47 |

+

path_to_images,

|

| 48 |

+

fold,

|

| 49 |

+

PRED_LABEL,

|

| 50 |

+

transform=None,

|

| 51 |

+

sample=0,

|

| 52 |

+

finding="any"):

|

| 53 |

+

self.transform = transform

|

| 54 |

+

self.path_to_images = path_to_images

|

| 55 |

+

self.PRED_LABEL = PRED_LABEL

|

| 56 |

+

self.df = pd.read_csv("%s/CSV/%s.csv" % (path_to_images,fold))

|

| 57 |

+

print("%s/CSV/%s.csv " % (path_to_images,fold), "num of images: %s" % len(self.df))

|

| 58 |

+

|

| 59 |

+

if(sample > 0 and sample < len(self.df)):

|

| 60 |

+

self.df = self.df.sample(sample)

|

| 61 |

+

self.df = self.df.dropna(subset = ['Path'])

|

| 62 |

+

self.df = self.df.set_index("Path")

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

def __len__(self):

|

| 66 |

+

return len(self.df)

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

def __getitem__(self, idx):

|

| 70 |

+

X = self.df.index[idx]

|

| 71 |

+

if str(X) is not None:

|

| 72 |

+

image = Image.open(os.path.join(self.path_to_images,str(X)))

|

| 73 |

+

image = image.convert('RGB')

|

| 74 |

+

label = self.df["label".strip()].iloc[idx].astype('int')

|

| 75 |

+

subg = self.df["subtype".strip()].iloc[idx]

|

| 76 |

+

if self.transform:

|

| 77 |

+

image = self.transform(image)

|

| 78 |

+

|

| 79 |

+

return (image, label, subg)

|

| 80 |

+

return (image, label, subg)

|
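The two dataset classes above share one pattern: read `<path_to_images>/CSV/<fold>.csv`, optionally subsample, drop rows without a `Path`, and return `(image, label, subg)` by position. The following is a minimal, dependency-free sketch of that pattern, not the repository's code. `CsvIndexedDataset`, `CSV_TEXT`, the file names, and the subtype values are hypothetical, and the image-loading step is replaced by returning the path so that the example runs without PyTorch, pandas, or Pillow.

```python
import csv
import io
import random


class CsvIndexedDataset:
    """Hypothetical stand-in for the CSV-backed dataset classes in the diff."""

    def __init__(self, csv_text, sample=0, seed=0):
        rows = list(csv.DictReader(io.StringIO(csv_text)))
        # Optional subsampling, mirroring `if sample > 0 and sample < len(self.df)`.
        if 0 < sample < len(rows):
            rows = random.Random(seed).sample(rows, sample)
        # Drop rows with an empty path, mirroring dropna(subset=['Path']).
        self.rows = [row for row in rows if row["Path"]]

    def __len__(self):
        return len(self.rows)

    def __getitem__(self, idx):
        row = self.rows[idx]
        # The classes in the diff open and transform the image here;
        # this sketch returns the path instead.
        return row["Path"], int(row["label"]), row["subtype"]


# Made-up CSV content with the three columns the diff reads.
CSV_TEXT = """Path,label,subtype
a.png,0,A
,1,B
b.png,1,B
"""

dataset = CsvIndexedDataset(CSV_TEXT)
print(len(dataset), dataset[1])
```

With the classes in the diff, the first tuple element would be the RGB image (after `transform`, if one is given) rather than a path.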

Resent/scripts/model.py

ADDED

|

@@ -0,0 +1,152 @@

|

| 1 |

+

import torch

|

| 2 |

+

import torch.nn as nn

|

| 3 |

+

import torch.optim as optim

|

| 4 |

+

import time

|

| 5 |

+

import copy

|

| 6 |

+

import sys

|

| 7 |

+

import pandas as pd

|

| 8 |

+

import matplotlib.pyplot as plt

|

| 9 |

+

import numpy as np

|

| 10 |

+

from sklearn.metrics import roc_auc_score

|

| 11 |

+

from torch.autograd import Variable

|

| 12 |

+

from scripts.multiAUC import Metric

|

| 13 |

+

import numpy

|

| 14 |

+

from tqdm import tqdm

|

| 15 |

+

from random import sample

|

| 16 |

+

from scripts.plot import bootstrap_auc,result_csv,plotimage

|

| 17 |

+

import pynvml

|

| 18 |

+

pynvml.nvmlInit()

|

| 19 |

+

from prettytable import PrettyTable

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def train_model(model, dataloaders, criterion, optimizer,num_epochs, modelname, device):

|

| 23 |

+

global VAL_auc,TEST_auc

|

| 24 |

+

since = time.time()

|

| 25 |

+

train_loss_history, valid_loss_history, test_loss_history= [], [], []

|

| 26 |

+

test_maj_history, test_min_history = [], []

|

| 27 |

+

train_auc_history, val_auc_history, test_auc_history = [], [], []

|

| 28 |

+

best_model_wts = copy.deepcopy(model.state_dict())

|

| 29 |

+

scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=20, gamma=0.5)

|

| 30 |

+

for epoch in range(num_epochs):

|

| 31 |

+

start = time.time()

|

| 32 |

+

|

| 33 |

+

print('{} Epoch {}/{} {}'.format('-' * 30, epoch, num_epochs - 1, '-' * 30))

|

| 34 |

+

for phase in ['train','valid', 'test']:

|

| 35 |

+

if phase == 'train' and epoch != 0:

|

| 36 |

+

model.train()

|

| 37 |

+

else:

|

| 38 |

+

model.eval()

|

| 39 |

+

running_loss,running_corrects,prob_all, label_all = [], [], [], []

|

| 40 |

+

with tqdm(range(len(dataloaders[phase])),desc='%s' % phase, ncols=100) as t:

|

| 41 |

+

if epoch == 0 :

|

| 42 |

+

t.set_postfix(L = 0.000, usedMemory = 0)

|

| 43 |

+

|

| 44 |

+

for data in dataloaders[phase]:

|

| 45 |

+

inputs, labels, sub = data

|

| 46 |

+

print(labels)

|

| 47 |

+

inputs = inputs.to(device)

|

| 48 |

+

labels = labels.to(device)

|

| 49 |

+

optimizer.zero_grad(set_to_none=True)

|

| 50 |

+

with torch.set_grad_enabled(phase == 'train'):

|

| 51 |

+

outputs = model(inputs)

|

| 52 |

+

loss = criterion(outputs, labels)

|

| 53 |

+

_, preds = torch.max(outputs, 1)

|

| 54 |

+

if phase == 'train' and epoch != 0:

|

| 55 |

+

loss.backward()

|

| 56 |

+

optimizer.step()

|

| 57 |

+

running_loss.append(loss.item())

|

| 58 |

+

running_corrects.append((preds.cpu().detach() == labels.cpu().detach()).numpy())

|

| 59 |

+

|

| 60 |

+

prob_all.extend(outputs[:, 1].cpu().detach().numpy())

|

| 61 |

+

label_all.extend(labels.cpu().detach().numpy())

|

| 62 |

+

|

| 63 |

+

"""

|

| 64 |

+

B:batch

|

| 65 |

+

L:Loss

|

| 66 |

+

maj: Maj group AUC

|

| 67 |

+

min: Min group AUC

|

| 68 |

+

n: NVIDIA Memory used

|

| 69 |

+

"""

|

| 70 |

+

gpu_device = pynvml.nvmlDeviceGetHandleByIndex(0)

|

| 71 |

+

meminfo = pynvml.nvmlDeviceGetMemoryInfo(gpu_device).total

|

| 72 |

+

usedMemory = pynvml.nvmlDeviceGetMemoryInfo(gpu_device).used

|

| 73 |

+

usedMemory = usedMemory/meminfo

|

| 74 |

+

t.set_postfix(loss = loss.item(), usedMemory = usedMemory)

|

| 75 |

+

t.update()

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

# num = len(label_all)

|

| 80 |

+

# auc = roc_auc_score(label_all, prob_all)

|

| 81 |

+

# epoch_loss = np.mean(running_loss)

|

| 82 |

+

# label_all = np.array(label_all)

|

| 83 |

+

# prob_all = np.array(prob_all)

|

| 84 |

+

# statistics = bootstrap_auc(label_all, prob_all, [0,1,3,4,5])

|

| 85 |

+

# max_auc = np.max(statistics, axis=1).max()

|

| 86 |

+

# min_auc = np.min(statistics, axis=1).max()

|

| 87 |

+

# print('{} --> Num: {} Loss: {:.4f} AUROC: {:.4f} ({:.2f} ~ {:.2f})'.format(

|

| 88 |

+

# phase, num, epoch_loss, auc, min_auc, max_auc ))

|

| 89 |

+

if modelname =="Thyroid_PF":

|

| 90 |

+

try:

|

| 91 |

+

data_auc = roc_auc_score(Label,Output)

|

| 92 |

+

Data_auc_maj = roc_auc_score(Label_maj, Output_maj)

|

| 93 |

+

Data_auc_min = roc_auc_score(Label_min, Output_min)

|

| 94 |

+

except:

|

| 95 |

+

data_auc = roc_auc_score(Label,Output)

|

| 96 |

+

Data_auc_maj = 0

|

| 97 |

+

Data_auc_min = 0

|

| 98 |

+

epoch_loss = running_loss / Batch

|

| 99 |

+

statistics = bootstrap_auc(Label, Output, [0,1,2,3,4])

|

| 100 |

+

max_auc = np.max(statistics, axis=1).max()

|

| 101 |

+

min_auc = np.min(statistics, axis=1).max()

|

| 102 |

+

if G == [] and phase == "train":

|

| 103 |

+

G1.append(0)

|

| 104 |

+

elif phase == "train":

|

| 105 |

+

G1.append(sum(G)/len(G))

|

| 106 |

+

print('{} --> Num: {} Loss: {:.4f} Gamma: {:.4f} AUROC: {:.4f} ({:.2f} ~ {:.2f}) (Maj {:.4f}, Min {:.4f})'.format(

|

| 107 |

+

phase, len(outputs_out), epoch_loss, G1[-1], data_auc, min_auc, max_auc, Data_auc_maj, Data_auc_min))

|

| 108 |

+

|

| 109 |

+

else:

|

| 110 |

+

myMetic = Metric(Output,Label)

|

| 111 |

+

data_auc,auc = myMetic.auROC()

|

| 112 |

+

epoch_loss = running_loss / Batch

|

| 113 |

+

statistics = bootstrap_auc(Label, Output, [0,1,2,3,4])

|

| 114 |

+

max_auc = np.max(statistics, axis=1).max()

|

| 115 |

+

min_auc = np.min(statistics, axis=1).max()

|

| 116 |

+

if G == [] and phase == "train":

|

| 117 |

+

G1.append(0)

|

| 118 |

+

elif phase == "train":

|

| 119 |

+

G1.append(sum(G)/len(G))

|

| 120 |

+

print('{} --> Num: {} Loss: {:.4f} AUROC: {:.4f} ({:.2f} ~ {:.2f}) (Maj {:.4f}, Min {:.4f})'.format(

|

| 121 |

+

phase, len(outputs_out), epoch_loss, data_auc, min_auc, max_auc, data_auc_maj,data_auc_min))

|

| 122 |

+

|

| 123 |

+

if phase == 'train':

|

| 124 |

+

train_loss_history.append(epoch_loss)

|

| 125 |

+

train_auc_history.append(auc)

|

| 126 |

+

|

| 127 |

+

if phase == 'valid':

|

| 128 |

+

valid_loss_history.append(epoch_loss)

|

| 129 |

+

val_auc_history.append(auc)

|

| 130 |

+

|

| 131 |

+

if phase == 'test':

|

| 132 |

+

test_loss_history.append(epoch_loss)

|

| 133 |

+

test_auc_history.append(auc)

|

| 134 |

+

|

| 135 |

+

if phase == 'valid' and train_auc_history[-1] >= 0.9:

|

| 136 |

+

if val_auc_history[-1] >= max(val_auc_history) or test_auc_history[-1] >= max(test_auc_history):

|

| 137 |

+

print("In epoch %d, better AUC(%.3f) and save model. " % (epoch, float(val_auc_history[-1])))

|

| 138 |

+

PATH = '/export/home/daifang/Diffusion/Resnet/modelsaved/%s/e%d_%s_V%.3fT%.3f.pth' % (modelname,epoch,modelname,val_auc_history[-1],test_auc_history[-1])

|

| 139 |

+

torch.save(model.state_dict(),PATH)

|

| 140 |

+

|

| 141 |

+

print("learning rate = %.6f time: %.1f sec" % (optimizer.param_groups[-1]['lr'], time.time() - start))

|

| 142 |

+

if epoch != 0:

|

| 143 |

+

scheduler.step()

|

| 144 |

+

print()

|

| 145 |

+

|

| 146 |

+

plotimage(train_auc_history, val_auc_history, test_auc_history,"AUC", modelname)

|

| 147 |

+

plotimage(train_loss_history, valid_loss_history, test_loss_history,"Loss", modelname)

|

| 148 |

+

result_csv( train_auc_history, val_auc_history, test_auc_history, modelname)

|

| 149 |

+

|

| 150 |

+

time_elapsed = time.time() - since

|

| 151 |

+

print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))

|

| 152 |

+

model.load_state_dict(best_model_wts)

|
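`train_model` above schedules the learning rate with `StepLR(optimizer, step_size=20, gamma=0.5)`. As a rough illustration of what that schedule yields, the sketch below evaluates the closed form of a step schedule in plain Python. The helper name `step_lr` and the base rate of 0.01 are assumptions for illustration, not values taken from the repository.

```python
def step_lr(base_lr, scheduler_steps, step_size=20, gamma=0.5):
    """Learning rate after `scheduler_steps` calls to scheduler.step().

    A step schedule multiplies the rate by `gamma` once every `step_size` steps.
    """
    return base_lr * gamma ** (scheduler_steps // step_size)


# Hypothetical base learning rate; the diff does not show the real one.
for steps in (0, 19, 20, 40):
    print(steps, step_lr(0.01, steps))
```

Because the loop in the diff calls `scheduler.step()` only when `epoch != 0`, the number of scheduler steps taken lags the epoch index by one.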

Resent/scripts/multiAUC.py

ADDED

|

@@ -0,0 +1,138 @@

|

| 1 |

+

import sklearn

|

| 2 |

+

import numpy as np

|

| 3 |

+

from scipy.sparse import csr_matrix

|

| 4 |

+

from scipy.sparse.csgraph import laplacian

|

| 5 |

+

from scipy.sparse.linalg import eigs

|

| 6 |

+

from sklearn.metrics import accuracy_score

|

| 7 |

+

from sklearn.metrics import f1_score

|

| 8 |

+

from sklearn.metrics import hamming_loss

|

| 9 |

+

from sklearn.metrics import roc_auc_score

|

| 10 |

+

import pandas as pd

|

| 11 |

+

from random import sample

|

| 12 |

+

|

| 13 |

+

# np.set_printoptions(threshold='nan')

|

| 14 |

+

class Metric(object):

|

| 15 |

+

def __init__(self,output,label):

|

| 16 |

+

self.output = output #prediction label matrix

|

| 17 |

+

self.label = label #true label matrix

|

| 18 |

+

|

| 19 |

+

def accuracy_subset(self,threash=0.5):

|

| 20 |

+

y_pred =self.output

|

| 21 |

+

y_true = self.label

|

| 22 |

+

y_pred=np.where(y_pred>threash,1,0)

|

| 23 |

+

accuracy=accuracy_score(y_true,y_pred)

|

| 24 |

+

return accuracy

|

| 25 |

+

|

| 26 |

+

def accuracy(self,threash=0.5):

|

| 27 |

+

y_pred =self.output

|

| 28 |

+

y_true = self.label

|

| 29 |

+

y_pred=np.where(y_pred>threash,1,0)

|

| 30 |

+

accuracy=sklearn.metrics.accuracy_score(y_true, y_pred, normalize=True, sample_weight=None)

|

| 31 |

+

return accuracy

|

| 32 |

+

|

| 33 |

+

def accuracy_multiclass(self):

|

| 34 |

+

y_pred =self.output

|

| 35 |

+

y_true = self.label

|

| 36 |

+

accuracy=accuracy_score(np.argmax(y_pred,1),np.argmax(y_true,1))

|

| 37 |

+

return accuracy

|

| 38 |

+

|

| 39 |

+

def micfscore(self,threash=0.5,type='micro'):

|

| 40 |

+

y_pred =self.output

|

| 41 |

+

y_true = self.label

|

| 42 |

+

y_pred=np.where(y_pred>threash,1,0)

|

| 43 |

+

return f1_score(y_pred,y_true,average=type)

|

| 44 |

+

|

| 45 |

+

def macfscore(self,threash=0.5,type='macro'):

|

| 46 |

+

y_pred =self.output

|

| 47 |

+

y_true = self.label

|

| 48 |

+

y_pred=np.where(y_pred>threash,1,0)

|

| 49 |

+

return f1_score(y_pred,y_true,average=type)

|

| 50 |

+

|

| 51 |

+

def hamming_distance(self,threash=0.5):

|

| 52 |

+

y_pred =self.output

|

| 53 |

+

y_true = self.label

|

| 54 |

+

y_pred=np.where(y_pred>threash,1,0)

|

| 55 |

+

return hamming_loss(y_true,y_pred)

|

| 56 |

+

|

| 57 |

+

def fscore_class(self,type='micro'):

|

| 58 |

+

y_pred =self.output

|

| 59 |

+

y_true = self.label

|

| 60 |

+

return f1_score(np.argmax(y_pred,1),np.argmax(y_true,1),average=type)

|

| 61 |

+

|

| 62 |

+

def auROC(self):

|

| 63 |

+

y_pred =self.output

|

| 64 |

+

y_true = self.label

|

| 65 |

+

row,col = y_true.shape

|

| 66 |

+

temp = []

|

| 67 |

+

ROC = 0

|

| 68 |

+

for i in range(col):

|

| 69 |

+

try:

|

| 70 |

+

ROC = roc_auc_score(y_true[:,i], y_pred[:,i], average='micro', sample_weight=None)

|

| 71 |

+

except:

|

| 72 |

+

ROC = 0.5

|

| 73 |

+

temp.append(ROC)

|

| 74 |

+

for i in range(col):

|

| 75 |

+

ROC += float(temp[i])

|

| 76 |

+

return float(np.mean(temp)),temp

|

| 77 |

+

|

| 78 |

+

def MacroAUC(self):

|

| 79 |

+

y_pred =self.output #num_instance*num_label

|

| 80 |

+

y_true = self.label #num_instance*num_label

|

| 81 |

+

num_instance,num_class = y_pred.shape

|

| 82 |

+

count = np.zeros((num_class,1)) # number of pairs in which the positive instance's score > the negative instance's score

|

| 83 |

+

num_P_instance = np.zeros((num_class,1)) #number of positive instance for every label

|

| 84 |

+

num_N_instance = np.zeros((num_class,1))

|

| 85 |

+

auc = np.zeros((num_class,1)) # for each label

|

| 86 |

+

count_valid_label = 0

|

| 87 |

+

for i in range(num_class):

|

| 88 |

+

num_P_instance[i,0] = sum(y_true[:,i] == 1) #label,,test_target

|

| 89 |

+

num_N_instance[i,0] = num_instance - num_P_instance[i,0]

|

| 90 |

+

# exclude the label on which all instances are positive or negative,

|

| 91 |

+

# since num_P_instance[i,0] or num_N_instance[i,0] would then be zero

|

| 92 |

+

if num_P_instance[i,0] == 0 or num_N_instance[i,0] == 0:

|

| 93 |

+

auc[i,0] = 0

|

| 94 |

+

count_valid_label = count_valid_label + 1

|

| 95 |

+

else:

|

| 96 |

+

temp_P_Outputs = np.zeros((int(num_P_instance[i,0]), num_class))

|

| 97 |

+

temp_N_Outputs = np.zeros((int(num_N_instance[i,0]), num_class))

|

| 98 |

+

#

|

| 99 |

+

temp_P_Outputs[:,i] = y_pred[y_true[:,i]==1,i]

|

| 100 |

+

temp_N_Outputs[:,i] = y_pred[y_true[:,i]==0,i]

|

| 101 |

+

for m in range(int(num_P_instance[i,0])):

|

| 102 |

+

for n in range(int(num_N_instance[i,0])):

|

| 103 |

+

if(temp_P_Outputs[m,i] > temp_N_Outputs[n,i] ):

|

| 104 |

+

count[i,0] = count[i,0] + 1

|

| 105 |

+

elif(temp_P_Outputs[m,i] == temp_N_Outputs[n,i]):

|

| 106 |

+

count[i,0] = count[i,0] + 0.5

|

| 107 |

+

|

| 108 |

+

auc[i,0] = count[i,0]/(num_P_instance[i,0]*num_N_instance[i,0])

|

| 109 |

+

macroAUC1 = sum(auc)/(num_class-count_valid_label)

|

| 110 |

+

return float(macroAUC1), auc

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

def bootstrap_auc(label, output, classes, bootstraps=5, fold_size=1000):

|

| 114 |

+

statistics = np.zeros((len(classes), bootstraps))

|

| 115 |

+

for c in range(len(classes)):

|

| 116 |

+

for i in range(bootstraps):

|

| 117 |

+

L=[]

|

| 118 |

+

for k in range(len(label)):

|

| 119 |

+

L.append([output[k],label[k]])

|

| 120 |

+

if fold_size <= len(L):

|

| 121 |

+

X = sample(L, fold_size)

|

| 122 |

+

else:

|

| 123 |

+

fold_size = len(L)

|

| 124 |

+

X = sample(L, fold_size)

|

| 125 |

+

for b in range(len(X)):

|

| 126 |

+

if b ==0:

|

| 127 |

+

Output = np.array([X[b][0]])

|

| 128 |

+

Label = np.array([X[b][1]])

|

| 129 |

+

Output = np.concatenate((Output, np.array([X[b][0]])),axis=0)

|

| 130 |

+

Label = np.concatenate((Label, np.array([X[b][1]])),axis=0)

|

| 131 |

+

|

| 132 |

+

myMetic = Metric(Output,Label)

|

| 133 |