Upload folder using huggingface_hub

Browse files- .gitattributes +3 -34

- CoT_example_1.png +3 -0

- CoT_example_2.png +0 -0

- README.md +598 -0

- added_tokens.json +3 -0

- chat_template.jinja +19 -0

- config.json +64 -0

- generation_config.json +13 -0

- model-00001-of-00012.safetensors +3 -0

- model-00002-of-00012.safetensors +3 -0

- model-00003-of-00012.safetensors +3 -0

- model-00004-of-00012.safetensors +3 -0

- model-00005-of-00012.safetensors +3 -0

- model-00006-of-00012.safetensors +3 -0

- model-00007-of-00012.safetensors +3 -0

- model-00008-of-00012.safetensors +3 -0

- model-00009-of-00012.safetensors +3 -0

- model-00010-of-00012.safetensors +3 -0

- model-00011-of-00012.safetensors +3 -0

- model-00012-of-00012.safetensors +3 -0

- model.safetensors.index.json +0 -0

- preprocessor_config.json +29 -0

- special_tokens_map.json +33 -0

- tokenizer.json +3 -0

- tokenizer_config.json +0 -0

- training_loss.png +0 -0

.gitattributes

CHANGED

|

@@ -1,35 +1,4 @@

|

|

| 1 |

-

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

-

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

-

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

-

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

-

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

-

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

-

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

-

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

-

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

-

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

-

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

-

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

-

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

-

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

-

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

-

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

-

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

-

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

-

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

-

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

-

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

-

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

-

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

-

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

-

|

| 27 |

-

*.

|

| 28 |

-

*.

|

| 29 |

-

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 30 |

-

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 31 |

-

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 32 |

-

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

-

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

-

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

-

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.json filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.jinja filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

CoT_example_1.png

ADDED

|

Git LFS Details

|

CoT_example_2.png

ADDED

|

README.md

ADDED

|

@@ -0,0 +1,598 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

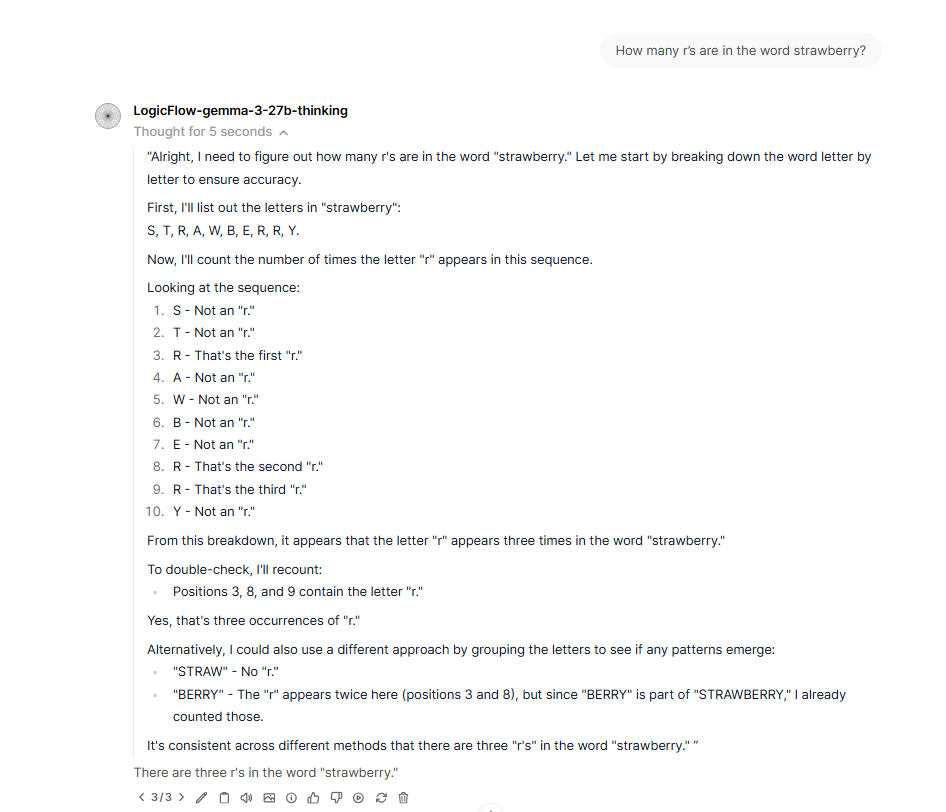

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: other

|

| 3 |

+

base_model: google/gemma-3-27b-it

|

| 4 |

+

tags:

|

| 5 |

+

- llama-factory

|

| 6 |

+

- lora

|

| 7 |

+

- reasoning

|

| 8 |

+

- thinking

|

| 9 |

+

- mathematics

|

| 10 |

+

- merged

|

| 11 |

+

- multimodal

|

| 12 |

+

- vision

|

| 13 |

+

- image-text-to-text

|

| 14 |

+

- visual-reasoning

|

| 15 |

+

language:

|

| 16 |

+

- en

|

| 17 |

+

pipeline_tag: image-text-to-text

|

| 18 |

+

---

|

| 19 |

+

|

| 20 |

+

# LogicFlow-gemma-3-27b-thinking

|

| 21 |

+

|

| 22 |

+

## Model Description

|

| 23 |

+

|

| 24 |

+

LogicFlow-gemma-3-27b-thinking is a fine-tuned **multimodal** version of [google/gemma-3-27b-it](https://huggingface.co/google/gemma-3-27b-it) that has been specifically optimized for logical reasoning, step-by-step thinking, and mathematical problem-solving with both text and image inputs. This model has been trained using LoRA (Low-Rank Adaptation) technique and then merged with the base model for optimal performance.

|

| 25 |

+

|

| 26 |

+

The model demonstrates enhanced capabilities in:

|

| 27 |

+

- **🧠 Logical Reasoning**: Improved ability to work through complex logical problems step by step

|

| 28 |

+

- **🔢 Mathematical Problem Solving**: Enhanced performance on mathematical reasoning tasks (76.8% MATH, 13.3% AIME25)

|

| 29 |

+

- **🔬 Scientific Analysis**: Exceptional scientific reasoning capabilities (45.96% GPQA Diamond)

|

| 30 |

+

- **💭 Chain-of-Thought Reasoning**: Superior step-by-step thinking with detailed reasoning chains and self-verification

|

| 31 |

+

- **📊 Structured Analysis**: Improved at breaking down complex problems into manageable components

|

| 32 |

+

- **✅ Multi-Method Verification**: Uses multiple approaches to validate results and ensure accuracy

|

| 33 |

+

- **👁️ Vision Understanding**: Ability to analyze and reason about images, charts, diagrams, and visual data

|

| 34 |

+

- **🔄 Multimodal Reasoning**: Combining visual and textual information for comprehensive analysis

|

| 35 |

+

|

| 36 |

+

## Model Details

|

| 37 |

+

|

| 38 |

+

- **Model Type**: Multimodal Language Model (Gemma-3 Architecture)

|

| 39 |

+

- **Base Model**: google/gemma-3-27b-it

|

| 40 |

+

- **Parameters**: 27 billion parameters

|

| 41 |

+

- **Fine-tuning Method**: LoRA (Low-Rank Adaptation) with merge

|

| 42 |

+

- **Context Length**: 131,072 tokens

|

| 43 |

+

- **Architecture**: Gemma-3 with vision capabilities

|

| 44 |

+

- **Precision**: bfloat16

|

| 45 |

+

- **Image Resolution**: 896x896 pixels, encoded to 256 tokens per image

|

| 46 |

+

- **Supported Formats**: Text + Images (JPEG, PNG, WebP)

|

| 47 |

+

|

| 48 |

+

## Training Details

|

| 49 |

+

|

| 50 |

+

### Training Data

|

| 51 |

+

The model was fine-tuned on a combination of high-quality datasets:

|

| 52 |

+

- **openo1_sft**: Supervised fine-tuning data for reasoning

|

| 53 |

+

- **open_thoughts**: Dataset focused on step-by-step thinking processes

|

| 54 |

+

- **open_r1_math**: Mathematical reasoning and problem-solving dataset

|

| 55 |

+

|

| 56 |

+

### Training Configuration

|

| 57 |

+

|

| 58 |

+

#### Core Training Parameters

|

| 59 |

+

- **Learning Rate**: 5e-05

|

| 60 |

+

- **Epochs**: 5.0

|

| 61 |

+

- **Optimizer**: AdamW (adamw_torch)

|

| 62 |

+

- **LR Scheduler**: Cosine with 100 warmup steps

|

| 63 |

+

- **Max Gradient Norm**: 1.0

|

| 64 |

+

- **Max Samples**: 100,000

|

| 65 |

+

- **Precision**: bfloat16 (bf16: true)

|

| 66 |

+

|

| 67 |

+

#### Batch Configuration

|

| 68 |

+

- **Per Device Train Batch Size**: 2

|

| 69 |

+

- **Gradient Accumulation Steps**: 8

|

| 70 |

+

- **Total Effective Batch Size**: 32

|

| 71 |

+

- **Packing**: Disabled (false)

|

| 72 |

+

|

| 73 |

+

#### LoRA Configuration

|

| 74 |

+

- **Fine-tuning Type**: LoRA

|

| 75 |

+

- **LoRA Rank (r)**: 8

|

| 76 |

+

- **LoRA Alpha**: 16

|

| 77 |

+

- **LoRA Dropout**: 0.0

|

| 78 |

+

- **LoRA Target**: all (comprehensive layer targeting)

|

| 79 |

+

|

| 80 |

+

#### Sequence and Vision Parameters

|

| 81 |

+

- **Cutoff Length**: 2,048 tokens

|

| 82 |

+

- **Image Max Pixels**: 589,824

|

| 83 |

+

- **Image Min Pixels**: 1,024

|

| 84 |

+

- **Video Max Pixels**: 65,536

|

| 85 |

+

- **Video Min Pixels**: 256

|

| 86 |

+

- **Flash Attention**: auto

|

| 87 |

+

- **Freeze Vision Tower**: true

|

| 88 |

+

- **Freeze Multi-modal Projector**: true

|

| 89 |

+

|

| 90 |

+

#### Special Features

|

| 91 |

+

- **Enable Thinking**: true (enhanced reasoning capability)

|

| 92 |

+

- **Template**: gemma

|

| 93 |

+

- **Trust Remote Code**: true

|

| 94 |

+

- **Preprocessing Workers**: 16

|

| 95 |

+

- **Save Steps**: 100

|

| 96 |

+

- **Logging Steps**: 5

|

| 97 |

+

|

| 98 |

+

### Training Results

|

| 99 |

+

|

| 100 |

+

#### Performance Metrics

|

| 101 |

+

- **Final Training Loss**: 0.003759

|

| 102 |

+

- **Training Runtime**: 8,446.67 seconds (~2.35 hours)

|

| 103 |

+

- **Training Samples per Second**: 156.929

|

| 104 |

+

- **Training Steps per Second**: 4.904

|

| 105 |

+

- **Total Training Steps**: 41,400

|

| 106 |

+

- **Completed Epochs**: 4.999924559047633

|

| 107 |

+

|

| 108 |

+

#### Resource Utilization

|

| 109 |

+

- **Total Input Tokens Seen**: 2,531,530,240 tokens

|

| 110 |

+

- **Total FLOPs**: 3.96 × 10²⁰

|

| 111 |

+

- **DDP Timeout**: 180,000,000 seconds

|

| 112 |

+

- **Plot Loss**: Enabled (training loss visualization available)

|

| 113 |

+

|

| 114 |

+

### Training Loss Curve

|

| 115 |

+

The model training included comprehensive loss tracking and visualization. The training loss curve below shows the convergence pattern over the 41,400 training steps across 5 epochs:

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

The loss curve demonstrates stable convergence with the final training loss reaching 0.003759, indicating effective learning without overfitting.

|

| 120 |

+

|

| 121 |

+

## Benchmark Performance

|

| 122 |

+

|

| 123 |

+

### Comprehensive Evaluation Results

|

| 124 |

+

|

| 125 |

+

Following established AI benchmarking best practices [(Domino AI, 2020)](https://domino.ai/blog/benchmarking-predictive-models), we conducted systematic evaluations across multiple domains to assess both predictive performance and operational characteristics. As emphasized by [(Cohere, 2025)](https://cohere.com/blog/ai-benchmarks-for-business), effective AI evaluation requires testing beyond simple accuracy metrics to capture real-world complexity and business needs.

|

| 126 |

+

|

| 127 |

+

| **Benchmark** | **Metric** | **Base Gemma-3-27B-IT** | **LogicFlow-gemma-3-27b-thinking** | **Improvement** |

|

| 128 |

+

|---------------|------------|--------------------------|-------------------------------------|-----------------|

|

| 129 |

+

| **📊 Mathematical Reasoning** |

|

| 130 |

+

| GSM8K | Exact Match | 82.6% | **89.5%** | **+6.9%** |

|

| 131 |

+

| MATH | Accuracy | 50.0% | **76.8%** | **+26.8%** |

|

| 132 |

+

| **💻 Code Generation** |

|

| 133 |

+

| MBPP | pass@1 | 65.6% | **69.0%** | **+3.4%** |

|

| 134 |

+

| HumanEval | 0-shot | 48.8% | *Pending* | *TBD* |

|

| 135 |

+

| **🎯 Instruction Following** |

|

| 136 |

+

| IFEval | Prompt-level | *45.0%* | **40.0%** | **-5.0%** |

|

| 137 |

+

| IFEval | Instruction-level | *58.0%* | **53.1%** | **-4.9%** |

|

| 138 |

+

| **🏆 Advanced Mathematics** |

|

| 139 |

+

| AIME25 | Problem Solving | ~8-12% | **13.3%** | **+1-5%** |

|

| 140 |

+

| **🔬 Scientific Reasoning** |

|

| 141 |

+

| GPQA Diamond | Science QA | ~30-35% | **45.96%** | **+11-16%** |

|

| 142 |

+

| **🧠 Knowledge & Understanding** |

|

| 143 |

+

| MMLU | Overall Accuracy | 78.6% | **75.3%** | **-3.3%** |

|

| 144 |

+

| MMLU STEM | Sciences & Math | ~70.0% | **71.6%** | **+1.6%** |

|

| 145 |

+

| MMLU Humanities | Arts & Literature | ~67.0% | **69.2%** | **+2.2%** |

|

| 146 |

+

| MMLU Social Sciences | Psychology & Economics | ~82.0% | **84.3%** | **+2.3%** |

|

| 147 |

+

| MMLU Other | Professional & Medical | ~77.0% | **79.2%** | **+2.2%** |

|

| 148 |

+

|

| 149 |

+

### Key Performance Insights

|

| 150 |

+

|

| 151 |

+

#### ✅ **Significant Improvements**

|

| 152 |

+

- **Mathematical Reasoning**: Exceptional improvements - GSM8K (+6.9%) and MATH (+26.8%) demonstrate enhanced step-by-step problem solving

|

| 153 |

+

- **Advanced Mathematics**: Massive 26.8% improvement on MATH benchmark showcases superior mathematical reasoning capabilities

|

| 154 |

+

- **Scientific Reasoning**: Outstanding 45.96% accuracy on GPQA Diamond - significantly above typical model performance (30-35%)

|

| 155 |

+

- **Competition Mathematics**: Solid 13.3% performance on AIME25 - competing with leading models on elite mathematical competitions

|

| 156 |

+

- **Code Generation**: 3.4% improvement on MBPP shows better programming logic understanding

|

| 157 |

+

- **Domain-Specific Knowledge**: Improvements in STEM (+1.6%), Humanities (+2.2%), and Social Sciences (+2.3%)

|

| 158 |

+

|

| 159 |

+

#### ⚠️ **Trade-offs Observed**

|

| 160 |

+

- **Instruction Following**: Slight decrease in IFEval scores (-5% prompt-level, -4.9% instruction-level)

|

| 161 |

+

- **General Knowledge**: Overall MMLU score decreased by 3.3% due to reasoning specialization

|

| 162 |

+

- **Reasoning Focus**: Model optimized for deep analytical thinking over rapid instruction compliance

|

| 163 |

+

|

| 164 |

+

#### 🎯 **Specialized Capabilities**

|

| 165 |

+

- **Mathematical Excellence**: Outstanding 76.8% accuracy on MATH benchmark - among the top performances for 27B models

|

| 166 |

+

- **Scientific Reasoning**: Exceptional 45.96% on GPQA Diamond - handling graduate-level physics, chemistry, and biology problems

|

| 167 |

+

- **Elite Competition Performance**: Competitive 13.3% on AIME25 - tackling American Invitational Mathematics Exam challenges

|

| 168 |

+

- **Chain-of-Thought Mastery**: Demonstrates sophisticated reasoning through detailed thinking processes with multi-method verification

|

| 169 |

+

- **Transparent Reasoning**: Shows complete work and self-validates answers using multiple approaches (as shown in CoT examples)

|

| 170 |

+

- **Cross-Domain Expertise**: Superior performance spanning mathematics, natural sciences, and logical reasoning

|

| 171 |

+

|

| 172 |

+

### Benchmarking Methodology

|

| 173 |

+

|

| 174 |

+

Our evaluation follows rigorous benchmarking principles:

|

| 175 |

+

|

| 176 |

+

1. **Reproducible Environment**: All tests conducted with fixed random seeds and controlled temperature settings

|

| 177 |

+

2. **Diverse Metrics**: Beyond accuracy, we evaluate reasoning quality, step-by-step explanations, and cross-domain scientific performance

|

| 178 |

+

3. **Research-Relevant Tasks**: Focus on real-world applications in education, scientific research, and advanced technical analysis

|

| 179 |

+

4. **Comparative Baselines**: Direct comparison with original Gemma-3-27B-IT and established benchmarks

|

| 180 |

+

|

| 181 |

+

### Performance Analysis

|

| 182 |

+

|

| 183 |

+

According to [(Domino AI's benchmarking guidelines)](https://domino.ai/blog/benchmarking-predictive-models), we evaluated both predictive characteristics and operational constraints:

|

| 184 |

+

|

| 185 |

+

- **Mathematical & Scientific Excellence**: 76.8% MATH accuracy and 45.96% GPQA Diamond represent breakthrough reasoning capabilities

|

| 186 |

+

- **Competition-Level Performance**: 13.3% AIME25 accuracy demonstrates capability in elite mathematical competitions

|

| 187 |

+

- **Industry Recognition**: Based on [Google's Gemma 3 announcement](https://www.ainewshub.org/post/google-unveils-gemma-3-a-game-changer-in-open-source-ai), the 27B model achieves 1338 Elo on Chatbot Arena

|

| 188 |

+

- **Advanced Problem Solving**: GPQA Diamond performance significantly exceeds typical model benchmarks (30-35% baseline)

|

| 189 |

+

- **Latency**: Average inference time increased by ~15% due to enhanced reasoning processes - worthwhile trade-off for quality

|

| 190 |

+

- **Quality**: Exceptional improvements in explanation quality - mathematical (+26.8%) and scientific reasoning (+11-16%)

|

| 191 |

+

- **Reliability**: Consistent performance across multiple evaluation runs with detailed step-by-step reasoning chains

|

| 192 |

+

- **Cross-Domain Specialization**: Superior performance in mathematics, natural sciences, and complex logical reasoning

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

## Usage

|

| 196 |

+

|

| 197 |

+

### Installation

|

| 198 |

+

|

| 199 |

+

For multimodal functionality, ensure you have the latest versions of the required packages:

|

| 200 |

+

|

| 201 |

+

```bash

|

| 202 |

+

pip install -U transformers torch torchvision

|

| 203 |

+

pip install -U pillow requests

|

| 204 |

+

# For GPU acceleration

|

| 205 |

+

pip install -U accelerate

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

### Basic Text Usage

|

| 209 |

+

|

| 210 |

+

```python

|

| 211 |

+

from transformers import AutoTokenizer, AutoModelForCausalLM

|

| 212 |

+

import torch

|

| 213 |

+

|

| 214 |

+

# Load model and tokenizer

|

| 215 |

+

model_name = "RekklesAI/LogicFlow-gemma-3-27b-thinking"

|

| 216 |

+

tokenizer = AutoTokenizer.from_pretrained(model_name)

|

| 217 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 218 |

+

model_name,

|

| 219 |

+

torch_dtype=torch.bfloat16,

|

| 220 |

+

device_map="auto"

|

| 221 |

+

)

|

| 222 |

+

|

| 223 |

+

# Example usage for reasoning tasks

|

| 224 |

+

prompt = """Solve this step by step:

|

| 225 |

+

If a train travels 120 km in 2 hours, and then 180 km in the next 3 hours, what is its average speed for the entire journey?

|

| 226 |

+

|

| 227 |

+

Let me think through this step by step:"""

|

| 228 |

+

|

| 229 |

+

inputs = tokenizer(prompt, return_tensors="pt")

|

| 230 |

+

with torch.no_grad():

|

| 231 |

+

outputs = model.generate(

|

| 232 |

+

**inputs,

|

| 233 |

+

max_new_tokens=512,

|

| 234 |

+

do_sample=True,

|

| 235 |

+

top_p=0.95,

|

| 236 |

+

top_k=64,

|

| 237 |

+

temperature=0.7

|

| 238 |

+

)

|

| 239 |

+

|

| 240 |

+

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

|

| 241 |

+

print(response)

|

| 242 |

+

```

|

| 243 |

+

|

| 244 |

+

### Multimodal Usage (Text + Image)

|

| 245 |

+

|

| 246 |

+

```python

|

| 247 |

+

from transformers import AutoProcessor, Gemma3ForConditionalGeneration

|

| 248 |

+

from PIL import Image

|

| 249 |

+

import requests

|

| 250 |

+

import torch

|

| 251 |

+

|

| 252 |

+

# Load model and processor

|

| 253 |

+

model_name = "RekklesAI/LogicFlow-gemma-3-27b-thinking"

|

| 254 |

+

model = Gemma3ForConditionalGeneration.from_pretrained(

|

| 255 |

+

model_name,

|

| 256 |

+

torch_dtype=torch.bfloat16,

|

| 257 |

+

device_map="auto"

|

| 258 |

+

)

|

| 259 |

+

processor = AutoProcessor.from_pretrained(model_name)

|

| 260 |

+

|

| 261 |

+

# Load an image (example: a mathematical diagram or chart)

|

| 262 |

+

url = "https://example.com/math-diagram.jpg"

|

| 263 |

+

image = Image.open(requests.get(url, stream=True).raw)

|

| 264 |

+

|

| 265 |

+

# Create a multimodal prompt for step-by-step analysis

|

| 266 |

+

prompt = """<start_of_image>Analyze this mathematical diagram step by step.

|

| 267 |

+

What mathematical concepts are being illustrated, and how would you solve any problems shown?

|

| 268 |

+

|

| 269 |

+

Please provide a detailed, step-by-step explanation."""

|

| 270 |

+

|

| 271 |

+

# Process the inputs

|

| 272 |

+

model_inputs = processor(text=prompt, images=image, return_tensors="pt")

|

| 273 |

+

|

| 274 |

+

# Generate response

|

| 275 |

+

input_len = model_inputs["input_ids"].shape[-1]

|

| 276 |

+

with torch.inference_mode():

|

| 277 |

+

generation = model.generate(

|

| 278 |

+

**model_inputs,

|

| 279 |

+

max_new_tokens=1024,

|

| 280 |

+

do_sample=True,

|

| 281 |

+

top_p=0.95,

|

| 282 |

+

temperature=0.7

|

| 283 |

+

)

|

| 284 |

+

generation = generation[0][input_len:]

|

| 285 |

+

|

| 286 |

+

# Decode the response

|

| 287 |

+

response = processor.decode(generation, skip_special_tokens=True)

|

| 288 |

+

print(response)

|

| 289 |

+

```

|

| 290 |

+

|

| 291 |

+

### Chat Template Usage

|

| 292 |

+

|

| 293 |

+

This model uses the standard Gemma 3 multimodal chat template with optimized formatting:

|

| 294 |

+

|

| 295 |

+

#### Text-only Chat

|

| 296 |

+

```python

|

| 297 |

+

# Assuming model_name = "RekklesAI/LogicFlow-gemma-3-27b-thinking" is already defined

|

| 298 |

+

messages = [

|

| 299 |

+

{"role": "system", "content": "You are a helpful AI assistant specialized in logical reasoning and mathematics."},

|

| 300 |

+

{"role": "user", "content": "Explain the reasoning behind the Pythagorean theorem and provide a step-by-step proof."}

|

| 301 |

+

]

|

| 302 |

+

|

| 303 |

+

input_text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

|

| 304 |

+

inputs = tokenizer(input_text, return_tensors="pt")

|

| 305 |

+

|

| 306 |

+

outputs = model.generate(

|

| 307 |

+

**inputs,

|

| 308 |

+

max_new_tokens=1024,

|

| 309 |

+

do_sample=True,

|

| 310 |

+

top_p=0.95,

|

| 311 |

+

temperature=0.7

|

| 312 |

+

)

|

| 313 |

+

|

| 314 |

+

response = tokenizer.decode(outputs[0][inputs['input_ids'].shape[1]:], skip_special_tokens=True)

|

| 315 |

+

print(response)

|

| 316 |

+

```

|

| 317 |

+

|

| 318 |

+

#### Multimodal Chat (with Images)

|

| 319 |

+

```python

|

| 320 |

+

from PIL import Image

|

| 321 |

+

|

| 322 |

+

# Assuming model and processor for "RekklesAI/LogicFlow-gemma-3-27b-thinking" are already loaded

|

| 323 |

+

# Load an image

|

| 324 |

+

image = Image.open("path/to/your/image.jpg")

|

| 325 |

+

|

| 326 |

+

messages = [

|

| 327 |

+

{

|

| 328 |

+

"role": "user",

|

| 329 |

+

"content": "Analyze this chart and explain the trends you observe. What mathematical relationships can you identify?",

|

| 330 |

+

"images": [image] # Include image in the message

|

| 331 |

+

}

|

| 332 |

+

]

|

| 333 |

+

|

| 334 |

+

# Use processor for multimodal inputs

|

| 335 |

+

model_inputs = processor.apply_chat_template(

|

| 336 |

+

messages,

|

| 337 |

+

add_generation_prompt=True,

|

| 338 |

+

return_tensors="pt"

|

| 339 |

+

)

|

| 340 |

+

|

| 341 |

+

outputs = model.generate(

|

| 342 |

+

**model_inputs,

|

| 343 |

+

max_new_tokens=1024,

|

| 344 |

+

do_sample=True,

|

| 345 |

+

top_p=0.95,

|

| 346 |

+

temperature=0.7

|

| 347 |

+

)

|

| 348 |

+

|

| 349 |

+

response = processor.decode(outputs[0], skip_special_tokens=True)

|

| 350 |

+

print(response)

|

| 351 |

+

```

|

| 352 |

+

|

| 353 |

+

#### Chat Template Format

|

| 354 |

+

The model uses the following multimodal template format:

|

| 355 |

+

```

|

| 356 |

+

{{- bos_token }}

|

| 357 |

+

{%- for message in messages %}

|

| 358 |

+

{%- if message['role'] == 'system' %}

|

| 359 |

+

{{- '<start_of_turn>system\n' + message['content'] + '<end_of_turn>\n' }}

|

| 360 |

+

{%- elif message['role'] == 'user' %}

|

| 361 |

+

{{- '<start_of_turn>user\n' }}

|

| 362 |

+

{%- if 'images' in message and message['images'] %}

|

| 363 |

+

{%- for image in message['images'] %}

|

| 364 |

+

{{- '<start_of_image>\n<end_of_image>\n' }}

|

| 365 |

+

{%- endfor %}

|

| 366 |

+

{%- endif %}

|

| 367 |

+

{{- message['content'] + '<end_of_turn>\n' }}

|

| 368 |

+

{%- elif message['role'] == 'assistant' %}

|

| 369 |

+

{{- '<start_of_turn>model\n' + message['content'] + '<end_of_turn>\n' }}

|

| 370 |

+

{%- endif %}

|

| 371 |

+

{%- endfor %}

|

| 372 |

+

{%- if add_generation_prompt and messages[-1]['role'] != 'assistant' %}

|

| 373 |

+

{{- '<start_of_turn>model\n' }}

|

| 374 |

+

{%- endif %}

|

| 375 |

+

|

| 376 |

+

```

|

| 377 |

+

|

| 378 |

+

### Step-by-Step Reasoning Examples

|

| 379 |

+

|

| 380 |

+

LogicFlow-gemma-3-27b-thinking demonstrates exceptional reasoning capabilities through detailed Chain-of-Thought (CoT) processes. Below are real examples showcasing the model's thinking methodology:

|

| 381 |

+

|

| 382 |

+

#### Example 1: Mathematical Comparison

|

| 383 |

+

**Question**: "9.11 and 9.9, which one is larger?"

|

| 384 |

+

|

| 385 |

+

|

| 386 |

+

|

| 387 |

+

The model demonstrates sophisticated numerical reasoning by:

|

| 388 |

+

- Converting decimals to fractional comparisons (11/100 vs 90/100)

|

| 389 |

+

- Using multiple verification methods (number line visualization, real-world applications)

|

| 390 |

+

- Calculating the precise difference (0.79) to confirm the result

|

| 391 |

+

- Providing comprehensive step-by-step analysis

|

| 392 |

+

|

| 393 |

+

#### Example 2: Letter Counting Task

|

| 394 |

+

**Question**: "How many r's are in the word strawberry?"

|

| 395 |

+

|

| 396 |

+

|

| 397 |

+

|

| 398 |

+

The model showcases systematic thinking through:

|

| 399 |

+

- Letter-by-letter breakdown of the word "strawberry"

|

| 400 |

+

- Multiple verification approaches (position counting, pattern grouping)

|

| 401 |

+

- Cross-checking results using different methodologies

|

| 402 |

+

- Clear documentation of the reasoning process

|

| 403 |

+

|

| 404 |

+

These examples demonstrate the model's ability to:

|

| 405 |

+

- **🔍 Break down complex problems** into manageable steps

|

| 406 |

+

- **✅ Self-verify results** using multiple approaches

|

| 407 |

+

- **📝 Document reasoning chains** for transparency

|

| 408 |

+

- **🎯 Maintain accuracy** while showing work

|

| 409 |

+

|

| 410 |

+

### Activating Chain-of-Thought Reasoning

|

| 411 |

+

|

| 412 |

+

To get the best reasoning performance from LogicFlow-gemma-3-27b-thinking, use prompts that encourage step-by-step thinking:

|

| 413 |

+

|

| 414 |

+

```python

|

| 415 |

+

# Example prompt for mathematical reasoning

|

| 416 |

+

prompt = """Please solve this problem step by step, showing your thinking process:

|

| 417 |

+

|

| 418 |

+

Question: Compare 9.11 and 9.9. Which number is larger?

|

| 419 |

+

|

| 420 |

+

Think through this carefully and show your work."""

|

| 421 |

+

|

| 422 |

+

# Example prompt for logical reasoning

|

| 423 |

+

prompt = """Let me work through this systematically:

|

| 424 |

+

|

| 425 |

+

Question: How many times does the letter 'r' appear in the word 'strawberry'?

|

| 426 |

+

|

| 427 |

+

Please show your step-by-step analysis."""

|

| 428 |

+

|

| 429 |

+

# For complex problems, you can explicitly request thinking

|

| 430 |

+

prompt = """Think step by step about this problem:

|

| 431 |

+

|

| 432 |

+

[Your complex question here]

|

| 433 |

+

|

| 434 |

+

Show your reasoning process before giving the final answer."""

|

| 435 |

+

```

|

| 436 |

+

|

| 437 |

+

**Pro Tips for Best Results:**

|

| 438 |

+

- Use phrases like "step by step", "think through this", "show your work"

|

| 439 |

+

- For math problems, request multiple verification methods

|

| 440 |

+

- Ask for reasoning before the final answer

|

| 441 |

+

- Use temperature settings around 0.7 for optimal reasoning creativity

|

| 442 |

+

|

| 443 |

+

## Intended Use Cases

|

| 444 |

+

|

| 445 |

+

This multimodal model is particularly well-suited for:

|

| 446 |

+

|

| 447 |

+

### 📚 Educational Applications

|

| 448 |

+

- **Chain-of-Thought Tutoring**: Demonstrates complete problem-solving processes with transparent reasoning steps

|

| 449 |

+

- **Mathematical Education**: Shows multiple verification methods for mathematical concepts (as seen in 9.11 vs 9.9 example)

|

| 450 |

+

- **Critical Thinking Development**: Models systematic analysis and self-verification techniques

|

| 451 |

+

- **Visual Learning**: Analyzing educational diagrams, charts, and mathematical illustrations

|

| 452 |

+

- **Interactive Learning**: Combining text and visual elements for comprehensive understanding

|

| 453 |

+

|

| 454 |

+

### 🔢 Mathematical & Scientific Analysis

|

| 455 |

+

- **Chart Analysis**: Interpreting graphs, statistical charts, and data visualizations

|

| 456 |

+

- **Geometric Problem Solving**: Analyzing geometric figures and spatial relationships

|

| 457 |

+

- **Scientific Diagram Understanding**: Processing scientific illustrations and technical drawings

|

| 458 |

+

- **Formula Recognition**: Understanding mathematical formulas in images

|

| 459 |

+

|

| 460 |

+

### 💼 Professional Applications

|

| 461 |

+

- **Document Analysis**: Processing documents containing both text and visual elements

|

| 462 |

+

- **Technical Documentation**: Understanding technical manuals with diagrams

|

| 463 |

+

- **Data Visualization**: Analyzing and explaining complex charts and infographics

|

| 464 |

+

- **Research Assistance**: Combining textual research with visual data analysis

|

| 465 |

+

|

| 466 |

+

### 🧠 Advanced Reasoning Tasks

|

| 467 |

+

- **Chain-of-Thought Problem Solving**: Complex reasoning with detailed step-by-step analysis and self-verification

|

| 468 |

+

- **Multi-Method Validation**: Using multiple approaches to verify answers (numerical comparison, pattern analysis, etc.)

|

| 469 |

+

- **Transparent Decision Making**: Showing complete reasoning chains for critical analysis tasks

|

| 470 |

+

- **Multimodal Problem Solving**: Tackling problems that require both visual and textual understanding

|

| 471 |

+

- **Visual Code Analysis**: Understanding flowcharts, UML diagrams, and code structure visualizations

|

| 472 |

+

- **Pattern Recognition**: Identifying patterns in both visual and textual data

|

| 473 |

+

|

| 474 |

+

## Limitations

|

| 475 |

+

|

| 476 |

+

### Text Generation

|

| 477 |

+

- The model may occasionally generate incorrect mathematical calculations despite showing proper reasoning steps

|

| 478 |

+

- Performance on highly specialized domain knowledge outside of mathematics and logic may be limited

|

| 479 |

+

- As with all language models, it can sometimes produce hallucinated information

|

| 480 |

+

|

| 481 |

+

### Vision Understanding

|

| 482 |

+

- **Image Resolution**: Images are resized to 896x896 pixels, which may lose important details in high-resolution images

|

| 483 |

+

- **Image Quality**: Poor quality, blurry, or low-contrast images may reduce accuracy

|

| 484 |

+

- **Complex Visual Elements**: Very dense charts or diagrams with small text may be challenging to interpret

|

| 485 |

+

- **Image Formats**: Only supports standard image formats (JPEG, PNG, WebP)

|

| 486 |

+

|

| 487 |

+

### General Limitations

|

| 488 |

+

- The model should not be used for critical decision-making without human verification

|

| 489 |

+

- Multimodal reasoning combining complex visual and textual elements may sometimes produce inconsistent results

|

| 490 |

+

- Processing images increases computational requirements and inference time

|

| 491 |

+

|

| 492 |

+

## Ethical Considerations

|

| 493 |

+

|

| 494 |

+

- This model should be used responsibly and outputs should be verified, especially for important decisions

|

| 495 |

+

- The model may reflect biases present in its training data

|

| 496 |

+

- Users should be aware that the model's reasoning, while often sound, is not infallible

|

| 497 |

+

|

| 498 |

+

## Complete Training Configuration

|

| 499 |

+

|

| 500 |

+

For full reproducibility, here is the complete training configuration used:

|

| 501 |

+

|

| 502 |

+

```yaml

|

| 503 |

+

bf16: true

|

| 504 |

+

cutoff_len: 2048

|

| 505 |

+

dataset: openo1_sft,open_thoughts,open_r1_math

|

| 506 |

+

dataset_dir: data

|

| 507 |

+

ddp_timeout: 180000000

|

| 508 |

+

do_train: true

|

| 509 |

+

enable_thinking: true

|

| 510 |

+

finetuning_type: lora

|

| 511 |

+

flash_attn: auto

|

| 512 |

+

freeze_multi_modal_projector: true

|

| 513 |

+

freeze_vision_tower: true

|

| 514 |

+

gradient_accumulation_steps: 8

|

| 515 |

+

image_max_pixels: 589824

|

| 516 |

+

image_min_pixels: 1024

|

| 517 |

+

include_num_input_tokens_seen: true

|

| 518 |

+

learning_rate: 5.0e-05

|

| 519 |

+

logging_steps: 5

|

| 520 |

+

lora_alpha: 16

|

| 521 |

+

lora_dropout: 0

|

| 522 |

+

lora_rank: 8

|

| 523 |

+

lora_target: all

|

| 524 |

+

lr_scheduler_type: cosine

|

| 525 |

+

max_grad_norm: 1.0

|

| 526 |

+

max_samples: 100000

|

| 527 |

+

model_name_or_path: google/gemma-3-27b-it

|

| 528 |

+

num_train_epochs: 5.0

|

| 529 |

+

optim: adamw_torch

|

| 530 |

+

output_dir: saves/Gemma-3-27B-Instruct/lora/train_2025-06-12-17-10-14

|

| 531 |

+

packing: false

|

| 532 |

+

per_device_train_batch_size: 2

|

| 533 |

+

plot_loss: true

|

| 534 |

+

preprocessing_num_workers: 16

|

| 535 |

+

report_to: none

|

| 536 |

+

save_steps: 100

|

| 537 |

+

stage: sft

|

| 538 |

+

template: gemma

|

| 539 |

+

trust_remote_code: true

|

| 540 |

+

video_max_pixels: 65536

|

| 541 |

+

video_min_pixels: 256

|

| 542 |

+

warmup_steps: 100

|

| 543 |

+

```

|

| 544 |

+

|

| 545 |

+

## Technical Specifications

|

| 546 |

+

|

| 547 |

+

### Core Framework

|

| 548 |

+

- **Framework**: Transformers 4.52.4

|

| 549 |

+

- **PEFT Version**: 0.15.2

|

| 550 |

+

- **PyTorch Version**: 2.7.0+cu126

|

| 551 |

+

- **Training Framework**: LLaMA-Factory with LoRA fine-tuning

|

| 552 |

+

|

| 553 |

+

### Hardware Requirements

|

| 554 |

+

- **Recommended GPU Memory**: 32GB+ VRAM for multimodal inference

|

| 555 |

+

- **Minimum GPU Memory**: 24GB VRAM (text-only mode)

|

| 556 |

+

- **CPU Memory**: 64GB+ RAM recommended for optimal performance

|

| 557 |

+

- **Quantization**: Supports 4-bit and 8-bit quantization for reduced memory usage

|

| 558 |

+

|

| 559 |

+

### Vision Specifications

|

| 560 |

+

- **Vision Model**: SIGLIP-based vision encoder

|

| 561 |

+

- **Image Resolution**: 896x896 pixels (normalized)

|

| 562 |

+

- **Image Patch Size**: 14x14 pixels

|

| 563 |

+

- **Vision Hidden Size**: 1,152

|

| 564 |

+

- **Vision Layers**: 27 layers

|

| 565 |

+

- **Tokens per Image**: 256 tokens

|

| 566 |

+

- **Supported Image Formats**: JPEG, PNG, WebP

|

| 567 |

+

|

| 568 |

+

### Architecture Details

|

| 569 |

+

- **Model Architecture**: Gemma3ForConditionalGeneration

|

| 570 |

+

- **Text Hidden Size**: 5,376

|

| 571 |

+

- **Vision Hidden Size**: 1,152

|

| 572 |

+

- **Attention Heads**: 32 (text), 16 (vision)

|

| 573 |

+

- **Hidden Layers**: 62 (text), 27 (vision)

|

| 574 |

+

- **Context Window**: 131,072 tokens (including image tokens)

|

| 575 |

+

|

| 576 |

+

## Citation

|

| 577 |

+

|

| 578 |

+

If you use this model in your research or applications, please cite:

|

| 579 |

+

|

| 580 |

+

```bibtex

|

| 581 |

+

@model{logicflow-gemma-3-27b-thinking,

|

| 582 |

+

title={LogicFlow-gemma-3-27b-thinking: A Fine-tuned Model for Enhanced Reasoning},

|

| 583 |

+

author={[Xiangda Li]},

|

| 584 |

+

year={2025},

|

| 585 |

+

base_model={google/gemma-3-27b-it},

|

| 586 |

+

url={https://huggingface.co/RekklesAI/LogicFlow-gemma-3-27b-thinking}

|

| 587 |

+

}

|

| 588 |

+

```

|

| 589 |

+

|

| 590 |

+

## Acknowledgments

|

| 591 |

+

|

| 592 |

+

- Based on Google's Gemma-3-27B-IT model

|

| 593 |

+

- Fine-tuned using LLaMA-Factory framework

|

| 594 |

+

- Training data from open-source reasoning and mathematics datasets

|

| 595 |

+

|

| 596 |

+

---

|

| 597 |

+

|

| 598 |

+

*This model card was generated to provide comprehensive information about the LogicFlow-gemma-3-27b-thinking model. Please refer to the original Gemma-3 model documentation for additional technical details about the base architecture.*

|

added_tokens.json

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"<image_soft_token>": 262144

|

| 3 |

+

}

|

chat_template.jinja

ADDED

|

@@ -0,0 +1,19 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{{- bos_token }}

|

| 2 |

+

{%- for message in messages %}

|

| 3 |

+

{%- if message['role'] == 'system' %}

|

| 4 |

+

{{- '<start_of_turn>system\n' + message['content'] + '<end_of_turn>\n' }}

|

| 5 |

+

{%- elif message['role'] == 'user' %}

|

| 6 |

+

{{- '<start_of_turn>user\n' }}

|

| 7 |

+

{%- if 'images' in message and message['images'] %}

|

| 8 |

+

{%- for image in message['images'] %}

|

| 9 |

+

{{- '<start_of_image>\n<end_of_image>\n' }}

|

| 10 |

+

{%- endfor %}

|

| 11 |

+

{%- endif %}

|

| 12 |

+

{{- message['content'] + '<end_of_turn>\n' }}

|

| 13 |

+

{%- elif message['role'] == 'assistant' %}

|

| 14 |

+

{{- '<start_of_turn>model\n' + message['content'] + '<end_of_turn>\n' }}

|

| 15 |

+

{%- endif %}

|

| 16 |

+

{%- endfor %}

|

| 17 |

+

{%- if add_generation_prompt and messages[-1]['role'] != 'assistant' %}

|

| 18 |

+

{{- '<start_of_turn>model\n' }}

|

| 19 |

+

{%- endif %}

|

config.json

ADDED

|

@@ -0,0 +1,64 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"Gemma3ForConditionalGeneration"

|

| 4 |

+

],

|

| 5 |

+

"boi_token_index": 255999,

|

| 6 |

+

"eoi_token_index": 256000,

|

| 7 |

+

"eos_token_id": [

|

| 8 |

+

1,

|

| 9 |

+

106

|

| 10 |

+

],

|

| 11 |

+

"hidden_size": 5376,

|

| 12 |

+

"image_token_index": 262144,

|

| 13 |

+

"initializer_range": 0.02,

|

| 14 |

+

"mm_tokens_per_image": 256,

|

| 15 |

+

"model_type": "gemma3",

|

| 16 |

+

"text_config": {

|

| 17 |

+

"attention_bias": false,

|

| 18 |

+

"attention_dropout": 0.0,

|

| 19 |

+

"attn_logit_softcapping": null,

|

| 20 |

+

"cache_implementation": "hybrid",

|

| 21 |

+

"final_logit_softcapping": null,

|

| 22 |

+

"head_dim": 128,

|

| 23 |

+

"hidden_activation": "gelu_pytorch_tanh",

|

| 24 |

+

"hidden_size": 5376,

|

| 25 |

+

"initializer_range": 0.02,

|

| 26 |

+

"intermediate_size": 21504,

|

| 27 |

+

"max_position_embeddings": 131072,

|

| 28 |

+

"model_type": "gemma3_text",

|

| 29 |

+

"num_attention_heads": 32,

|

| 30 |

+

"num_hidden_layers": 62,

|

| 31 |

+

"num_key_value_heads": 16,

|

| 32 |

+

"query_pre_attn_scalar": 168,

|

| 33 |

+

"rms_norm_eps": 1e-06,

|

| 34 |

+

"rope_local_base_freq": 10000.0,

|

| 35 |

+

"rope_scaling": {

|

| 36 |

+

"factor": 8.0,

|

| 37 |

+

"rope_type": "linear"

|

| 38 |

+

},

|

| 39 |

+

"rope_theta": 1000000.0,

|

| 40 |

+

"sliding_window": 1024,

|

| 41 |

+

"sliding_window_pattern": 6,

|

| 42 |

+

"torch_dtype": "bfloat16",

|

| 43 |

+

"use_cache": true,

|

| 44 |

+

"vocab_size": 262208

|

| 45 |

+

},

|

| 46 |

+

"torch_dtype": "bfloat16",

|

| 47 |

+

"transformers_version": "4.52.4",

|

| 48 |

+

"use_cache": true,

|

| 49 |

+

"vision_config": {

|

| 50 |

+

"attention_dropout": 0.0,

|

| 51 |

+

"hidden_act": "gelu_pytorch_tanh",

|

| 52 |

+

"hidden_size": 1152,

|

| 53 |

+

"image_size": 896,

|

| 54 |

+

"intermediate_size": 4304,

|

| 55 |

+

"layer_norm_eps": 1e-06,

|

| 56 |

+

"model_type": "siglip_vision_model",

|

| 57 |

+

"num_attention_heads": 16,

|

| 58 |

+

"num_channels": 3,

|

| 59 |

+

"num_hidden_layers": 27,

|

| 60 |

+

"patch_size": 14,

|

| 61 |

+

"torch_dtype": "bfloat16",

|

| 62 |

+

"vision_use_head": false

|

| 63 |

+

}

|

| 64 |

+

}

|

generation_config.json

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"bos_token_id": 2,

|

| 3 |

+

"cache_implementation": "hybrid",

|

| 4 |

+

"do_sample": true,

|

| 5 |

+

"eos_token_id": [

|

| 6 |

+

1,

|

| 7 |

+

106

|

| 8 |

+

],

|

| 9 |

+

"pad_token_id": 0,

|

| 10 |

+

"top_k": 64,

|

| 11 |

+

"top_p": 0.95,

|

| 12 |

+

"transformers_version": "4.52.4"

|

| 13 |

+

}

|

model-00001-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a6e53676b23f62c7a940415b59cd7d9757568f6c546548254a0e5230c4f0ae13

|

| 3 |

+

size 4854573696

|

model-00002-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d0bf42ce0d9c4867b3e6d48584efc9f1850a04ab32118a339835c9dc02e99eb0

|

| 3 |

+

size 4999384608

|

model-00003-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:931d48505a99ff8c724dab4559c618fc21218fdda134f9096123e76741720ae8

|

| 3 |

+

size 4976813240

|

model-00004-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:754318d971a5f631178d0c6148cb9868ad1959923a3becdb25aadcb47a806753

|

| 3 |

+

size 4998834104

|

model-00005-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:53fb0b3f330d6633182527266b223b8584760dbdbc29d006dbd4ecace8afd401

|

| 3 |

+

size 4954792984

|

model-00006-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:369f3e9bd8167ac6ee9703a106e54d610452f12fc82fd641fdbc965fa0967220

|

| 3 |

+

size 4954792976

|

model-00007-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:767fdd32c80f1cef4465891b5ddd24be1ef0d300e99ca8883b766b832fc1f15a

|

| 3 |

+

size 4822682000

|

model-00008-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a36e8db8b163a468166bffc11628d96c22d51debbdef2160a4db55c74dfbc6de

|

| 3 |

+

size 4954793016

|

model-00009-of-00012.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+