Update README.md

README.md

CHANGED

|

@@ -22,6 +22,6 @@ Notes:

|

|

| 22 |

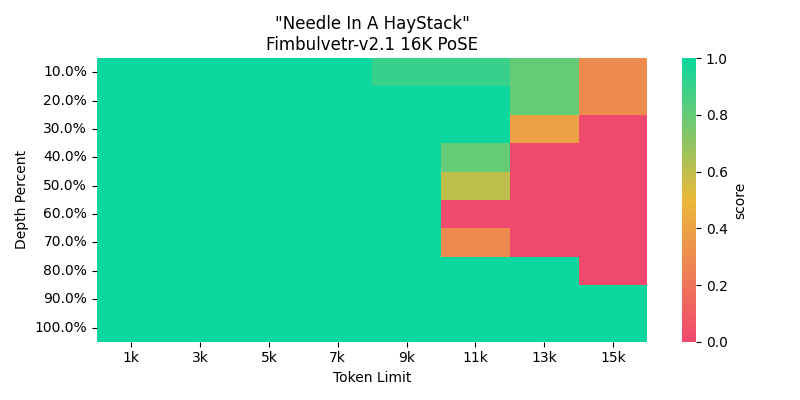

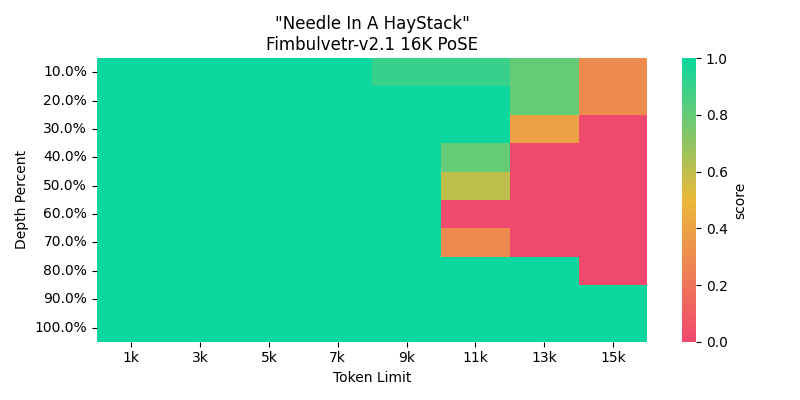

<br> \- I get consistent and reliable answers at ~11K context fine.

|

| 23 |

<br> \- Still coherent at up to 16K though! Just works not that well.

|

| 24 |

|

| 25 |

-

I recommend sticking up to 12K context, but loading the model at 16K. It has a really accurate context up to 10K from multiple different extended long context tests. 16K works fine for roleplays, but not for more detailed tasks.

|

| 26 |

|

| 27 |

|

|

|

|

| 22 |

<br> \- I get consistent and reliable answers at ~11K context fine.

|

| 23 |

<br> \- Still coherent at up to 16K though! Just works not that well.

|

| 24 |

|

| 25 |

+

I recommend sticking up to 12K context, but loading the model at 16K for inference. It has a really accurate context up to 10K from multiple different extended long context tests. 16K works fine for roleplays, but not for more detailed tasks.

|

| 26 |

|

| 27 |

|
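The recommendation in the changed line (load at 16K, but keep actual usage within 12K) could be enforced on the client side roughly as follows. This is only a sketch: the constant names and the `trim_to_budget` helper are hypothetical, "K" is assumed to mean 1024 tokens, and the README does not say how prompts should be trimmed, so dropping the oldest tokens is an assumption.

```python
# Assumption: "16K" and "12K" mean 16 * 1024 and 12 * 1024 tokens.
LOADED_CONTEXT = 16 * 1024       # window the model is loaded with
RECOMMENDED_CONTEXT = 12 * 1024  # budget the README suggests staying within


def trim_to_budget(token_ids, max_new_tokens, budget=RECOMMENDED_CONTEXT):
    """Hypothetical helper: keep the most recent tokens so that
    prompt length + max_new_tokens does not exceed the budget."""
    if max_new_tokens >= budget:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    keep = budget - max_new_tokens
    # Drop the oldest tokens first; short prompts pass through unchanged.
    return list(token_ids[-keep:])


if __name__ == "__main__":
    history = list(range(20_000))           # stand-in for a long token history
    prompt = trim_to_budget(history, 512)   # reserve 512 tokens for the reply
    print(len(prompt), len(prompt) + 512 <= RECOMMENDED_CONTEXT)
```

Trimming on the caller's side keeps the 16K window available as headroom while the effective context stays inside the range the README reports as reliable.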