Update README.md

README.md

@@ -24,4 +24,7 @@ Notes:

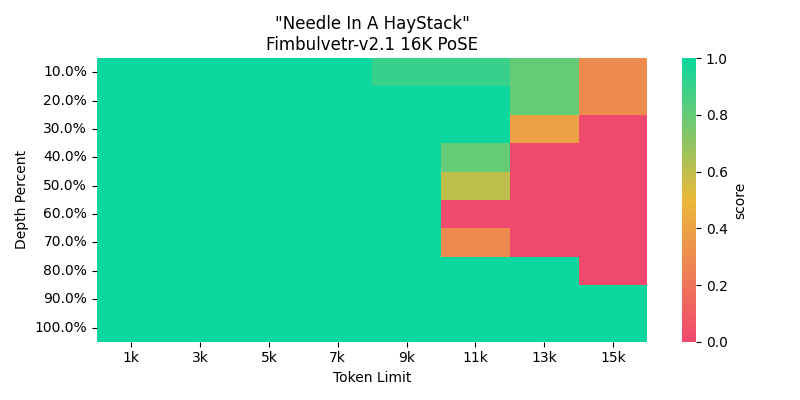

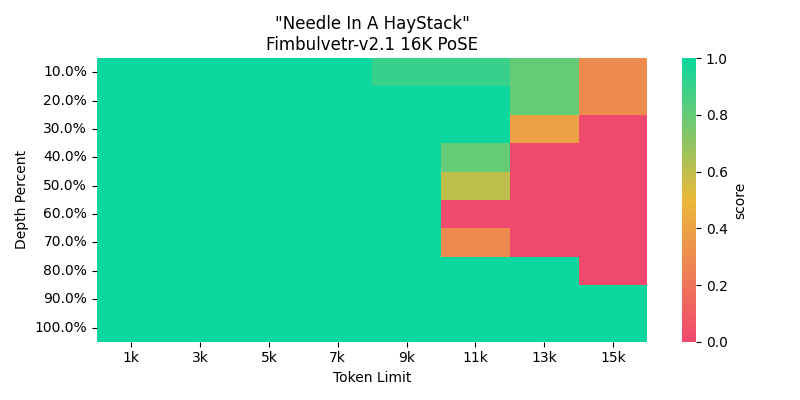

I recommend keeping prompts to at most 12K tokens of context, but loading the model at 16K for inference. Multiple extended long-context tests show that its recall is very accurate up to 10K. 16K works fine for roleplay, but not for more detailed tasks.
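The following is a minimal sketch of how a chat frontend could follow this recommendation. It assumes the backend is started with a 16K context window and the frontend trims history to a 12K prompt budget. "16K" and "12K" are read here as 16 * 1024 and 12 * 1024 tokens, which is an assumption. `count_tokens` is a hypothetical placeholder (a whitespace split) for the model's real tokenizer, and `trim_history` and the constants are illustrative names that are not part of any specific backend.

```python
from typing import Callable, Dict, List

# Assumed interpretation of "16K" / "12K" as multiples of 1024 tokens.
LOADED_CONTEXT = 16 * 1024  # context window the model is loaded with
PROMPT_BUDGET = 12 * 1024   # recommended maximum prompt size

Message = Dict[str, str]


def count_tokens(text: str) -> int:
    """Hypothetical placeholder: replace with the model's real tokenizer."""
    return len(text.split())


def trim_history(
    system: Message,
    history: List[Message],
    budget: int = PROMPT_BUDGET,
    counter: Callable[[str], int] = count_tokens,
) -> List[Message]:
    """Keep the system prompt plus as many recent messages as fit in `budget` tokens."""
    used = counter(system["content"])
    kept: List[Message] = []
    # Walk backwards so the newest turns are kept first; stop at the first
    # message that does not fit so the kept history stays contiguous.
    for msg in reversed(history):
        cost = counter(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    # Restore chronological order after the backwards walk.
    return [system] + list(reversed(kept))
```

One reading of the advice is that capping the prompt at 12K while loading at 16K leaves about 4K tokens (`LOADED_CONTEXT - PROMPT_BUDGET`) of headroom for the reply, and keeps most of the prompt within the range where recall tested as accurate.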

The red results in the Needle in a Haystack tests for this model are usually caused by odd answer artifacts, such as the model giving only part of the key or adding extra commentary. In other words, the model found the answer, but its output was incomplete or included additional text.

For example, ' 3211' or '3211 and' instead of '321142'. Weird. That is why it stays coherent and semi-reliable for roleplay.
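The distinction between a true miss and an incomplete or padded answer can be made concrete with a small grader. This is a hypothetical sketch, not the scoring method used in the tests shown in the images. `classify_answer` and its labels are illustrative names, and the function only inspects digit runs in the raw output.

```python
import re


def classify_answer(answer: str, key: str) -> str:
    """Label a needle-in-a-haystack answer relative to the expected numeric key.

    Labels (hypothetical, for illustration):
      exact   - the answer is exactly the key, ignoring surrounding whitespace
      extra   - the full key appears, but with additional text around it
      partial - only a fragment of the key's digits appears
      miss    - no fragment of the key appears at all
    """
    stripped = answer.strip()
    if stripped == key:
        return "exact"
    # Pull out every run of digits the model produced.
    runs = re.findall(r"\d+", stripped)
    if key in runs:
        return "extra"
    # Any digit run contained in the key counts, so even very short
    # fragments are labeled partial rather than miss.
    if any(run in key for run in runs):
        return "partial"
    return "miss"
```

Under this labeling, both ' 3211' and '3211 and' come out as `partial` rather than `miss` for the key '321142', matching the point above: the model located the needle but reported it incompletely.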