---

license: mit

language:

- en

base_model:

- ResembleAI/chatterbox

pipeline_tag: text-to-speech

tags:

- gguf-connector

---

## gguf quantized version of chatterbox

- base model from [resembleai](https://huggingface.co/ResembleAI)

- text-to-speech synthesis

### **run it with gguf-connector**

```

ggc c2

```

| Prompt | Audio Sample |

|--------|---------------|

|`Hey Connector, why your appearance looks so stupid?` `Oh, really? maybe I ate too much smart beans.` `Wow. Amazing.` `Let's go to get some more smart beans and you will become stupid as well.` | 🎧 **audio-sample-1** |

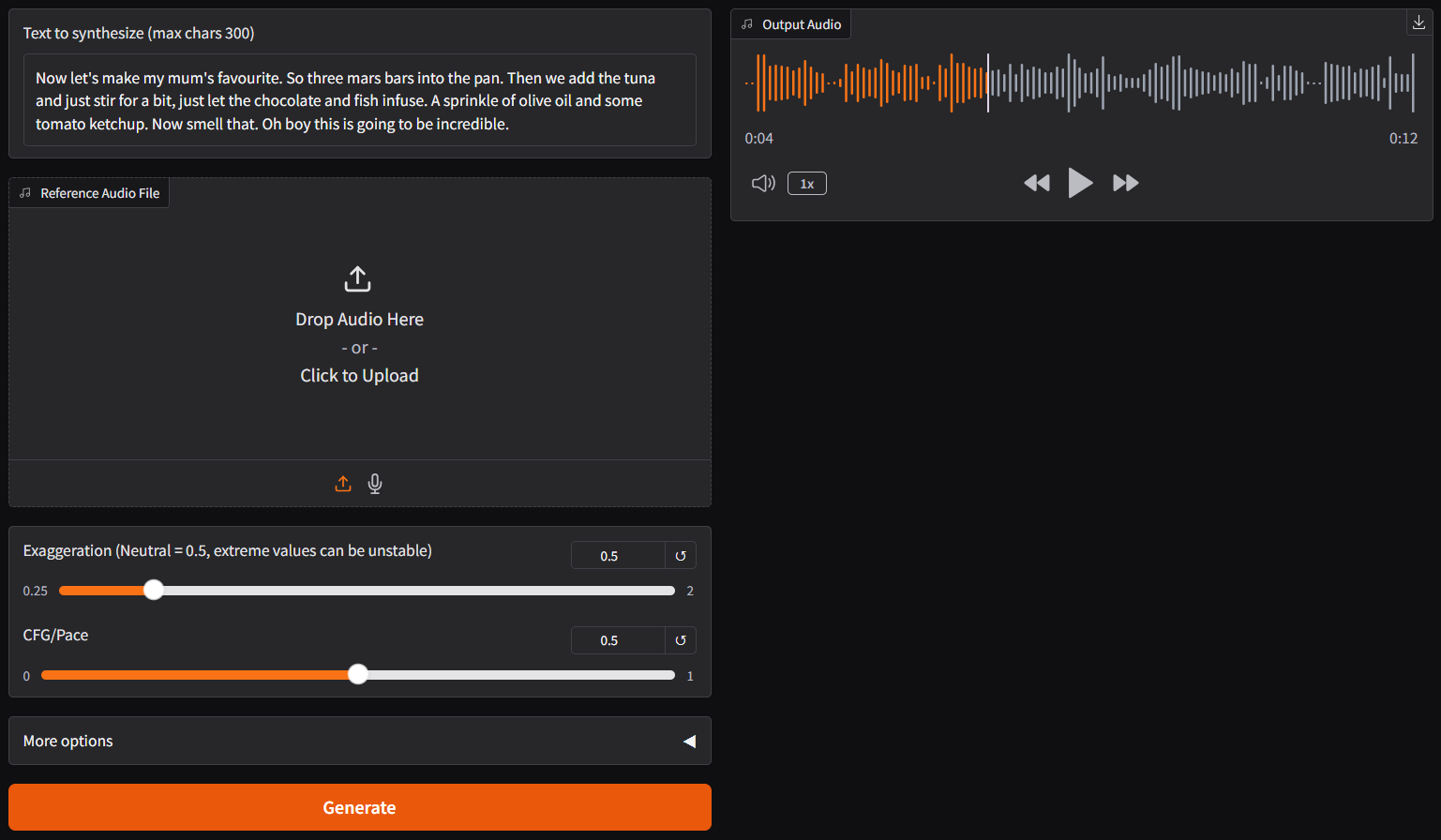

|`Now let's make my mum's favourite. So three mars bars into the pan. Then we add the tuna and just stir for a bit, just let the chocolate and fish infuse. ` `A sprinkle of olive oil and some tomato ketchup. Now smell that. Oh boy this is going to be incredible.` | 🎧 **audio-sample-2** |

### **review/reference**

- simply run the command above (`ggc c2`) in a console/terminal

- select a `vae`, a `clip(encoder)` and a `model` file from the current directory to interact with (the prompts name them **ve**, **t3** and **s3gen**; see example below)

>

>GGUF file(s) available. Select which one for **ve**:

>

>1. s3gen-bf16.gguf

>2. s3gen-f16.gguf

>3. s3gen-f32.gguf

>4. t3_cfg-q2_k.gguf

>5. t3_cfg-q4_k_m.gguf

>6. t3_cfg-q6_k.gguf

>7. ve_fp32-f16.gguf (recommended)

>8. ve_fp32-f32.gguf

>

>Enter your choice (1 to 8): 7

>

>ve file: ve_fp32-f16.gguf is selected!

>

>GGUF file(s) available. Select which one for **t3**:

>

>1. s3gen-bf16.gguf

>2. s3gen-f16.gguf

>3. s3gen-f32.gguf

>4. t3_cfg-q2_k.gguf

>5. t3_cfg-q4_k_m.gguf (recommended)

>6. t3_cfg-q6_k.gguf

>7. ve_fp32-f16.gguf

>8. ve_fp32-f32.gguf

>

>Enter your choice (1 to 8): 5

>

>t3 file: t3_cfg-q4_k_m.gguf is selected!

>

>GGUF file(s) available. Select which one for **s3gen**:

>

>1. s3gen-bf16.gguf (recommended)

>2. s3gen-f16.gguf (for non-cuda user)

>3. s3gen-f32.gguf

>4. t3_cfg-q2_k.gguf

>5. t3_cfg-q4_k_m.gguf

>6. t3_cfg-q6_k.gguf

>7. ve_fp32-f16.gguf

>8. ve_fp32-f32.gguf

>

>Enter your choice (1 to 8): _

>
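The menu above lists every `.gguf` file in the current directory for each prompt. A minimal Python sketch of a pre-flight check is shown here; it is not gguf-connector's own code. The helper names (`list_gguf_files`, `candidates_for`, `missing_components`) are hypothetical, and matching files by the prefixes `ve`, `t3` and `s3gen` is an assumption based only on the file names in the example.

```python
from pathlib import Path

# Assumed component prefixes, taken from the file names in the example menu.
COMPONENT_PREFIXES = ("ve", "t3", "s3gen")


def list_gguf_files(directory="."):
    """Return the sorted names of all .gguf files found directly in `directory`."""
    return sorted(p.name for p in Path(directory).glob("*.gguf"))


def candidates_for(component, filenames):
    """Return the file names that start with the given component prefix."""
    return [name for name in filenames if name.startswith(component)]


def missing_components(filenames):
    """Return the component prefixes for which no matching file is present."""
    return [c for c in COMPONENT_PREFIXES if not candidates_for(c, filenames)]
```

Calling `missing_components(list_gguf_files())` before launching gives a quick hint about which file still needs to be downloaded into the working directory.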

- note: as of the latest update, only the tokenizer is pulled to the cache automatically on first launch; you need to prepare the **model**, **encoder** and **vae** files yourself, the same way the [vision](https://huggingface.co/calcuis/llava-gguf) connector works; this lets you mix and match files and is more flexible

- runs entirely offline; the lazy webui is served from the local URL http://127.0.0.1:7860

- gguf-connector ([pypi](https://pypi.org/project/gguf-connector))
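To confirm that the local web UI mentioned above is reachable, a small check like the following can help. It is only a sketch: `webui_is_up` is a hypothetical helper, and it tests nothing more than whether something accepts TCP connections on the host and port from the URL above.

```python
import socket


def webui_is_up(host="127.0.0.1", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

Run `webui_is_up()` after starting `ggc c2`; a `False` result usually means the server has not finished starting or is listening on a different port.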