Update README.md


README.md

CHANGED

|

@@ -40,8 +40,24 @@ tags:

|

|

| 40 |

- gguf-connector

|

| 41 |

---

|

| 42 |

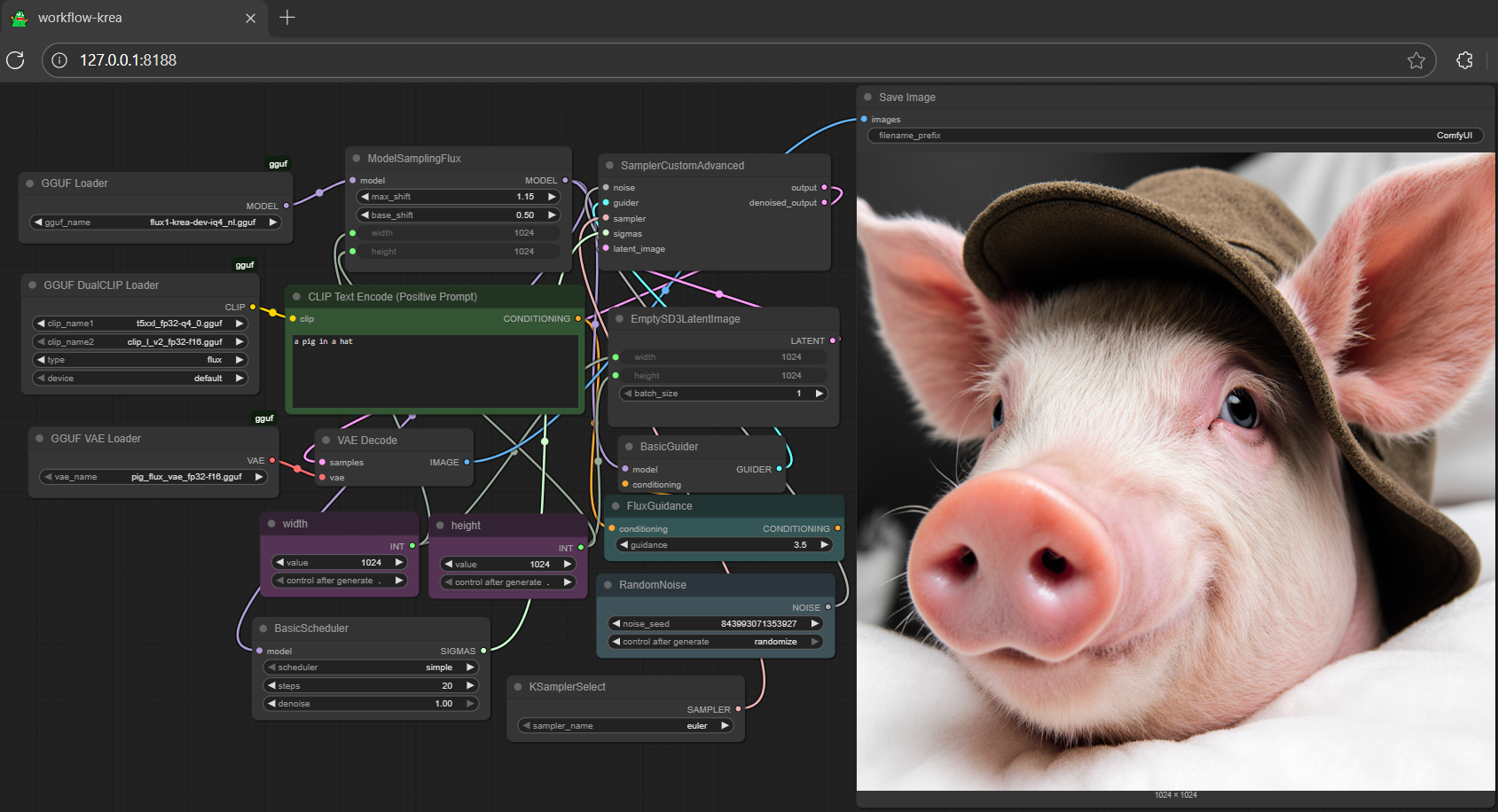

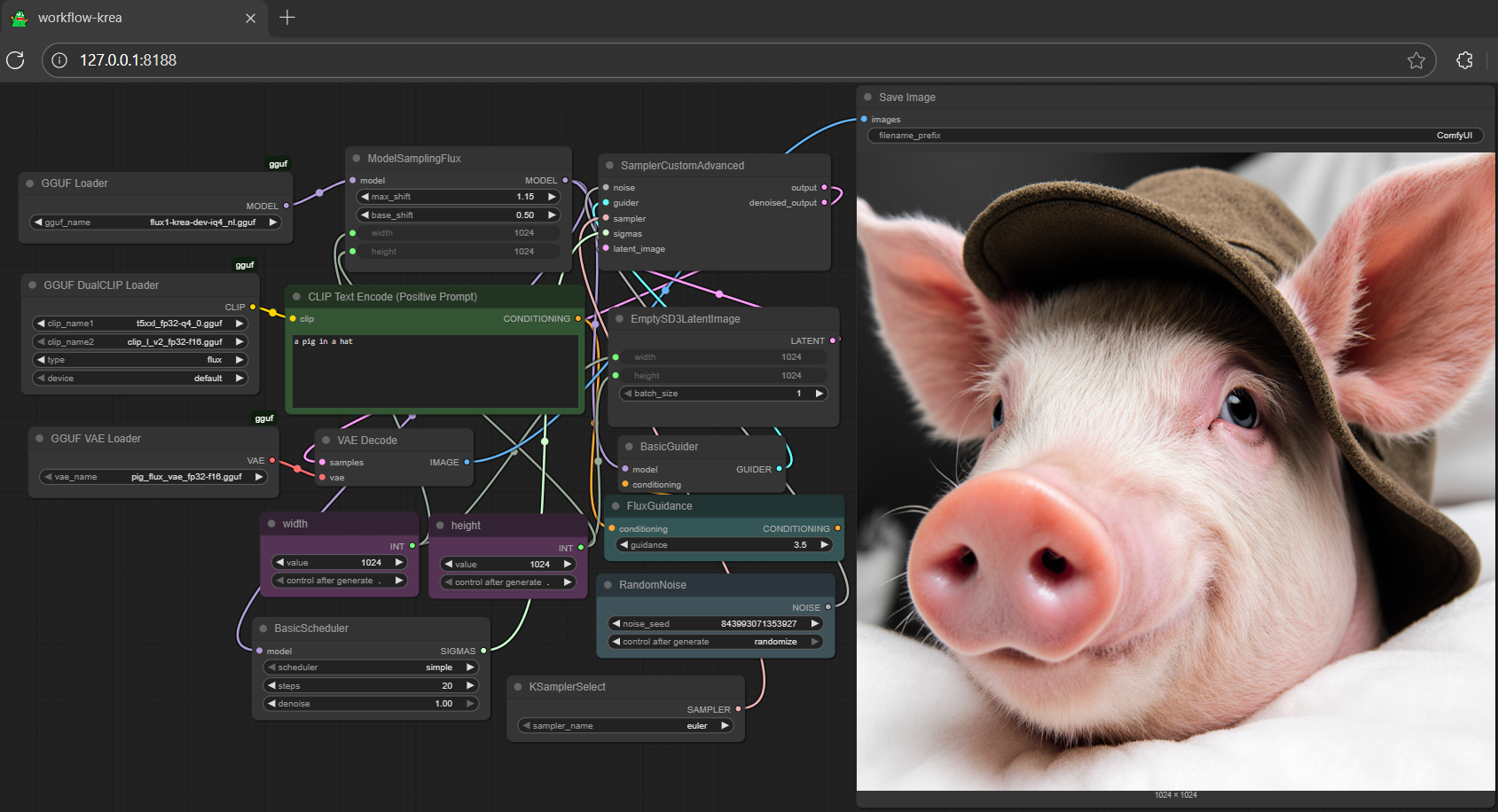

# **gguf quantized version of krea**

|

| 43 |

-

- run it with

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 44 |

|

|

|

|

| 45 |

```py

|

| 46 |

import torch

|

| 47 |

from transformers import T5EncoderModel

|

|

@@ -89,40 +105,6 @@ image.save("output.png")

|

|

| 89 |

|

| 90 |

|

| 91 |

|

| 92 |

-

## **run it with gguf-connector**

|

| 93 |

-

- able to opt - 2-bit, 4-bit or 8-bit model for dequant while executing:

|

| 94 |

-

```

|

| 95 |

-

ggc k4

|

| 96 |

-

```

|

| 97 |

-

|

| 98 |

-

|

| 99 |

-

|

| 100 |

-

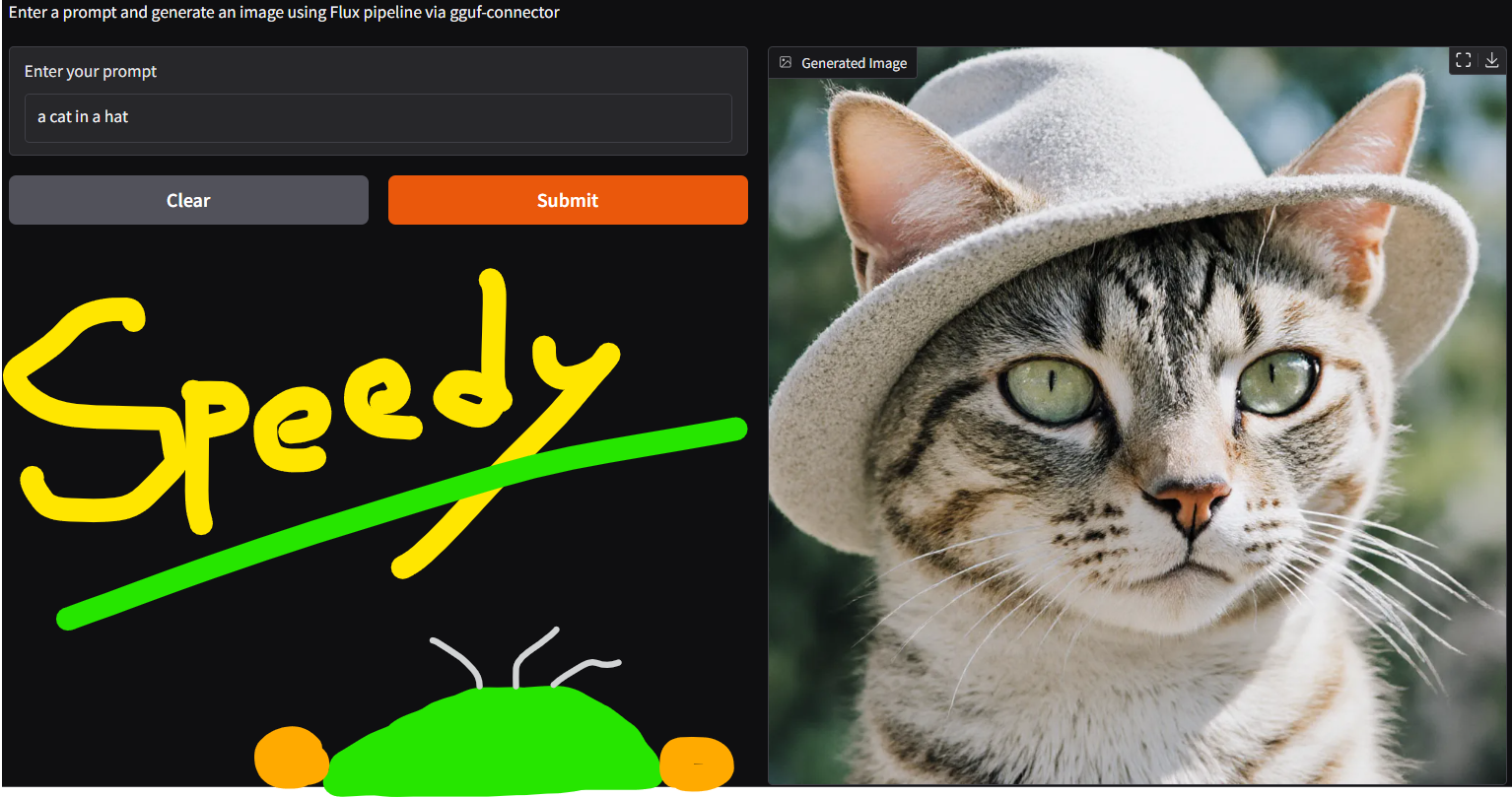

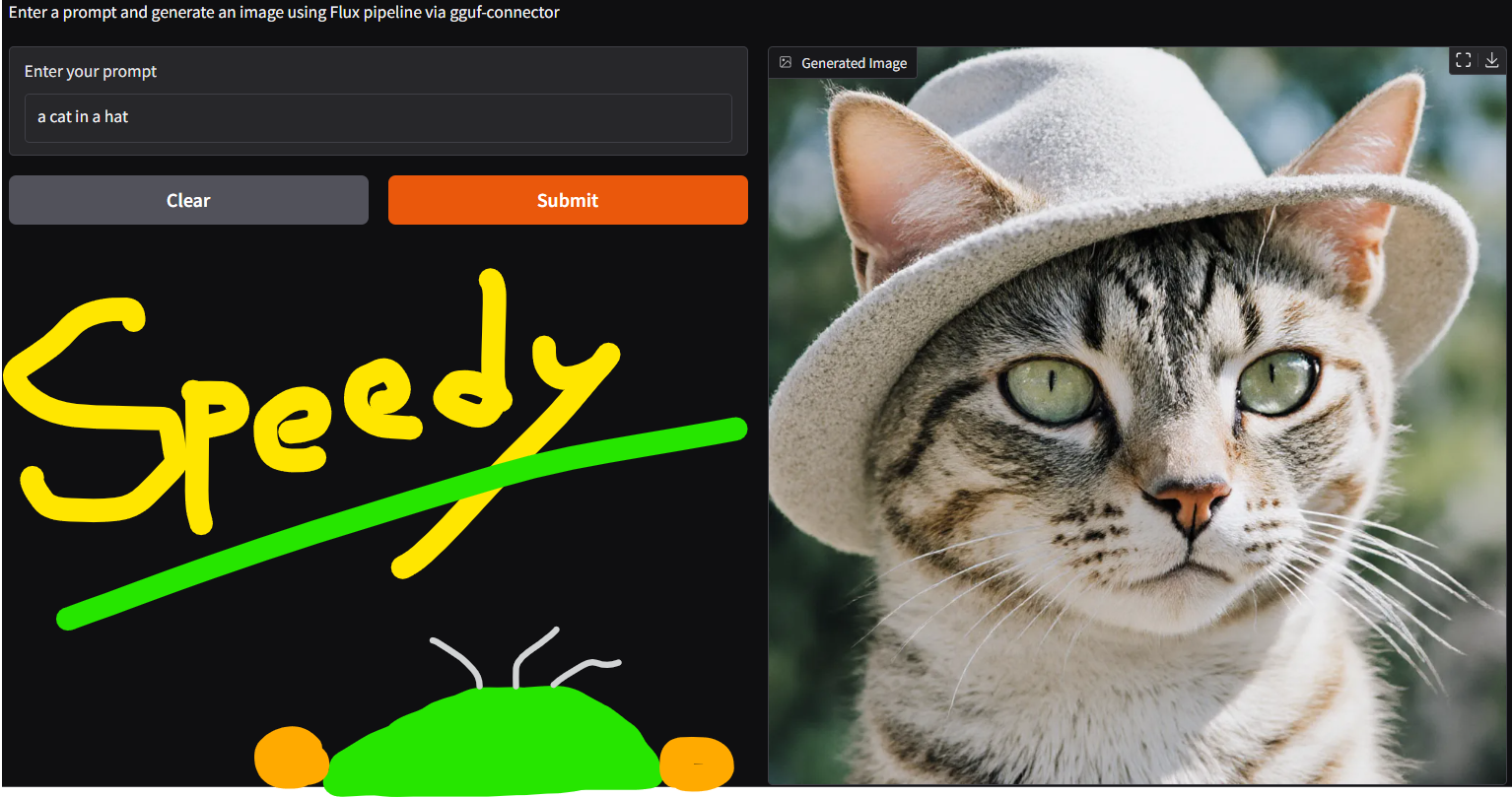

### 🐷 Krea 7 Image Generator (connector mode)

|

| 101 |

-

- opt a `gguf` file straight in the current directory to interact with

|

| 102 |

-

```

|

| 103 |

-

ggc k7

|

| 104 |

-

```

|

| 105 |

-

|

| 106 |

-

> device auto-detection logic applied (different by system)

|

| 107 |

-

>>>

|

| 108 |

-

>

|

| 109 |

-

>GGUF file(s) available. Select which one to use:

|

| 110 |

-

>

|

| 111 |

-

>1. flux1-krea-dev-q2_k.gguf

|

| 112 |

-

>2. flux1-krea-dev-q4_0.gguf

|

| 113 |

-

>3. flux1-krea-dev-q8_0.gguf

|

| 114 |

-

>

|

| 115 |

-

>Enter your choice (1 to 3): _

|

| 116 |

-

>>>

|

| 117 |

-

|

| 118 |

-

- k7 supports all t, k, s quants plus iq4_nl, iq4_xs, iq3_s, iq3_xxs and iq2_s

|

| 119 |

-

- compatible with kontext and flux1-dev files as well; try it out!

|

| 120 |

-

|

| 121 |

-

### All-in-one flux connector

|

| 122 |

-

```

|

| 123 |

-

ggc k

|

| 124 |

-

```

|

| 125 |

-

|

| 126 |

### **reference**

|

| 127 |

- base model from [black-forest-labs](https://huggingface.co/black-forest-labs)

|

| 128 |

- diffusers from [huggingface](https://github.com/huggingface/diffusers)

|

|

|

|

| 40 |

- gguf-connector

|

| 41 |

---

|

| 42 |

# **gguf quantized version of krea**

|

| 43 |

+

- run it straight with `gguf-connector`

|

| 44 |

+

- opt a `gguf` file in the current directory to interact with by:

|

| 45 |

+

```

|

| 46 |

+

ggc k

|

| 47 |

+

```

|

| 48 |

+

>

|

| 49 |

+

>GGUF file(s) available. Select which one to use:

|

| 50 |

+

>

|

| 51 |

+

>1. flux1-krea-dev-q2_k.gguf

|

| 52 |

+

>2. flux1-krea-dev-q4_0.gguf

|

| 53 |

+

>3. flux1-krea-dev-q8_0.gguf

|

| 54 |

+

>

|

| 55 |

+

>Enter your choice (1 to 3): _

|

| 56 |

+

>>>

|

| 57 |

+

|

| 58 |

+

|

| 59 |

|

| 60 |

+

- run it with diffusers (see example inference below)

|

| 61 |

```py

|

| 62 |

import torch

|

| 63 |

from transformers import T5EncoderModel
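The updated README has `ggc k` list the `.gguf` files in the current directory and ask for a number. The snippet below is a minimal, hypothetical sketch of that interaction, written only to illustrate the flow. The helper names (`list_gguf_files`, `format_menu`, `resolve_choice`, `pick_gguf`) are made up here and are not part of gguf-connector. The README does not show how the tool actually implements this step.

```py
# Hypothetical sketch of the numbered picker that `ggc k` shows; this is NOT
# gguf-connector's source, and every name here is made up for illustration.
from pathlib import Path


def list_gguf_files(directory="."):
    """Return the names of the .gguf files in `directory`, sorted alphabetically."""
    return sorted(
        p.name
        for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() == ".gguf"
    )


def format_menu(names):
    """Build a prompt in the same shape as the README's sample output."""
    lines = ["GGUF file(s) available. Select which one to use:", ""]
    lines += [f"{i}. {name}" for i, name in enumerate(names, start=1)]
    lines += ["", f"Enter your choice (1 to {len(names)}): "]
    return "\n".join(lines)


def resolve_choice(names, answer):
    """Map a typed answer such as '2' to a file name; raise ValueError if invalid."""
    index = int(answer.strip())  # int() itself raises ValueError for non-numbers
    if not 1 <= index <= len(names):
        raise ValueError(f"choice must be between 1 and {len(names)}")
    return names[index - 1]


def pick_gguf(directory="."):
    """Interactive wrapper: print the menu, read one line, return the chosen name."""
    names = list_gguf_files(directory)
    if not names:
        raise FileNotFoundError(f"no .gguf files found in {directory!r}")
    return resolve_choice(names, input(format_menu(names)))
```

Suppose `pick_gguf()` is called from a script in a folder holding the three files listed above. It would print the same numbered list and return the chosen file name. Parsing and validation sit in `resolve_choice`, so that part can be tested without a terminal.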

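The removed `ggc k4` text mentions choosing a 2-bit, 4-bit or 8-bit model "for dequant while executing". The file names carry the ggml quant types `q2_k`, `q4_0` and `q8_0`. The pure-Python sketch below illustrates dequantization for the two simplest of these. It assumes the ggml reference block layouts: 32 weights per block plus one fp16 scale. `q2_k` uses a more involved super-block layout and is left out. This is not gguf-connector's code, which may dequantize differently (for example on the GPU). The function names are illustrative.

```py
# Sketch of ggml-style block dequantization for q8_0 and q4_0, assuming the
# ggml reference layouts: each block holds 32 weights plus one fp16 scale `d`.
# Not gguf-connector's implementation; names are illustrative.
import struct

QK = 32                         # weights per block for both types
Q8_0_BLOCK_BYTES = 2 + QK       # fp16 scale + 32 signed 8-bit values = 34 bytes
Q4_0_BLOCK_BYTES = 2 + QK // 2  # fp16 scale + 16 bytes of packed 4-bit values = 18 bytes


def dequant_q8_0(block):
    """q8_0: x[i] = d * q[i], where q[i] is a signed 8-bit integer."""
    if len(block) != Q8_0_BLOCK_BYTES:
        raise ValueError("q8_0 block must be 34 bytes")
    (d,) = struct.unpack_from("<e", block, 0)        # little-endian fp16 scale
    qs = struct.unpack_from(f"<{QK}b", block, 2)     # 32 signed bytes
    return [d * q for q in qs]


def dequant_q4_0(block):
    """q4_0: byte j holds weight j in its low nibble and weight j + 16 in its
    high nibble; each nibble is offset by 8, so x = d * (nibble - 8)."""
    if len(block) != Q4_0_BLOCK_BYTES:
        raise ValueError("q4_0 block must be 18 bytes")
    (d,) = struct.unpack_from("<e", block, 0)
    out = [0.0] * QK
    for j, byte in enumerate(block[2:]):
        out[j] = d * ((byte & 0x0F) - 8)
        out[j + QK // 2] = d * ((byte >> 4) - 8)
    return out


def bits_per_weight(block_bytes, block_size=QK):
    """Storage cost of one block, spread over its weights."""
    return block_bytes * 8 / block_size
```

The block sizes also give the storage cost. `q8_0` uses 34 bytes per 32 weights, or 8.5 bits per weight. `q4_0` uses 18 bytes per 32 weights, or 4.5 bits per weight. A `q4_0` file should therefore come out at a little over half the size of a `q8_0` file of the same model, assuming most tensors use that type.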