---
license: other
base_model: stabilityai/stable-diffusion-xl-base-1.0
tags:
- stable-diffusion-xl
- stable-diffusion-xl-diffusers
- text-to-image
- diffusers
- controlnet
inference: false
---

# Important Notice

This is a copy of [thibaud/controlnet-openpose-sdxl-1.0](https://huggingface.co/thibaud/controlnet-openpose-sdxl-1.0) in the safetensors format, allowing direct usage from diffusers.

# SDXL-controlnet: OpenPose (v2)

These are ControlNet weights trained on stabilityai/stable-diffusion-xl-base-1.0 with OpenPose (v2) conditioning. You can find some example images below.

prompt: a ballerina, romantic sunset, 4k photo

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

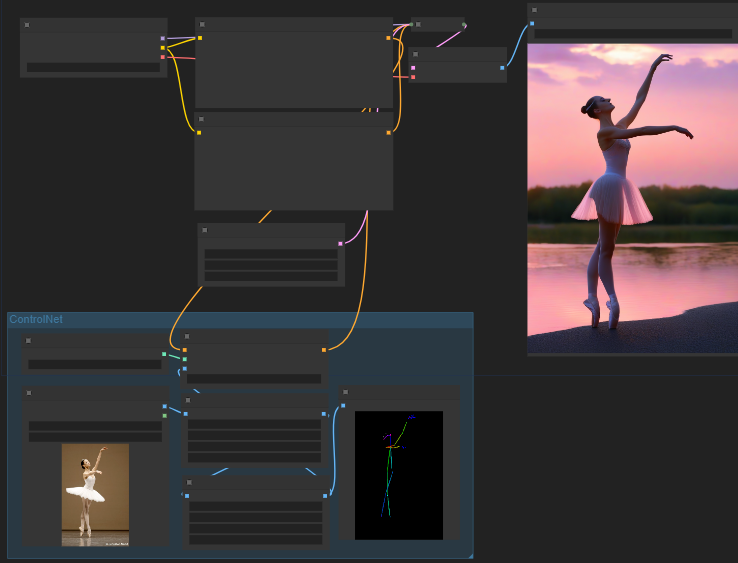

### Comfy Workflow

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

(Image is from ComfyUI, you can drag and drop in Comfy to use it as workflow)

|

| 32 |

+

|

| 33 |

+

License: refers to the OpenPose's one.

|

| 34 |

+

|

| 35 |

+

### Using in 🧨 diffusers

First, install all the libraries:

```bash
pip install -q controlnet_aux transformers accelerate
pip install -q git+https://github.com/huggingface/diffusers
```

Now, we're ready to make Darth Vader dance:

```python
import torch
from controlnet_aux import OpenposeDetector
from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline
from diffusers.utils import load_image

# Compute the OpenPose conditioning image from a reference photo.
openpose = OpenposeDetector.from_pretrained("lllyasviel/ControlNet")

image = load_image(
    "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/person.png"
)
openpose_image = openpose(image)

# Initialize the ControlNet pipeline in half precision.
controlnet = ControlNetModel.from_pretrained(
    "dimitribarbot/controlnet-openpose-sdxl-1.0-safetensors", torch_dtype=torch.float16
)
pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", controlnet=controlnet, torch_dtype=torch.float16
)
# Keep submodels on the CPU and move each one to the GPU only while it runs, to reduce VRAM usage.
pipe.enable_model_cpu_offload()

# Infer.
prompt = "Darth vader dancing in a desert, high quality"
negative_prompt = "low quality, bad quality"
images = pipe(
    prompt,
    negative_prompt=negative_prompt,
    num_inference_steps=25,
    num_images_per_prompt=4,
    image=openpose_image.resize((1024, 1024)),  # SDXL's native resolution
    generator=torch.manual_seed(97),  # fixed seed for reproducible results
).images

images[0]  # displays the first image in a notebook; in a script, use images[0].save("out.png")
```
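
The example above squashes the pose map to 1024×1024, which stretches the pose when the source photo is not square. Below is a minimal sketch of an aspect-preserving alternative. The helper name `sdxl_size`, the roughly one-megapixel target, and snapping both sides to multiples of 64 are assumptions made for illustration, not part of the original card.

```python
import math


def sdxl_size(width: int, height: int, target_pixels: int = 1024 * 1024, multiple: int = 64) -> tuple[int, int]:
    """Hypothetical helper: return a (width, height) with about target_pixels pixels
    and the same aspect ratio, with both sides snapped to a multiple of `multiple`."""
    aspect = width / height
    # Solve new_w * new_h = target_pixels subject to new_w / new_h = aspect.
    new_h = math.sqrt(target_pixels / aspect)
    new_w = new_h * aspect

    def snap(x: float) -> int:
        # Round to the nearest multiple, but never go below one multiple.
        return max(multiple, round(x / multiple) * multiple)

    return snap(new_w), snap(new_h)


if __name__ == "__main__":
    print(sdxl_size(512, 512))  # -> (1024, 1024)
    print(sdxl_size(768, 512))  # -> (1280, 832)
```

With the pipeline above, one could pass `image=openpose_image.resize(sdxl_size(*openpose_image.size))` (PIL's `Image.size` is `(width, height)`) and, to be explicit, matching `width=` and `height=` arguments to `pipe(...)`.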

Here are some generated examples:

### Training

Trained with the HF🤗 training script documented [here](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/README_sdxl.md).

#### Training data
This checkpoint was first trained for 15,000 steps on laion 6a, with images resized so that their shorter side is at most 768 pixels.
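
Reading "a max minimum dimension of 768" as "downscale each image so its shorter side is at most 768 pixels, keeping the aspect ratio", the rule can be sketched as below. The interpretation, the helper name `cap_min_side`, and the choice never to upscale smaller images are assumptions; the card does not show the actual preprocessing code.

```python
def cap_min_side(width: int, height: int, max_min_side: int = 768) -> tuple[int, int]:
    """Hypothetical helper: downscale so min(width, height) <= max_min_side, keeping aspect ratio."""
    short_side = min(width, height)
    if short_side <= max_min_side:
        # Already within the cap: leave the size unchanged (assumed: no upscaling).
        return width, height
    scale = max_min_side / short_side
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    print(cap_min_side(1024, 2048))  # -> (768, 1536)
    print(cap_min_side(640, 480))    # -> (640, 480)
```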

#### Compute
One 1xA100 machine (thanks a lot to HF🤗 for providing the compute!)

#### Batch size
Data parallel with a single-GPU batch size of 2 and gradient accumulation of 8.
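
With a per-GPU batch of 2 and 8 accumulation steps, each optimizer update sees 2 × 8 = 16 samples per GPU, which is 16 in total on a single A100. The toy sketch below assumes the common convention of dividing each micro-batch loss by the number of accumulation steps, and checks that accumulating 8 micro-batch gradients of size 2 gives the same gradient as one batch of 16. The 1-D linear model and its data are purely illustrative.

```python
PER_GPU_BATCH = 2
ACCUM_STEPS = 8
NUM_GPUS = 1  # "one 1xA100 machine"
EFFECTIVE_BATCH = PER_GPU_BATCH * ACCUM_STEPS * NUM_GPUS  # 16


def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """d/dw of mean((w*x - y)^2) for a toy 1-D linear model y_hat = w*x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Sum of per-micro-batch gradients, each scaled by 1/ACCUM_STEPS."""
    total = 0.0
    for step in range(ACCUM_STEPS):
        start = step * PER_GPU_BATCH
        mb_x = xs[start:start + PER_GPU_BATCH]
        mb_y = ys[start:start + PER_GPU_BATCH]
        total += grad_mse(w, mb_x, mb_y) / ACCUM_STEPS
    return total


if __name__ == "__main__":
    xs = [float(i) for i in range(EFFECTIVE_BATCH)]
    ys = [3.0 * x + 1.0 for x in xs]
    full = grad_mse(0.5, xs, ys)
    accum = accumulated_grad(0.5, xs, ys)
    print(EFFECTIVE_BATCH, abs(full - accum) < 1e-9)  # -> 16 True
```

Because every micro-batch has the same size, averaging the 8 micro-batch means reproduces the mean over all 16 samples.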

#### Hyperparameters
Constant learning rate of 8e-5.

#### Mixed precision
fp16
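
For readers who want to try a similar run with the linked diffusers script, the sketch below maps the settings stated on this card onto an `accelerate launch` command for `train_controlnet_sdxl.py`. It is an illustration, not the author's actual command: the dataset name and output directory are hypothetical placeholders, `--lr_scheduler=constant` is our reading of "constant learning rate", and only the first 15,000 training steps are covered. Check the flag names against the script's `--help` for your diffusers version.

```python
import shlex

# Settings stated on this card, expressed as train_controlnet_sdxl.py flags.
CARD_SETTINGS = {
    "train_batch_size": 2,
    "gradient_accumulation_steps": 8,
    "learning_rate": 8e-5,
    "lr_scheduler": "constant",
    "mixed_precision": "fp16",
    "max_train_steps": 15_000,
}


def build_train_command(dataset_name: str, output_dir: str) -> list[str]:
    """Return an argv list; dataset_name and output_dir are caller-supplied placeholders."""
    cmd = [
        "accelerate", "launch", "train_controlnet_sdxl.py",
        "--pretrained_model_name_or_path=stabilityai/stable-diffusion-xl-base-1.0",
        f"--dataset_name={dataset_name}",
        f"--output_dir={output_dir}",
    ]
    # Python formats 8e-5 as "8e-05", which argparse still parses as a float.
    cmd += [f"--{name}={value}" for name, value in CARD_SETTINGS.items()]
    return cmd


if __name__ == "__main__":
    # "my-openpose-dataset" and "controlnet-openpose-sdxl" are hypothetical placeholders.
    print(shlex.join(build_train_command("my-openpose-dataset", "controlnet-openpose-sdxl")))
```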
