Aero-1-Audio

Aero-1-Audio is a compact audio model adept at various audio tasks, including speech recognition, audio understanding, and following audio instructions.

Built on the Qwen-2.5-1.5B language model, Aero delivers strong performance across multiple audio benchmarks while remaining parameter-efficient, even when compared with larger models such as Whisper, Qwen-2-Audio, and Phi-4-Multimodal, or with commercial services such as ElevenLabs/Scribe.

Aero was trained within one day on 16 H100 GPUs using just 50k hours of audio data. This suggests that audio model training can be sample-efficient when the data is high-quality and well filtered.

Aero can accurately perform ASR and audio understanding on continuous audio inputs up to 15 minutes long, a scenario that we find remains challenging for other models.
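For recordings longer than the 15-minute window mentioned above, one option is to split the waveform into consecutive segments before inference. The following is a minimal sketch and not part of the Aero codebase: `split_waveform` and its parameters are hypothetical names, and fixed-length cuts like these may break words at segment boundaries.

```python
def split_waveform(waveform, sampling_rate=16000, max_seconds=15 * 60):
    """Split a 1-D waveform into consecutive chunks of at most max_seconds.

    Hypothetical helper for illustration; works on any sliceable sequence
    (for example a Python list or a NumPy array returned by librosa.load).
    """
    if sampling_rate <= 0 or max_seconds <= 0:
        raise ValueError("sampling_rate and max_seconds must be positive")
    max_samples = int(sampling_rate * max_seconds)
    return [
        waveform[start:start + max_samples]
        for start in range(0, len(waveform), max_samples)
    ]


# Tiny fake "waveform": 10 samples at 2 Hz, cut into 2-second chunks.
chunks = split_waveform(list(range(10)), sampling_rate=2, max_seconds=2)
print([len(chunk) for chunk in chunks])  # [4, 4, 2]
```

With the defaults (16 kHz, 15 minutes), each chunk holds at most 14,400,000 samples, and a clip that already fits is returned as a single chunk.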

- Developed by: LMMs-Lab

- Model type: LLM + Audio Encoder

- Language(s) (NLP): English

- License: MIT

How to Get Started with the Model

Use the code below to get started with the model.

We recommend installing transformers with

python3 -m pip install transformers@git+https://github.com/huggingface/[email protected]

as this is the transformers version we used to build this model.

Simple Demo

from transformers import AutoProcessor, AutoModelForCausalLM
import torch
import librosa


def load_audio():
    # Load librosa's "libri1" example recording, resampled to 16 kHz.
    return librosa.load(librosa.ex("libri1"), sr=16000)[0]


processor = AutoProcessor.from_pretrained("lmms-lab/Aero-1-Audio-1.5B", trust_remote_code=True)

# We recommend flash attention 2 for better performance.
# Install it with `pip install --no-build-isolation flash-attn`.
# If you do not want flash attention, use "sdpa" or "eager" instead.
model = AutoModelForCausalLM.from_pretrained(
    "lmms-lab/Aero-1-Audio-1.5B",
    device_map="cuda",
    torch_dtype="auto",
    attn_implementation="flash_attention_2",
    trust_remote_code=True,
)
model.eval()

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "audio_url",
                "audio": "placeholder",
            },
            {
                "type": "text",
                "text": "Please transcribe the audio",
            },
        ],
    }
]

audios = [load_audio()]

prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, audios=audios, sampling_rate=16000, return_tensors="pt")
inputs = {k: v.to("cuda") for k, v in inputs.items()}

outputs = model.generate(**inputs, eos_token_id=151645, max_new_tokens=4096)

# Keep only the newly generated tokens by dropping the prompt prefix.
cont = outputs[:, inputs["input_ids"].shape[-1] :]
print(processor.batch_decode(cont, skip_special_tokens=True)[0])
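The comments in the demo mention `sdpa` and `eager` as alternatives when flash attention is not installed. One way to choose automatically is sketched below, using only the standard library. `pick_attn_implementation` is a hypothetical helper name, and the check only tests whether the `flash_attn` package can be found. It does not verify that the installed build works on your GPU.

```python
import importlib.util


def pick_attn_implementation():
    """Return "flash_attention_2" if flash_attn is importable, else "sdpa".

    Hypothetical helper for illustration; it does not test GPU compatibility.
    """
    if importlib.util.find_spec("flash_attn") is not None:
        return "flash_attention_2"
    return "sdpa"


attn_implementation = pick_attn_implementation()
print(attn_implementation)
```

The result can then be passed as `attn_implementation=attn_implementation` to `AutoModelForCausalLM.from_pretrained` in place of the hard-coded value.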

Batch Inference

The model supports batch inference with transformers. For example:

from transformers import AutoProcessor, AutoModelForCausalLM
import torch
import librosa


def load_audio():
    # Load librosa's "libri1" example recording, resampled to 16 kHz.
    return librosa.load(librosa.ex("libri1"), sr=16000)[0]


def load_audio_2():
    # Load librosa's "libri2" example recording, resampled to 16 kHz.
    return librosa.load(librosa.ex("libri2"), sr=16000)[0]


processor = AutoProcessor.from_pretrained("lmms-lab/Aero-1-Audio-1.5B", trust_remote_code=True)

# We recommend flash attention 2 for better performance.
# Install it with `pip install --no-build-isolation flash-attn`.
# If you do not want flash attention, use "sdpa" or "eager" instead.
model = AutoModelForCausalLM.from_pretrained(
    "lmms-lab/Aero-1-Audio-1.5B",
    device_map="cuda",
    torch_dtype="auto",
    attn_implementation="flash_attention_2",
    trust_remote_code=True,
)
model.eval()

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "audio_url",
                "audio": "placeholder",
            },
            {
                "type": "text",
                "text": "Please transcribe the audio",
            },
        ],
    }
]

# One conversation per audio clip in the batch.
messages = [messages, messages]
audios = [load_audio(), load_audio_2()]

# Pad on the left so every prompt ends at the same position before generation.
processor.tokenizer.padding_side = "left"

prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, audios=audios, sampling_rate=16000, return_tensors="pt", padding=True)
inputs = {k: v.to("cuda") for k, v in inputs.items()}

outputs = model.generate(**inputs, eos_token_id=151645, pad_token_id=151643, max_new_tokens=4096)

# Keep only the newly generated tokens by dropping the (padded) prompt prefix.
cont = outputs[:, inputs["input_ids"].shape[-1] :]
print(processor.batch_decode(cont, skip_special_tokens=True))
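The batch demo sets the tokenizer's padding side to left and then slices the output at the prompt length. The toy sketch below illustrates why the two go together, using plain Python lists rather than real tokenizer output. `left_pad` is a hypothetical helper, the token ids are made up, and only the pad id 151643 is taken from the demo.

```python
def left_pad(sequences, pad_id):
    """Left-pad token-id lists to equal length; return (ids, attention_mask)."""
    width = max(len(seq) for seq in sequences)
    ids = [[pad_id] * (width - len(seq)) + list(seq) for seq in sequences]
    mask = [[0] * (width - len(seq)) + [1] * len(seq) for seq in sequences]
    return ids, mask


# Made-up prompts of different lengths (not real tokenizer output).
prompts = [[11, 12, 13], [21]]
ids, mask = left_pad(prompts, pad_id=151643)

# Pretend the model appended two new tokens to every row.
outputs = [row + [901, 902] for row in ids]

# Because padding sits on the left, all prompts end at the same index,
# so a single slice removes the prompt from every row.
prompt_len = len(ids[0])
continuations = [row[prompt_len:] for row in outputs]

print(ids)            # [[11, 12, 13], [151643, 151643, 21]]
print(mask)           # [[1, 1, 1], [0, 0, 1]]
print(continuations)  # [[901, 902], [901, 902]]
```

With right padding, the shorter prompt would be followed by pad tokens before its continuation, and the same slice would no longer line up with where generation starts.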

Training Details

Training Data

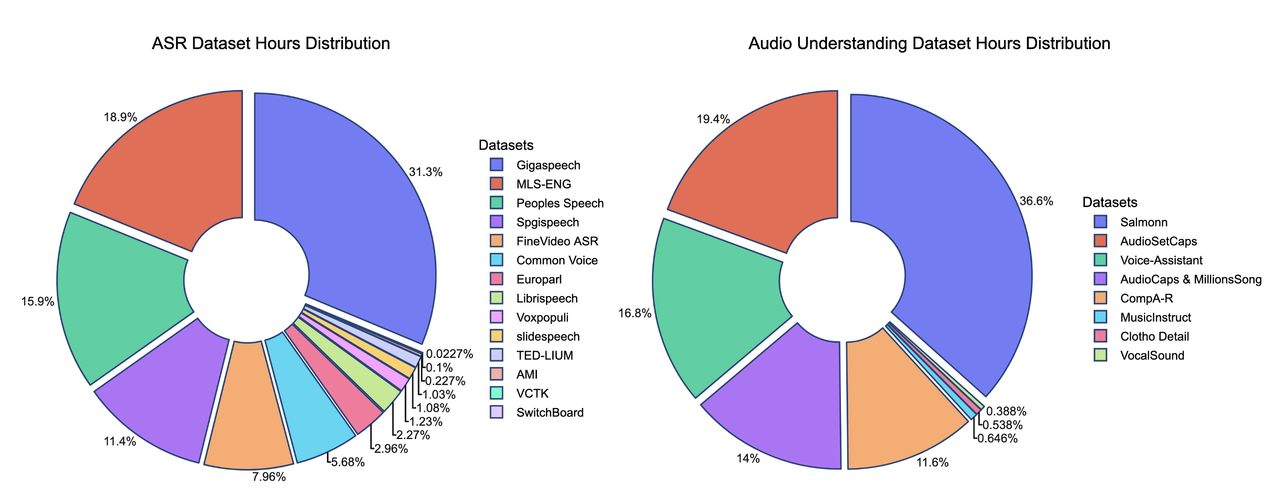

We present the composition of our data mixture here. Our SFT data mixture includes over 20 publicly available datasets, and a comparison with other models highlights how lightweight it is.

*The hours of some training datasets are estimated and may not be fully accurate.

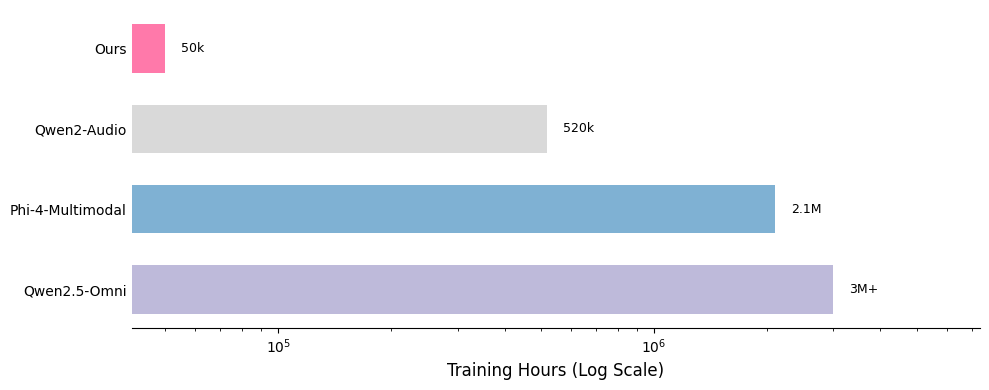

One of the key strengths of our training recipe lies in the quality and quantity of our data. Our training dataset consists of approximately 5 billion tokens, corresponding to around 50,000 hours of audio. Compared with the training data of models such as Qwen-Omni and Phi-4, our dataset is over 100 times smaller, yet our model achieves competitive performance. All data comes from publicly available open-source datasets, and the results highlight the sample efficiency of our training approach. The figures in this section give a detailed breakdown of our data distribution, along with comparisons to other models.
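As a rough sanity check, the figures quoted in this card can be combined into a few back-of-the-envelope ratios. The calculation below is only a sketch: it treats "within one day" as an upper bound of 24 hours, and it assumes the 5 billion tokens map evenly onto the 50,000 hours, which the card does not state.

```python
# Approximate figures quoted in this model card.
total_tokens = 5_000_000_000   # about 5 billion training tokens
audio_hours = 50_000           # about 50,000 hours of audio
num_gpus = 16                  # H100 GPUs
training_hours = 24            # assumed upper bound for "within one day"

tokens_per_audio_hour = total_tokens / audio_hours
tokens_per_audio_second = tokens_per_audio_hour / 3600
gpu_hours = num_gpus * training_hours
audio_hours_per_gpu_hour = audio_hours / gpu_hours

print(f"{tokens_per_audio_hour:,.0f} tokens per hour of audio")
print(f"{tokens_per_audio_second:.2f} tokens per second of audio")
print(f"{gpu_hours} GPU-hours at most")
print(f"{audio_hours_per_gpu_hour:.1f} audio hours processed per GPU-hour at least")
```

Under these assumptions, the data works out to about 100,000 tokens per hour of audio, and the training budget to at most 384 GPU-hours.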
