Update README.md


README.md

CHANGED


@@ -8,7 +8,153 @@ tags:

- model_hub_mixin
- pytorch_model_hub_mixin
---


- Library: https://github.com/nicolas-dufour/CAD

<div align="center">

# Don’t drop your samples! Coherence-aware training benefits Conditional diffusion

<a href="https://nicolas-dufour.github.io/" >Nicolas Dufour</a>, <a href="https://scholar.google.com/citations?user=n_C2h-QAAAAJ&hl=fr&oi=ao" >Victor Besnier</a>, <a href="https://vicky.kalogeiton.info/" >Vicky Kalogeiton</a>, <a href="https://davidpicard.github.io/" >David Picard</a>

</div>

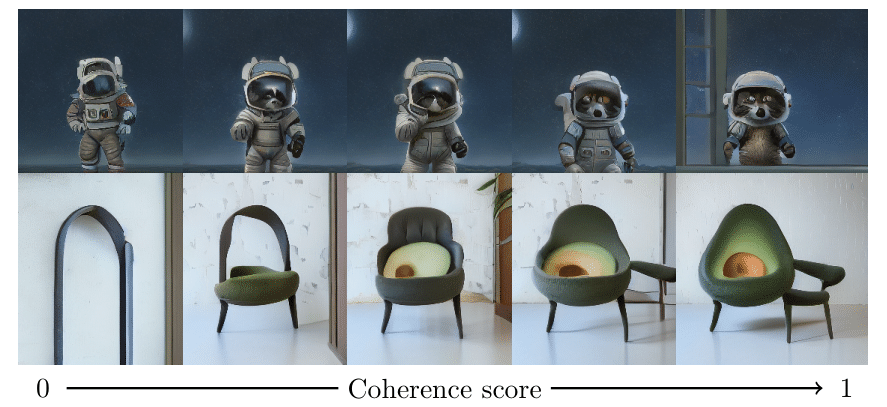

This repo has the code for the paper "Dont Drop Your samples: Coherence-aware training benefits Condition Diffusion" accepted at CVPR 2024 as a Highlight.

|

| 23 |

+

|

| 24 |

+

The core idea is that diffusion model is usually trained on noisy data. The usual solution is to filter massive datapools. We propose a new training method that leverages the coherence of the data to improve the training of diffusion models. We show that this method improves the quality of the generated samples on several datasets.

|

| 25 |

+

|

| 26 |

+

Project website: [https://nicolas-dufour.github.io/cad](https://nicolas-dufour.github.io/cad)

|

| 27 |

+

|

| 28 |

+

## Install

To install, first create a conda environment with Python 3.10:

```bash
conda create -n cad python=3.10
```

Activate the environment:

```bash
conda activate cad
```

For inference only, install the package with pip:

```bash
pip install cad-diffusion
```

## Pretrained models

To use the pretrained model, do the following:

```python
from cad import CADT2IPipeline

pipe = CADT2IPipeline("nicolas-dufour/CAD_512").to("cuda")

prompt = "An avocado armchair"

image = pipe(prompt, cfg=15)
```

If you only want to download the model, without the sampling pipeline, you can do:

```python
from cad import CAD

model = CAD.from_pretrained("nicolas-dufour/CAD_512")
```

Models are hosted on the Hugging Face Hub. The snippets above download them automatically, but the weights can also be found at:

[https://huggingface.co/nicolas-dufour/CAD_256](https://huggingface.co/nicolas-dufour/CAD_256)

[https://huggingface.co/nicolas-dufour/CAD_512](https://huggingface.co/nicolas-dufour/CAD_512)
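If you would rather fetch the weight files yourself, a minimal sketch is shown here. It assumes the `huggingface_hub` package is installed and uses its `snapshot_download` function; the helper name `download_cad_weights` is hypothetical and is not part of the `cad` package. How the downloaded files are then loaded is left to `CAD.from_pretrained`.

```python
def download_cad_weights(repo_id: str = "nicolas-dufour/CAD_512") -> str:
    """Download all files of a Hub repository and return the local folder path.

    Hypothetical helper for illustration; it only wraps `snapshot_download`.
    """
    # Imported lazily so that defining the helper does not require the package.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id)


# Example call (requires network access):
# local_dir = download_cad_weights("nicolas-dufour/CAD_256")
```

The returned path points to the local Hub cache, so repeated calls reuse files that are already downloaded.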

## Using the Pipeline

The `CADT2IPipeline` class provides an interface for generating images from text prompts. Here is how to use it:

### Basic Usage

```python
from cad import CADT2IPipeline

# Initialize the pipeline
pipe = CADT2IPipeline("nicolas-dufour/CAD_512").to("cuda")

# Generate an image from a prompt
prompt = "An avocado armchair"
image = pipe(prompt, cfg=15)
```

### Advanced Configuration

The pipeline can be initialized with several customization options:

```python
from cad import CADT2IPipeline

pipe = CADT2IPipeline(
    model_path="nicolas-dufour/CAD_512",
    sampler="ddim",  # Options: "ddim", "ddpm", "dpm", "dpm_2S", "dpm_2M"
    scheduler="sigmoid",  # Options: "sigmoid", "cosine", "linear"
    postprocessing="sd_1_5_vae",  # Options: "consistency-decoder", "sd_1_5_vae"
    scheduler_start=-3,
    scheduler_end=3,
    scheduler_tau=1.1,
    device="cuda",
)
```
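The README does not spell out what `scheduler_start`, `scheduler_end`, and `scheduler_tau` control. One plausible reading, stated here as an assumption rather than as the library's implementation, is a sigmoid noise schedule of the kind commonly used in the diffusion literature: the signal level follows a sigmoid evaluated between `start` and `end`, with `tau` acting as a temperature. The function names below are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_schedule(t, start=-3.0, end=3.0, tau=1.1, clip_min=1e-9):
    """Signal level gamma(t) for t in [0, 1]: 1 means clean data, ~0 means pure noise.

    Illustrative sketch only; the defaults mirror the values in the config above.
    """
    v_start = sigmoid(start / tau)
    v_end = sigmoid(end / tau)
    value = sigmoid((t * (end - start) + start) / tau)
    # Rescale so that gamma(0) = 1 and gamma(1) = 0, then clip away from zero.
    gamma = (v_end - value) / (v_end - v_start)
    return min(max(gamma, clip_min), 1.0)


# Signal levels at 11 evenly spaced times between 0 and 1.
levels = [sigmoid_schedule(i / 10) for i in range(11)]
```

Under this reading, a larger `tau` flattens the curve toward a linear schedule, while a wider `start`/`end` range spends more of the trajectory near the two extremes.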

### Generation Parameters

The pipeline's `__call__` method accepts various parameters to control the generation process:

```python
image = pipe(
    cond="A beautiful landscape",  # Text prompt or list of prompts
    num_samples=4,  # Number of images to generate
    cfg=15,  # Classifier-free guidance scale
    guidance_type="constant",  # Type of guidance: "constant", "linear"
    guidance_start_step=0,  # Step to start guidance
    coherence_value=1.0,  # Coherence value for sampling
    uncoherence_value=0.0,  # Uncoherence value for sampling
    thresholding_type="clamp",  # Type of thresholding: "clamp", "dynamic_thresholding", "per_channel_dynamic_thresholding"
    clamp_value=1.0,  # Clamp value for thresholding
    thresholding_percentile=0.995,  # Percentile for thresholding
)
```

#### Guidance Types
- `constant`: Applies uniform guidance throughout the sampling process
- `linear`: Linearly increases guidance strength from start to end
- `exponential`: Exponentially increases guidance strength from start to end
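To make the three guidance types concrete, here is a small sketch of how a guidance scale could vary over the sampling steps. It is an illustration under stated assumptions, not the library's code: the function names are hypothetical, the ramps are assumed to go from 1 (no extra guidance) up to `cfg`, and the combination rule is the standard classifier-free guidance formula.

```python
def guidance_scale(step, num_steps, cfg, guidance_type="constant", start_step=0):
    """Guidance strength at a sampling step (0 = first step, num_steps - 1 = last)."""
    if step < start_step:
        # Assumed: before guidance starts, only the conditional prediction is used.
        return 1.0
    # Fraction of the guided part of the trajectory completed so far, in [0, 1].
    progress = (step - start_step) / max(num_steps - 1 - start_step, 1)
    if guidance_type == "constant":
        return float(cfg)
    if guidance_type == "linear":
        return 1.0 + (cfg - 1.0) * progress
    if guidance_type == "exponential":
        return float(cfg) ** progress
    raise ValueError(f"Unknown guidance_type: {guidance_type!r}")


def apply_guidance(cond_pred, uncond_pred, scale):
    """Standard classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for c, u in zip(cond_pred, uncond_pred)]


scales = [guidance_scale(s, 5, cfg=15, guidance_type="linear") for s in range(5)]
print(scales)  # [1.0, 4.5, 8.0, 11.5, 15.0]
```

With `guidance_type="constant"`, every step returns `cfg` itself, which matches the "uniform guidance" description above.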

#### Thresholding Types
- `clamp`: Clamps values to a fixed range using `clamp_value`
- `dynamic`: Dynamically adjusts thresholds based on the batch statistics
- `percentile`: Uses percentile-based thresholding with `thresholding_percentile`
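The difference between fixed clamping and percentile-based thresholding can be sketched in a few lines of plain Python. This is an illustration on a flat list of values, assuming the percentile variant follows the dynamic thresholding idea introduced with Imagen (clip to the chosen percentile of the absolute values, then rescale); the function names are hypothetical and the per-channel variant is not shown.

```python
def clamp(values, clamp_value=1.0):
    """Clip every value to the fixed range [-clamp_value, clamp_value]."""
    return [min(max(v, -clamp_value), clamp_value) for v in values]


def dynamic_threshold(values, percentile=0.995):
    """Clip to the given percentile of |values|, then rescale into [-1, 1]."""
    magnitudes = sorted(abs(v) for v in values)
    # Nearest-rank percentile, kept inside the valid index range.
    index = min(int(percentile * len(magnitudes)), len(magnitudes) - 1)
    # Never shrink the range below 1, so in-range predictions are left untouched.
    s = max(magnitudes[index], 1.0)
    return [min(max(v, -s), s) / s for v in values]


print(clamp([-2.0, 0.5, 3.0]))                                # [-1.0, 0.5, 1.0]
print(dynamic_threshold([-4.0, 1.0, 2.0, 0.5], percentile=0.5))  # [-1.0, 0.5, 1.0, 0.25]
```

Fixed clamping saturates every out-of-range value, whereas the percentile variant rescales the whole prediction and so preserves relative contrast between values.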

### Advanced Parameters

For more control over the generation process, you can also specify:

- `x_N`: Initial noise tensor
- `latents`: Previous latents for continuation
- `num_steps`: Custom number of sampling steps
- `sampler`: Custom sampler function
- `scheduler`: Custom scheduler function
- `guidance_start_step`: Step to start guidance
- `generator`: Random number generator for reproducibility
- `unconfident_prompt`: Custom unconfident prompt text

## Citation
If you use this repo in your experiments, you can acknowledge us by citing the following paper:

```bibtex
@article{dufour2024dont,
  title={Don’t drop your samples! Coherence-aware training benefits Conditional diffusion},
  author={Nicolas Dufour and Victor Besnier and Vicky Kalogeiton and David Picard},
  journal={CVPR},
  year={2024}
}
```