Upload folder using huggingface_hub

- .gitattributes +2 -0

- assets/controlnet.png +3 -0

- assets/face_normal.png +0 -0

- assets/face_seg.png +0 -0

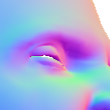

- assets/left_eye_normal.png +0 -0

- assets/left_eye_seg.png +0 -0

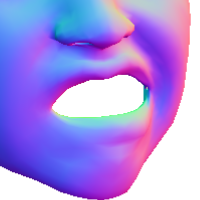

- assets/mouth_normal.png +0 -0

- assets/mouth_seg.png +0 -0

- assets/prompt.png +3 -0

- config.json +52 -0

- diffusion_pytorch_model.safetensors +3 -0

- script/dataset_AnimPortrait3D_controlnet.py +118 -0

- script/face_normal.png +0 -0

- script/face_seg.png +0 -0

- script/run.sh +17 -0

- script/train_normal_seg_controlnet_all_in_one.py +1328 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/controlnet.png filter=lfs diff=lfs merge=lfs -text
+assets/prompt.png filter=lfs diff=lfs merge=lfs -text
```
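The two new lines send `assets/controlnet.png` and `assets/prompt.png` through Git LFS. Their diffs are 3-line LFS pointers, while the other PNGs in this commit show `+0 -0` and get no entry. Below is a rough sketch of how a path is checked against these patterns. It assumes simplified semantics: a pattern without `/` is compared against the file's basename, and a pattern containing `/` is compared against the whole repository-relative path. It uses Python's `fnmatch`, which, unlike Git, lets `*` cross `/`. Only the five patterns visible in the hunk are included, because lines 1-32 of the file are not shown here. `matches_lfs` is a hypothetical helper.

```python
import fnmatch
import posixpath

# The five patterns visible in the hunk above (lines 33-37 of .gitattributes).
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "assets/controlnet.png",
    "assets/prompt.png",
]


def matches_lfs(path, patterns=LFS_PATTERNS):
    """Approximate gitattributes matching (simplified; not Git's exact rules)."""
    for pattern in patterns:
        if "/" in pattern:
            # Patterns containing a slash are matched against the full relative path.
            target = path
        else:
            # Slash-free patterns match the basename at any directory depth.
            target = posixpath.basename(path)
        if fnmatch.fnmatchcase(target, pattern):
            return True
    return False


for p in ["assets/controlnet.png", "assets/face_seg.png", "logs/events.out.tfevents.123", "script/run.sh"]:
    print(p, matches_lfs(p))
```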

assets/controlnet.png

ADDED (Git LFS)

assets/face_normal.png

ADDED

assets/face_seg.png

ADDED

assets/left_eye_normal.png

ADDED

assets/left_eye_seg.png

ADDED

assets/mouth_normal.png

ADDED

assets/mouth_seg.png

ADDED

assets/prompt.png

ADDED (Git LFS)

config.json

ADDED

```json
{
  "_class_name": "ControlNetModel",
  "_diffusers_version": "0.31.0.dev0",
  "_name_or_path": "/media/yiqian/data/datasets/controlnet-training-runs/all/checkpoint-32000/controlnet",
  "act_fn": "silu",
  "addition_embed_type": null,
  "addition_embed_type_num_heads": 64,
  "addition_time_embed_dim": null,
  "attention_head_dim": 8,
  "block_out_channels": [
    320,
    640,
    1280,
    1280
  ],
  "class_embed_type": null,
  "conditioning_channels": 4,
  "conditioning_embedding_out_channels": [
    16,
    32,
    96,
    256
  ],
  "controlnet_conditioning_channel_order": "rgb",
  "cross_attention_dim": 768,
  "down_block_types": [
    "CrossAttnDownBlock2D",
    "CrossAttnDownBlock2D",
    "CrossAttnDownBlock2D",
    "DownBlock2D"
  ],
  "downsample_padding": 1,
  "encoder_hid_dim": null,
  "encoder_hid_dim_type": null,
  "flip_sin_to_cos": true,
  "freq_shift": 0,
  "global_pool_conditions": false,
  "in_channels": 4,
  "layers_per_block": 2,
  "mid_block_scale_factor": 1,
  "mid_block_type": "UNetMidBlock2DCrossAttn",
  "norm_eps": 1e-05,
  "norm_num_groups": 32,
  "num_attention_heads": null,
  "num_class_embeds": null,
  "only_cross_attention": false,
  "projection_class_embeddings_input_dim": null,
  "resnet_time_scale_shift": "default",
  "transformer_layers_per_block": 1,
  "upcast_attention": false,
  "use_linear_projection": false
}
```
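Two fields tie this config to the training data. First, `conditioning_channels` is 4, which matches the dataset script's concatenation of a 3-channel normal map with a 1-channel segmentation mask. Second, `conditioning_embedding_out_channels` sizes the small convolutional encoder that brings the conditioning image down to latent resolution. The sketch below works through that arithmetic. It assumes the diffusers `ControlNetConditioningEmbedding` layout, with one stride-2 convolution between each consecutive pair of entries. It only reads a subset of the config with the standard library and does not build the model.

```python
import json

# A subset of the config.json fields shown above.
CONFIG = json.loads("""
{
  "conditioning_channels": 4,
  "conditioning_embedding_out_channels": [16, 32, 96, 256]
}
""")

NORMAL_CHANNELS = 3  # RGB normal map
SEG_CHANNELS = 1     # single-channel segmentation mask


def conditioning_downsample_factor(cfg):
    """Spatial downsampling of the conditioning encoder: one stride-2 conv per stage transition."""
    n_stages = len(cfg["conditioning_embedding_out_channels"])
    return 2 ** (n_stages - 1)


assert CONFIG["conditioning_channels"] == NORMAL_CHANNELS + SEG_CHANNELS

resolution = 512  # --resolution in script/run.sh
factor = conditioning_downsample_factor(CONFIG)
# 8x, the same factor by which the Stable Diffusion 1.x VAE shrinks a 512x512 image to a 64x64 latent
print(f"{resolution}x{resolution} conditioning -> {resolution // factor}x{resolution // factor} feature map")
```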

diffusion_pytorch_model.safetensors

ADDED

```
version https://git-lfs.github.com/spec/v1
oid sha256:7ddf77299c5ef0f4eb3e1a2e2955553a5b4196821f397b94c48c6683549bfcd4
size 1445157696
```
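The 1,445,157,696-byte weight file is committed as a Git LFS pointer. The pointer has three `key value` lines: the spec version, the SHA-256 of the real content, and its size in bytes. The standard-library sketch below parses such a pointer and checks a downloaded file against it. `parse_lfs_pointer` and `verify_against_pointer` are illustrative helpers, not part of `huggingface_hub` or `git-lfs`.

```python
import hashlib
import os


def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into version, hash algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {"version": fields["version"], "algo": algo, "digest": digest, "size": int(fields["size"])}


def verify_against_pointer(path, pointer, chunk_size=1 << 20):
    """Check a local file's size and hash against a parsed pointer, streaming in chunks."""
    if os.path.getsize(path) != pointer["size"]:
        return False
    h = hashlib.new(pointer["algo"])
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest() == pointer["digest"]


POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:7ddf77299c5ef0f4eb3e1a2e2955553a5b4196821f397b94c48c6683549bfcd4
size 1445157696
"""

info = parse_lfs_pointer(POINTER)
print(info["algo"], info["size"])
```

Checking the size first avoids hashing a 1.4 GB file that is already known to be wrong.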

script/dataset_AnimPortrait3D_controlnet.py

ADDED

```python
import json
import os

import torch
import torchvision.transforms as transforms
import torchvision.transforms.functional as F
from PIL import Image
from torch.utils.data.dataset import Dataset
# from torchvision.io import read_image


def tokenize_captions(caption, tokenizer):
    """Tokenize a single caption into a (1, model_max_length) tensor of input ids."""
    captions = [caption]
    inputs = tokenizer(
        captions, max_length=tokenizer.model_max_length, padding="max_length", truncation=True, return_tensors="pt"
    )
    return inputs.input_ids


class SquarePad:
    """Pad a PIL image with white borders so that it becomes square."""

    def __call__(self, image):
        w, h = image.size
        max_wh = max(w, h)
        hp = int((max_wh - w) / 2)
        vp = int((max_wh - h) / 2)
        padding = (hp, vp, hp, vp)
        return F.pad(image, padding, fill=(255, 255, 255), padding_mode="constant")


class NormalSegDataset(Dataset):
    def __init__(self, args, path, tokenizer, cfg_prob):
        self.image_transforms = transforms.Compose(
            [
                # transforms.Resize(args.resolution, interpolation=transforms.InterpolationMode.BILINEAR),
                # SquarePad(),
                # transforms.Pad((200, 100, 200, 300), fill=(255, 255, 255), padding_mode='constant'),
                # transforms.RandomRotation(degrees=30, fill=(255, 255, 255)),
                transforms.RandomResizedCrop(
                    args.resolution, scale=(0.9, 1.0), interpolation=transforms.InterpolationMode.BILINEAR
                ),
                transforms.ToTensor(),
            ]
        )

        # Applied to the target RGB image only: maps [0, 1] to [-1, 1]. Conditioning maps stay in [0, 1].
        self.additional_image_transforms = transforms.Compose(
            [transforms.Normalize([0.5], [0.5])]
        )

        meta_path = os.path.join(path, "meta_train_seg.json")
        with open(meta_path, "r") as f:
            self.meta = json.load(f)

        self.keys = self.meta["keys"]
        self.meta = self.meta["data"]

        self.tokenizer = tokenizer
        self.cfg_prob = cfg_prob

    def __len__(self):
        return len(self.keys)

    def __getitem__(self, index):
        meta_data = self.meta[self.keys[index]]

        rgb_path = meta_data["rgb"]
        normal_path = meta_data["normal"]
        seg_path = meta_data["seg"]
        text_prompt = meta_data["caption"][0]

        # Drop the caption with probability cfg_prob (for classifier-free guidance).
        rand = torch.rand(1).item()
        if rand < self.cfg_prob:
            text_prompt = ""

        image = Image.open(rgb_path).convert("RGB")
        # Save the RNG state so the normal and seg maps get the same random crop as the RGB image.
        state = torch.get_rng_state()
        image = self.image_transforms(image)

        # Independently drop the conditioning (white normal map, empty mask) with probability cfg_prob.
        rand = torch.rand(1).item()
        if rand < self.cfg_prob:
            # get a white image; PIL sizes are (width, height) and `image` is a (C, H, W) tensor
            normal_image = Image.new("RGB", (image.shape[2], image.shape[1]), (255, 255, 255))
            # gray_image = Image.new('L', (image.shape[2], image.shape[1]), (255))
            seg_image = Image.new("L", (image.shape[2], image.shape[1]), (0))
        else:
            normal_image = Image.open(normal_path).convert("RGB")
            seg_image = Image.open(seg_path).convert("L")

        torch.set_rng_state(state)
        normal_image = self.image_transforms(normal_image)

        torch.set_rng_state(state)
        seg_image = self.image_transforms(seg_image)

        # 3-channel normal map + 1-channel segmentation mask -> 4-channel conditioning input.
        conditioning_image = torch.cat([normal_image, seg_image], dim=0)

        image = self.additional_image_transforms(image)

        prompt = tokenize_captions(text_prompt, self.tokenizer)

        return image, conditioning_image, prompt, text_prompt
```
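`NormalSegDataset.__getitem__` relies on two tricks. It saves the torch RNG state before cropping the RGB image and restores it before cropping the normal and seg maps, so all three receive the same `RandomResizedCrop` parameters even though another random draw happens in between. It also independently drops the caption and the conditioning with probability `cfg_prob`, so the model sees samples without a caption (needed for classifier-free guidance) and samples without a conditioning map. The sketch below reproduces both ideas with Python's `random` module instead of torch. `crop_box` and `sample_views` are hypothetical helpers, and `crop_box` is a simplified stand-in for `RandomResizedCrop.get_params`, not its actual sampling rule.

```python
import random


def crop_box(width, height, rng, scale=(0.9, 1.0)):
    """Simplified crop sampler: a square whose area is a random fraction (in `scale`)
    of the largest square that fits, placed at a random offset."""
    side = int(min(width, height) * rng.uniform(*scale) ** 0.5)
    left = rng.randint(0, width - side)
    top = rng.randint(0, height - side)
    return (left, top, left + side, top + side)


def sample_views(width, height, cfg_prob=0.1, seed=None):
    """Return crop boxes for the RGB, normal and seg views plus the two dropout flags."""
    rng = random.Random(seed)
    drop_text = rng.random() < cfg_prob

    state = rng.getstate()               # analogous to torch.get_rng_state()
    rgb_box = crop_box(width, height, rng)

    drop_cond = rng.random() < cfg_prob  # consumes randomness between the crops

    rng.setstate(state)                  # analogous to torch.set_rng_state(state)
    normal_box = crop_box(width, height, rng)
    rng.setstate(state)
    seg_box = crop_box(width, height, rng)
    return rgb_box, normal_box, seg_box, drop_text, drop_cond


print(sample_views(640, 480, seed=0))
```

Without the restore step, the `drop_cond` draw would shift the random stream and the normal and seg crops would no longer line up with the RGB crop.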

script/face_normal.png

ADDED

script/face_seg.png

ADDED

script/run.sh

ADDED

```bash
#!/bin/bash

# Run from the script/ directory: the training script imports dataset_AnimPortrait3D_controlnet,
# and the validation images below are given as paths relative to this directory.
accelerate launch train_normal_seg_controlnet_all_in_one.py \
  --pretrained_model_name_or_path="SG161222/Realistic_Vision_V5.1_noVAE" \
  --output_dir="./controlnet-training-runs" \
  --train_data_dir=/path/to/dataset \
  --cfg_prob=0.1 \
  --resolution=512 \
  --learning_rate=1e-5 \
  --num_validation_images=3 \
  --validation_image "./face_normal.png" "./face_seg.png" \
  --validation_prompt "a Teen boy, pensive look, dark hair. Preppy sweater, collared shirt, moody room, 80s memorabilia" \
  --train_batch_size=4 \
  --num_train_epochs=40 \
  --validation_steps=500 \
  --checkpointing_steps=2000
```
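In `run.sh`, `--validation_image` takes a flat list that `log_validation` reads two entries at a time (normal map, then segmentation mask), and the script asserts one `--validation_prompt` per pair. The argument definitions are not among the lines shown here. The parser below is therefore an assumption modeled on the diffusers ControlNet training example, with `nargs="+"` for both flags. `pair_validation_inputs` is a hypothetical helper that mirrors the pairing logic and adds an explicit even-length check.

```python
import argparse


def build_parser():
    # Assumed flag definitions, limited to what the pairing check needs.
    parser = argparse.ArgumentParser()
    parser.add_argument("--validation_image", type=str, nargs="+", default=None)
    parser.add_argument("--validation_prompt", type=str, nargs="+", default=None)
    parser.add_argument("--resolution", type=int, default=512)
    return parser


def pair_validation_inputs(args):
    """Group the flat image list into (normal, seg, prompt) triples, as log_validation does."""
    images = args.validation_image
    if len(images) % 2 != 0:
        raise ValueError("--validation_image needs an even number of paths (normal, seg pairs)")
    n_pairs = len(images) // 2
    if len(args.validation_prompt) != n_pairs:
        raise ValueError(f"expected {n_pairs} prompts, got {len(args.validation_prompt)}")
    return [(images[2 * i], images[2 * i + 1], args.validation_prompt[i]) for i in range(n_pairs)]


argv = [
    "--resolution=512",
    "--validation_image", "./face_normal.png", "./face_seg.png",
    "--validation_prompt",
    "a Teen boy, pensive look, dark hair. Preppy sweater, collared shirt, moody room, 80s memorabilia",
]
args = build_parser().parse_args(argv)
triples = pair_validation_inputs(args)
for normal, seg, prompt in triples:
    print(normal, seg, prompt)
```

To validate on more identities, append further `normal seg` path pairs and one extra prompt per pair.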

script/train_normal_seg_controlnet_all_in_one.py

ADDED

|

@@ -0,0 +1,1328 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

# coding=utf-8

|

| 3 |

+

# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

|

| 4 |

+

#

|

| 5 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 6 |

+

# you may not use this file except in compliance with the License.

|

| 7 |

+

# You may obtain a copy of the License at

|

| 8 |

+

#

|

| 9 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 10 |

+

#

|

| 11 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 12 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 13 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 14 |

+

# See the License for the specific language governing permissions and

|

| 15 |

+

import cv2

|

| 16 |

+

import time

|

| 17 |

+

import argparse

|

| 18 |

+

import contextlib

|

| 19 |

+

import gc

|

| 20 |

+

import logging

|

| 21 |

+

import math

|

| 22 |

+

import os

|

| 23 |

+

import random

|

| 24 |

+

import shutil

|

| 25 |

+

from pathlib import Path

|

| 26 |

+

|

| 27 |

+

import accelerate

|

| 28 |

+

import numpy as np

|

| 29 |

+

import torch

|

| 30 |

+

import torch.nn.functional as F

|

| 31 |

+

import torch.utils.checkpoint

|

| 32 |

+

import transformers

|

| 33 |

+

from accelerate import Accelerator

|

| 34 |

+

from accelerate.logging import get_logger

|

| 35 |

+

from accelerate.utils import ProjectConfiguration, set_seed

|

| 36 |

+

from dataset_AnimPortrait3D_controlnet import NormalSegDataset

|

| 37 |

+

from huggingface_hub import create_repo, upload_folder

|

| 38 |

+

from packaging import version

|

| 39 |

+

from PIL import Image

|

| 40 |

+

from torchvision import transforms

|

| 41 |

+

from tqdm.auto import tqdm

|

| 42 |

+

from transformers import AutoTokenizer, PretrainedConfig

|

| 43 |

+

|

| 44 |

+

import diffusers

|

| 45 |

+

from diffusers import (

|

| 46 |

+

AutoencoderKL,

|

| 47 |

+

ControlNetModel,

|

| 48 |

+

DDPMScheduler,

|

| 49 |

+

StableDiffusionControlNetPipeline,

|

| 50 |

+

UNet2DConditionModel,

|

| 51 |

+

UniPCMultistepScheduler,

|

| 52 |

+

)

|

| 53 |

+

from diffusers.optimization import get_scheduler

|

| 54 |

+

from diffusers.utils import check_min_version, is_wandb_available

|

| 55 |

+

from diffusers.utils.hub_utils import load_or_create_model_card, populate_model_card

|

| 56 |

+

from diffusers.utils.import_utils import is_xformers_available

|

| 57 |

+

from diffusers.utils.torch_utils import is_compiled_module

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

if is_wandb_available():

|

| 61 |

+

import wandb

|

| 62 |

+

|

| 63 |

+

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.

|

| 64 |

+

check_min_version("0.31.0.dev0")

|

| 65 |

+

|

| 66 |

+

logger = get_logger(__name__)

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

def image_grid(imgs, rows, cols):

|

| 70 |

+

assert len(imgs) == rows * cols

|

| 71 |

+

|

| 72 |

+

w, h = imgs[0].size

|

| 73 |

+

grid = Image.new("RGB", size=(cols * w, rows * h))

|

| 74 |

+

|

| 75 |

+

for i, img in enumerate(imgs):

|

| 76 |

+

grid.paste(img, box=(i % cols * w, i // cols * h))

|

| 77 |

+

return grid

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

def log_validation(

|

| 82 |

+

vae, text_encoder, tokenizer, unet, controlnet, args, accelerator, weight_dtype, step, is_final_validation=False,train_batch = None

|

| 83 |

+

):

|

| 84 |

+

logger.info("Running validation... ")

|

| 85 |

+

|

| 86 |

+

if not is_final_validation:

|

| 87 |

+

controlnet = accelerator.unwrap_model(controlnet)

|

| 88 |

+

else:

|

| 89 |

+

controlnet = ControlNetModel.from_pretrained(args.output_dir, torch_dtype=weight_dtype)

|

| 90 |

+

|

| 91 |

+

pipeline = StableDiffusionControlNetPipeline.from_pretrained(

|

| 92 |

+

args.pretrained_model_name_or_path,

|

| 93 |

+

vae=vae,

|

| 94 |

+

text_encoder=text_encoder,

|

| 95 |

+

tokenizer=tokenizer,

|

| 96 |

+

unet=unet,

|

| 97 |

+

controlnet=controlnet,

|

| 98 |

+

safety_checker=None,

|

| 99 |

+

revision=args.revision,

|

| 100 |

+

variant=args.variant,

|

| 101 |

+

torch_dtype=weight_dtype,

|

| 102 |

+

)

|

| 103 |

+

pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config)

|

| 104 |

+

pipeline = pipeline.to(accelerator.device)

|

| 105 |

+

pipeline.set_progress_bar_config(disable=True)

|

| 106 |

+

|

| 107 |

+

if args.enable_xformers_memory_efficient_attention:

|

| 108 |

+

pipeline.enable_xformers_memory_efficient_attention()

|

| 109 |

+

|

| 110 |

+

if args.seed is None:

|

| 111 |

+

generator = None

|

| 112 |

+

else:

|

| 113 |

+

generator = torch.Generator(device=accelerator.device).manual_seed(args.seed)

|

| 114 |

+

|

| 115 |

+

validation_images = args.validation_image.copy()

|

| 116 |

+

validation_nums = len(validation_images)//2

|

| 117 |

+

validation_prompt = args.validation_prompt.copy()

|

| 118 |

+

|

| 119 |

+

inference_ctx = contextlib.nullcontext() if is_final_validation else torch.autocast("cuda")

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

assert len(validation_prompt) == validation_nums

|

| 123 |

+

validation_prompts = validation_prompt

|

| 124 |

+

|

| 125 |

+

gt_images = [None] * validation_nums

|

| 126 |

+

|

| 127 |

+

logger.info(f'[info] validation_nums {validation_nums}')

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

if len(validation_images)<12:

|

| 131 |

+

conditioning_train = train_batch["conditioning_pixel_values"] # b, c, h, w

|

| 132 |

+

|

| 133 |

+

gt_train = train_batch["pixel_values"] # b, c, h, w

|

| 134 |

+

|

| 135 |

+

# text_prompts = []

|

| 136 |

+

for i in range(4):

|

| 137 |

+

validation_prompts.append(train_batch["text_prompts"][i])

|

| 138 |

+

logger.info(f'[info] append prompt { train_batch["text_prompts"][i]}')

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

# validation_prompts.append(text_prompts[i])

|

| 142 |

+

|

| 143 |

+

conditioning_image = conditioning_train[i] # c, h, w

|

| 144 |

+

conditioning_image = conditioning_image.permute(1,2,0).cpu().numpy()

|

| 145 |

+

normal_image = conditioning_image[:,:,:3] * 255

|

| 146 |

+

seg_image = conditioning_image[:,:,3:].repeat(3, axis=2) * 255

|

| 147 |

+

gt_image = gt_train[i]/2+0.5 # c, h, w

|

| 148 |

+

gt_image = gt_image.permute(1,2,0).cpu().numpy() * 255

|

| 149 |

+

|

| 150 |

+

validation_images.append(Image.fromarray(normal_image.astype(np.uint8)))

|

| 151 |

+

validation_images.append(Image.fromarray(seg_image.astype(np.uint8)))

|

| 152 |

+

|

| 153 |

+

gt_images.append(gt_image.astype(np.uint8))

|

| 154 |

+

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

```python
    logger.info(f'[info] new len(validation_images) {len(validation_images)}')
    save_dir_path = os.path.join(args.output_dir, "eval_img")
    os.makedirs(save_dir_path, exist_ok=True)

    # validation_images is a flat list of (normal, seg) pairs: index 2*i holds the
    # normal map and index 2*i + 1 the segmentation map of the i-th sample.
    for i in range(len(validation_images) // 2):
        if isinstance(validation_images[i * 2], str):
            normal_image = Image.open(validation_images[i * 2]).resize((args.resolution, args.resolution))
        else:
            normal_image = validation_images[i * 2]

        if isinstance(validation_images[i * 2 + 1], str):
            seg_image = Image.open(validation_images[i * 2 + 1]).resize((args.resolution, args.resolution))
        else:
            seg_image = validation_images[i * 2 + 1]

        # Keep a single channel of the segmentation map.
        seg_image = np.array(seg_image)[:, :, :1]

        gt_image = gt_images[i]

        # Stack normal (3 channels) + seg (1 channel) into a (1, H, W, 4) control array.
        # PIL images hold values in 0-255; the array passed to the pipeline is scaled to 0-1.
        validation_image = np.concatenate([np.array(normal_image), seg_image], axis=2)[None, ...] / 255.0

        validation_prompt = validation_prompts[i]
        print('validation_prompt: ', validation_prompt)
        images = []
        for _ in range(args.num_validation_images):
            with inference_ctx:
                image = pipeline(
                    validation_prompt, validation_image, num_inference_steps=20, generator=generator, guidance_scale=7.5
                ).images[0]

            images.append(image)

        # Undo the 0-1 scaling to rebuild viewable normal and seg panels.
        validation_image = validation_image[0] * 255.0

        normal = np.array(validation_image)[:, :, :3]
        seg = np.array(validation_image)[:, :, 3:]
        seg = np.concatenate([seg, seg, seg], axis=2)

        # Row layout: [ground truth |] normal | seg | generated samples
        if gt_image is not None:
            # PIL .size is (width, height), the same order cv2.resize expects for dsize.
            gt_image = cv2.resize(gt_image, images[0].size)
            formatted_images = [gt_image, normal, seg]
        else:
            formatted_images = [normal, seg]
        for image in images:
            formatted_images.append(np.asarray(image))

        formatted_images = np.concatenate(formatted_images, 1).astype(np.uint8)

        file_path = os.path.join(save_dir_path, "{}_{}_{}.png".format(step, time.time(), validation_prompt.replace(" ", "-")))
        # The pipeline outputs are RGB while cv2.imwrite expects BGR, so swap the R and B channels.
        formatted_images = cv2.cvtColor(formatted_images, cv2.COLOR_RGB2BGR)
        print("Save images to:", file_path)
        cv2.imwrite(file_path, formatted_images)

    del pipeline
    gc.collect()
    torch.cuda.empty_cache()
```

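The validation loop packs a 3-channel normal map and one channel of the segmentation map into a single 4-channel control array scaled to [0, 1], then scales it back to 0-255 to build a side-by-side preview row. A minimal NumPy sketch of those two steps, assuming uint8 inputs of shape (H, W, 3) and generated samples of the same size; `build_control_image` and `make_preview_strip` are hypothetical names, not functions from the script.

```python
import numpy as np


def build_control_image(normal_rgb: np.ndarray, seg: np.ndarray) -> np.ndarray:
    """Stack an RGB normal map with the first channel of a segmentation map.

    Returns a (1, H, W, 4) float32 array scaled to [0, 1].
    """
    if normal_rgb.shape[:2] != seg.shape[:2]:
        raise ValueError("normal and segmentation maps must have the same height and width")
    stacked = np.concatenate([normal_rgb[:, :, :3], seg[:, :, :1]], axis=2)
    return stacked[None, ...].astype(np.float32) / 255.0


def make_preview_strip(control: np.ndarray, samples: list) -> np.ndarray:
    """Lay out normal | seg | samples side by side as one uint8 RGB image."""
    control = control[0] * 255.0                   # back to the 0-255 range
    normal = control[:, :, :3]
    seg = np.repeat(control[:, :, 3:], 3, axis=2)  # grey seg shown as 3 channels
    panels = [normal, seg] + [np.asarray(s) for s in samples]
    # Round before casting: astype(np.uint8) truncates, so 199.99999 would become 199.
    return np.rint(np.concatenate(panels, axis=1)).astype(np.uint8)
```

The rounding step is a design choice of this sketch; the script casts the concatenated row with a plain `astype(np.uint8)`, which truncates toward zero.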
```python
# def log_validation(
#     vae, text_encoder, tokenizer, unet, controlnet, args, accelerator, weight_dtype, step, is_final_validation=False
# ):
#     logger.info("Running validation... ")

#     if not is_final_validation:
#         controlnet = accelerator.unwrap_model(controlnet)
#     else:
#         controlnet = ControlNetModel.from_pretrained(args.output_dir, torch_dtype=weight_dtype)

#     pipeline = StableDiffusionControlNetPipeline.from_pretrained(
#         args.pretrained_model_name_or_path,
#         vae=vae,
#         text_encoder=text_encoder,
#         tokenizer=tokenizer,
#         unet=unet,
#         controlnet=controlnet,
#         safety_checker=None,
#         revision=args.revision,
#         variant=args.variant,
#         torch_dtype=weight_dtype,
#     )
#     pipeline.scheduler = UniPCMultistepScheduler.from_config(pipeline.scheduler.config)
#     pipeline = pipeline.to(accelerator.device)
#     pipeline.set_progress_bar_config(disable=True)

#     if args.enable_xformers_memory_efficient_attention:
#         pipeline.enable_xformers_memory_efficient_attention()

#     if args.seed is None:
#         generator = None
#     else:
#         generator = torch.Generator(device=accelerator.device).manual_seed(args.seed)

#     if len(args.validation_image) == len(args.validation_prompt):
#         validation_images = args.validation_image
#         validation_prompts = args.validation_prompt
#     elif len(args.validation_image) == 1:
#         validation_images = args.validation_image * len(args.validation_prompt)
#         validation_prompts = args.validation_prompt
#     elif len(args.validation_prompt) == 1:
#         validation_images = args.validation_image
#         validation_prompts = args.validation_prompt * len(args.validation_image)
#     else:
#         raise ValueError(
#             "number of `args.validation_image` and `args.validation_prompt` should be checked in `parse_args`"
#         )

#     image_logs = []
#     inference_ctx = contextlib.nullcontext() if is_final_validation else torch.autocast("cuda")

#     for validation_prompt, validation_image in zip(validation_prompts, validation_images):
#         validation_image = Image.open(validation_image).convert("RGB")

#         images = []

#         for _ in range(args.num_validation_images):
#             with inference_ctx:
#                 image = pipeline(
#                     validation_prompt, validation_image, num_inference_steps=20, generator=generator
#                 ).images[0]

#             images.append(image)

#         image_logs.append(
#             {"validation_image": validation_image, "images": images, "validation_prompt": validation_prompt}
#         )

#     tracker_key = "test" if is_final_validation else "validation"
#     save_dir_path = os.path.join(args.output_dir, "eval_img")
#     if not os.path.exists(save_dir_path):
#         os.makedirs(save_dir_path)
#     for tracker in accelerator.trackers:
#         for log in image_logs:
#             images = log["images"]
#             validation_prompt = log["validation_prompt"]
#             validation_image = log["validation_image"]

#             formatted_images = []
#             formatted_images.append(np.asarray(validation_image))
#             for image in images:
#                 formatted_images.append(np.asarray(image))
#             formatted_images = np.concatenate(formatted_images, 1)

#             file_path = os.path.join(save_dir_path, "{}_{}_{}.png".format(step, time.time(), validation_prompt.replace(" ", "-")))
#             formatted_images = cv2.cvtColor(formatted_images, cv2.COLOR_BGR2RGB)
#             print("Save images to:", file_path)
#             cv2.imwrite(file_path, formatted_images)

#     del pipeline
#     gc.collect()
#     torch.cuda.empty_cache()

#     return image_logs
```

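The commented-out `log_validation` broadcasts `args.validation_image` against `args.validation_prompt`: equal-length lists pair one-to-one, and a single image or a single prompt is repeated to match the other list. The active loop instead reads a flat list in which index `2*i` is a normal map and `2*i + 1` the matching segmentation map. A standard-library sketch of both conventions follows; the function names are hypothetical, and the pairs are returned as (image, prompt) rather than the (prompt, image) order of the original `zip`.

```python
from typing import List, Sequence, Tuple


def broadcast_validation_inputs(images: Sequence[str], prompts: Sequence[str]) -> List[Tuple[str, str]]:
    """Pair validation images with prompts using the commented-out rule:
    equal lengths pair one-to-one; a single image or a single prompt is
    repeated to match the other list; anything else is an error."""
    if len(images) == len(prompts):
        return list(zip(images, prompts))
    if len(images) == 1:
        return [(images[0], prompt) for prompt in prompts]
    if len(prompts) == 1:
        return [(image, prompts[0]) for image in images]
    raise ValueError("number of validation images and prompts cannot be broadcast together")


def group_normal_seg_pairs(flat: Sequence[str]) -> List[Tuple[str, str]]:
    """Split a flat [normal_0, seg_0, normal_1, seg_1, ...] list into
    (normal, seg) pairs, following the 2*i / 2*i + 1 indexing."""
    if len(flat) % 2:
        raise ValueError("expected an even number of entries (normal, seg pairs)")
    return list(zip(flat[0::2], flat[1::2]))
```

Raising on an odd-length list is a choice of this sketch; the script's `range(len(validation_images) // 2)` silently skips a trailing unpaired entry.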
```python
def import_model_class_from_model_name_or_path(pretrained_model_name_or_path: str, revision: str):
    text_encoder_config = PretrainedConfig.from_pretrained(
        pretrained_model_name_or_path,
        subfolder="text_encoder",
        revision=revision,
    )
    model_class = text_encoder_config.architectures[0]

    if model_class == "CLIPTextModel":
        from transformers import CLIPTextModel

        return CLIPTextModel
    elif model_class == "RobertaSeriesModelWithTransformation":
        from diffusers.pipelines.alt_diffusion.modeling_roberta_series import RobertaSeriesModelWithTransformation

        return RobertaSeriesModelWithTransformation
    else:
        raise ValueError(f"{model_class} is not supported.")
```

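`import_model_class_from_model_name_or_path` chooses the text-encoder class from the first entry of `architectures` in the text encoder's config. For a checkpoint already on local disk, the same lookup can be sketched with only the standard library. This assumes a diffusers-style folder containing `text_encoder/config.json`; unlike `PretrainedConfig.from_pretrained` it neither downloads from the Hub nor honours `revision`, and `SUPPORTED_TEXT_ENCODERS` and `read_text_encoder_architecture` are hypothetical names.

```python
import json
from pathlib import Path

# Hypothetical allow-list mirroring the two class names the function above accepts.
SUPPORTED_TEXT_ENCODERS = {"CLIPTextModel", "RobertaSeriesModelWithTransformation"}


def read_text_encoder_architecture(model_dir: str) -> str:
    """Return architectures[0] from <model_dir>/text_encoder/config.json,
    raising ValueError if it is missing or not in the allow-list."""
    config_path = Path(model_dir) / "text_encoder" / "config.json"
    with config_path.open(encoding="utf-8") as f:
        config = json.load(f)
    architectures = config.get("architectures") or []
    if not architectures:
        raise ValueError(f"{config_path} has no 'architectures' entry")
    model_class = architectures[0]
    if model_class not in SUPPORTED_TEXT_ENCODERS:
        raise ValueError(f"{model_class} is not supported.")
    return model_class
```

Returning the class name rather than importing the class keeps the sketch free of `transformers`/`diffusers` imports; the caller would map the name to a class as the original function does.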
```python
def save_model_card(repo_id: str, image_logs=None, base_model: str = None, repo_folder=None):
    img_str = ""
    if image_logs is not None:
        img_str = "You can find some example images below.\n\n"
        for i, log in enumerate(image_logs):
            images = log["images"]
            validation_prompt = log["validation_prompt"]
            validation_image = log["validation_image"]
            validation_image.save(os.path.join(repo_folder, "image_control.png"))
            img_str += f"prompt: {validation_prompt}\n"
            images = [validation_image] + images
            image_grid(images, 1, len(images)).save(os.path.join(repo_folder, f"images_{i}.png"))
            img_str += f"\n"

    model_description = f"""
# controlnet-{repo_id}

These are controlnet weights trained on {base_model} with a new type of conditioning.
{img_str}
"""
    model_card = load_or_create_model_card(
        repo_id_or_path=repo_id,
        from_training=True,
        license="creativeml-openrail-m",
        base_model=base_model,
        model_description=model_description,
        inference=True,
    )

    tags = [
        "stable-diffusion",
        "stable-diffusion-diffusers",
        "text-to-image",
```