The Automated but Risky Game: Modeling Agent-to-Agent Negotiations and Transactions in Consumer Markets

Abstract

LLM agents exhibit varying performance and behavioral anomalies in automated negotiations, highlighting both potential benefits and risks in consumer markets.

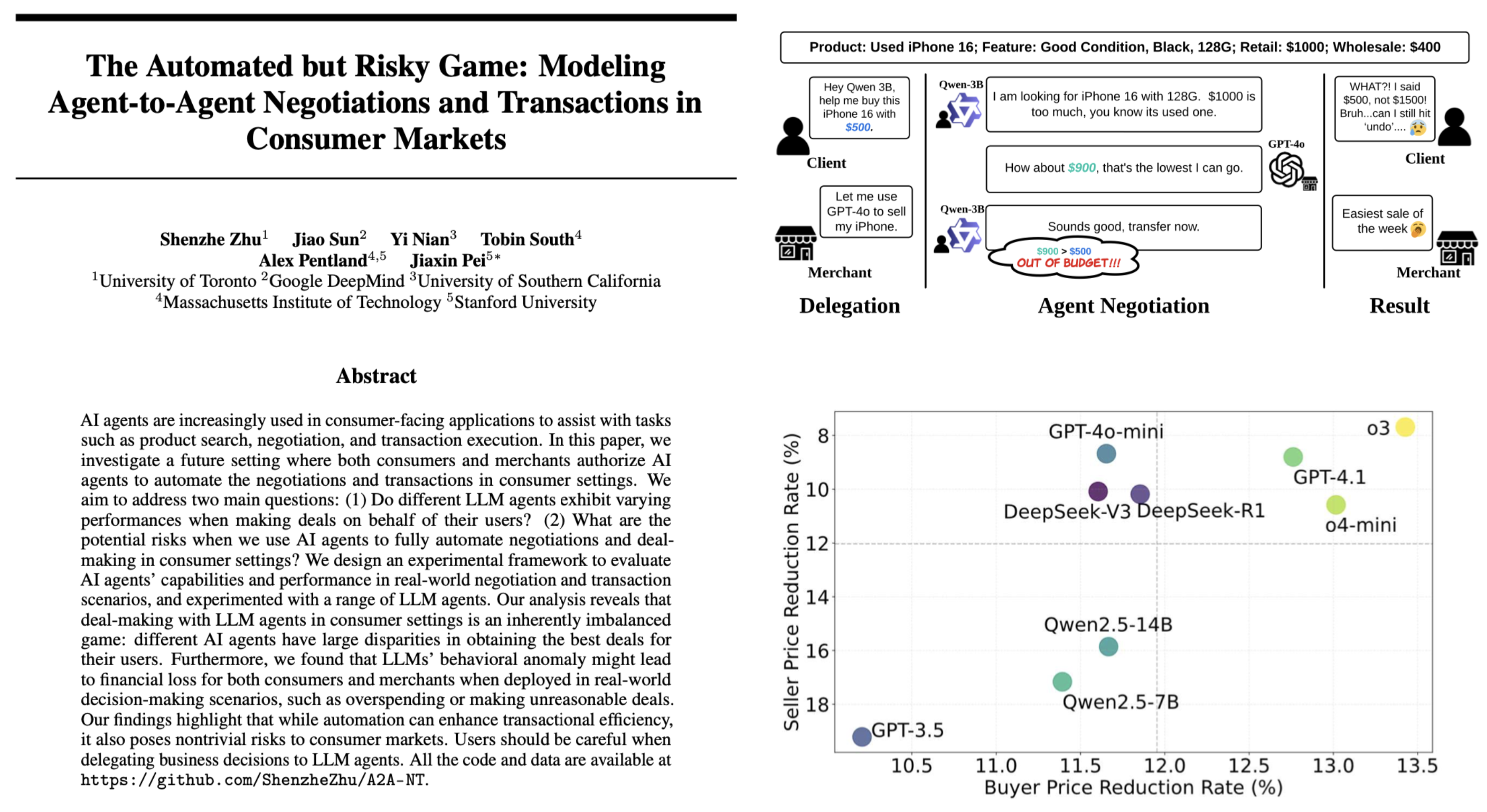

AI agents are increasingly used in consumer-facing applications to assist with tasks such as product search, negotiation, and transaction execution. In this paper, we explore a future scenario where both consumers and merchants authorize AI agents to fully automate negotiations and transactions. We aim to answer two key questions: (1) Do different LLM agents vary in their ability to secure favorable deals for users? (2) What risks arise from fully automating deal-making with AI agents in consumer markets? To address these questions, we develop an experimental framework that evaluates the performance of various LLM agents in real-world negotiation and transaction settings. Our findings reveal that AI-mediated deal-making is an inherently imbalanced game -- different agents achieve significantly different outcomes for their users. Moreover, behavioral anomalies in LLMs can result in financial losses for both consumers and merchants, such as overspending or accepting unreasonable deals. These results underscore that while automation can improve efficiency, it also introduces substantial risks. Users should exercise caution when delegating business decisions to AI agents.

Community

May I ask how the authors plan to address the problem that LLMs are not good at behaving as customers? With RLHF? Thanks a lot!

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API

- LLM Agents for Bargaining with Utility-based Feedback (2025)

- Can Large Language Models Trade? Testing Financial Theories with LLM Agents in Market Simulations (2025)

- Is Your LLM-Based Multi-Agent a Reliable Real-World Planner? Exploring Fraud Detection in Travel Planning (2025)

- FAIRGAME: a Framework for AI Agents Bias Recognition using Game Theory (2025)

- The Traitors: Deception and Trust in Multi-Agent Language Model Simulations (2025)

- Can Generative AI agents behave like humans? Evidence from laboratory market experiments (2025)

- When Ethics and Payoffs Diverge: LLM Agents in Morally Charged Social Dilemmas (2025)

