HeurAgenix: Leveraging LLMs for Solving Complex Combinatorial Optimization Challenges

Abstract

HeurAgenix, a two-stage hyper-heuristic framework using large language models, evolves and selects heuristics dynamically for combinatorial optimization problems, achieving performance on par with specialized solvers.

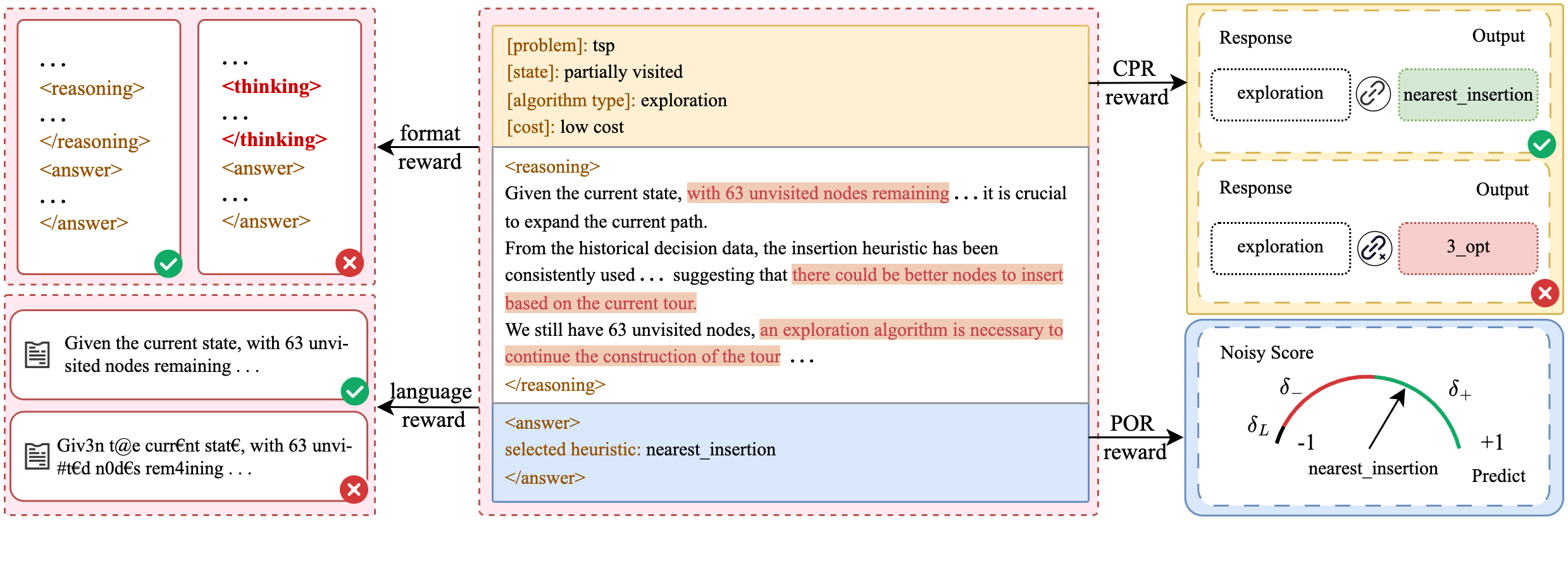

Heuristic algorithms play a vital role in solving combinatorial optimization (CO) problems, yet traditional designs depend heavily on manual expertise and struggle to generalize across diverse instances. We introduce HeurAgenix, a two-stage hyper-heuristic framework powered by large language models (LLMs) that first evolves heuristics and then selects among them automatically. In the heuristic evolution phase, HeurAgenix leverages an LLM to compare seed heuristic solutions with higher-quality solutions and extract reusable evolution strategies. During problem solving, it dynamically picks the most promising heuristic for each problem state, guided by the LLM's perception ability. For flexibility, this selector can be either a state-of-the-art LLM or a fine-tuned lightweight model with lower inference cost. To mitigate the scarcity of reliable supervision caused by CO complexity, we fine-tune the lightweight heuristic selector with a dual-reward mechanism that jointly exploits singals from selection preferences and state perception, enabling robust selection under noisy annotations. Extensive experiments on canonical benchmarks show that HeurAgenix not only outperforms existing LLM-based hyper-heuristics but also matches or exceeds specialized solvers. Code is available at https://github.com/microsoft/HeurAgenix.

Community

This paper presents HeurAgenix, an end-to-end, LLM-driven hyper-heuristic framework that first evolves a diverse pool of heuristics via a contrastive, data-driven evolution phase and then adaptively selects the most promising heuristic for each problem state by combining LLM-based filtering with test-time scaling. To enable real-time deployment at low cost, the LLM selector is distilled into a fine-tuned lightweight model trained with a dual-reward mechanism (preference-based outcome rewards + context-perception rewards). On five canonical combinatorial optimization benchmarks (TSP, CVRP, MKP, JSSP and MaxCut), HeurAgenix consistently outperforms existing LLM-based hyper-heuristics and matches or exceeds specialized solvers.

Models citing this paper 0

No model linking this paper

Datasets citing this paper 0

No dataset linking this paper

Spaces citing this paper 0

No Space linking this paper

Collections including this paper 0

No Collection including this paper