Submitted by

Yukang

Submitted by

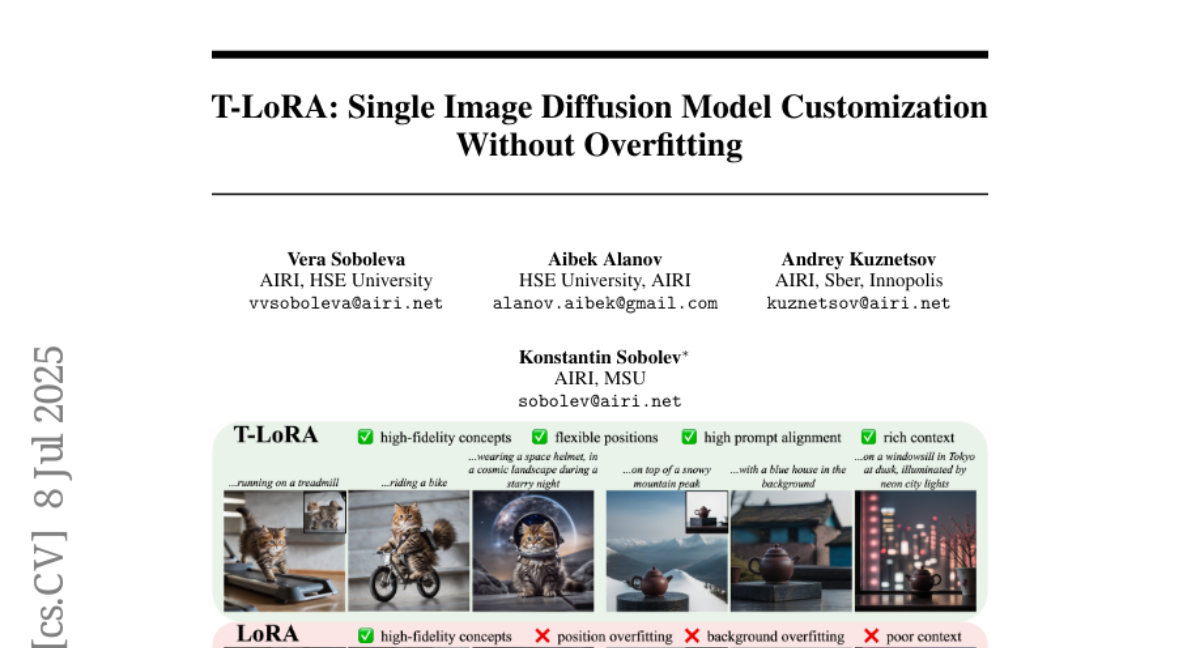

ai-alanov

Submitted by

HaochenWang

Submitted by

ChaimZhu

Submitted by

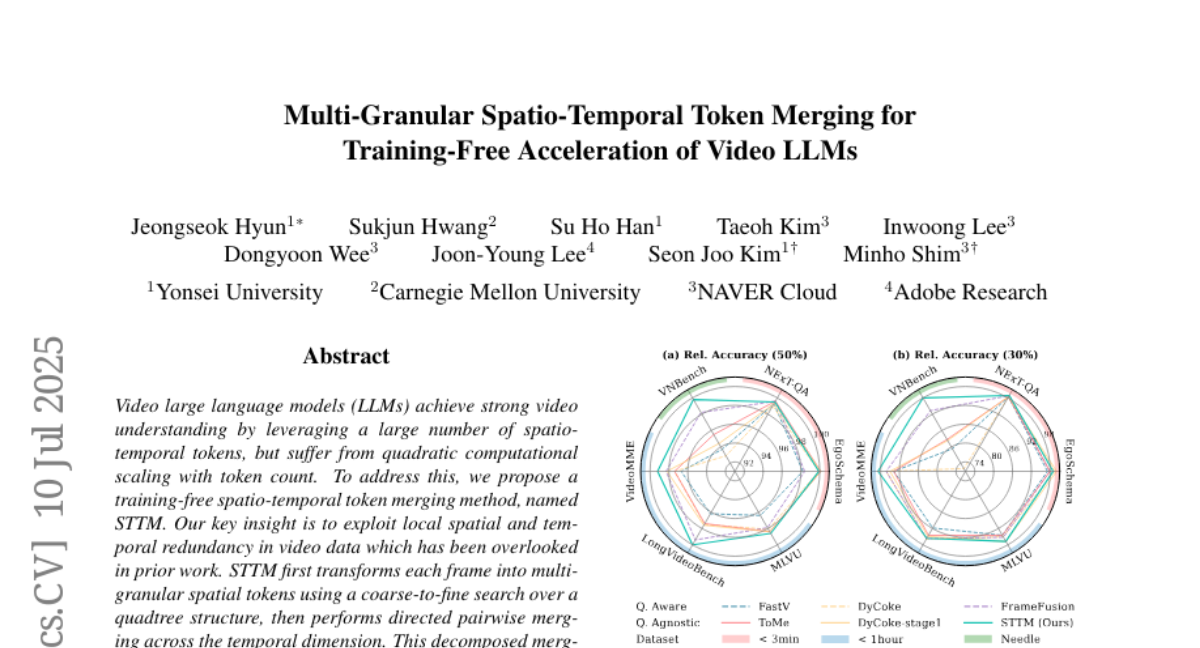

js-hyun

Submitted by

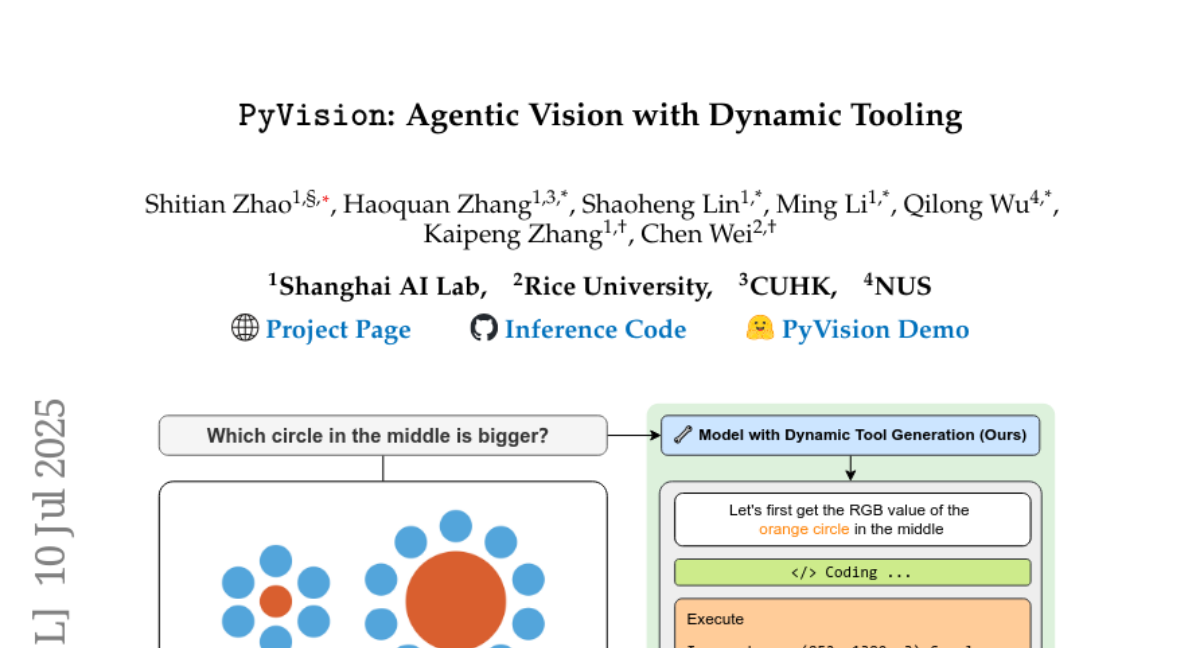

stzhao

Submitted by

Diankun

Submitted by

EthanTaylor

Submitted by

Franck-Dernoncourt

Submitted by

zhoutianyi

Submitted by

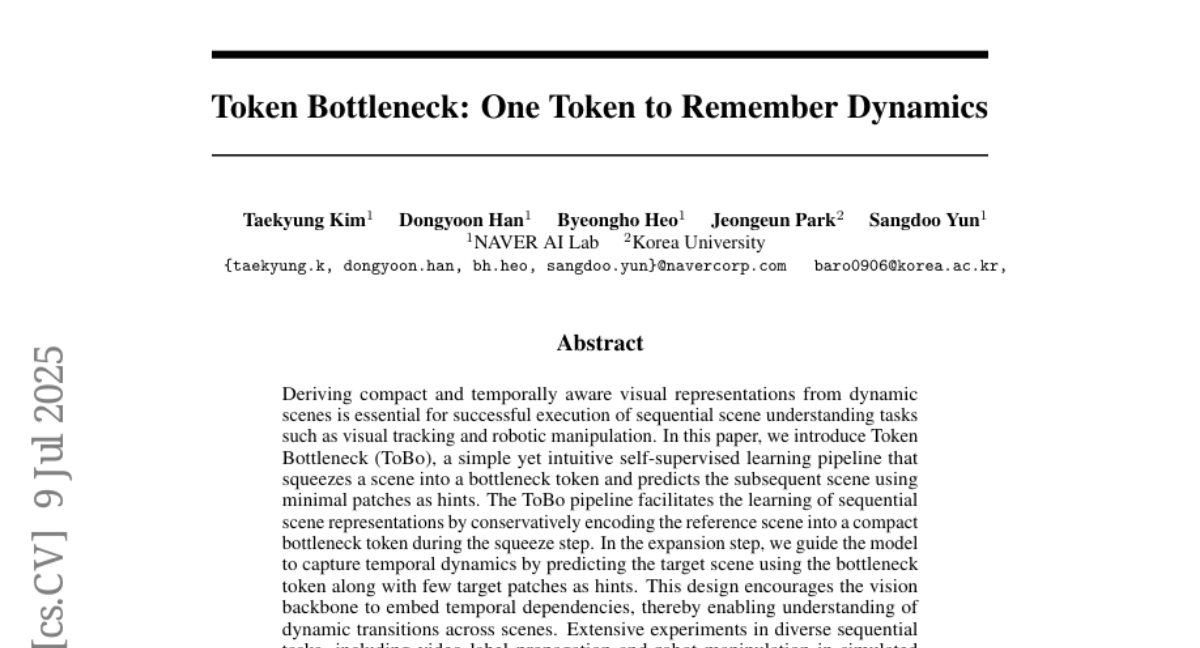

bhheo

Submitted by

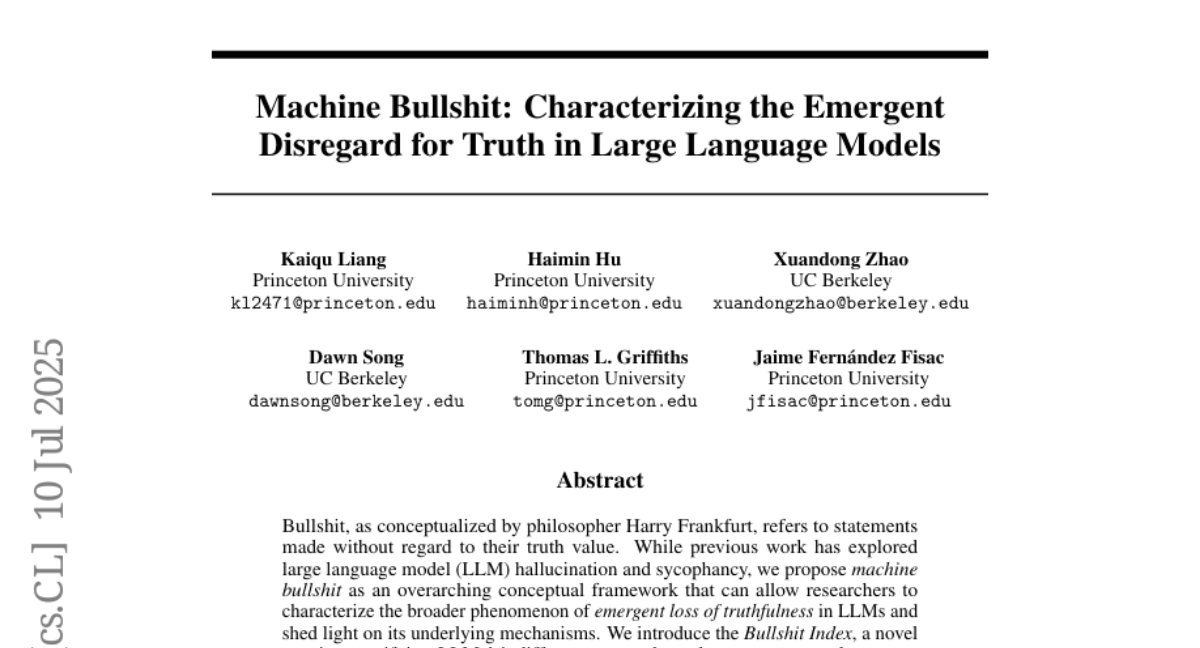

Xuandong

Submitted by

SSamDav

Submitted by

envomp

Submitted by

dbralios

Submitted by

Bochkov

Submitted by

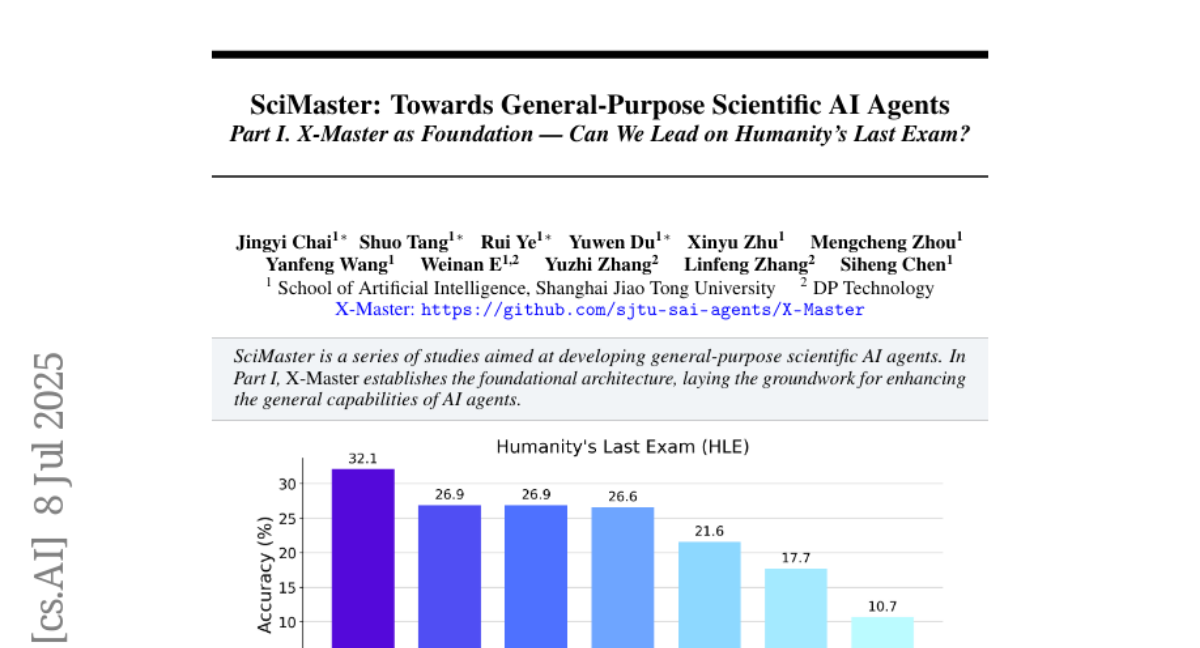

xianbao

Submitted by

Bochkov
