Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +11 -0

- added_tokens.json +6 -0

- config.json +102 -0

- generation_config.json +8 -0

- khmerhomophonecorrector/.DS_Store +0 -0

- khmerhomophonecorrector/.gitattributes +46 -0

- khmerhomophonecorrector/FYP_ Model Tracking - Sheet1.csv +107 -0

- khmerhomophonecorrector/README.md +3 -0

- khmerhomophonecorrector/app.py +216 -0

- khmerhomophonecorrector/batch_size_impact.png +3 -0

- khmerhomophonecorrector/data/.DS_Store +0 -0

- khmerhomophonecorrector/data/test.json +0 -0

- khmerhomophonecorrector/data/train.json +3 -0

- khmerhomophonecorrector/data/val.json +0 -0

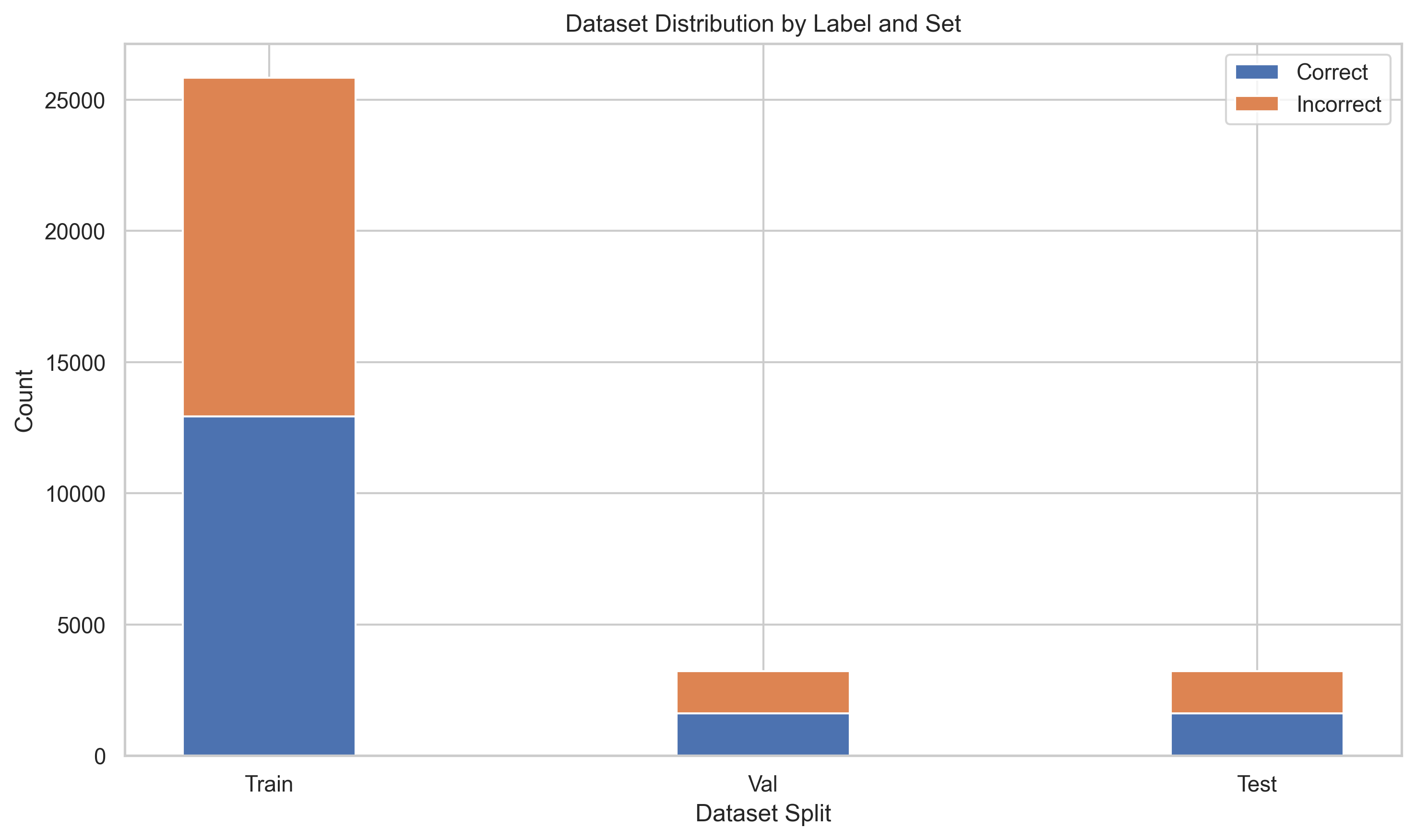

- khmerhomophonecorrector/dataset_distribution.png +0 -0

- khmerhomophonecorrector/header.png +3 -0

- khmerhomophonecorrector/homophone_pairs.json +3 -0

- khmerhomophonecorrector/homophone_test.json +272 -0

- khmerhomophonecorrector/infer_from_json.py +270 -0

- khmerhomophonecorrector/khmerhomophonecorrector/added_tokens.json +6 -0

- khmerhomophonecorrector/khmerhomophonecorrector/config.json +102 -0

- khmerhomophonecorrector/khmerhomophonecorrector/generation_config.json +8 -0

- khmerhomophonecorrector/khmerhomophonecorrector/model.safetensors +3 -0

- khmerhomophonecorrector/khmerhomophonecorrector/special_tokens_map.json +21 -0

- khmerhomophonecorrector/khmerhomophonecorrector/spiece.model +3 -0

- khmerhomophonecorrector/khmerhomophonecorrector/tokenizer_config.json +99 -0

- khmerhomophonecorrector/khmerhomophonecorrector/training_args.bin +3 -0

- khmerhomophonecorrector/khmerhomophonecorrector/training_state.json +1 -0

- khmerhomophonecorrector/loss_comparison.png +3 -0

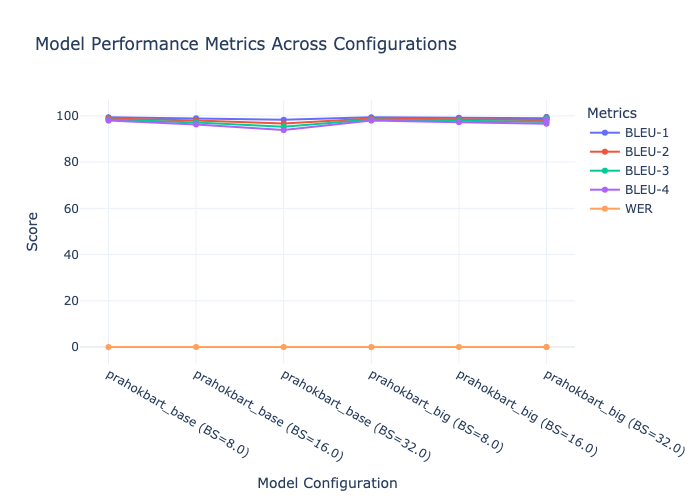

- khmerhomophonecorrector/metrics_comparison.png +3 -0

- khmerhomophonecorrector/model_performance_line_chart.html +0 -0

- khmerhomophonecorrector/model_performance_line_chart.png +0 -0

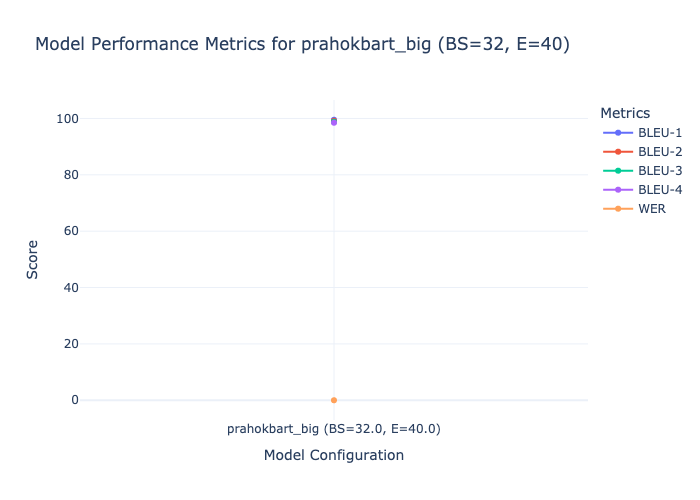

- khmerhomophonecorrector/model_performance_line_chart_big_bs32_e40.html +0 -0

- khmerhomophonecorrector/model_performance_line_chart_big_bs32_e40.png +0 -0

- khmerhomophonecorrector/model_performance_table.html +80 -0

- khmerhomophonecorrector/test_results.txt +0 -0

- khmerhomophonecorrector/tool/.DS_Store +0 -0

- khmerhomophonecorrector/tool/__pycache__/khnormal.cpython-312.pyc +0 -0

- khmerhomophonecorrector/tool/balance_data.py +39 -0

- khmerhomophonecorrector/tool/clean_data.py +52 -0

- khmerhomophonecorrector/tool/combine_homophones.py +46 -0

- khmerhomophonecorrector/tool/complete_homophone_sentences.py +182 -0

- khmerhomophonecorrector/tool/convert_format.py +107 -0

- khmerhomophonecorrector/tool/convert_training_data.py +108 -0

- khmerhomophonecorrector/tool/debug_homophone_check.py +54 -0

- khmerhomophonecorrector/tool/filter.py +40 -0

- khmerhomophonecorrector/tool/homophone_missing.py +88 -0

- khmerhomophonecorrector/tool/khnormal.py +158 -0

- khmerhomophonecorrector/tool/normalize_khmer.py +52 -0

- khmerhomophonecorrector/tool/segmentation.py +48 -0

.gitattributes

CHANGED

@@ -33,3 +33,14 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/batch_size_impact.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/data/train.json filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/header.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/homophone_pairs.json filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/loss_comparison.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/metrics_comparison.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/training_loss.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/visualization/batch_size_impact.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/visualization/loss_comparison.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/visualization/metrics_comparison.png filter=lfs diff=lfs merge=lfs -text
+khmerhomophonecorrector/visualization/training_loss.png filter=lfs diff=lfs merge=lfs -text
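The rules added to `.gitattributes` route individual files through Git LFS. As a rough illustration only, the sketch below checks which paths a handful of these rules would cover. It assumes simplified matching with Python's `fnmatch`, which is not identical to Git's own pattern rules (for example around `**` and directories), and the helper names `lfs_patterns` and `is_lfs_tracked` are hypothetical.

```python
from fnmatch import fnmatch

# A few rules copied from the .gitattributes hunk; illustrative, not the full file.
GITATTRIBUTES = """\
*.zip filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
khmerhomophonecorrector/data/train.json filter=lfs diff=lfs merge=lfs -text
khmerhomophonecorrector/header.png filter=lfs diff=lfs merge=lfs -text
"""


def lfs_patterns(text):
    """Return the patterns whose attribute list includes filter=lfs."""
    patterns = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and "filter=lfs" in parts[1:]:
            patterns.append(parts[0])
    return patterns


def is_lfs_tracked(path, patterns):
    """Approximate check: patterns without a slash are matched against the basename."""
    basename = path.rsplit("/", 1)[-1]
    for pattern in patterns:
        target = path if "/" in pattern else basename
        if fnmatch(target, pattern):
            return True
    return False


patterns = lfs_patterns(GITATTRIBUTES)
print(is_lfs_tracked("khmerhomophonecorrector/data/train.json", patterns))
print(is_lfs_tracked("khmerhomophonecorrector/data/val.json", patterns))
```

For an authoritative answer on a real checkout, `git check-attr filter -- <path>` reports the attribute Git itself resolves.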

added_tokens.json

ADDED

@@ -0,0 +1,6 @@
+{
+  "</s>": 32001,
+  "<2en>": 32003,
+  "<2km>": 32002,
+  "<s>": 32000
+}
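The four entries in `added_tokens.json` extend the tokenizer with sentence markers and language tags. A minimal sketch, assuming the base vocabulary occupies the ids below 32000 (an inference from the numbers, not something the diff states), that inverts the mapping and checks the ids form one contiguous block:

```python
import json

# Contents of added_tokens.json as shown in the diff.
ADDED_TOKENS_JSON = '{"</s>": 32001, "<2en>": 32003, "<2km>": 32002, "<s>": 32000}'

added = json.loads(ADDED_TOKENS_JSON)

# Invert token -> id into id -> token so the tokens can be listed in id order.
id_to_token = {idx: tok for tok, idx in added.items()}
ordered = [id_to_token[i] for i in sorted(id_to_token)]

# The smallest added id; assumed here to equal the size of the base vocabulary.
base_vocab_size = min(id_to_token)
expected_ids = list(range(base_vocab_size, base_vocab_size + len(added)))
is_contiguous = sorted(id_to_token) == expected_ids

print(ordered, is_contiguous, base_vocab_size + len(added))
```

Under that assumption the total comes to 32004, which agrees with `vocab_size` in `config.json`.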

config.json

ADDED

@@ -0,0 +1,102 @@
+{
+  "activation_dropout": 0.1,
+  "activation_function": "gelu",
+  "adaptor_activation_function": "gelu",
+  "adaptor_dropout": 0.1,
+  "adaptor_hidden_size": 512,
+  "adaptor_init_std": 0.02,
+  "adaptor_scaling_factor": 1.0,
+  "adaptor_tuning": false,
+  "additional_source_wait_k": -1,
+  "alibi_encoding": false,
+  "architectures": [
+    "MBartForConditionalGeneration"
+  ],
+  "asymmetric_alibi_encoding": false,
+  "attention_dropout": 0.1,
+  "bos_token_id": 32000,
+  "bottleneck_mid_fusion_tokens": 4,
+  "classifier_dropout": 0.0,
+  "d_model": 1024,
+  "decoder_adaptor_tying_config": null,
+  "decoder_attention_heads": 16,
+  "decoder_ffn_dim": 4096,
+  "decoder_layerdrop": 0.0,
+  "decoder_layers": 6,
+  "decoder_tying_config": null,
+  "deep_adaptor_tuning": false,
+  "deep_adaptor_tuning_ffn_only": false,
+  "dropout": 0.1,
+  "embed_low_rank_dim": 0,
+  "encoder_adaptor_tying_config": null,
+  "encoder_attention_heads": 16,
+  "encoder_ffn_dim": 4096,
+  "encoder_layerdrop": 0.0,
+  "encoder_layers": 6,
+  "encoder_tying_config": null,
+  "eos_token_id": 32001,
+  "expert_ffn_size": 128,
+  "features_embed_dims": null,
+  "features_vocab_sizes": null,
+  "forced_eos_token_id": 2,
+  "gradient_checkpointing": false,
+  "gradient_reversal_for_domain_classifier": false,
+  "hypercomplex": false,
+  "hypercomplex_n": 2,
+  "ia3_adaptors": false,
+  "init_std": 0.02,
+  "initialization_scheme": "static",
+  "is_encoder_decoder": true,
+  "layernorm_adaptor_input": false,
+  "layernorm_prompt_projection": false,
+  "lora_adaptor_rank": 2,
+  "lora_adaptors": false,
+  "max_position_embeddings": 1024,
+  "mid_fusion_layers": 3,
+  "model_type": "mbart",
+  "moe_adaptors": false,
+  "multi_source": false,
+  "multi_source_method": null,
+  "multilayer_softmaxing": null,
+  "no_embed_norm": false,
+  "no_positional_encoding_decoder": false,
+  "no_positional_encoding_encoder": false,
+  "no_projection_prompt": false,
+  "no_scale_attention_embedding": false,
+  "num_domains_for_domain_classifier": 1,
+  "num_experts": 8,
+  "num_hidden_layers": 6,
+  "num_moe_adaptor_experts": 4,
+  "num_prompts": 100,
+  "num_sparsify_blocks": 8,
+  "pad_token_id": 0,
+  "parallel_adaptors": false,
+  "positional_encodings": false,
+  "postnorm_decoder": false,
+  "postnorm_encoder": false,
+  "prompt_dropout": 0.1,
+  "prompt_init_std": 0.02,
+  "prompt_projection_hidden_size": 4096,
+  "prompt_tuning": false,
+  "recurrent_projections": 1,
+  "residual_connection_adaptor": false,
+  "residual_connection_prompt": false,
+  "rope_encoding": false,
+  "scale_embedding": false,
+  "softmax_bias_tuning": false,
+  "softmax_temperature": 1.0,
+  "sparsification_temperature": 3.0,
+  "sparsify_attention": false,
+  "sparsify_ffn": false,
+  "target_vocab_size": 0,
+  "temperature_calibration": false,
+  "tokenizer_class": "AlbertTokenizer",
+  "torch_dtype": "float32",
+  "transformers_version": "4.52.4",
+  "unidirectional_encoder": false,
+  "use_cache": true,
+  "use_moe": false,
+  "use_tanh_activation_prompt": false,
+  "vocab_size": 32004,
+  "wait_k": -1
+}
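A few values in `config.json` constrain each other: the model width has to divide evenly across the attention heads, and every special-token id has to fall inside the vocabulary. The sketch below is one way to sanity-check those relationships on a subset of the fields; the helper names `head_dim` and `ids_out_of_range` are hypothetical and not part of any library.

```python
# Subset of config.json as shown in the diff.
config = {
    "d_model": 1024,
    "encoder_attention_heads": 16,
    "decoder_attention_heads": 16,
    "encoder_layers": 6,
    "decoder_layers": 6,
    "bos_token_id": 32000,
    "eos_token_id": 32001,
    "pad_token_id": 0,
    "forced_eos_token_id": 2,
    "vocab_size": 32004,
}


def head_dim(cfg, side):
    """Per-head width for 'encoder' or 'decoder'; d_model must divide evenly."""
    heads = cfg[f"{side}_attention_heads"]
    if cfg["d_model"] % heads != 0:
        raise ValueError(f"d_model is not divisible by {side} head count")
    return cfg["d_model"] // heads


def ids_out_of_range(cfg):
    """Names of *_token_id fields that fall outside [0, vocab_size)."""
    keys = [k for k in cfg if k.endswith("_token_id")]
    return [k for k in keys if not 0 <= cfg[k] < cfg["vocab_size"]]


print(head_dim(config, "encoder"), head_dim(config, "decoder"))
print(ids_out_of_range(config))
```

With the values above, each head is 64 wide and no token id falls outside the 32004-entry vocabulary.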

generation_config.json

ADDED

@@ -0,0 +1,8 @@
+{
+  "_from_model_config": true,
+  "bos_token_id": 32000,
+  "eos_token_id": 32001,
+  "forced_eos_token_id": 2,
+  "pad_token_id": 0,
+  "transformers_version": "4.52.4"
+}
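In `generation_config.json`, `forced_eos_token_id` is 2 while `eos_token_id` is 32001. The diff does not say whether this is intentional; `app.py` in the same commit passes `forced_eos_token_id=32001` explicitly when calling `generate`. A small sketch of how such a mismatch could be flagged before relying on the defaults (the function name `eos_mismatch` is hypothetical):

```python
# Values from generation_config.json as shown in the diff.
generation_config = {
    "bos_token_id": 32000,
    "eos_token_id": 32001,
    "forced_eos_token_id": 2,
    "pad_token_id": 0,
}


def eos_mismatch(cfg):
    """True when a forced EOS id is set and differs from the regular EOS id."""
    forced = cfg.get("forced_eos_token_id")
    return forced is not None and forced != cfg.get("eos_token_id")


if eos_mismatch(generation_config):
    print(
        "forced_eos_token_id",
        generation_config["forced_eos_token_id"],
        "differs from eos_token_id",
        generation_config["eos_token_id"],
    )
```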

khmerhomophonecorrector/.DS_Store

ADDED

Binary file (12.3 kB).

khmerhomophonecorrector/.gitattributes

ADDED

@@ -0,0 +1,46 @@
+*.7z filter=lfs diff=lfs merge=lfs -text
+*.arrow filter=lfs diff=lfs merge=lfs -text
+*.bin filter=lfs diff=lfs merge=lfs -text
+*.bz2 filter=lfs diff=lfs merge=lfs -text
+*.ckpt filter=lfs diff=lfs merge=lfs -text
+*.ftz filter=lfs diff=lfs merge=lfs -text
+*.gz filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.joblib filter=lfs diff=lfs merge=lfs -text
+*.lfs.* filter=lfs diff=lfs merge=lfs -text
+*.mlmodel filter=lfs diff=lfs merge=lfs -text
+*.model filter=lfs diff=lfs merge=lfs -text
+*.msgpack filter=lfs diff=lfs merge=lfs -text
+*.npy filter=lfs diff=lfs merge=lfs -text
+*.npz filter=lfs diff=lfs merge=lfs -text
+*.onnx filter=lfs diff=lfs merge=lfs -text
+*.ot filter=lfs diff=lfs merge=lfs -text
+*.parquet filter=lfs diff=lfs merge=lfs -text
+*.pb filter=lfs diff=lfs merge=lfs -text
+*.pickle filter=lfs diff=lfs merge=lfs -text
+*.pkl filter=lfs diff=lfs merge=lfs -text
+*.pt filter=lfs diff=lfs merge=lfs -text
+*.pth filter=lfs diff=lfs merge=lfs -text
+*.rar filter=lfs diff=lfs merge=lfs -text
+*.safetensors filter=lfs diff=lfs merge=lfs -text
+saved_model/**/* filter=lfs diff=lfs merge=lfs -text
+*.tar.* filter=lfs diff=lfs merge=lfs -text
+*.tar filter=lfs diff=lfs merge=lfs -text
+*.tflite filter=lfs diff=lfs merge=lfs -text
+*.tgz filter=lfs diff=lfs merge=lfs -text
+*.wasm filter=lfs diff=lfs merge=lfs -text
+*.xz filter=lfs diff=lfs merge=lfs -text
+*.zip filter=lfs diff=lfs merge=lfs -text
+*.zst filter=lfs diff=lfs merge=lfs -text
+*tfevents* filter=lfs diff=lfs merge=lfs -text
+batch_size_impact.png filter=lfs diff=lfs merge=lfs -text
+data/train.json filter=lfs diff=lfs merge=lfs -text
+header.png filter=lfs diff=lfs merge=lfs -text
+homophone_pairs.json filter=lfs diff=lfs merge=lfs -text
+loss_comparison.png filter=lfs diff=lfs merge=lfs -text
+metrics_comparison.png filter=lfs diff=lfs merge=lfs -text
+training_loss.png filter=lfs diff=lfs merge=lfs -text
+visualization/batch_size_impact.png filter=lfs diff=lfs merge=lfs -text
+visualization/loss_comparison.png filter=lfs diff=lfs merge=lfs -text
+visualization/metrics_comparison.png filter=lfs diff=lfs merge=lfs -text
+visualization/training_loss.png filter=lfs diff=lfs merge=lfs -text

khmerhomophonecorrector/FYP_ Model Tracking - Sheet1.csv

ADDED

@@ -0,0 +1,107 @@
+Model Name,Batch Size,Num of Epochs,Epochs,Train Loss,Val Loss,WER,BLEU-1,BLEU-2,BLEU-3,BLEU-4,Notes,
+prahokbart_base,8,10,1,0.1789,0.118885,0.0095,99.4068,98.8971,98.4134,97.9704,https://drive.google.com/drive/folders/16BOObaDAzmx6yl__UavLhbQUQj1JiTph,
+,,,2,0.0808,0.066198,,,,,,,
+,,,3,0.0648,0.049457,,,,,,,
+,,,4,0.0466,0.040769,,,,,,,
+,,,5,0.0402,0.035832,,,,,,,
+,,,6,0.029,0.032629,,,,,,,
+,,,7,0.0419,0.030779,,,,,,,
+,,,8,0.0199,0.030187,,,,,,,
+,,,9,0.018,0.029398,,,,,,,
+,,,10,0.017,0.028081,,,,,,,
+,,,,,,,,,,,,
+prahokbart_base,16,10,1,0.344,0.243551,0.0146,98.9618,98.0396,97.1388,96.2967,prahokbart-base-E10-B16.ipynb,prahokbart-base-bs16-e10
+,,,2,0.1701,0.127156,,,,,,,
+,,,3,0.113,0.094204,,,,,,,
+,,,4,0.0994,0.077294,,,,,,,
+,,,5,0.0826,0.06774,,,,,,,
+,,,6,0.0744,0.061196,,,,,,,
+,,,7,0.0727,0.056898,,,,,,,
+,,,8,0.0583,0.054359,,,,,,,
+,,,9,0.0512,0.053133,,,,,,,
+,,,10,0.0567,0.052445,,,,,,,
+,,,,,,,,,,,,
+prahokbart_base,32,10,1,0.4248,,0.0217,98.3017,96.752,95.2542,93.8637,prahokbart-base-E10-B32.ipynb,prahokbart-base-bs32-e10
+,,,2,0.2198,0.1806311905,,,,,,,
+,,,3,0.1831,,,,,,,,
+,,,4,0.15,,,,,,,,
+,,,5,0.137,0.1235590726,,,,,,,
+,,,6,0.1273,,,,,,,,
+,,,7,0.1204,0.1003917083,,,,,,,
+,,,8,0.1096,,,,,,,,
+,,,9,0.1099,,,,,,,,
+,,,10,0.1061,0.09450948983,,,,,,,
+,,,,,,,,,,,,
+prahokbart_big,8,10,1,0.1789,0.118885,0.0095,99.4068,98.8971,98.4134,97.9704,prahokbart-big-E10-B8.ipynb,prahokbart-big-bs8-e10
+,,,2,0.0808,0.066198,,,,,,,
+,,,3,0.0648,0.049457,,,,,,,
+,,,4,0.0466,0.040769,,,,,,,
+,,,5,0.0402,0.035832,,,,,,,
+,,,6,0.029,0.032629,,,,,,,
+,,,7,0.0419,0.030779,,,,,,,
+,,,8,0.0199,0.030187,,,,,,,
+,,,9,0.018,0.029398,,,,,,,
+,,,10,0.0268,0.029082,,,,,,,
+,,,,,,,,,,,,
+prahokbart_big,16,10,1,0.237,0.156053,0.012,99.1946,98.5264,97.8799,97.2795,prahokbart-big-E10-B16.ipynb,prahokbart-big-bs16-e10
+,,,2,0.1137,0.080692,,,,,,,
+,,,3,0.08,0.062454,,,,,,,
+,,,4,0.0672,0.051034,,,,,,,
+,,,5,0.0537,0.045366,,,,,,,
+,,,6,0.0474,0.041196,,,,,,,
+,,,7,0.048,0.038459,,,,,,,
+,,,8,0.0366,0.036974,,,,,,,
+,,,9,0.0305,0.036123,,,,,,,
+,,,10,0.038,0.035709,,,,,,,
+,,,,,,,,,,,,
+prahokbart_big,32,10,1,0.4347,0.328299,0.0142,99.0066,98.1694,97.3646,96.6186,prahokbart-big-E10-B32.ipynb,prahokbart-big-bs32-e10
+,,,2,0.1448,0.107667,,,,,,,
+,,,3,0.1,0.080751,,,,,,,
+,,,4,0.0857,0.066501,,,,,,,
+,,,5,0.0717,0.059016,,,,,,,
+,,,6,0.0608,0.053938,,,,,,,
+,,,7,0.0606,0.050479,,,,,,,
+,,,8,0.0569,0.048502,,,,,,,
+,,,9,0.0569,0.047486,,,,,,,
+,,,10,0.0484,0.047022,,,,,,,
+,,,,,,,,,,,,
+prahokbart_big,32,40,1,0.6786,0.59872,0.008,99.5398,99.162,98.8093,98.4861,prahokbart-big-E10-B32.ipynb,
+,,,2,0.3993,0.318888,,,,,,,
+,,,3,0.1638,0.126617,,,,,,,
+,,,4,0.1196,0.088467,,,,,,,
+,,,5,0.0861,0.068045,,,,,,,
+,,,6,0.0663,0.056211,,,,,,,
+,,,7,0.0599,0.0488,,,,,,,
+,,,8,0.0516,0.043238,,,,,,,
+,,,9,0.047,0.039321,,,,,,,
+,,,10,0.0357,0.035333,,,,,,,
+,,,11,0.0377,0.03289,,,,,,,
+,,,12,0.0335,0.030855,,,,,,,
+,,,13,0.0279,0.029597,,,,,,,
+,,,14,0.0362,0.028269,,,,,,,
+,,,15,0.0206,0.027406,,,,,,,
+,,,16,0.0229,0.026543,,,,,,,
+,,,17,0.0197,0.026183,,,,,,,
+,,,18,0.0167,0.025577,,,,,,,
+,,,19,0.0181,0.02498,,,,,,,
+,,,20,0.0153,0.024927,,,,,,,
+,,,21,0.0137,0.024544,,,,,,,
+,,,22,0.0166,0.024343,,,,,,,
+,,,23,0.0134,0.024054,,,,,,,
+,,,24,0.0121,0.023849,,,,,,,
+,,,25,0.015,0.023575,,,,,,,
+,,,26,0.0114,0.023603,,,,,,,
+,,,27,0.0107,0.023624,,,,,,,
+,,,28,0.0113,0.023694,,,,,,,
+,,,29,0.0113,0.02336,,,,,,,
+,,,30,0.0087,0.023514,,,,,,,
+,,,31,0.0103,0.023472,,,,,,,
+,,,32,0.0082,0.023636,,,,,,,
+,,,33,0.0112,0.02359,,,,,,,
+,,,34,0.0086,0.023592,,,,,,,
+,,,35,0.0081,0.023537,,,,,,,
+,,,36,0.009,0.023482,,,,,,,
+,,,37,0.0089,0.023521,,,,,,,
+,,,38,0.009,0.023539,,,,,,,
+,,,39,0.0078,0.02354,,,,,,,
+,,,40,0.0091,0.023525,,,,,,,
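The tracking sheet uses a sparse layout: the model name, batch size and final metrics appear only on the first row of each run, later rows carry only the per-epoch losses, and some validation losses are blank. The sketch below shows one possible way to group such rows into runs and find the epoch with the lowest recorded validation loss. It works on a short excerpt of the CSV; `load_runs` and `best_epoch` are hypothetical helper names, and the loader assumes the first data row always names a model.

```python
import csv
import io

# Excerpt of the tracking CSV from the diff (two runs, truncated for brevity).
SAMPLE = """\
Model Name,Batch Size,Num of Epochs,Epochs,Train Loss,Val Loss,WER,BLEU-1,BLEU-2,BLEU-3,BLEU-4,Notes,
prahokbart_base,32,10,1,0.4248,,0.0217,98.3017,96.752,95.2542,93.8637,prahokbart-base-E10-B32.ipynb,prahokbart-base-bs32-e10
,,,2,0.2198,0.1806311905,,,,,,,
,,,3,0.1831,,,,,,,,
,,,,,,,,,,,,
prahokbart_big,16,10,1,0.237,0.156053,0.012,99.1946,98.5264,97.8799,97.2795,prahokbart-big-E10-B16.ipynb,prahokbart-big-bs16-e10
,,,2,0.1137,0.080692,,,,,,,
"""


def load_runs(text):
    """Group per-epoch rows under the run whose first row names the model."""
    rows = csv.reader(io.StringIO(text))
    header = next(rows)
    col = {name: i for i, name in enumerate(header)}
    runs, current = [], None
    for row in rows:
        if not any(cell.strip() for cell in row):
            continue  # blank separator row between runs
        if row[col["Model Name"]]:
            current = {
                "model": row[col["Model Name"]],
                "batch_size": int(row[col["Batch Size"]]),
                "epochs": [],
            }
            runs.append(current)
        val = row[col["Val Loss"]]
        current["epochs"].append({
            "epoch": int(row[col["Epochs"]]),
            "train_loss": float(row[col["Train Loss"]]),
            "val_loss": float(val) if val else None,  # some cells are empty
        })
    return runs


def best_epoch(run):
    """Epoch with the lowest recorded validation loss, or None if none recorded."""
    scored = [e for e in run["epochs"] if e["val_loss"] is not None]
    return min(scored, key=lambda e: e["val_loss"])["epoch"] if scored else None


runs = load_runs(SAMPLE)
for run in runs:
    print(run["model"], run["batch_size"], best_epoch(run))
```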

khmerhomophonecorrector/README.md

ADDED

@@ -0,0 +1,3 @@
+---
+license: apache-2.0
+---

khmerhomophonecorrector/app.py

ADDED

@@ -0,0 +1,216 @@
+import streamlit as st
+from transformers import AutoModelForSeq2SeqLM, AutoTokenizer, MBartForConditionalGeneration
+import json
+from khmernltk import word_tokenize
+import torch
+import difflib
+
+# Set page config
+st.set_page_config(
+    page_title="Khmer Homophone Corrector",
+    page_icon="✍️",
+    layout="wide"
+)
+
+# Custom CSS
+st.markdown("""
+    <style>
+    .main {
+        padding: 2rem;
+    }
+    .stTextArea textarea {
+        font-size: 1.2rem;
+    }
+    .result-text {
+        font-size: 1.2rem;
+        padding: 1rem;
+        background-color: #f8f9fa;
+        border-radius: 0.5rem;
+        margin: 0.5rem 0;
+    }
+    .correction {
+        background-color: #ffd700;
+        padding: 0.2rem;
+        border-radius: 0.2rem;
+    }
+    .correction-details {
+        font-size: 1rem;
+        color: #666;
+        margin-top: 0.5rem;
+    }
+    .header-image {
+        width: 100%;
+        max-width: 800px;
+        margin: 0 auto;
+        display: block;
+    }
+    .model-info {
+        font-size: 0.9rem;
+        color: #666;
+        margin-top: 0.5rem;
+    }
+    </style>
+""", unsafe_allow_html=True)
+
+# Display header image
+st.image("header.png", use_column_width=True)
+
+# Model configurations
+MODEL_CONFIG = {
+    "path": "./prahokbart-big-bs32-e40",
+    "description": "Large model with batch size 32, trained for 40 epochs"
+}
+
+def word_segment(text):
+    return " ".join(word_tokenize(text)).replace(" ", " ▂ ")
+
+def find_corrections(original, corrected):
+    original_words = [w for w in word_tokenize(original) if w.strip()]
+    corrected_words = [w for w in word_tokenize(corrected) if w.strip()]
+
+    matcher = difflib.SequenceMatcher(None, original_words, corrected_words)
+    corrections = []
+
+    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
+        if tag != 'equal':
+            original_text = ' '.join(original_words[i1:i2])
+            corrected_text = ' '.join(corrected_words[j1:j2])
+            if original_text.strip() and corrected_text.strip() and original_text != corrected_text:
+                corrections.append({
+                    'original': original_text,
+                    'corrected': corrected_text,
+                    'position': i1
+                })
+
+    return corrections
+
+@st.cache_resource
+def load_model(model_path):
+    try:
+        model = MBartForConditionalGeneration.from_pretrained(model_path)
+        tokenizer = AutoTokenizer.from_pretrained(model_path)
+
+        model.eval()
+
+        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+        model = model.to(device)
+
+        return {
+            "model": model,
+            "tokenizer": tokenizer,
+            "device": device
+        }
+    except Exception as e:
+        st.error(f"Error loading model: {str(e)}")
+        return None
+
+def process_text(text, model_components):
+    if model_components is None:
+        return "Error: Model not loaded properly"
+
+    model = model_components["model"]
+    tokenizer = model_components["tokenizer"]
+    device = model_components["device"]
+
+    segmented_text = word_segment(text)
+    input_text = f"{segmented_text} </s> <2km>"
+
+    inputs = tokenizer(
+        input_text,
+        return_tensors="pt",
+        padding=True,
+        truncation=True,
+        max_length=1024,
+        add_special_tokens=True
+    )
+
+    inputs = {k: v.to(device) for k, v in inputs.items()}
+
+    if 'token_type_ids' in inputs:
+        del inputs['token_type_ids']
+
+    with torch.no_grad():
+        outputs = model.generate(
+            **inputs,
+            max_length=1024,
+            num_beams=5,
+            early_stopping=True,
+            do_sample=False,
+            no_repeat_ngram_size=3,
+            forced_bos_token_id=32000,
+            forced_eos_token_id=32001,
+            length_penalty=1.0,
+            temperature=1.0
+        )
+
+    corrected = tokenizer.decode(outputs[0], skip_special_tokens=True)
+    corrected = corrected.replace("</s>", "").replace("<2km>", "").replace("▂", " ").strip()
+
+    return corrected
+
+# Header
+st.title("✍️ Khmer Homophone Corrector")
+
+# Simple instruction
+st.markdown("Type or paste your Khmer text below to correct homophones.")
+
+# Create two columns for input and output
+col1, col2 = st.columns(2)
+
+with col1:
+    st.subheader("Input Text")
+    user_input = st.text_area(
+        "Enter Khmer text with homophones:",
+        height=200,
+        placeholder="Type or paste your Khmer text here...",
+        key="input_text"
+    )
+
+    correct_button = st.button("🔄 Correct Text", type="primary", use_container_width=True)
+
+with col2:
+    st.subheader("Results")
+    if correct_button and user_input:
+        with st.spinner("Processing..."):
+            try:
+                # Load model
+                model_components = load_model(MODEL_CONFIG["path"])
+
+                # Process the text
+                corrected = process_text(user_input, model_components)
+
+                # Find corrections
+                corrections = find_corrections(user_input, corrected)
+
+                # Display results
+                st.markdown("**Corrected Text:**")
+                st.markdown(f'<div class="result-text">{corrected}</div>', unsafe_allow_html=True)
+
+                # Show corrections if any were made
+                if corrections:
+                    st.success(f"Found {len(corrections)} corrections!")
+                    st.markdown("**Corrections made:**")
+                    for i, correction in enumerate(corrections, 1):
+                        st.markdown(f"""
+                        <div class="correction-details">
+                        {i}. Changed "{correction['original']}" to "{correction['corrected']}"
+                        </div>
+                        """, unsafe_allow_html=True)
+                else:
+                    st.warning("No corrections were made.")
+            except Exception as e:
+                st.error(f"An error occurred: {str(e)}")
+    elif correct_button:
+        st.warning("Please enter text first!")
+
+# Footer
+st.markdown("---")
+st.markdown("""
+<div style='text-align: center; padding: 10px;'>
+    <a href='https://sites.google.com/paragoniu.edu.kh/khmerhomophonecorrector/home'
+       target='_blank'
+       style='text-decoration: none; color: #1f77b4; font-size: 16px;'>
+        📚 Learn more about this project
+    </a>
+</div>
+""", unsafe_allow_html=True)
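`find_corrections` in `app.py` reports what the model changed by diffing the word sequences before and after correction with `difflib.SequenceMatcher`. The standalone sketch below isolates that step so it can be run without Streamlit, the model, or `khmernltk`. It takes pre-tokenized word lists and uses English placeholder words as an assumption for readability. It keeps only `replace` opcodes, which is roughly what the original's non-empty checks on both sides amount to.

```python
import difflib


def find_corrections(original_words, corrected_words):
    """List word-level substitutions between two pre-tokenized sequences."""
    matcher = difflib.SequenceMatcher(None, original_words, corrected_words)
    corrections = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        # 'replace' means both sides are non-empty and differ; pure insertions
        # and deletions are skipped, as app.py's non-empty checks also do.
        if tag == "replace":
            corrections.append({
                "original": " ".join(original_words[i1:i2]),
                "corrected": " ".join(corrected_words[j1:j2]),
                "position": i1,
            })
    return corrections


# Placeholder English homophone example; the app itself tokenizes Khmer text.
original = ["I", "no", "the", "answer"]
corrected = ["I", "know", "the", "answer"]
print(find_corrections(original, corrected))
```

Because `position` is an index into the tokenized word list rather than a character offset, highlighting a correction in the raw input string would need an additional mapping from tokens back to character spans.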

khmerhomophonecorrector/batch_size_impact.png

ADDED

|

Git LFS Details

|

khmerhomophonecorrector/data/.DS_Store

ADDED

Binary file (6.15 kB).

khmerhomophonecorrector/data/test.json

ADDED

The diff for this file is too large to render.

khmerhomophonecorrector/data/train.json

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a7d1f07ca76eb9e270b523e5ccd476f348d996c68d94a7c050c9313ea5b43834
+size 25445389
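What the diff shows for `train.json` is not the dataset itself but a Git LFS pointer: three `key value` lines giving the spec version, the SHA-256 of the real file, and its size in bytes. A minimal sketch of reading such a pointer (the function name `parse_lfs_pointer` is hypothetical):

```python
# Pointer contents for data/train.json as shown in the diff.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:a7d1f07ca76eb9e270b523e5ccd476f348d996c68d94a7c050c9313ea5b43834
size 25445389
"""


def parse_lfs_pointer(text):
    """Split each 'key value' line and break the oid into algorithm and digest."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line.strip())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


info = parse_lfs_pointer(POINTER)
print(info["hash_algo"], f"{info['size_bytes'] / 1e6:.1f} MB")
```

The size field puts the actual training file at roughly 25.4 MB, which is why it is stored through LFS rather than inline in the repository.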

khmerhomophonecorrector/data/val.json

ADDED

The diff for this file is too large to render.

khmerhomophonecorrector/dataset_distribution.png

ADDED

|

khmerhomophonecorrector/header.png

ADDED

|

Git LFS Details

|

khmerhomophonecorrector/homophone_pairs.json

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:0f1b62ec06518733f86834a18e229ed67dc0491f0074b9acfbfce96d71033162
+size 32176015

khmerhomophonecorrector/homophone_test.json

ADDED

|

@@ -0,0 +1,272 @@

|

| 1 |

+

{

|

| 2 |

+

"homophones": [

|

| 3 |

+

["ក", "ក៏", "ករ", "ករណ៍"],

|

| 4 |

+

["កល", "កល់"],

|

| 5 |

+

["កាប់", "កប្ប"],

|

| 6 |

+

["កាប", "កាព្យ"],

|

| 7 |

+

["កូត", "កូដ"],

|

| 8 |

+

["កាំ", "កម្ម"],

|

| 9 |

+

["កេះ", "កែះ", "កេស", "កែស"],

|

| 10 |

+

["ក្រិត", "ក្រឹត្យ", "ក្រឹត", "ក្រិដ្ឋ"],

|

| 11 |

+

["កាណ៌", "ការណ៍", "ការ្យ"],

|

| 12 |

+

["ក្លា", "ខ្លា"],

|

| 13 |

+

["កាន់", "កណ្ឌ"],

|

| 14 |

+

["កួរ", "គួរ"],

|

| 15 |

+

["កេរ", "កេរ្តិ៍", "គេ", "គែ", "គេហ៍"],

|

| 16 |

+

["ក្មួយ", "ខ្មួយ"],

|

| 17 |

+

["ក្លាស់", "ខ្លះ"],

|

| 18 |

+

["ក្លែង", "ខ្លែង"],

|

| 19 |

+

["ក្រាស", "ក្រាស់"],

|

| 20 |

+

["ក្រិស", "ក្រេស"],

|

| 21 |

+

["កំពង់", "កំពុង"],

|

| 22 |

+

["ក្រំ", "ក្រម", "គ្រាំ"],

|

| 23 |

+

["ក្រួស", "គ្រោះ"],

|

| 24 |

+

["កោន", "កោណ"],

|

| 25 |

+

["កោត", "កោដ្ឋ", "កោដិ"],

|

| 26 |

+

["កុះ", "កោស", "កូស"],

|

| 27 |

+

["កន្លះ", "កន្លាស់"],

|

| 28 |

+

["ខ្ចប់", "ខ្ជាប់"],

|

| 29 |

+

["ខន្ធ", "ខ័ន", "ខាន់", "ខណ្ឌ"],

|

| 30 |

+

["ខុរ", "ខុល"],

|

| 31 |

+

["ខ្វេះ", "ខ្វែះ"],

|

| 32 |

+

["ខែត្រ", "ខេត្ត"],

|

| 33 |

+

["គន់", "គន្ធ", "គុន", "គុណ", "គណ"],

|

| 34 |

+

["គត", "គត់", "គុត"],

|

| 35 |

+

["គប់", "គុប"],

|

| 36 |

+

["គល់", "គុល", "គឹល"],

|

| 37 |

+

["គាថា", "កថា"],

|

| 38 |

+

["គុំ", "គំ", "គុម្ព", "គមន៏", "គម"],

|

| 39 |

+

["គូថ", "គូទ", "គូធ"],

|

| 40 |

+

["គ្រា", "គ្រាហ៍"],

|

| 41 |

+

["គ្រំ", "គ្រុំ", "គ្រុម"],

|

| 42 |

+

["រងំ", "រងុំ"],

|

| 43 |

+

["ចរណ៍", "ជ័រ"],

|

| 44 |

+

["ចប់", "ជាប់", "ចប"],

|

| 45 |

+

["ចារ", "ចារុ៍"],

|

| 46 |

+

["ចារិក", "ចារឹក", "ចរិត"],

|

| 47 |

+

["ចាក់", "ចក្រ", "ចក្ក"],

|

| 48 |

+

["ច័ន", "ចាន់", "ចណ្ឌ", "ចន្ទ", "ចន្រ្ទ", "ចន្ទន៍"],

|

| 49 |

+

["ចិត", "ចិត្ត", "ចិត្រ្ត"],

|

| 50 |

+

["ចិក", "ចឹក"],

|

| 51 |

+

["ចូរ", "ចូល", "ចូឡ"],

|

| 52 |

+

["ចេះ", "ចេស", "ចែស", "ជែះ", "ជេះ", "ជេស្ឋ"],

|

| 53 |

+

["ច្នៃ", "ឆ្នៃ"],

|

| 54 |

+

["ច្រែស", "ច្រេះ", "ច្រេស", "ច្រែះ"],

|

| 55 |

+

["ច្រាស", "ច្រាស់"],

|

| 56 |

+

["ច្រោះ", "ច្រស"],

|

| 57 |

+

["ច្រៀង", "ជ្រៀង"],

|

| 58 |

+

["ចោទ", "ចោត"],

|

| 59 |

+

["ចំណោត", "ចំណោទ"],

|

| 60 |

+

["ឆន្ទ", "ឆាន់"],

|

| 61 |

+

["ឆ្វេង", "ឈ្វេង"],

|

| 62 |

+

["ជង", "ជង់", "ជង្ឃ"],

|

| 63 |

+

["ជច់", "ជុច"],

|

| 64 |

+

["ជល", "ជល់", "ជុល"],

|

| 65 |

+

["ជន់", "ជន", "ជន្ម"],

|

| 66 |

+

["ជីរ", "ជី", "ជីវ៍"],

|

| 67 |

+

["ជីប", "ជីព"],

|

| 68 |

+

["ជប", "ជប់"],

|

| 69 |

+

["ជួស", "ជោះ"],

|

| 70 |

+

["ជំនួស", "ជំនោះ"],

|

| 71 |

+

["ជំនំ", "ជំនុំ"],

|

| 72 |

+

["ជោក", "ជោគ"],

|

| 73 |

+

["ជំ", "ជុំ"],

|

| 74 |

+

["ជំរំ", "ជុំរុំ"],

|

| 75 |

+

["ជ្រង", "ជ្រោង"],

|

| 76 |

+

["ជ្រង់", "ជ្រុង"],

|

| 77 |

+

["ជ្រួយ", "ជ្រោយ"],

|

| 78 |

+

["ជ្រួស", "ជ្រោះ"],

|

| 79 |

+

["ឈឹង", "ឆឹង"],

|

| 80 |

+

["ញុះ", "ញោះ", "ញោស"],

|

| 81 |

+

["ដ", "ដរ", "ដ៏"],

|

| 82 |

+

["ដប", "ដប់"],

|

| 83 |

+

["ដា", "ដារ"],

|

| 84 |

+

["ដាស", "ដាស់"],

|

| 85 |

+

["ដុះ", "ដុស"],

|

| 86 |

+

["ណ៎ះ", "ណាស់"],

|

| 87 |

+

["ត្រប់", "ទ្រាប់", "ទ្រព្យ"],

|

| 88 |

+

["ត្លុក", "ថ្លុក"],

|

| 89 |

+

["តិះ", "តេះ", "តេស្ត"],

|

| 90 |

+

["ត្រិះ", "ត្រែះ", "ត្រេះ"],

|

| 91 |

+

["ទង់", "ទុង"],

|

| 92 |

+

["ទប់", "ទព្វ"],

|

| 93 |

+

["ទល់", "ទុល"],

|

| 94 |

+

["ទាល់", "ទ័ល"],

|

| 95 |

+

["ទន់", "ទុន"],

|

| 96 |

+

["ទន្ត", "ទណ្ឌ", "ទាន់"],

|

| 97 |

+

["ទា", "ទារ"],

|

| 98 |

+

["ទិច", "ទិត្យ", "តិច"],

|

| 99 |

+

["ទំ", "ទុំ", "ទម"],

|

| 100 |

+

["ទុក", "ទុក្ខ"],

|

| 101 |

+

["ទូ", "ទូរ"],

|

| 102 |

+

["ទាប", "ទៀប", "តៀប"],

|

| 103 |

+

["ទិញ", "ទេញ"],

|

| 104 |

+

["ទៃ", "ទេយ្យ", "ទ័យ"],

|

| 105 |

+

["ទេស", "ទេសន៍", "ទែះ"],

|

| 106 |

+

["ទោះ", "ទស", "ទស់", "ទស្សន៍"],

|

| 107 |

+

["ទោ", "ទោរ"],

|

| 108 |

+

["ទ្រង់", "ទ្រុង"],

|

| 109 |

+

["ធន", "ធន់", "ធុន"],

|

| 110 |

+

["ធំ", "ធុំ"],

|

| 111 |

+

["ធុញ", "ធញ្ញ"],

|

| 112 |

+

["នប់", "នព្វ"],

|

| 113 |

+

["និង", "នឹង", "ហ្នឹង"],

|

| 114 |

+

["នោះ", "នុះ", "នុ៎ះ"],

|

| 115 |

+

["នៅ", "នូវ"],

|

| 116 |

+

["នាក់", "អ្នក"],

|

| 117 |

+

["នាដ", "នាថ"],

|

| 118 |

+

["នរនាថ", "នរនាទ"],

|

| 119 |

+

["នាល", "នាឡិ"],

|

| 120 |

+

["និមិត្ត", "និមិ្មត"],

|

| 121 |

+

["នៃ", "ន័យ", "នី"],

|

| 122 |

+

["បក្ខ", "បក្ស", "ប៉ាក់"],

|

| 123 |

+

["បញ្ចប់", "បញ្ជាប់"],

|

| 124 |

+

["បណ្ឌិត", "បណ្ឌិត្យ"],

|

| 125 |

+

["បាត់", "បត្រ", "បត្ត", "បត្តិ", "ប័តន៍", "ប័ត", "ប័ទ"],

|

| 126 |

+

["បត់", "បទ", "ប័ទ្ម", "បដ", "បថ"],

|

| 127 |

+

["បន្ទំ", "បន្ទុំ"],

|

| 128 |

+

["បាទ", "បាត", "បាត្រ"],

|

| 129 |

+

["បិណ្ឌ", "បិន"],

|

| 130 |

+

["បាស", "បះ"],

|

| 131 |

+

["បុះ", "បុស្ស", "បូស", "បុស្ប"],

|

| 132 |

+

["បិត", "បិទ"],

|

| 133 |

+

["បូ", "បូព៌", "បូណ៌"],

|

| 134 |

+

["បរិបូរ", "បរិបូរណ៍", "បរិបូរណ៌"],

|

| 135 |

+

["បម្រះ", "បម្រាស", "បម្រាស់"],

|

| 136 |

+

["ប្រី", "ប្រិយ"],

|

| 137 |

+

["ប្រឹស", "ប្រឹះ", "ប្រឹស្ឋ", "ប្រើស"],

|

| 138 |

+

["ប្រសិទ្ធ", "ប្រសិទ្ធិ័"],

|

| 139 |

+

["ប្រសូត", "ប្រសូតិ"],

|

| 140 |

+

["ប្រុស", "ប្រុះ"],

|

| 141 |

+

["ប្រួញ", "ព្រួញ"],

|

| 142 |

+

["ប្រួល", "ព្រួល"],

|

| 143 |

+

["ប្រះ", "ប្រាស", "ប្រាស់"],

|

| 144 |

+

["ប្រមាថ", "ប្រមាទ"],

|

| 145 |

+

["អប្រមាថ", "អប្រមាទ"],

|

| 146 |

+

["ប្រៀប", "ព្រៀប", "ព្រាប"],

|

| 147 |

+

["ប្រោះ", "ប្រស់", "ប្រស", "ប្រោស"],

|

| 148 |

+

["ប្រសប់", "ប្រសព្វ"],

|

| 149 |

+

["ប្រេះ", "ប្រែះ"],

|

| 150 |

+

["ប្លៀក", "ភ្លៀក", "ផ្លៀក"],

|

| 151 |

+

["ពន្លត់", "ពន្លុត"],

|

| 152 |

+

["ពាត", "ពាធ", "ពាទ្យ"],

|

| 153 |

+

["ពារ", "ពៀរ"],

|

| 154 |

+

["ព្រាង", "ព្រៀង"],

|

| 155 |

+

["ពិន", "ពិណ", "បុិន"],

|

| 156 |

+

["ពៃ", "ពៃរ៏"],

|

| 157 |

+

["ពេចន៍", "ពេជ្ឈ", "ពេជ្រ", "ពេច", "ពិច"],

|

| 158 |

+

["ពង់", "ពង្ស", "ពុង"],

|

| 159 |

+

["ព័ទ្ធ", "ព៌ត", "ពត្តិ", "ពាត់", "ព័ត"],

|

| 160 |

+

["ពុត", "ពុធ", "ពុទ្ធ", "ពត់"],

|

| 161 |

+

["ពន់", "ពុន", "ពន្ធ"],

|

| 162 |

+

["ព័ន្ធ", "ពាន់"],

|

| 163 |

+

["ពល", "ពល់", "ពុល"],

|

| 164 |

+

["ពស់", "ពោះ"],

|

| 165 |

+

["ពព្រុស", "ពព្រូស"],

|

| 166 |

+

["ពោរ", "ពោធិ៍", "ពោធិ", "ពោ"],

|

| 167 |

+

["ព្រិច", "ព្រេច"],

|

| 168 |

+

["ព្រិល", "ព្រឹល"],

|

| 169 |

+

["ព្រឹត្ត", "ព្រឹត្តិ", "ព្រឹទ្ធ"],

|

| 170 |

+

["ព្រុស", "ព្រួស"],

|

| 171 |

+

["ព្រួស", "ព្រោះ"],

|

| 172 |

+

["ព្រំ", "ព្រហ្ម"],

|

| 173 |

+

["ព្រឹក", "ព្រឹក្ស"],

|

| 174 |

+

["ព្រឹក្សា", "ប្រឹក្សា"],

|

| 175 |

+

["ភប់", "ភព"],

|

| 176 |

+

["ភក្តិ", "ភ័ក", "ភក្រ្ត", "ភ័គ", "ភក្ស"],

|

| 177 |

+

["ភាន់", "ភ័ន្ត", "ភ័ណ្ឌ", "ភ័ណ"],

|

| 178 |

+

["មិត្ត", "មិទ្ធៈ", "មឹត"],

|

| 179 |

+

["មួ", "មួរ"],

|

| 180 |

+

["ម៉ដ្ធ", "ម៉ត់"],

|

| 181 |

+

["ម្រាក់", "ម្រ័ក្សណ៍"],

|

| 182 |

+

["យន់", "យន្ត", "យ័ន្ត", "យ័ន"],

|

| 183 |

+

["រង់", "រុង", "រង្គ", "រង"],

|

| 184 |

+

["រថ", "រដ្ឋ", "រត្ន", "រាត់"],

|

| 185 |

+

["រា", "រាហុ៍"],

|

| 186 |

+

["រាក", "រាគ"],

|

| 187 |

+

["រាក់", "រក្ស", "រ័ក"],

|

| 188 |

+

["រាច", "រាជ", "រាជ្យ"],

|

| 189 |

+

["រាម", "រៀម"],

|

| 190 |

+

["រស", "រស់", "រួស", "រោះ"],

|

| 191 |

+

["រាស់", "រ៉ស់"],

|

| 192 |

+

["រុិល", "រឹល"],

|

| 193 |

+

["រុក", "រុក្ខ"],

|

| 194 |

+

["រុត", "រុទ្ធ", "រុត្តិ"],

|

| 195 |

+

["រុះ", "រូស"],

|

| 196 |

+

["រំ", "រុំ", "រម្យ"],

|

| 197 |

+

["រំលិច", "រំលេច"],

|

| 198 |

+

["រោច", "រោចន៍"],

|

| 199 |

+

["របោះ", "របស់"],

|

| 200 |

+

["រឹង", "រុឹង"],

|

| 201 |

+

["រាំ", "រម្មណ៍"],

|

| 202 |

+

["រៀបរប", "រៀបរាប់"],

|

| 203 |

+

["លក់", "ល័ក្ត", "លក្ខណ៍", "ល័ក្ខ", "លក្ម្សណ៍"],

|

| 204 |

+

["លាប", "លាភ", "លៀប"],

|

| 205 |

+

["លង់", "លុង"],

|

| 206 |

+

["លន់", "លុន"],

|

| 207 |

+

["លប់", "លុប"],

|

| 208 |

+

["លោះ", "លស់", "លួស"],

|

| 209 |

+

["លិច", "លេច"],

|

| 210 |

+

["លាង", "លៀង"],

|

| 211 |

+

["លុត", "លត់", "លុត្ត"],

|

| 212 |

+

["លាប់", "ឡប់"],

|

| 213 |

+

["លិទ្ធ", "លិឍ", "លិត"],

|

| 214 |

+

["លួង", "ហ្លួង"],

|

| 215 |

+

["លេស", "លេះ"],

|

| 216 |

+

["ល្បះ", "ល្បាស់"],

|

| 217 |

+

["វង់", "វង្ស"],

|

| 218 |

+

["វន្ត", "វ័ន", "វាន់"],

|

| 219 |

+

["វត្ត", "វត្ស", "វ័ធ", "វត្ថ", "វដ្ត", "វឌ្ឍន៍", "វាត់", "វត្តន៍"],

|

| 220 |

+

["វ័យ", "វៃ", "វាយ", "វ៉ៃ"],

|

| 221 |

+

["វាត", "វាទ"],

|

| 222 |

+

["វិច", "វេច", "វេជ្ជ", "វេច្ច"],

|

| 223 |

+

["វិញ", "វេញ"],

|

| 224 |

+

["វាច", "វៀច"],

|

| 225 |

+

["វាង", "វៀង"],

|

| 226 |

+

["វាល", "វៀល"],

|

| 227 |

+

["សង់", "សង្ឃ"],

|

| 228 |

+

["ស័ក", "ស័ក្តិ", "សក្យ", "សគ្គ", "សគ៌ៈ"],

|

| 229 |

+

["ស័ង្ខ", "សាំង"],

|

| 230 |

+

["សស្ត្រា", "សាស្ត្រា"],

|

| 231 |

+

["សត្វ", "សត", "សត្យ", "សាត់"],

|

| 232 |

+

["សប្ត", "សព្ទ", "សាប់", "សប្ប"],

|

| 233 |

+

["សប", "សប់", "សព្វ", "សព", "សប្តិ"],

|

| 234 |

+

["សាសន៍", "សស្ត្រ", "សះ"],

|

| 235 |

+

["សិត", "សិទ្ធ", "សិទ្ធិ"],

|

| 236 |

+

["សិង", "សិង្ហ", "សឹង", "សុឹង"],

|

| 237 |

+

["សុក", "សុក្ក", "សុខ", "សុក្រ"],

|

| 238 |

+

["សិរ", "សិរ្ស", "សេ", "សេរ"],

|

| 239 |

+

["សូ", "សូរ", "សូរ្យ", "សូល៍"],

|

| 240 |

+

["សូទ", "សូត", "សូត្រ", "សូធ្យ", "សូទ្រ"],

|

| 241 |

+

["សូន", "សូន្យ"],

|

| 242 |

+

["សូម", "សុំ"],

|

| 243 |

+

["សួ", "សួរ", "សួគ៌"],

|

| 244 |

+

["សេដ្ធ", "សេត"],

|

| 245 |

+

["សោត", "សោធ", "សោធន៍"],

|

| 246 |

+

["សំ", "សម"],

|

| 247 |

+

["សម្បត្តិ", "សម្ប័ទ"],

|

| 248 |

+

["សម្បូរ", "សម្បូណ៍"],

|

| 249 |

+

["សម្រិត", "សំរឹទ្ធ"],

|

| 250 |

+

["សមិត", "សមិតិ", "សមិទ្ធ", "សមិទ្ធិ"],

|

| 251 |

+

["ស្និត", "ស្និទ្ធ"],

|

| 252 |

+

["ស្រស", "ស្រស់"],

|

| 253 |

+

["ស្រុះ", "ស្រុស"],

|

| 254 |

+

["ស្រះ", "ស្រាស់"],

|

| 255 |

+

["ស្លេះ", "ស្លេស្ម"],

|

| 256 |

+

["សេស", "សេះ"],

|

| 257 |

+

["ហត្ថ", "ហាត់"],

|

| 258 |

+

["ហស", "ហស្ត", "ហស្ថ", "ហោះ", "ហស្បតិ៍"],

|

| 259 |

+

["ហាស", "ហ័ស", "ហស្ស"],

|

| 260 |

+

["ហោង", "ហង"],

|

| 261 |

+

["អក", "អករ៍"],

|

| 262 |

+

["អ័ក្ស", "អាក់"],

|

| 263 |

+

["អង់", "អង្គ", "អង"],

|

| 264 |

+

["អដ្ឋ", "អត្ថ", "អឌ្ឍ", "អត្ត", "អាត់"],

|

| 265 |

+

["អន់", "អន្ធ"],

|

| 266 |

+

["អាចារ", "អាចារ្យ"],

|

| 267 |

+

["អាថ៌", "អាទិ"],

|

| 268 |

+

["អាប់", "អប្ប", "អ័ព្ទ"],

|

| 269 |

+

["អារម្មណ៍", "អារម្ភ"],

|

| 270 |

+

["ឥត", "ឥដ្ឋ", "ឥទ្ធិ"]

|

| 271 |

+

]

|

| 272 |

+

}

|
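`homophone_test.json` above is a single object whose `"homophones"` key holds a list of groups, each group being a list of words that sound alike. A lookup from a word to its group(s) is a natural way to consume this structure; the sketch below shows one possibility. The function names `build_homophone_index` and `load_homophone_index` are hypothetical and are not taken from `infer_from_json.py`. Each word maps to a list of groups because a word can occur in more than one group in this file, for example `ព្រួស` appears in two adjacent groups.

```python
import json
from typing import Dict, List


def build_homophone_index(data: dict) -> Dict[str, List[List[str]]]:
    """Map every word to the homophone group(s) that contain it.

    Assumes the layout shown above: {"homophones": [[word, word, ...], ...]}.
    """
    index: Dict[str, List[List[str]]] = {}
    for group in data["homophones"]:
        for word in group:
            # setdefault keeps earlier groups when a word appears again.
            index.setdefault(word, []).append(group)
    return index


def load_homophone_index(path: str) -> Dict[str, List[List[str]]]:
    """Read the JSON file as UTF-8 (the entries are Khmer text) and index it."""
    with open(path, encoding="utf-8") as f:
        return build_homophone_index(json.load(f))
```

Calling `load_homophone_index("homophone_test.json")` would then let a script list the candidate homophones for any word in the test set; the path is an assumption about where the file is checked out.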

khmerhomophonecorrector/infer_from_json.py

ADDED

|

@@ -0,0 +1,270 @@

|
