Commit b1f1770

Parent(s): b7ea7c4

update sam2&&scripts

This view is limited to 50 files because it contains too many changes.

- app_lhm.py +23 -22

- sam2_configs/__init__.py +0 -0

- sam2_configs/sam2.1_hiera_l.yaml +120 -0

- third_party/sam2/.clang-format +85 -0

- third_party/sam2/.github/workflows/check_fmt.yml +17 -0

- third_party/sam2/.gitignore +11 -0

- third_party/sam2/.watchmanconfig +1 -0

- third_party/sam2/CODE_OF_CONDUCT.md +80 -0

- third_party/sam2/CONTRIBUTING.md +31 -0

- third_party/sam2/INSTALL.md +189 -0

- third_party/sam2/LICENSE +201 -0

- third_party/sam2/LICENSE_cctorch +29 -0

- third_party/sam2/MANIFEST.in +7 -0

- third_party/sam2/README.md +224 -0

- third_party/sam2/RELEASE_NOTES.md +27 -0

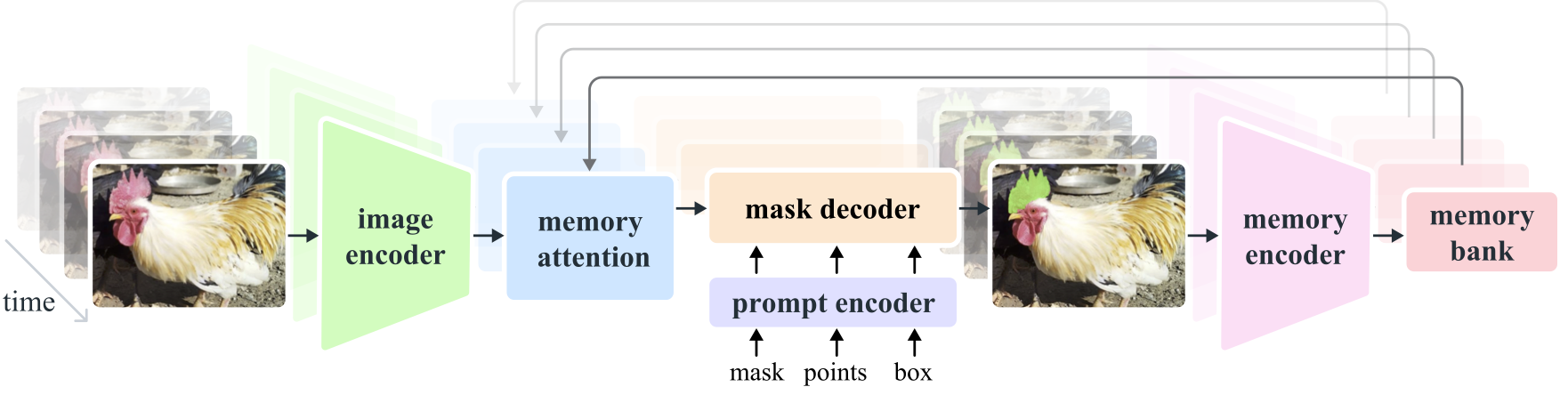

- third_party/sam2/assets/model_diagram.png +0 -0

- third_party/sam2/assets/sa_v_dataset.jpg +0 -0

- third_party/sam2/backend.Dockerfile +64 -0

- third_party/sam2/checkpoints/download_ckpts.sh +59 -0

- third_party/sam2/docker-compose.yaml +42 -0

- third_party/sam2/pyproject.toml +6 -0

- third_party/sam2/sam2/__init__.py +11 -0

- third_party/sam2/sam2/automatic_mask_generator.py +454 -0

- third_party/sam2/sam2/benchmark.py +92 -0

- third_party/sam2/sam2/build_sam.py +174 -0

- third_party/sam2/sam2/configs/sam2.1/sam2.1_hiera_b+.yaml +116 -0

- third_party/sam2/sam2/configs/sam2.1/sam2.1_hiera_l.yaml +120 -0

- third_party/sam2/sam2/configs/sam2.1/sam2.1_hiera_s.yaml +119 -0

- third_party/sam2/sam2/configs/sam2.1/sam2.1_hiera_t.yaml +121 -0

- third_party/sam2/sam2/configs/sam2.1_training/sam2.1_hiera_b+_MOSE_finetune.yaml +339 -0

- third_party/sam2/sam2/configs/sam2/sam2_hiera_b+.yaml +113 -0

- third_party/sam2/sam2/configs/sam2/sam2_hiera_l.yaml +117 -0

- third_party/sam2/sam2/configs/sam2/sam2_hiera_s.yaml +116 -0

- third_party/sam2/sam2/configs/sam2/sam2_hiera_t.yaml +118 -0

- third_party/sam2/sam2/csrc/connected_components.cu +289 -0

- third_party/sam2/sam2/modeling/__init__.py +5 -0

- third_party/sam2/sam2/modeling/backbones/__init__.py +5 -0

- third_party/sam2/sam2/modeling/backbones/hieradet.py +317 -0

- third_party/sam2/sam2/modeling/backbones/image_encoder.py +134 -0

- third_party/sam2/sam2/modeling/backbones/utils.py +93 -0

- third_party/sam2/sam2/modeling/memory_attention.py +169 -0

- third_party/sam2/sam2/modeling/memory_encoder.py +181 -0

- third_party/sam2/sam2/modeling/position_encoding.py +239 -0

- third_party/sam2/sam2/modeling/sam/__init__.py +5 -0

- third_party/sam2/sam2/modeling/sam/mask_decoder.py +295 -0

- third_party/sam2/sam2/modeling/sam/prompt_encoder.py +202 -0

- third_party/sam2/sam2/modeling/sam/transformer.py +311 -0

- third_party/sam2/sam2/modeling/sam2_base.py +909 -0

- third_party/sam2/sam2/modeling/sam2_utils.py +323 -0

- third_party/sam2/sam2/sam2_hiera_b+.yaml +1 -0

app_lhm.py

CHANGED

|

@@ -22,24 +22,24 @@ import base64

|

|

| 22 |

import subprocess

|

| 23 |

import os

|

| 24 |

|

| 25 |

-

def install_cuda_toolkit():

|

| 26 |

-

# CUDA_TOOLKIT_URL = "https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_520.61.05_linux.run"

|

| 27 |

-

# # CUDA_TOOLKIT_URL = "https://developer.download.nvidia.com/compute/cuda/12.2.0/local_installers/cuda_12.2.0_535.54.03_linux.run"

|

| 28 |

-

# CUDA_TOOLKIT_FILE = "/tmp/%s" % os.path.basename(CUDA_TOOLKIT_URL)

|

| 29 |

-

# subprocess.call(["wget", "-q", CUDA_TOOLKIT_URL, "-O", CUDA_TOOLKIT_FILE])

|

| 30 |

-

# subprocess.call(["chmod", "+x", CUDA_TOOLKIT_FILE])

|

| 31 |

-

# subprocess.call([CUDA_TOOLKIT_FILE, "--silent", "--toolkit"])

|

| 32 |

-

|

| 33 |

-

|

| 34 |

-

|

| 35 |

-

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

install_cuda_toolkit()

|

| 43 |

|

| 44 |

def launch_pretrained():

|

| 45 |

from huggingface_hub import snapshot_download, hf_hub_download

|

|

@@ -54,7 +54,8 @@ def launch_env_not_compile_with_cuda():

|

|

| 54 |

os.system("pip install chumpy")

|

| 55 |

os.system("pip uninstall -y basicsr")

|

| 56 |

os.system("pip install git+https://github.com/hitsz-zuoqi/BasicSR/")

|

| 57 |

-

os.system("pip install

|

|

|

|

| 58 |

# os.system("pip install git+https://github.com/ashawkey/diff-gaussian-rasterization/")

|

| 59 |

# os.system("pip install git+https://github.com/camenduru/simple-knn/")

|

| 60 |

os.system("pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/py310_cu121_pyt251/download.html")

|

|

@@ -78,8 +79,7 @@ def launch_env_not_compile_with_cuda():

|

|

| 78 |

# os.system("mv pytorch3d /usr/local/lib/python3.10/site-packages/")

|

| 79 |

# os.system("mv pytorch3d-0.7.8.dist-info /usr/local/lib/python3.10/site-packages/")

|

| 80 |

|

| 81 |

-

|

| 82 |

-

launch_env_not_compile_with_cuda()

|

| 83 |

# launch_env_compile_with_cuda()

|

| 84 |

|

| 85 |

def assert_input_image(input_image):

|

|

@@ -268,5 +268,6 @@ def launch_gradio_app():

|

|

| 268 |

|

| 269 |

|

| 270 |

if __name__ == '__main__':

|

| 271 |

-

|

|

|

|

| 272 |

launch_gradio_app()

|

|

|

|

| 22 |

import subprocess

|

| 23 |

import os

|

| 24 |

|

| 25 |

+

# def install_cuda_toolkit():

|

| 26 |

+

# # CUDA_TOOLKIT_URL = "https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_520.61.05_linux.run"

|

| 27 |

+

# # # CUDA_TOOLKIT_URL = "https://developer.download.nvidia.com/compute/cuda/12.2.0/local_installers/cuda_12.2.0_535.54.03_linux.run"

|

| 28 |

+

# # CUDA_TOOLKIT_FILE = "/tmp/%s" % os.path.basename(CUDA_TOOLKIT_URL)

|

| 29 |

+

# # subprocess.call(["wget", "-q", CUDA_TOOLKIT_URL, "-O", CUDA_TOOLKIT_FILE])

|

| 30 |

+

# # subprocess.call(["chmod", "+x", CUDA_TOOLKIT_FILE])

|

| 31 |

+

# # subprocess.call([CUDA_TOOLKIT_FILE, "--silent", "--toolkit"])

|

| 32 |

+

|

| 33 |

+

# os.environ["CUDA_HOME"] = "/usr/local/cuda"

|

| 34 |

+

# os.environ["PATH"] = "%s/bin:%s" % (os.environ["CUDA_HOME"], os.environ["PATH"])

|

| 35 |

+

# os.environ["LD_LIBRARY_PATH"] = "%s/lib:%s" % (

|

| 36 |

+

# os.environ["CUDA_HOME"],

|

| 37 |

+

# "" if "LD_LIBRARY_PATH" not in os.environ else os.environ["LD_LIBRARY_PATH"],

|

| 38 |

+

# )

|

| 39 |

+

# # Fix: arch_list[-1] += '+PTX'; IndexError: list index out of range

|

| 40 |

+

# os.environ["TORCH_CUDA_ARCH_LIST"] = "8.0;8.6"

|

| 41 |

+

|

| 42 |

+

# install_cuda_toolkit()

|

| 43 |

|

| 44 |

def launch_pretrained():

|

| 45 |

from huggingface_hub import snapshot_download, hf_hub_download

|

|

|

|

| 54 |

os.system("pip install chumpy")

|

| 55 |

os.system("pip uninstall -y basicsr")

|

| 56 |

os.system("pip install git+https://github.com/hitsz-zuoqi/BasicSR/")

|

| 57 |

+

os.system("pip install -e ./third_party/sam2")

|

| 58 |

+

# os.system("pip install git+https://github.com/hitsz-zuoqi/sam2/")

|

| 59 |

# os.system("pip install git+https://github.com/ashawkey/diff-gaussian-rasterization/")

|

| 60 |

# os.system("pip install git+https://github.com/camenduru/simple-knn/")

|

| 61 |

os.system("pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/py310_cu121_pyt251/download.html")

|

|

|

|

| 79 |

# os.system("mv pytorch3d /usr/local/lib/python3.10/site-packages/")

|

| 80 |

# os.system("mv pytorch3d-0.7.8.dist-info /usr/local/lib/python3.10/site-packages/")

|

| 81 |

|

| 82 |

+

|

|

|

|

| 83 |

# launch_env_compile_with_cuda()

|

| 84 |

|

| 85 |

def assert_input_image(input_image):

|

|

|

|

| 268 |

|

| 269 |

|

| 270 |

if __name__ == '__main__':

|

| 271 |

+

launch_pretrained()

|

| 272 |

+

launch_env_not_compile_with_cuda()

|

| 273 |

launch_gradio_app()

|
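
The `os.system("pip install ...")` calls in `app_lhm.py` discard pip's exit status, so a failed install of `./third_party/sam2` would only show up later as an import error. Below is a minimal sketch of a stricter variant. The helper names `build_pip_command` and `pip_install` are hypothetical and are not part of this commit.

```python
import subprocess
import sys


def build_pip_command(*args):
    """Return the argv list for running pip with the current interpreter."""
    # `sys.executable -m pip` targets the interpreter that runs the app,
    # which may differ from whatever `pip` resolves to on PATH.
    return [sys.executable, "-m", "pip", *args]


def pip_install(*args, dry_run=False):
    """Run `pip install <args>` and raise if pip exits with a non-zero status."""
    cmd = build_pip_command("install", *args)
    if dry_run:
        return cmd  # hand back the command without touching the environment
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd


if __name__ == "__main__":
    # Mirrors the editable install added in this commit, without executing it.
    print(pip_install("-e", "./third_party/sam2", dry_run=True))
```

Passing an argument list instead of a shell string also avoids shell quoting problems for URLs and paths.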

sam2_configs/__init__.py

ADDED

|

File without changes

|

sam2_configs/sam2.1_hiera_l.yaml

ADDED

|

@@ -0,0 +1,120 @@

|

| 1 |

+

# @package _global_

|

| 2 |

+

|

| 3 |

+

# Model

|

| 4 |

+

model:

|

| 5 |

+

_target_: sam2.modeling.sam2_base.SAM2Base

|

| 6 |

+

image_encoder:

|

| 7 |

+

_target_: sam2.modeling.backbones.image_encoder.ImageEncoder

|

| 8 |

+

scalp: 1

|

| 9 |

+

trunk:

|

| 10 |

+

_target_: sam2.modeling.backbones.hieradet.Hiera

|

| 11 |

+

embed_dim: 144

|

| 12 |

+

num_heads: 2

|

| 13 |

+

stages: [2, 6, 36, 4]

|

| 14 |

+

global_att_blocks: [23, 33, 43]

|

| 15 |

+

window_pos_embed_bkg_spatial_size: [7, 7]

|

| 16 |

+

window_spec: [8, 4, 16, 8]

|

| 17 |

+

neck:

|

| 18 |

+

_target_: sam2.modeling.backbones.image_encoder.FpnNeck

|

| 19 |

+

position_encoding:

|

| 20 |

+

_target_: sam2.modeling.position_encoding.PositionEmbeddingSine

|

| 21 |

+

num_pos_feats: 256

|

| 22 |

+

normalize: true

|

| 23 |

+

scale: null

|

| 24 |

+

temperature: 10000

|

| 25 |

+

d_model: 256

|

| 26 |

+

backbone_channel_list: [1152, 576, 288, 144]

|

| 27 |

+

fpn_top_down_levels: [2, 3] # output level 0 and 1 directly use the backbone features

|

| 28 |

+

fpn_interp_model: nearest

|

| 29 |

+

|

| 30 |

+

memory_attention:

|

| 31 |

+

_target_: sam2.modeling.memory_attention.MemoryAttention

|

| 32 |

+

d_model: 256

|

| 33 |

+

pos_enc_at_input: true

|

| 34 |

+

layer:

|

| 35 |

+

_target_: sam2.modeling.memory_attention.MemoryAttentionLayer

|

| 36 |

+

activation: relu

|

| 37 |

+

dim_feedforward: 2048

|

| 38 |

+

dropout: 0.1

|

| 39 |

+

pos_enc_at_attn: false

|

| 40 |

+

self_attention:

|

| 41 |

+

_target_: sam2.modeling.sam.transformer.RoPEAttention

|

| 42 |

+

rope_theta: 10000.0

|

| 43 |

+

feat_sizes: [32, 32]

|

| 44 |

+

embedding_dim: 256

|

| 45 |

+

num_heads: 1

|

| 46 |

+

downsample_rate: 1

|

| 47 |

+

dropout: 0.1

|

| 48 |

+

d_model: 256

|

| 49 |

+

pos_enc_at_cross_attn_keys: true

|

| 50 |

+

pos_enc_at_cross_attn_queries: false

|

| 51 |

+

cross_attention:

|

| 52 |

+

_target_: sam2.modeling.sam.transformer.RoPEAttention

|

| 53 |

+

rope_theta: 10000.0

|

| 54 |

+

feat_sizes: [32, 32]

|

| 55 |

+

rope_k_repeat: True

|

| 56 |

+

embedding_dim: 256

|

| 57 |

+

num_heads: 1

|

| 58 |

+

downsample_rate: 1

|

| 59 |

+

dropout: 0.1

|

| 60 |

+

kv_in_dim: 64

|

| 61 |

+

num_layers: 4

|

| 62 |

+

|

| 63 |

+

memory_encoder:

|

| 64 |

+

_target_: sam2.modeling.memory_encoder.MemoryEncoder

|

| 65 |

+

out_dim: 64

|

| 66 |

+

position_encoding:

|

| 67 |

+

_target_: sam2.modeling.position_encoding.PositionEmbeddingSine

|

| 68 |

+

num_pos_feats: 64

|

| 69 |

+

normalize: true

|

| 70 |

+

scale: null

|

| 71 |

+

temperature: 10000

|

| 72 |

+

mask_downsampler:

|

| 73 |

+

_target_: sam2.modeling.memory_encoder.MaskDownSampler

|

| 74 |

+

kernel_size: 3

|

| 75 |

+

stride: 2

|

| 76 |

+

padding: 1

|

| 77 |

+

fuser:

|

| 78 |

+

_target_: sam2.modeling.memory_encoder.Fuser

|

| 79 |

+

layer:

|

| 80 |

+

_target_: sam2.modeling.memory_encoder.CXBlock

|

| 81 |

+

dim: 256

|

| 82 |

+

kernel_size: 7

|

| 83 |

+

padding: 3

|

| 84 |

+

layer_scale_init_value: 1e-6

|

| 85 |

+

use_dwconv: True # depth-wise convs

|

| 86 |

+

num_layers: 2

|

| 87 |

+

|

| 88 |

+

num_maskmem: 7

|

| 89 |

+

image_size: 1024

|

| 90 |

+

# apply scaled sigmoid on mask logits for memory encoder, and directly feed input mask as output mask

|

| 91 |

+

sigmoid_scale_for_mem_enc: 20.0

|

| 92 |

+

sigmoid_bias_for_mem_enc: -10.0

|

| 93 |

+

use_mask_input_as_output_without_sam: true

|

| 94 |

+

# Memory

|

| 95 |

+

directly_add_no_mem_embed: true

|

| 96 |

+

no_obj_embed_spatial: true

|

| 97 |

+

# use high-resolution feature map in the SAM mask decoder

|

| 98 |

+

use_high_res_features_in_sam: true

|

| 99 |

+

# output 3 masks on the first click on initial conditioning frames

|

| 100 |

+

multimask_output_in_sam: true

|

| 101 |

+

# SAM heads

|

| 102 |

+

iou_prediction_use_sigmoid: True

|

| 103 |

+

# cross-attend to object pointers from other frames (based on SAM output tokens) in the encoder

|

| 104 |

+

use_obj_ptrs_in_encoder: true

|

| 105 |

+

add_tpos_enc_to_obj_ptrs: true

|

| 106 |

+

proj_tpos_enc_in_obj_ptrs: true

|

| 107 |

+

use_signed_tpos_enc_to_obj_ptrs: true

|

| 108 |

+

only_obj_ptrs_in_the_past_for_eval: true

|

| 109 |

+

# object occlusion prediction

|

| 110 |

+

pred_obj_scores: true

|

| 111 |

+

pred_obj_scores_mlp: true

|

| 112 |

+

fixed_no_obj_ptr: true

|

| 113 |

+

# multimask tracking settings

|

| 114 |

+

multimask_output_for_tracking: true

|

| 115 |

+

use_multimask_token_for_obj_ptr: true

|

| 116 |

+

multimask_min_pt_num: 0

|

| 117 |

+

multimask_max_pt_num: 1

|

| 118 |

+

use_mlp_for_obj_ptr_proj: true

|

| 119 |

+

# Compilation flag

|

| 120 |

+

compile_image_encoder: False

|
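
Several values in `sam2_configs/sam2.1_hiera_l.yaml` appear to be tied to each other: the neck's `backbone_channel_list` reads like the trunk's `embed_dim` doubled once per stage (listed deepest stage first), and every `global_att_blocks` index must fall inside the total block count given by `stages`. The sketch below checks those relationships on values copied from the file. The doubling rule is inferred from the numbers shown here, not from a documented contract, and the function names are hypothetical.

```python
# Values copied from sam2_configs/sam2.1_hiera_l.yaml as shown in this diff.
TRUNK = {"embed_dim": 144, "stages": [2, 6, 36, 4], "global_att_blocks": [23, 33, 43]}
NECK = {"d_model": 256, "backbone_channel_list": [1152, 576, 288, 144]}


def stage_channels(embed_dim, num_stages):
    """Channel width per stage, assuming the width doubles at every stage."""
    return [embed_dim * 2**i for i in range(num_stages)]


def check_config(trunk, neck):
    """Return simple consistency facts about the trunk and neck settings."""
    total_blocks = sum(trunk["stages"])
    # The neck lists channels from the deepest stage to the shallowest.
    expected = stage_channels(trunk["embed_dim"], len(trunk["stages"]))[::-1]
    return {
        "total_blocks": total_blocks,
        "channels_match": expected == neck["backbone_channel_list"],
        "global_blocks_in_range": all(
            0 <= b < total_blocks for b in trunk["global_att_blocks"]
        ),
    }


if __name__ == "__main__":
    print(check_config(TRUNK, NECK))
```

A check like this can be useful when editing a copied config by hand, since a mismatched channel list would otherwise only fail at model construction time.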

third_party/sam2/.clang-format

ADDED

|

@@ -0,0 +1,85 @@

|

| 1 |

+

AccessModifierOffset: -1

|

| 2 |

+

AlignAfterOpenBracket: AlwaysBreak

|

| 3 |

+

AlignConsecutiveAssignments: false

|

| 4 |

+

AlignConsecutiveDeclarations: false

|

| 5 |

+

AlignEscapedNewlinesLeft: true

|

| 6 |

+

AlignOperands: false

|

| 7 |

+

AlignTrailingComments: false

|

| 8 |

+

AllowAllParametersOfDeclarationOnNextLine: false

|

| 9 |

+

AllowShortBlocksOnASingleLine: false

|

| 10 |

+

AllowShortCaseLabelsOnASingleLine: false

|

| 11 |

+

AllowShortFunctionsOnASingleLine: Empty

|

| 12 |

+

AllowShortIfStatementsOnASingleLine: false

|

| 13 |

+

AllowShortLoopsOnASingleLine: false

|

| 14 |

+

AlwaysBreakAfterReturnType: None

|

| 15 |

+

AlwaysBreakBeforeMultilineStrings: true

|

| 16 |

+

AlwaysBreakTemplateDeclarations: true

|

| 17 |

+

BinPackArguments: false

|

| 18 |

+

BinPackParameters: false

|

| 19 |

+

BraceWrapping:

|

| 20 |

+

AfterClass: false

|

| 21 |

+

AfterControlStatement: false

|

| 22 |

+

AfterEnum: false

|

| 23 |

+

AfterFunction: false

|

| 24 |

+

AfterNamespace: false

|

| 25 |

+

AfterObjCDeclaration: false

|

| 26 |

+

AfterStruct: false

|

| 27 |

+

AfterUnion: false

|

| 28 |

+

BeforeCatch: false

|

| 29 |

+

BeforeElse: false

|

| 30 |

+

IndentBraces: false

|

| 31 |

+

BreakBeforeBinaryOperators: None

|

| 32 |

+

BreakBeforeBraces: Attach

|

| 33 |

+

BreakBeforeTernaryOperators: true

|

| 34 |

+

BreakConstructorInitializersBeforeComma: false

|

| 35 |

+

BreakAfterJavaFieldAnnotations: false

|

| 36 |

+

BreakStringLiterals: false

|

| 37 |

+

ColumnLimit: 80

|

| 38 |

+

CommentPragmas: '^ IWYU pragma:'

|

| 39 |

+

ConstructorInitializerAllOnOneLineOrOnePerLine: true

|

| 40 |

+

ConstructorInitializerIndentWidth: 4

|

| 41 |

+

ContinuationIndentWidth: 4

|

| 42 |

+

Cpp11BracedListStyle: true

|

| 43 |

+

DerivePointerAlignment: false

|

| 44 |

+

DisableFormat: false

|

| 45 |

+

ForEachMacros: [ FOR_EACH, FOR_EACH_R, FOR_EACH_RANGE, ]

|

| 46 |

+

IncludeCategories:

|

| 47 |

+

- Regex: '^<.*\.h(pp)?>'

|

| 48 |

+

Priority: 1

|

| 49 |

+

- Regex: '^<.*'

|

| 50 |

+

Priority: 2

|

| 51 |

+

- Regex: '.*'

|

| 52 |

+

Priority: 3

|

| 53 |

+

IndentCaseLabels: true

|

| 54 |

+

IndentWidth: 2

|

| 55 |

+

IndentWrappedFunctionNames: false

|

| 56 |

+

KeepEmptyLinesAtTheStartOfBlocks: false

|

| 57 |

+

MacroBlockBegin: ''

|

| 58 |

+

MacroBlockEnd: ''

|

| 59 |

+

MaxEmptyLinesToKeep: 1

|

| 60 |

+

NamespaceIndentation: None

|

| 61 |

+

ObjCBlockIndentWidth: 2

|

| 62 |

+

ObjCSpaceAfterProperty: false

|

| 63 |

+

ObjCSpaceBeforeProtocolList: false

|

| 64 |

+

PenaltyBreakBeforeFirstCallParameter: 1

|

| 65 |

+

PenaltyBreakComment: 300

|

| 66 |

+

PenaltyBreakFirstLessLess: 120

|

| 67 |

+

PenaltyBreakString: 1000

|

| 68 |

+

PenaltyExcessCharacter: 1000000

|

| 69 |

+

PenaltyReturnTypeOnItsOwnLine: 200

|

| 70 |

+

PointerAlignment: Left

|

| 71 |

+

ReflowComments: true

|

| 72 |

+

SortIncludes: true

|

| 73 |

+

SpaceAfterCStyleCast: false

|

| 74 |

+

SpaceBeforeAssignmentOperators: true

|

| 75 |

+

SpaceBeforeParens: ControlStatements

|

| 76 |

+

SpaceInEmptyParentheses: false

|

| 77 |

+

SpacesBeforeTrailingComments: 1

|

| 78 |

+

SpacesInAngles: false

|

| 79 |

+

SpacesInContainerLiterals: true

|

| 80 |

+

SpacesInCStyleCastParentheses: false

|

| 81 |

+

SpacesInParentheses: false

|

| 82 |

+

SpacesInSquareBrackets: false

|

| 83 |

+

Standard: Cpp11

|

| 84 |

+

TabWidth: 8

|

| 85 |

+

UseTab: Never

|

third_party/sam2/.github/workflows/check_fmt.yml

ADDED

|

@@ -0,0 +1,17 @@

|

| 1 |

+

name: SAM2/fmt

|

| 2 |

+

on:

|

| 3 |

+

pull_request:

|

| 4 |

+

branches:

|

| 5 |

+

- main

|

| 6 |

+

jobs:

|

| 7 |

+

ufmt_check:

|

| 8 |

+

runs-on: ubuntu-latest

|

| 9 |

+

steps:

|

| 10 |

+

- name: Check formatting

|

| 11 |

+

uses: omnilib/ufmt@action-v1

|

| 12 |

+

with:

|

| 13 |

+

path: sam2 tools

|

| 14 |

+

version: "2.0.0b2"

|

| 15 |

+

python-version: "3.10"

|

| 16 |

+

black-version: "24.2.0"

|

| 17 |

+

usort-version: "1.0.2"

|

third_party/sam2/.gitignore

ADDED

|

@@ -0,0 +1,11 @@

|

| 1 |

+

.vscode/

|

| 2 |

+

.DS_Store

|

| 3 |

+

__pycache__/

|

| 4 |

+

*-checkpoint.ipynb

|

| 5 |

+

.venv

|

| 6 |

+

*.egg*

|

| 7 |

+

build/*

|

| 8 |

+

_C.*

|

| 9 |

+

outputs/*

|

| 10 |

+

checkpoints/*.pt

|

| 11 |

+

demo/backend/checkpoints/*.pt

|

third_party/sam2/.watchmanconfig

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

{}

|

third_party/sam2/CODE_OF_CONDUCT.md

ADDED

|

@@ -0,0 +1,80 @@

|

| 1 |

+

# Code of Conduct

|

| 2 |

+

|

| 3 |

+

## Our Pledge

|

| 4 |

+

|

| 5 |

+

In the interest of fostering an open and welcoming environment, we as

|

| 6 |

+

contributors and maintainers pledge to make participation in our project and

|

| 7 |

+

our community a harassment-free experience for everyone, regardless of age, body

|

| 8 |

+

size, disability, ethnicity, sex characteristics, gender identity and expression,

|

| 9 |

+

level of experience, education, socio-economic status, nationality, personal

|

| 10 |

+

appearance, race, religion, or sexual identity and orientation.

|

| 11 |

+

|

| 12 |

+

## Our Standards

|

| 13 |

+

|

| 14 |

+

Examples of behavior that contributes to creating a positive environment

|

| 15 |

+

include:

|

| 16 |

+

|

| 17 |

+

* Using welcoming and inclusive language

|

| 18 |

+

* Being respectful of differing viewpoints and experiences

|

| 19 |

+

* Gracefully accepting constructive criticism

|

| 20 |

+

* Focusing on what is best for the community

|

| 21 |

+

* Showing empathy towards other community members

|

| 22 |

+

|

| 23 |

+

Examples of unacceptable behavior by participants include:

|

| 24 |

+

|

| 25 |

+

* The use of sexualized language or imagery and unwelcome sexual attention or

|

| 26 |

+

advances

|

| 27 |

+

* Trolling, insulting/derogatory comments, and personal or political attacks

|

| 28 |

+

* Public or private harassment

|

| 29 |

+

* Publishing others' private information, such as a physical or electronic

|

| 30 |

+

address, without explicit permission

|

| 31 |

+

* Other conduct which could reasonably be considered inappropriate in a

|

| 32 |

+

professional setting

|

| 33 |

+

|

| 34 |

+

## Our Responsibilities

|

| 35 |

+

|

| 36 |

+

Project maintainers are responsible for clarifying the standards of acceptable

|

| 37 |

+

behavior and are expected to take appropriate and fair corrective action in

|

| 38 |

+

response to any instances of unacceptable behavior.

|

| 39 |

+

|

| 40 |

+

Project maintainers have the right and responsibility to remove, edit, or

|

| 41 |

+

reject comments, commits, code, wiki edits, issues, and other contributions

|

| 42 |

+

that are not aligned to this Code of Conduct, or to ban temporarily or

|

| 43 |

+

permanently any contributor for other behaviors that they deem inappropriate,

|

| 44 |

+

threatening, offensive, or harmful.

|

| 45 |

+

|

| 46 |

+

## Scope

|

| 47 |

+

|

| 48 |

+

This Code of Conduct applies within all project spaces, and it also applies when

|

| 49 |

+

an individual is representing the project or its community in public spaces.

|

| 50 |

+

Examples of representing a project or community include using an official

|

| 51 |

+

project e-mail address, posting via an official social media account, or acting

|

| 52 |

+

as an appointed representative at an online or offline event. Representation of

|

| 53 |

+

a project may be further defined and clarified by project maintainers.

|

| 54 |

+

|

| 55 |

+

This Code of Conduct also applies outside the project spaces when there is a

|

| 56 |

+

reasonable belief that an individual's behavior may have a negative impact on

|

| 57 |

+

the project or its community.

|

| 58 |

+

|

| 59 |

+

## Enforcement

|

| 60 |

+

|

| 61 |

+

Instances of abusive, harassing, or otherwise unacceptable behavior may be

|

| 62 |

+

reported by contacting the project team at <[email protected]>. All

|

| 63 |

+

complaints will be reviewed and investigated and will result in a response that

|

| 64 |

+

is deemed necessary and appropriate to the circumstances. The project team is

|

| 65 |

+

obligated to maintain confidentiality with regard to the reporter of an incident.

|

| 66 |

+

Further details of specific enforcement policies may be posted separately.

|

| 67 |

+

|

| 68 |

+

Project maintainers who do not follow or enforce the Code of Conduct in good

|

| 69 |

+

faith may face temporary or permanent repercussions as determined by other

|

| 70 |

+

members of the project's leadership.

|

| 71 |

+

|

| 72 |

+

## Attribution

|

| 73 |

+

|

| 74 |

+

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,

|

| 75 |

+

available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

|

| 76 |

+

|

| 77 |

+

[homepage]: https://www.contributor-covenant.org

|

| 78 |

+

|

| 79 |

+

For answers to common questions about this code of conduct, see

|

| 80 |

+

https://www.contributor-covenant.org/faq

|

third_party/sam2/CONTRIBUTING.md

ADDED

|

@@ -0,0 +1,31 @@

|

| 1 |

+

# Contributing to segment-anything

|

| 2 |

+

We want to make contributing to this project as easy and transparent as

|

| 3 |

+

possible.

|

| 4 |

+

|

| 5 |

+

## Pull Requests

|

| 6 |

+

We actively welcome your pull requests.

|

| 7 |

+

|

| 8 |

+

1. Fork the repo and create your branch from `main`.

|

| 9 |

+

2. If you've added code that should be tested, add tests.

|

| 10 |

+

3. If you've changed APIs, update the documentation.

|

| 11 |

+

4. Ensure the test suite passes.

|

| 12 |

+

5. Make sure your code lints, using the `ufmt format` command. Linting requires `black==24.2.0`, `usort==1.0.2`, and `ufmt==2.0.0b2`, which can be installed via `pip install -e ".[dev]"`.

|

| 13 |

+

6. If you haven't already, complete the Contributor License Agreement ("CLA").

|

| 14 |

+

|

| 15 |

+

## Contributor License Agreement ("CLA")

|

| 16 |

+

In order to accept your pull request, we need you to submit a CLA. You only need

|

| 17 |

+

to do this once to work on any of Facebook's open source projects.

|

| 18 |

+

|

| 19 |

+

Complete your CLA here: <https://code.facebook.com/cla>

|

| 20 |

+

|

| 21 |

+

## Issues

|

| 22 |

+

We use GitHub issues to track public bugs. Please ensure your description is

|

| 23 |

+

clear and has sufficient instructions to be able to reproduce the issue.

|

| 24 |

+

|

| 25 |

+

Facebook has a [bounty program](https://www.facebook.com/whitehat/) for the safe

|

| 26 |

+

disclosure of security bugs. In those cases, please go through the process

|

| 27 |

+

outlined on that page and do not file a public issue.

|

| 28 |

+

|

| 29 |

+

## License

|

| 30 |

+

By contributing to segment-anything, you agree that your contributions will be licensed

|

| 31 |

+

under the LICENSE file in the root directory of this source tree.

|

third_party/sam2/INSTALL.md

ADDED

|

@@ -0,0 +1,189 @@

|

| 1 |

+

## Installation

|

| 2 |

+

|

| 3 |

+

### Requirements

|

| 4 |

+

|

| 5 |

+

- Linux with Python ≥ 3.10, PyTorch ≥ 2.5.1 and [torchvision](https://github.com/pytorch/vision/) that matches the PyTorch installation. Install them together at https://pytorch.org to ensure this.

|

| 6 |

+

* Note older versions of Python or PyTorch may also work. However, the versions above are strongly recommended to provide all features such as `torch.compile`.

|

| 7 |

+

- [CUDA toolkits](https://developer.nvidia.com/cuda-toolkit-archive) that match the CUDA version for your PyTorch installation. This should typically be CUDA 12.1 if you follow the default installation command.

|

| 8 |

+

- If you are installing on Windows, it's strongly recommended to use [Windows Subsystem for Linux (WSL)](https://learn.microsoft.com/en-us/windows/wsl/install) with Ubuntu.

|

| 9 |

+

|

| 10 |

+

Then, install SAM 2 from the root of this repository via

|

| 11 |

+

```bash

|

| 12 |

+

pip install -e ".[notebooks]"

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

Note that you may skip building the SAM 2 CUDA extension during installation via environment variable `SAM2_BUILD_CUDA=0`, as follows:

|

| 16 |

+

```bash

|

| 17 |

+

# skip the SAM 2 CUDA extension

|

| 18 |

+

SAM2_BUILD_CUDA=0 pip install -e ".[notebooks]"

|

| 19 |

+

```

|

| 20 |

+

This would also skip the post-processing step at runtime (removing small holes and sprinkles in the output masks, which requires the CUDA extension), but shouldn't affect the results in most cases.
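
For a launcher script that installs the vendored copy from Python (as `app_lhm.py` does with `pip install -e ./third_party/sam2`), the same `SAM2_BUILD_CUDA=0` switch can be passed through the subprocess environment. This is a sketch under assumptions: the function names are hypothetical, and when the CUDA build is wanted the variable is simply left unset so that the package's own default applies.

```python
import os
import subprocess
import sys


def sam2_install_env(build_cuda=False, base_env=None):
    """Copy the environment and set or clear SAM2_BUILD_CUDA for pip."""
    env = dict(os.environ if base_env is None else base_env)
    if build_cuda:
        env.pop("SAM2_BUILD_CUDA", None)  # fall back to the package default
    else:
        env["SAM2_BUILD_CUDA"] = "0"  # skip the CUDA extension, per INSTALL.md
    return env


def install_sam2(path="./third_party/sam2", build_cuda=False, dry_run=False):
    """Editable-install SAM 2 from `path`; return the command and environment."""
    cmd = [sys.executable, "-m", "pip", "install", "-e", path]
    env = sam2_install_env(build_cuda)
    if not dry_run:
        subprocess.run(cmd, env=env, check=True)  # raises if pip fails
    return cmd, env


if __name__ == "__main__":
    command, environment = install_sam2(dry_run=True)
    print(command, environment["SAM2_BUILD_CUDA"])
```

Copying the environment rather than mutating `os.environ` keeps the setting local to the install step.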

|

| 21 |

+

|

| 22 |

+

### Building the SAM 2 CUDA extension

|

| 23 |

+

|

| 24 |

+

By default, we allow the installation to proceed even if the SAM 2 CUDA extension fails to build. (In this case, the build errors are hidden unless using `-v` for verbose output in `pip install`.)

|

| 25 |

+

|

| 26 |

+

If you see a message like `Skipping the post-processing step due to the error above` at runtime or `Failed to build the SAM 2 CUDA extension due to the error above` during installation, it indicates that the SAM 2 CUDA extension failed to build in your environment. In this case, **you can still use SAM 2 for both image and video applications**. The post-processing step (removing small holes and sprinkles in the output masks) will be skipped, but this shouldn't affect the results in most cases.

|

| 27 |

+

|

| 28 |

+

If you would like to enable this post-processing step, you can reinstall SAM 2 on a GPU machine with environment variable `SAM2_BUILD_ALLOW_ERRORS=0` to force building the CUDA extension (and raise errors if it fails to build), as follows

|

| 29 |

+

```bash

|

| 30 |

+

pip uninstall -y SAM-2 && \

|

| 31 |

+

rm -f ./sam2/*.so && \

|

| 32 |

+

SAM2_BUILD_ALLOW_ERRORS=0 pip install -v -e ".[notebooks]"

|

| 33 |

+

```

|

| 34 |

+

|

| 35 |

+

Note that PyTorch needs to be installed before building the SAM 2 CUDA extension. It's also necessary to install the [CUDA toolkit](https://developer.nvidia.com/cuda-toolkit-archive) version that matches the CUDA version of your PyTorch installation. (This should typically be CUDA 12.1 if you follow the default installation command.) After installing the CUDA toolkit, you can check its version via `nvcc --version`.
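
The version comparison can also be scripted. The following is a rough sketch under stated assumptions: the helper names are hypothetical, it relies on `nvcc --version` printing a `release X.Y` token, and it treats only an exact major.minor match with `torch.version.cuda` as compatible, which is a deliberately conservative choice.

```python
import re
import subprocess
from typing import Optional


def parse_nvcc_release(output: str) -> Optional[str]:
    """Extract the 'major.minor' release from `nvcc --version` output."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    return f"{match.group(1)}.{match.group(2)}" if match else None


def toolkit_matches(nvcc_release: Optional[str], torch_cuda: Optional[str]) -> bool:
    """Compare major.minor of the toolkit with PyTorch's CUDA build version."""
    if nvcc_release is None or torch_cuda is None:
        return False
    return nvcc_release.split(".")[:2] == torch_cuda.split(".")[:2]


def installed_nvcc_release() -> Optional[str]:
    """Run `nvcc --version`; return None if nvcc is missing or fails."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(result.stdout)


try:
    import torch

    torch_cuda = torch.version.cuda  # None for CPU-only PyTorch builds
except ImportError:
    torch_cuda = None

nvcc_release = installed_nvcc_release()
print("nvcc:", nvcc_release, "| PyTorch CUDA:", torch_cuda)
print("match:", toolkit_matches(nvcc_release, torch_cuda))
```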

|

| 36 |

+

|

| 37 |

+

Please check the section below on common installation issues if the CUDA extension fails to build during installation or load at runtime.

|

| 38 |

+

|

| 39 |

+

### Common Installation Issues

|

| 40 |

+

|

| 41 |

+

Click each issue for its solutions:

|

| 42 |

+

|

| 43 |

+

<details>

|

| 44 |

+

<summary>

|

| 45 |

+

I got `ImportError: cannot import name '_C' from 'sam2'`

|

| 46 |

+

</summary>

|

| 47 |

+

<br/>

|

| 48 |

+

|

| 49 |

+

This is usually because you haven't run the `pip install -e ".[notebooks]"` step above or the installation failed. Please install SAM 2 first, and see the other issues if your installation fails.

|

| 50 |

+

|

| 51 |

+

In some systems, you may need to run `python setup.py build_ext --inplace` in the SAM 2 repo root as suggested in https://github.com/facebookresearch/sam2/issues/77.

|

| 52 |

+

</details>

|

| 53 |

+

|

| 54 |

+

<details>

|

| 55 |

+

<summary>

|

| 56 |

+

I got `MissingConfigException: Cannot find primary config 'configs/sam2.1/sam2.1_hiera_l.yaml'`

|

| 57 |

+

</summary>

|

| 58 |

+

<br/>

|

| 59 |

+

|

| 60 |

+

This is usually because you haven't run the `pip install -e .` step above, so `sam2` isn't in your Python's `sys.path`. Please run this installation step. In case it still fails after the installation step, you may try manually adding the root of this repo to `PYTHONPATH` via

|

| 61 |

+

```bash

|

| 62 |

+

export SAM2_REPO_ROOT=/path/to/sam2 # path to this repo

|

| 63 |

+

export PYTHONPATH="${SAM2_REPO_ROOT}:${PYTHONPATH}"

|

| 64 |

+

```

|

| 65 |

+

to manually add `sam2_configs` into your Python's `sys.path`.

|

| 66 |

+

|

| 67 |

+

</details>

|

| 68 |

+

|

| 69 |

+

<details>

|

| 70 |

+

<summary>

|

| 71 |

+

I got `RuntimeError: Error(s) in loading state_dict for SAM2Base` when loading the new SAM 2.1 checkpoints

|

| 72 |

+

</summary>

|

| 73 |

+

<br/>

|

| 74 |

+

|

| 75 |

+

This is likely because you have installed a previous version of this repo, which doesn't have the new modules to support the SAM 2.1 checkpoints yet. Please try the following steps:

|

| 76 |

+

|

| 77 |

+

1. pull the latest code from the `main` branch of this repo

|

| 78 |

+

2. run `pip uninstall -y SAM-2` to uninstall any previous installations

|

| 79 |

+

3. then install the latest repo again using `pip install -e ".[notebooks]"`

|

| 80 |

+

|

| 81 |

+

If the steps above still don't resolve the error, please try running the following in your Python environment

|

| 82 |

+

```python

|

| 83 |

+

from sam2.modeling import sam2_base

|

| 84 |

+

|

| 85 |

+

print(sam2_base.__file__)

|

| 86 |

+

```

|

| 87 |

+

and check whether the content in the printed local path of `sam2/modeling/sam2_base.py` matches the latest one in https://github.com/facebookresearch/sam2/blob/main/sam2/modeling/sam2_base.py (e.g. whether your local file has `no_obj_embed_spatial`) to identify if you're still using a previous installation.
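
The same check can be automated. This is a minimal sketch, assuming that the presence of the string `no_obj_embed_spatial` is a sufficient marker of a SAM 2.1-capable `sam2_base.py`; the helper name `file_mentions` is hypothetical.

```python
from pathlib import Path


def file_mentions(path: str, needle: str) -> bool:
    """Return True if the text file at `path` contains `needle`."""
    return needle in Path(path).read_text(encoding="utf-8", errors="replace")


try:
    from sam2.modeling import sam2_base
except ImportError:
    print("sam2 is not importable from this environment.")
else:
    current = file_mentions(sam2_base.__file__, "no_obj_embed_spatial")
    status = "looks current" if current else "looks like an older installation"
    print(sam2_base.__file__, status)
```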

|

| 88 |

+

|

| 89 |

+

</details>

|

| 90 |

+

|

| 91 |

+

<details>

|

| 92 |

+

<summary>

|

| 93 |

+

My installation failed with `CUDA_HOME environment variable is not set`

|

| 94 |

+

</summary>

|

| 95 |

+

<br/>

|

| 96 |

+

|

| 97 |

+

This usually happens because the installation step cannot find the CUDA toolkit (which contains the NVCC compiler) needed to build a custom CUDA kernel in SAM 2. Please install the [CUDA toolkit](https://developer.nvidia.com/cuda-toolkit-archive) version that matches the CUDA version of your PyTorch installation. If the error persists after installing the CUDA toolkit, you may explicitly specify `CUDA_HOME` via

|

| 98 |

+

```

|

| 99 |

+

export CUDA_HOME=/usr/local/cuda # change to your CUDA toolkit path

|

| 100 |

+

```

|

| 101 |

+

and rerun the installation.

|

| 102 |

+

|

| 103 |

+

Also, you should make sure

|

| 104 |

+

```

|

| 105 |

+

python -c 'import torch; from torch.utils.cpp_extension import CUDA_HOME; print(torch.cuda.is_available(), CUDA_HOME)'

|

| 106 |

+

```

|

| 107 |

+

print `(True, a directory with cuda)` to verify that the CUDA toolkits are correctly set up.
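
If PyTorch is not importable yet, a lighter check is to confirm that `CUDA_HOME` actually contains the compiler. The sketch below assumes the conventional layout in which `nvcc` lives under `$CUDA_HOME/bin`; `find_nvcc` is a hypothetical helper name.

```python
import os
from pathlib import Path
from typing import Optional


def find_nvcc(cuda_home: Optional[str]) -> Optional[Path]:
    """Return the nvcc path under `cuda_home`, or None if it is missing."""
    if not cuda_home:
        return None
    bin_dir = Path(cuda_home) / "bin"
    for name in ("nvcc", "nvcc.exe"):
        candidate = bin_dir / name
        if candidate.is_file():
            return candidate
    return None


cuda_home = os.environ.get("CUDA_HOME")
print("CUDA_HOME:", cuda_home, "| nvcc:", find_nvcc(cuda_home))
```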

|

| 108 |

+

|

| 109 |

+

If you are still having problems after verifying that the CUDA toolkit is installed and the `CUDA_HOME` environment variable is set properly, you may have to add the `--no-build-isolation` flag to the pip command:

|

| 110 |

+

```

|

| 111 |

+

pip install --no-build-isolation -e .

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

</details>

|

| 115 |

+

|

| 116 |

+

<details>

|

| 117 |

+

<summary>

|

| 118 |

+

I got `undefined symbol: _ZN3c1015SmallVectorBaseIjE8grow_podEPKvmm` (or similar errors)

|

| 119 |

+

</summary>

|

| 120 |

+

<br/>

|

| 121 |

+

|

| 122 |

+

This usually happens because you have multiple versions of dependencies (PyTorch or CUDA) in your environment. During installation, the SAM 2 library is compiled against one version of a library, while at run time it links against another. This can happen if you have different versions of PyTorch or CUDA installed separately via `pip` or `conda`. You may delete one of the duplicates to keep only a single PyTorch and CUDA version.

|

| 123 |

+

|

| 124 |

+

In particular, if you have a PyTorch version lower than 2.5.1, it's recommended to upgrade to PyTorch 2.5.1 or higher first. Otherwise, the installation script will try to upgrade to the latest PyTorch using `pip`, which can sometimes lead to a duplicate PyTorch installation if you previously installed another PyTorch version using `conda`.

|

| 125 |

+

|

| 126 |

+

We have been building SAM 2 against PyTorch 2.5.1 internally. However, a few user comments (e.g. https://github.com/facebookresearch/sam2/issues/22, https://github.com/facebookresearch/sam2/issues/14) suggested that downgrading to PyTorch 2.1.0 might resolve this problem. In case the error persists, you may try changing the restriction from `torch>=2.5.1` to `torch==2.1.0` in both [`pyproject.toml`](pyproject.toml) and [`setup.py`](setup.py) to allow PyTorch 2.1.0.

|

| 127 |

+

</details>

|

| 128 |

+

|

| 129 |

+

<details>

|

| 130 |

+

<summary>

|

| 131 |

+

I got `CUDA error: no kernel image is available for execution on the device`

|

| 132 |

+

</summary>

|

| 133 |

+

<br/>

|

| 134 |

+

|

| 135 |

+

A possible cause is that the CUDA kernel was not compiled for your GPU's CUDA [capability](https://developer.nvidia.com/cuda-gpus). This could happen if the installation is done in an environment different from the runtime (e.g. in a Slurm system).

|

| 136 |

+

|

| 137 |

+

You can try pulling the latest code from the SAM 2 repo and running the following

|

| 138 |

+

```

|

| 139 |

+

export TORCH_CUDA_ARCH_LIST="9.0 8.0 8.6 8.9 7.0 7.2 7.5 6.0"

|

| 140 |

+

```

|

| 141 |

+

to manually specify the CUDA capability in the compilation target that matches your GPU.
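
To find the value that matches your own GPU, you can query PyTorch on the machine that will run SAM 2. The following is a sketch only: `format_arch_list` is a hypothetical helper, and it assumes PyTorch is installed and can see the target GPU(s).

```python
from typing import Iterable, Tuple


def format_arch_list(capabilities: Iterable[Tuple[int, int]]) -> str:
    """Build a TORCH_CUDA_ARCH_LIST value from (major, minor) pairs."""
    unique = sorted(set(capabilities))
    return " ".join(f"{major}.{minor}" for major, minor in unique)


try:
    import torch

    caps = [
        torch.cuda.get_device_capability(i)
        for i in range(torch.cuda.device_count())
    ]
except ImportError:
    caps = []

if caps:
    print(f'export TORCH_CUDA_ARCH_LIST="{format_arch_list(caps)}"')
else:
    print("No CUDA device visible; run this on the machine that will execute SAM 2.")
```

Run it on the runtime node (for example inside the Slurm job) rather than on the build node, since the two may have different GPUs.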

|

| 142 |

+

</details>

|

| 143 |

+

|

| 144 |

+

<details>

|

| 145 |

+

<summary>

|

| 146 |

+

I got `RuntimeError: No available kernel. Aborting execution.` (or similar errors)

|

| 147 |

+

</summary>

|

| 148 |

+

<br/>

|

| 149 |

+

|

| 150 |

+

This is probably because your machine doesn't have a GPU or a compatible PyTorch version for Flash Attention (see also https://discuss.pytorch.org/t/using-f-scaled-dot-product-attention-gives-the-error-runtimeerror-no-available-kernel-aborting-execution/180900 for a discussion on the PyTorch forum). You may be able to resolve this error by replacing the line

|

| 151 |

+

```python

|

| 152 |

+

OLD_GPU, USE_FLASH_ATTN, MATH_KERNEL_ON = get_sdpa_settings()

|

| 153 |

+

```

|

| 154 |

+

in [`sam2/modeling/sam/transformer.py`](sam2/modeling/sam/transformer.py) with

|

| 155 |

+

```python

|

| 156 |

+

OLD_GPU, USE_FLASH_ATTN, MATH_KERNEL_ON = True, True, True

|

| 157 |

+

```

|

| 158 |

+

to relax the attention kernel setting and use other kernels than Flash Attention.

|

| 159 |

+

</details>

|

| 160 |

+

|

| 161 |

+

<details>

|

| 162 |

+

<summary>

|

| 163 |

+

I got `Error compiling objects for extension`

|

| 164 |

+

</summary>

|

| 165 |

+

<br/>

|

| 166 |

+

|

| 167 |

+

You may see an error log like:

|

| 168 |

+

> unsupported Microsoft Visual Studio version! Only the versions between 2017 and 2022 (inclusive) are supported! The nvcc flag '-allow-unsupported-compiler' can be used to override this version check; however, using an unsupported host compiler may cause compilation failure or incorrect run time execution. Use at your own risk.

|

| 169 |

+

|

| 170 |

+

This is probably because your versions of CUDA and Visual Studio are incompatible (see also https://stackoverflow.com/questions/78515942/cuda-compatibility-with-visual-studio-2022-version-17-10 for a discussion on Stack Overflow).<br>

|

| 171 |

+

You may be able to fix this by adding the `-allow-unsupported-compiler` argument to `nvcc` after L48 in the [setup.py](https://github.com/facebookresearch/sam2/blob/main/setup.py). <br>

|

| 172 |

+

After adding the argument, `get_extensions()` will look like this:

|

| 173 |

+

```python

|

| 174 |

+

def get_extensions():
    srcs = ["sam2/csrc/connected_components.cu"]
    compile_args = {
        "cxx": [],
        "nvcc": [
            "-DCUDA_HAS_FP16=1",
            "-D__CUDA_NO_HALF_OPERATORS__",
            "-D__CUDA_NO_HALF_CONVERSIONS__",
            "-D__CUDA_NO_HALF2_OPERATORS__",
            "-allow-unsupported-compiler"  # Add this argument
        ],
    }
    ext_modules = [CUDAExtension("sam2._C", srcs, extra_compile_args=compile_args)]
    return ext_modules

|

| 188 |

+

```

|

| 189 |

+

</details>

|

third_party/sam2/LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+