Upload README.md with huggingface_hub


</div>




## Acknowledgement


Classification (ImageNet) code base is partly built with [LeViT](https://github.com/facebookresearch/LeViT), [PoolFormer](https://github.com/sail-sg/poolformer), [EfficientFormer](https://github.com/snap-research/EfficientFormer), [RepViT](https://github.com/THU-MIG/RepViT), and [MogaNet](https://github.com/Westlake-AI/MogaNet).


# Model Card for RecNeXt-A3 (With Knowledge Distillation)

|

| 20 |

|

| 21 |

+

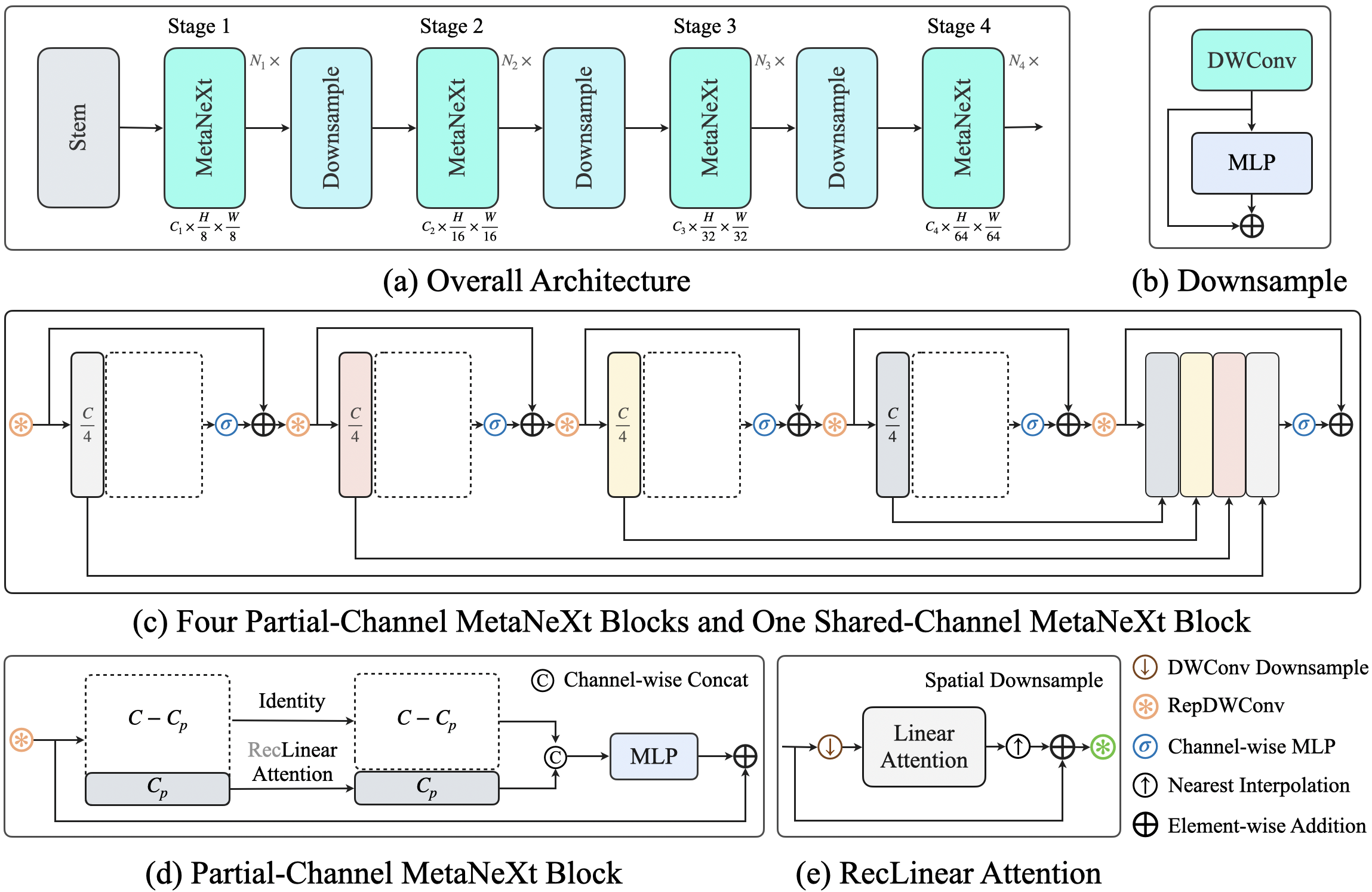

## Abstract


Recent advances in vision transformers (ViTs) have demonstrated the advantage of global modeling capabilities, prompting widespread integration of large-kernel convolutions for enlarging the effective receptive field (ERF). However, the quadratic scaling of parameter count and computational complexity (FLOPs) with respect to kernel size poses significant efficiency and optimization challenges. This paper introduces RecConv, a recursive decomposition strategy that efficiently constructs multi-frequency representations using small-kernel convolutions. RecConv establishes a linear relationship between parameter growth and the number of decomposition levels, which determines the effective receptive field $k\times 2^\ell$ for a base kernel $k$ and $\ell$ levels of decomposition, while maintaining constant FLOPs regardless of the ERF expansion. Specifically, RecConv achieves a parameter expansion of only $\ell+2$ times and a maximum FLOPs increase of $5/3$ times, compared to the exponential growth ($4^\ell$) of standard and depthwise convolutions. RecNeXt-M3 outperforms RepViT-M1.1 by 1.9 $AP^{box}$ on COCO with similar FLOPs. This innovation provides a promising avenue towards designing efficient and compact networks across various modalities. Code and models are available at https://github.com/suous/RecNeXt.

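The scaling figures in the abstract can be checked with simple arithmetic. The sketch below takes the abstract's numbers at face value (ERF $k\times 2^\ell$ and a parameter expansion of $\ell+2$ for RecConv) and contrasts them with a single large kernel of size $k\cdot 2^\ell$, whose weight count grows as $(k\cdot 2^\ell)^2 = 4^\ell k^2$. The base kernel `k = 3` and all function names are illustrative assumptions, not taken from the RecNeXt code; FLOPs are not modeled here.

```python
# Back-of-the-envelope comparison of the parameter scaling quoted in the abstract.
# Assumption: "expansion" is measured relative to a single k x k kernel.

def recconv_erf(k: int, levels: int) -> int:
    """Effective receptive field quoted for RecConv: k * 2**levels."""
    return k * 2**levels

def recconv_param_expansion(levels: int) -> int:
    """Parameter expansion quoted for RecConv: levels + 2."""
    return levels + 2

def large_kernel_param_expansion(k: int, levels: int) -> int:
    """Weights of one (k * 2**levels)^2 kernel relative to a k x k kernel (= 4**levels)."""
    return (k * 2**levels) ** 2 // k**2

if __name__ == "__main__":
    k = 3  # illustrative base kernel size (assumption, not from the card)
    print("levels  ERF  RecConv  large kernel")
    for levels in range(1, 5):
        print(f"{levels:>6} {recconv_erf(k, levels):>4} "
              f"{recconv_param_expansion(levels):>7}x "
              f"{large_kernel_param_expansion(k, levels):>12}x")
```

For example, with `k = 3` and four levels the ERF is 48, and the parameter expansion is 6x for RecConv versus 256x for a single 48x48 kernel.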

[License](https://github.com/suous/RecNeXt/blob/main/LICENSE)
[arXiv](https://arxiv.org/abs/2412.19628)

<img src="https://raw.githubusercontent.com/suous/RecNeXt/refs/heads/main/figures/code.png" alt="code" style="width: 46%;">


</div>


## Model Details

- **Model Type**: Image Classification / Feature Extraction
- **Parameters**: 9.0M
- **MACs**: 1.4G
- **Latency**: 2.4ms (iPhone 13, iOS 18)
- **Throughput**: 2151 (RTX 3090)
- **Image Size**: 224x224
- **Architecture Configuration**:
- **Dataset**: ImageNet-1K


## Recent Updates

**UPDATES** 🔥

- **2025/07/23**: Added a simple architecture whose overall design follows [LSNet](https://github.com/jameslahm/lsnet).
- **2025/07/04**: Uploaded classification models to [HuggingFace](https://huggingface.co/suous) 🤗.
- **2025/07/01**: Added more comparisons with [LSNet](https://github.com/jameslahm/lsnet).
- **2025/06/27**: Added **A** series code and logs, replacing convolution with linear attention.
- **2025/03/19**: Added more ablation study results, including attention combined with the RecConv design.
- **2025/01/02**: Uploaded checkpoints and training logs of RecNeXt-M0.
- **2024/12/29**: Uploaded checkpoints and training logs of RecNeXt-M1 to M5.


## Model Usage


### Image Classification


> **dist**: distillation; **base**: without distillation (all models are trained for 300 epochs).

| model | top_1_accuracy | params | gmacs | npu_latency | cpu_latency | throughput | fused_weights | training_logs |
|-------|----------------|--------|-------|-------------|-------------|------------|---------------|---------------|
| M0 | 74.7* \| 73.2 | 2.5M | 0.4 | 1.0ms | 189ms | 750 | [dist](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m0_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m0_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_m0_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_m0_without_distill_300e.txt) |
| M1 | 79.2* \| 78.0 | 5.2M | 0.9 | 1.4ms | 361ms | 384 | [dist](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m1_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m1_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_m1_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_m1_without_distill_300e.txt) |
| M2 | 80.3* \| 79.2 | 6.8M | 1.2 | 1.5ms | 431ms | 325 | [dist](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m2_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m2_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_m2_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_m2_without_distill_300e.txt) |
| M3 | 80.9* \| 79.6 | 8.2M | 1.4 | 1.6ms | 482ms | 314 | [dist](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m3_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m3_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_m3_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_m3_without_distill_300e.txt) |
| M4 | 82.5* \| 81.4 | 14.1M | 2.4 | 2.4ms | 843ms | 169 | [dist](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m4_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m4_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_m4_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_m4_without_distill_300e.txt) |
| M5 | 83.3* \| 82.9 | 22.9M | 4.7 | 3.4ms | 1487ms | 104 | [dist](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m5_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m5_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_m5_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_m5_without_distill_300e.txt) |
| A0 | 75.0* \| 73.6 | 2.8M | 0.4 | 1.4ms | 177ms | 4891 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a0_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a0_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_a0_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_a0_without_distill_300e.txt) |
| A1 | 79.6* \| 78.3 | 5.9M | 0.9 | 1.9ms | 334ms | 2730 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a1_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a1_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_a1_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_a1_without_distill_300e.txt) |
| A2 | 80.8* \| 79.6 | 7.9M | 1.2 | 2.2ms | 413ms | 2331 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a2_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a2_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_a2_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_a2_without_distill_300e.txt) |
| A3 | 81.1* \| 80.1 | 9.0M | 1.4 | 2.4ms | 447ms | 2151 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a3_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a3_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_a3_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_a3_without_distill_300e.txt) |
| A4 | 82.5* \| 81.6 | 15.8M | 2.4 | 3.6ms | 764ms | 1265 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a4_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a4_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_a4_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_a4_without_distill_300e.txt) |
| A5 | 83.5* \| 83.1 | 25.7M | 4.7 | 5.6ms | 1376ms | 733 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a5_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a5_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/distill/recnext_a5_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/logs/normal/recnext_a5_without_distill_300e.txt) |

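The checkpoint and log links in the table follow a regular naming scheme (release `v1.0` for the M series, `v2.0` for the A series). A small helper such as the hypothetical one below can rebuild them; the pattern is inferred from the table only, covers just the M0 to M5 and A0 to A5 rows, and the helper is not part of the RecNeXt repository.

```python
from __future__ import annotations

# Hypothetical helpers: URL patterns inferred from the table, not a RecNeXt API.
RELEASES = "https://github.com/suous/RecNeXt/releases/download"
LOGS = "https://raw.githubusercontent.com/suous/RecNeXt/main/logs"

def _parse(model: str) -> tuple[str, str]:
    """Map names like 'A3' or 'm1' to (lowercase name, release tag)."""
    name = model.lower()
    if len(name) != 2 or name[0] not in "ma" or name[1] not in "012345":
        raise ValueError(f"only M0-M5 and A0-A5 are covered, got {model!r}")
    return name, "v1.0" if name[0] == "m" else "v2.0"

def fused_checkpoint_url(model: str, distilled: bool = True) -> str:
    """URL of the fused checkpoint ('dist' or 'base' column)."""
    name, tag = _parse(model)
    variant = "distill" if distilled else "without_distill"
    return f"{RELEASES}/{tag}/recnext_{name}_{variant}_300e_fused.pt"

def training_log_url(model: str, distilled: bool = True) -> str:
    """URL of the training log; non-distilled logs live under 'normal'."""
    name, _ = _parse(model)
    folder, variant = ("distill", "distill") if distilled else ("normal", "without_distill")
    return f"{LOGS}/{folder}/recnext_{name}_{variant}_300e.txt"
```

The resulting URL could then be fetched with the standard library, e.g. `urllib.request.urlretrieve(fused_checkpoint_url("A3"), "recnext_a3_distill_300e_fused.pt")`; how the fused `.pt` file is loaded is defined by the RecNeXt code, not by this sketch.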

### Comparison with [LSNet](https://github.com/jameslahm/lsnet)

We present a simple architecture whose overall design follows [LSNet](https://github.com/jameslahm/lsnet). The framework centers on sharing channel features from previous layers.
Our motivation is to reduce the computational cost of the token mixers and to minimize feature redundancy in the final stage.


#### With **Shared-Channel Blocks**

| model | top_1_accuracy | params | gmacs | npu_latency | cpu_latency | throughput | fused_weights | training_logs |
|-------|----------------|--------|-------|-------------|-------------|------------|---------------|---------------|
| T | 76.8 \| 75.2 | 12.1M | 0.3 | 1.8ms | 105ms | 13957 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_t_share_channel_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_t_share_channel_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/distill/recnext_t_share_channel_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/normal/recnext_t_share_channel_without_distill_300e.txt) |
| S | 79.5 \| 78.3 | 15.8M | 0.7 | 2.0ms | 182ms | 8034 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_s_share_channel_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_s_share_channel_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/distill/recnext_s_share_channel_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/normal/recnext_s_share_channel_without_distill_300e.txt) |
| B | 81.5 \| 80.3 | 19.2M | 1.1 | 2.5ms | 296ms | 4472 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_b_share_channel_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_b_share_channel_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/distill/recnext_b_share_channel_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/normal/recnext_b_share_channel_without_distill_300e.txt) |


#### Without **Shared-Channel Blocks**

| model | top_1_accuracy | params | gmacs | npu_latency | cpu_latency | throughput | fused_weights | training_logs |
|-------|----------------|--------|-------|-------------|-------------|------------|---------------|---------------|
| T | 76.6* \| 75.1 | 12.1M | 0.3 | 1.8ms | 109ms | 13878 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_t_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_t_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/distill/recnext_t_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/normal/recnext_t_without_distill_300e.txt) |
| S | 79.6* \| 78.3 | 15.8M | 0.7 | 2.0ms | 188ms | 7989 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_s_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_s_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/distill/recnext_s_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/normal/recnext_s_without_distill_300e.txt) |
| B | 81.4* \| 80.3 | 19.3M | 1.1 | 2.5ms | 290ms | 4450 | [dist](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_b_distill_300e_fused.pt) \| [base](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_b_without_distill_300e_fused.pt) | [dist](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/distill/recnext_b_distill_300e.txt) \| [base](https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/logs/normal/recnext_b_without_distill_300e.txt) |

> The NPU latency is measured on an iPhone 13 with models compiled by Core ML Tools.
> The CPU latency is measured on a quad-core ARM Cortex-A57 processor in ONNX format.
> The throughput is measured on an NVIDIA RTX 3090 using the largest power-of-two batch size that fits in memory.

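The throughput note refers to the largest power-of-two batch size that fits in memory. One way to find it is to keep doubling the batch size until a probe fails. In the sketch below, `fits` is a hypothetical callback (for instance, a forward pass that returns `False` when it hits a CUDA out-of-memory error), and the search assumes that once a batch size fails, every larger one fails too. It is not the authors' measurement script.

```python
from typing import Callable

def largest_power_of_two_batch(fits: Callable[[int], bool], max_batch: int = 1 << 16) -> int:
    """Largest power-of-two batch size <= max_batch for which fits(batch) is True.

    Assumes monotonicity: if a batch size does not fit, no larger one will.
    """
    if not fits(1):
        raise RuntimeError("even a batch of 1 does not fit")
    batch = 1
    # Keep doubling while the next power of two is allowed and still fits.
    while batch * 2 <= max_batch and fits(batch * 2):
        batch *= 2
    return batch

if __name__ == "__main__":
    # Toy memory model: each sample needs 3 units and 1000 units are available.
    print(largest_power_of_two_batch(lambda b: 3 * b <= 1000))  # prints 256
```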

## Latency Measurement


The latency reported for RecNeXt on iPhone 13 (iOS 18) is measured with the benchmark tool from [Xcode 14](https://developer.apple.com/videos/play/wwdc2022/10027/).

<details>
<summary>
RecNeXt-M0
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_m0_224x224.png" alt="recnext_m0">
</details>

<details>
<summary>
RecNeXt-M1
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_m1_224x224.png" alt="recnext_m1">
</details>

<details>
<summary>
RecNeXt-M2
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_m2_224x224.png" alt="recnext_m2">
</details>

<details>
<summary>
RecNeXt-M3
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_m3_224x224.png" alt="recnext_m3">
</details>

<details>
<summary>
RecNeXt-M4
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_m4_224x224.png" alt="recnext_m4">
</details>

<details>
<summary>
RecNeXt-M5
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_m5_224x224.png" alt="recnext_m5">
</details>

<details>
<summary>
RecNeXt-A0
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_a0_224x224.png" alt="recnext_a0">
</details>

<details>
<summary>
RecNeXt-A1
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_a1_224x224.png" alt="recnext_a1">
</details>

<details>
<summary>
RecNeXt-A2
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_a2_224x224.png" alt="recnext_a2">
</details>

<details>
<summary>
RecNeXt-A3
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_a3_224x224.png" alt="recnext_a3">
</details>

<details>
<summary>
RecNeXt-A4
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_a4_224x224.png" alt="recnext_a4">
</details>

<details>
<summary>
RecNeXt-A5
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/latency/recnext_a5_224x224.png" alt="recnext_a5">
</details>

<details>
<summary>
RecNeXt-T
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/figures/latency/recnext_t_224x224.png" alt="recnext_t">
</details>

<details>
<summary>
RecNeXt-S
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/figures/latency/recnext_s_224x224.png" alt="recnext_s">
</details>

<details>
<summary>
RecNeXt-B
</summary>
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/lsnet/figures/latency/recnext_b_224x224.png" alt="recnext_b">
</details>

Tips: export the model to Core ML:

```
python export_coreml.py --model recnext_m1 --ckpt pretrain/recnext_m1_distill_300e.pth
```

Tips: measure the throughput on GPU:

```
python speed_gpu.py --model recnext_m1
```

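For reference, a throughput figure like those in the tables is usually computed as images processed divided by wall-clock time, after a few warm-up iterations. The harness below is a generic sketch, not the contents of `speed_gpu.py`; `run_batch` is a hypothetical callable that processes one batch. On a GPU, kernels run asynchronously, so `run_batch` should synchronize (e.g. call `torch.cuda.synchronize()`) before returning, otherwise the timing will be too optimistic.

```python
import time
from typing import Callable

def measure_throughput(run_batch: Callable[[], None], batch_size: int,
                       warmup: int = 10, iters: int = 30) -> float:
    """Return images per second for a callable that processes one batch per call."""
    for _ in range(warmup):  # warm-up: lazy initialization, caches, autotuning
        run_batch()
    start = time.perf_counter()
    for _ in range(iters):
        run_batch()
    elapsed = time.perf_counter() - start
    return batch_size * iters / elapsed

if __name__ == "__main__":
    # Stand-in workload: pretend each batch of 64 images takes about 2 ms.
    rate = measure_throughput(lambda: time.sleep(0.002), batch_size=64)
    print(f"{rate:.0f} images/s")
```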

## ImageNet (Training and Evaluation)


### Prerequisites

A `conda` virtual environment is recommended.

```
conda create -n recnext python=3.8
conda activate recnext
pip install -r requirements.txt
```


### Data preparation

Download and extract the ImageNet train and val images from http://image-net.org/. The training and validation data are expected in the `train` and `val` folders, respectively:

```bash
# script to extract ImageNet dataset: https://github.com/pytorch/examples/blob/main/imagenet/extract_ILSVRC.sh
# ILSVRC2012_img_train.tar (about 138 GB)
# ILSVRC2012_img_val.tar (about 6.3 GB)
```

```
# organize the ImageNet dataset as follows:
imagenet
├── train
│   ├── n01440764
│   │   ├── n01440764_10026.JPEG
│   │   ├── n01440764_10027.JPEG
│   │   ├── ......
│   ├── ......
├── val
│   ├── n01440764
│   │   ├── ILSVRC2012_val_00000293.JPEG
│   │   ├── ILSVRC2012_val_00002138.JPEG
│   │   ├── ......
│   ├── ......
```

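Before launching training, it can help to verify that the folders match the layout above. The sketch below counts class folders and images per split using only `pathlib`; the function name and the accepted file suffixes are assumptions. For the standard ImageNet-1K release one would expect 1000 classes in each split, with 1,281,167 training and 50,000 validation images.

```python
from pathlib import Path
from typing import Dict

IMAGE_SUFFIXES = {".jpeg", ".jpg", ".png"}  # assumption: ImageNet files end in .JPEG

def summarize_imagenet(root: str) -> Dict[str, Dict[str, int]]:
    """Count class folders and image files in root/train and root/val."""
    summary: Dict[str, Dict[str, int]] = {}
    for split in ("train", "val"):
        split_dir = Path(root).expanduser() / split
        if not split_dir.is_dir():
            raise FileNotFoundError(f"missing split folder: {split_dir}")
        class_dirs = sorted(p for p in split_dir.iterdir() if p.is_dir())
        # Only count regular files with an image suffix, one level below each class folder.
        n_images = sum(
            1 for c in class_dirs for f in c.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
        summary[split] = {"classes": len(class_dirs), "images": n_images}
    return summary
```

Usage: `print(summarize_imagenet("~/imagenet"))`, with the same path later passed as `--data-path`.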

### Training

|

| 305 |

+

To train RecNeXt-M1 on an 8-GPU machine:

|

| 306 |

+

|

| 307 |

+

```

|

| 308 |

+

python -m torch.distributed.launch --nproc_per_node=8 --master_port 12346 --use_env main.py --model recnext_m1 --data-path ~/imagenet --dist-eval

|

| 309 |

```

|

| 310 |

+

Tips: specify your data path and model name!
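With `--use_env`, `torch.distributed.launch` passes each process its local rank through the `LOCAL_RANK` environment variable instead of a `--local_rank` argument, and it also exports `RANK` and `WORLD_SIZE` (`torchrun`, its successor in recent PyTorch releases, always works this way). How `main.py` reads them is not shown here; a minimal sketch of the usual pattern, with a hypothetical helper name:

```python
import os


def read_distributed_env(environ=None):
    """Return (rank, world_size, local_rank), or None for a single-process run."""
    environ = os.environ if environ is None else environ
    if 'RANK' not in environ or 'WORLD_SIZE' not in environ:
        return None  # not started by a distributed launcher
    rank = int(environ['RANK'])              # global process index
    world_size = int(environ['WORLD_SIZE'])  # total number of processes
    local_rank = int(environ.get('LOCAL_RANK', 0))  # GPU index on this machine
    return rank, world_size, local_rank
```

With `--nproc_per_node=8` on one machine, each of the 8 processes would see `WORLD_SIZE=8` and a rank between 0 and 7, which a training script typically hands to `torch.cuda.set_device` and `torch.distributed.init_process_group`.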

### Testing

For example, to test RecNeXt-M1:

```
python main.py --eval --model recnext_m1 --resume pretrain/recnext_m1_distill_300e.pth --data-path ~/imagenet
```
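ImageNet evaluation is usually reported as top-1 and top-5 accuracy. As a hedged illustration (not necessarily how `main.py` computes it), top-k accuracy over a batch of logits can be computed as follows; `topk_accuracy` is a hypothetical name:

```python
import torch


def topk_accuracy(logits: torch.Tensor, targets: torch.Tensor, ks=(1, 5)):
    """Percentage of samples whose true label is among the k highest-scoring classes."""
    maxk = max(ks)  # requires at least maxk classes
    # Indices of the maxk largest logits per sample, best first: shape (N, maxk).
    _, pred = logits.topk(maxk, dim=1, largest=True, sorted=True)
    correct = pred.eq(targets.unsqueeze(1))  # (N, maxk) boolean hit matrix
    return [correct[:, :k].any(dim=1).float().mean().item() * 100.0 for k in ks]
```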

Use the pretrained model without knowledge distillation from [HuggingFace](https://huggingface.co/suous) 🤗:

```bash
python main.py --eval --model recnext_m1 --data-path ~/imagenet --pretrained --distillation-type none
```

Use the pretrained model with knowledge distillation from [HuggingFace](https://huggingface.co/suous) 🤗:

```bash
python main.py --eval --model recnext_m1 --data-path ~/imagenet --pretrained --distillation-type hard
```
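The `--distillation-type` values (`none`, `hard`) match the option of the same name in DeiT-style training scripts, which LeViT (one of the code bases acknowledged in this README) also uses. Assuming `hard` keeps DeiT's meaning, the student's distillation head is trained on the teacher's argmax predictions as pseudo-labels, alongside ordinary cross-entropy on the class head. A minimal sketch of that loss; the function name is hypothetical, and the equal 0.5 weighting (`alpha`) is DeiT's default as we understand it, not confirmed for this repository:

```python
import torch
import torch.nn.functional as F


def hard_distillation_loss(cls_logits, dist_logits, teacher_logits, labels, alpha=0.5):
    """DeiT-style hard distillation: CE on true labels + CE on teacher argmax labels."""
    base_loss = F.cross_entropy(cls_logits, labels)
    # The teacher's top-1 prediction acts as a hard pseudo-label for the distillation head.
    teacher_labels = teacher_logits.argmax(dim=1)
    distill_loss = F.cross_entropy(dist_logits, teacher_labels)
    return (1 - alpha) * base_loss + alpha * distill_loss
```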

### Fused model evaluation

For example, to evaluate RecNeXt-M1 with the fused model: [Open In Colab](https://colab.research.google.com/github/suous/RecNeXt/blob/main/demo/fused_model_evaluation.ipynb)

```
python fuse_eval.py --model recnext_m1 --resume pretrain/recnext_m1_distill_300e_fused.pt --data-path ~/imagenet
```
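The exact fusion steps behind the `*_fused.pt` checkpoint are not described here. A common ingredient of inference-time fusion (used, for instance, in RepViT, one of the acknowledged code bases) is folding an eval-mode `BatchNorm2d` into the preceding convolution: with BN statistics $\mu, \sigma^2$ and affine parameters $\gamma, \beta$, the fused weights are $W' = W\,\gamma/\sqrt{\sigma^2+\epsilon}$ and $b' = (b-\mu)\,\gamma/\sqrt{\sigma^2+\epsilon} + \beta$. A minimal sketch, assuming a plain `Conv2d` followed by `BatchNorm2d`; `fuse_conv_bn` is a hypothetical name:

```python
import torch
import torch.nn as nn


@torch.no_grad()
def fuse_conv_bn(conv: nn.Conv2d, bn: nn.BatchNorm2d) -> nn.Conv2d:
    """Fold an eval-mode BatchNorm2d into the preceding Conv2d (illustrative helper)."""
    fused = nn.Conv2d(conv.in_channels, conv.out_channels, conv.kernel_size,
                      stride=conv.stride, padding=conv.padding, dilation=conv.dilation,
                      groups=conv.groups, bias=True, padding_mode=conv.padding_mode)
    # BN(z) = gamma * (z - mean) / sqrt(var + eps) + beta, applied per output channel
    scale = bn.weight / torch.sqrt(bn.running_var + bn.eps)
    fused.weight.copy_(conv.weight * scale.reshape(-1, 1, 1, 1))
    conv_bias = conv.bias if conv.bias is not None else torch.zeros_like(bn.running_mean)
    fused.bias.copy_((conv_bias - bn.running_mean) * scale + bn.bias)
    return fused
```

The fused layer matches `bn(conv(x))` only in eval mode, since it bakes in the running statistics rather than batch statistics.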

### Extract model for publishing

```
# without distillation
python publish.py --model_name recnext_m1 --checkpoint_path pretrain/checkpoint_best.pth --epochs 300

# with distillation
python publish.py --model_name recnext_m1 --checkpoint_path pretrain/checkpoint_best.pth --epochs 300 --distillation

# fused model
python publish.py --model_name recnext_m1 --checkpoint_path pretrain/checkpoint_best.pth --epochs 300 --fused
```

## Downstream Tasks

[Object Detection and Instance Segmentation](https://github.com/suous/RecNeXt/blob/main/detection/README.md)<br>

| Model | $AP^b$ | $AP_{50}^b$ | $AP_{75}^b$ | $AP^m$ | $AP_{50}^m$ | $AP_{75}^m$ | Latency | Ckpt | Log |
|:------|:------:|:-----------:|:-----------:|:------:|:-----------:|:-----------:|:-------:|:---------------------------------------------------------------------------------:|:----------------------------------------------------------------------------------------------:|
| M3 | 41.7 | 63.4 | 45.4 | 38.6 | 60.5 | 41.4 | 5.2ms | [M3](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m3_coco.pth) | [M3](https://raw.githubusercontent.com/suous/RecNeXt/main/detection/logs/recnext_m3_coco.json) |
| M4 | 43.5 | 64.9 | 47.7 | 39.7 | 62.1 | 42.4 | 7.6ms | [M4](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m4_coco.pth) | [M4](https://raw.githubusercontent.com/suous/RecNeXt/main/detection/logs/recnext_m4_coco.json) |
| M5 | 44.6 | 66.3 | 49.0 | 40.6 | 63.5 | 43.5 | 12.4ms | [M5](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m5_coco.pth) | [M5](https://raw.githubusercontent.com/suous/RecNeXt/main/detection/logs/recnext_m5_coco.json) |
| A3 | 42.1 | 64.1 | 46.2 | 38.8 | 61.1 | 41.6 | 8.3ms | [A3](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a3_coco.pth) | [A3](https://raw.githubusercontent.com/suous/RecNeXt/main/detection/logs/recnext_a3_coco.json) |
| A4 | 43.5 | 65.4 | 47.6 | 39.8 | 62.4 | 42.9 | 14.0ms | [A4](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a4_coco.pth) | [A4](https://raw.githubusercontent.com/suous/RecNeXt/main/detection/logs/recnext_a4_coco.json) |
| A5 | 44.4 | 66.3 | 48.9 | 40.3 | 63.3 | 43.4 | 25.3ms | [A5](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a5_coco.pth) | [A5](https://raw.githubusercontent.com/suous/RecNeXt/main/detection/logs/recnext_a5_coco.json) |

[Semantic Segmentation](https://github.com/suous/RecNeXt/blob/main/segmentation/README.md)

| Model | mIoU | Latency | Ckpt | Log |
|:-----------|:----:|:-------:|:-----------------------------------------------------------------------------------:|:---------------------------------------------------------------------------------------------------:|
| RecNeXt-M3 | 41.0 | 5.6ms | [M3](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m3_ade20k.pth) | [M3](https://raw.githubusercontent.com/suous/RecNeXt/main/segmentation/logs/recnext_m3_ade20k.json) |
| RecNeXt-M4 | 43.6 | 7.2ms | [M4](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m4_ade20k.pth) | [M4](https://raw.githubusercontent.com/suous/RecNeXt/main/segmentation/logs/recnext_m4_ade20k.json) |
| RecNeXt-M5 | 46.0 | 12.4ms | [M5](https://github.com/suous/RecNeXt/releases/download/v1.0/recnext_m5_ade20k.pth) | [M5](https://raw.githubusercontent.com/suous/RecNeXt/main/segmentation/logs/recnext_m5_ade20k.json) |
| RecNeXt-A3 | 41.9 | 8.4ms | [A3](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a3_ade20k.pth) | [A3](https://raw.githubusercontent.com/suous/RecNeXt/main/segmentation/logs/recnext_a3_ade20k.json) |
| RecNeXt-A4 | 43.0 | 14.0ms | [A4](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a4_ade20k.pth) | [A4](https://raw.githubusercontent.com/suous/RecNeXt/main/segmentation/logs/recnext_a4_ade20k.json) |
| RecNeXt-A5 | 46.5 | 25.3ms | [A5](https://github.com/suous/RecNeXt/releases/download/v2.0/recnext_a5_ade20k.pth) | [A5](https://raw.githubusercontent.com/suous/RecNeXt/main/segmentation/logs/recnext_a5_ade20k.json) |

| 370 |

+

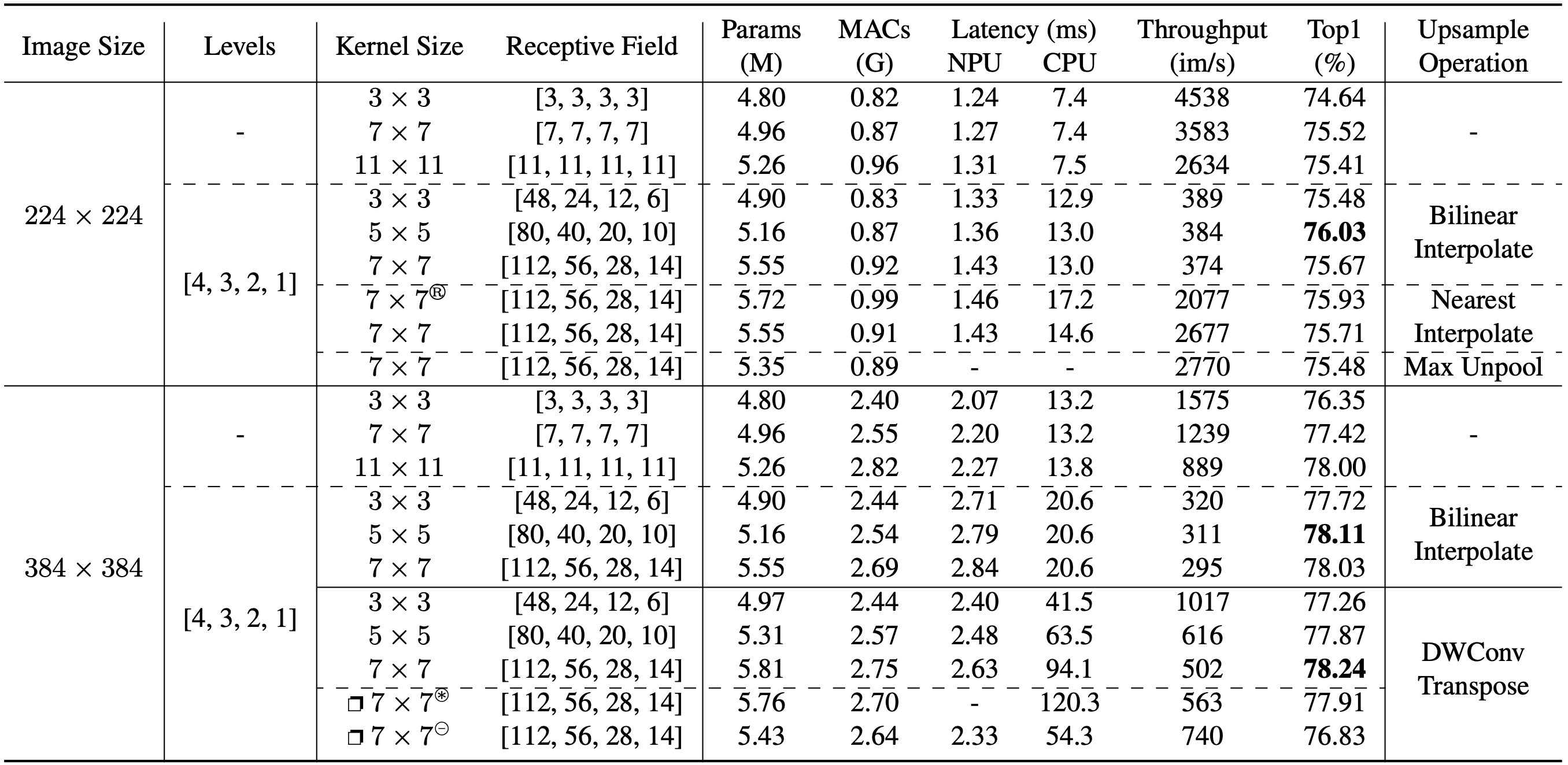

## Ablation Study

### Overall Experiments

<details>
<summary>
<span style="font-size: larger; ">Ablation Logs</span>
</summary>

<pre>
logs/ablation
├── 224
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_3x3_7464.txt">recnext_m1_120e_224x224_3x3_7464.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_7x7_7552.txt">recnext_m1_120e_224x224_7x7_7552.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_bxb_7541.txt">recnext_m1_120e_224x224_bxb_7541.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_rec_3x3_7548.txt">recnext_m1_120e_224x224_rec_3x3_7548.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_rec_5x5_7603.txt">recnext_m1_120e_224x224_rec_5x5_7603.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_rec_7x7_7567.txt">recnext_m1_120e_224x224_rec_7x7_7567.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_rec_7x7_nearest_7571.txt">recnext_m1_120e_224x224_rec_7x7_nearest_7571.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_rec_7x7_nearest_ssm_7593.txt">recnext_m1_120e_224x224_rec_7x7_nearest_ssm_7593.txt</a>
│   └── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/224/recnext_m1_120e_224x224_rec_7x7_unpool_7548.txt">recnext_m1_120e_224x224_rec_7x7_unpool_7548.txt</a>
└── 384
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_3x3_7635.txt">recnext_m1_120e_384x384_3x3_7635.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_7x7_7742.txt">recnext_m1_120e_384x384_7x7_7742.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_bxb_7800.txt">recnext_m1_120e_384x384_bxb_7800.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_3x3_7772.txt">recnext_m1_120e_384x384_rec_3x3_7772.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_5x5_7811.txt">recnext_m1_120e_384x384_rec_5x5_7811.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_7x7_7803.txt">recnext_m1_120e_384x384_rec_7x7_7803.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_convtrans_3x3_basic_7726.txt">recnext_m1_120e_384x384_rec_convtrans_3x3_basic_7726.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_convtrans_5x5_basic_7787.txt">recnext_m1_120e_384x384_rec_convtrans_5x5_basic_7787.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_convtrans_7x7_basic_7824.txt">recnext_m1_120e_384x384_rec_convtrans_7x7_basic_7824.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_convtrans_7x7_group_7791.txt">recnext_m1_120e_384x384_rec_convtrans_7x7_group_7791.txt</a>
    └── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/logs/ablation/384/recnext_m1_120e_384x384_rec_convtrans_7x7_split_7683.txt">recnext_m1_120e_384x384_rec_convtrans_7x7_split_7683.txt</a>
</pre>
</details>

<details>
<summary>
<span style="font-size: larger; ">RecConv Recurrent Aggregation</span>
</summary>

```python
import torch.nn as nn


class RecConv2d(nn.Module):
    def __init__(self, in_channels, kernel_size=5, bias=False, level=1, mode='nearest'):
        super().__init__()
        self.level = level
        self.mode = mode
        # every convolution is depthwise (groups == channels) and keeps the channel count
        kwargs = {
            'in_channels': in_channels,
            'out_channels': in_channels,
            'groups': in_channels,
            'kernel_size': kernel_size,
            'padding': kernel_size // 2,
            'bias': bias
        }
        self.n = nn.Conv2d(stride=2, **kwargs)               # stride-2 downsampling, shared by all levels
        self.a = nn.Conv2d(**kwargs) if level > 1 else None  # applied to the recurrent state h
        self.b = nn.Conv2d(**kwargs)                         # applied to each downsampled feature
        self.c = nn.Conv2d(**kwargs)                         # applied to the aggregated multi-scale state
        self.d = nn.Conv2d(**kwargs)                         # applied to the full-resolution input

    def forward(self, x):
        # 1. Generate Multi-scale Features.
        fs = [x]
        for _ in range(self.level):
            fs.append(self.n(fs[-1]))

        # 2. Multi-scale Recurrent Aggregation.
        h = None
        for i, o in reversed(list(zip(fs[1:], fs[:-1]))):
            h = self.a(h) + self.b(i) if h is not None else self.b(i)
            h = nn.functional.interpolate(h, size=o.shape[2:], mode=self.mode)
        return self.c(h) + self.d(x)
```
</details>
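Counting the weights of the recurrent-aggregation `RecConv2d` makes its parameter cost concrete. Every layer (`n`, `b`, `c`, `d`, plus `a` when `level > 1`) is a depthwise `k×k` convolution with `C·k²` weights, and the same `n`, `a` and `b` are reused at every level, so in this implementation the count stops growing once `level > 1`. For comparison, the stdlib sketch below (hypothetical helper names) also counts a single depthwise convolution with a `k·2^level` kernel, a large-kernel baseline chosen here purely for illustration:

```python
def depthwise_params(channels, kernel_size, bias=False):
    """Weights of one depthwise Conv2d (groups == channels): C * k * k (+ C biases)."""
    return channels * kernel_size * kernel_size + (channels if bias else 0)


def recconv_params(channels, kernel_size=5, level=1, bias=False):
    """Parameters of the recurrent-aggregation RecConv2d with the given settings."""
    n_layers = 4 + (1 if level > 1 else 0)  # n, b, c, d, plus a when level > 1
    return n_layers * depthwise_params(channels, kernel_size, bias)


for level in (1, 2, 3):
    big_k = 5 * 2 ** level
    print(f'level={level}: RecConv2d={recconv_params(64, 5, level)}, '
          f'depthwise {big_k}x{big_k}={depthwise_params(64, big_k)}')
# level=1: RecConv2d=6400, depthwise 10x10=6400
# level=2: RecConv2d=8000, depthwise 20x20=25600
# level=3: RecConv2d=8000, depthwise 40x40=102400
```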

### RecConv Variants

<div style="display: flex; justify-content: space-between;">
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/RecConvB.png" alt="RecConvB" style="width: 49%;">
<img src="https://raw.githubusercontent.com/suous/RecNeXt/main/figures/RecConvC.png" alt="RecConvC" style="width: 49%;">
</div>

<details>
<summary>
<span style="font-size: larger; ">RecConv Variant Details</span>
</summary>

- **RecConv using group convolutions**

```python
import torch.nn as nn


# RecConv Variant A
# recursive decomposition on both spatial and channel dimensions
# downsample and upsample through group convolutions
class RecConv2d(nn.Module):
    def __init__(self, in_channels, kernel_size=5, bias=False, level=2):
        super().__init__()
        self.level = level
        kwargs = {'kernel_size': kernel_size, 'padding': kernel_size // 2, 'bias': bias}
        # each stride-2 group convolution halves both the resolution and the channel count
        downs = []
        for l in range(level):
            i_channels = in_channels // (2 ** l)
            o_channels = in_channels // (2 ** (l+1))
            downs.append(nn.Conv2d(in_channels=i_channels, out_channels=o_channels, groups=o_channels, stride=2, **kwargs))
        self.downs = nn.ModuleList(downs)

        # depthwise convolutions, ordered from the coarsest level up to full resolution
        convs = []
        for l in range(level+1):
            channels = in_channels // (2 ** l)
            convs.append(nn.Conv2d(in_channels=channels, out_channels=channels, groups=channels, **kwargs))
        self.convs = nn.ModuleList(reversed(convs))

        # this is the simplest modification; it only supports resolutions like 256, 384, etc.
        # (for odd kernel_size, kernel_size + 1 with the same padding exactly doubles the size)
        kwargs['kernel_size'] = kernel_size + 1
        ups = []
        for l in range(level):
            i_channels = in_channels // (2 ** (l+1))
            o_channels = in_channels // (2 ** l)
            ups.append(nn.ConvTranspose2d(in_channels=i_channels, out_channels=o_channels, groups=i_channels, stride=2, **kwargs))
        self.ups = nn.ModuleList(reversed(ups))

    def forward(self, x):
        i = x
        features = []
        for down in self.downs:
            x, s = down(x), x.shape[2:]
            features.append((x, s))

        # aggregate from the coarsest level upwards, adding each level's features before upsampling
        x = 0
        for conv, up, (f, s) in zip(self.convs, self.ups, reversed(features)):
            x = up(conv(f + x))
        return self.convs[self.level](i + x)
```

- **RecConv using channel-wise concatenation**

```python
import torch
import torch.nn as nn


# recursive decomposition on both spatial and channel dimensions
# downsample using channel-wise split, followed by depthwise convolution with a stride of 2
# upsample through channel-wise concatenation
class RecConv2d(nn.Module):
    def __init__(self, in_channels, kernel_size=5, bias=False, level=2):
        super().__init__()
        self.level = level
        kwargs = {'kernel_size': kernel_size, 'padding': kernel_size // 2, 'bias': bias}
        downs = []
        for l in range(level):
            channels = in_channels // (2 ** (l+1))
            downs.append(nn.Conv2d(in_channels=channels, out_channels=channels, groups=channels, stride=2, **kwargs))
        self.downs = nn.ModuleList(downs)

        convs = []
        for l in range(level+1):
            channels = in_channels // (2 ** l)
            convs.append(nn.Conv2d(in_channels=channels, out_channels=channels, groups=channels, **kwargs))
        self.convs = nn.ModuleList(reversed(convs))

        # this is the simplest modification; it only supports resolutions like 256, 384, etc.
        kwargs['kernel_size'] = kernel_size + 1
        ups = []
        for l in range(level):
            channels = in_channels // (2 ** (l+1))
            ups.append(nn.ConvTranspose2d(in_channels=channels, out_channels=channels, groups=channels, stride=2, **kwargs))
        self.ups = nn.ModuleList(reversed(ups))

    def forward(self, x):
        features = []
        for down in self.downs:
            # keep half of the channels (r) at the current scale, downsample the other half
            r, x = torch.chunk(x, 2, dim=1)
            x, s = down(x), x.shape[2:]
            features.append((r, s))

        # upsample and concatenate with the kept channels, restoring the channel count level by level
        for conv, up, (r, s) in zip(self.convs, self.ups, reversed(features)):
            x = torch.cat([r, up(conv(x))], dim=1)
        return self.convs[self.level](x)
```
</details>
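Both variants upsample with `nn.ConvTranspose2d` using `kernel_size + 1` while keeping `padding = kernel_size // 2`. Plugging these into the output-size formulas from the PyTorch documentation (dilation 1, no `output_padding`), for an odd kernel the stride-2 downsampling maps a size `H` to `⌊(H − 1)/2⌋ + 1`, and the transposed convolution maps `H` to exactly `2H`. A feature map therefore returns to its original size only when that size is divisible by `2 ** level`, which is presumably the reason for the resolution restriction in the code comments. The stdlib sketch below (hypothetical helper names) only traces this arithmetic:

```python
def conv2d_out(size, kernel, stride, padding):
    # nn.Conv2d output size along one axis (dilation=1)
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose2d_out(size, kernel, stride, padding):
    # nn.ConvTranspose2d output size along one axis (dilation=1, output_padding=0)
    return (size - 1) * stride - 2 * padding + kernel


def trace_sizes(size, level=2, kernel_size=5):
    """Spatial sizes through the stride-2 downsampling path and back up."""
    padding = kernel_size // 2
    sizes = [size]
    for _ in range(level):
        sizes.append(conv2d_out(sizes[-1], kernel_size, 2, padding))
    for _ in range(level):
        sizes.append(conv_transpose2d_out(sizes[-1], kernel_size + 1, 2, padding))
    return sizes


print(trace_sizes(56))  # [56, 28, 14, 28, 56]
print(trace_sizes(7))   # [7, 4, 2, 4, 8]
```

A 7×7 map with `level=2` comes back as 8×8, so the final addition in Variant A (`i + x`) or the final concatenation in the channel-split variant would fail with a shape mismatch.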

### RecConv Beyond

We apply RecConv to the small [MLLA](https://github.com/LeapLabTHU/MLLA) variants (nano and mini), replacing the linear attention and downsampling layers. This results in higher throughput and lower training memory usage.

<details>
<summary>
<span style="font-size: larger; ">Ablation Logs</span>
</summary>

<pre>
mlla/logs
├── 1_mlla_nano
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/1_mlla_nano/01_baseline.txt">01_baseline.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/1_mlla_nano/02_recconv_5x5_conv_trans.txt">02_recconv_5x5_conv_trans.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/1_mlla_nano/03_recconv_5x5_nearest_interp.txt">03_recconv_5x5_nearest_interp.txt</a>
│   ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/1_mlla_nano/04_recattn_nearest_interp.txt">04_recattn_nearest_interp.txt</a>
│   └── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/1_mlla_nano/05_recattn_nearest_interp_simplify.txt">05_recattn_nearest_interp_simplify.txt</a>
└── 2_mlla_mini
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/2_mlla_mini/01_baseline.txt">01_baseline.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/2_mlla_mini/02_recconv_5x5_conv_trans.txt">02_recconv_5x5_conv_trans.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/2_mlla_mini/03_recconv_5x5_nearest_interp.txt">03_recconv_5x5_nearest_interp.txt</a>
    ├── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/2_mlla_mini/04_recattn_nearest_interp.txt">04_recattn_nearest_interp.txt</a>
    └── <a style="text-decoration:none" href="https://raw.githubusercontent.com/suous/RecNeXt/main/mlla/logs/2_mlla_mini/05_recattn_nearest_interp_simplify.txt">05_recattn_nearest_interp_simplify.txt</a>
</pre>
</details>

## Limitations

## Acknowledgement

The classification (ImageNet) code base is partly built on [LeViT](https://github.com/facebookresearch/LeViT), [PoolFormer](https://github.com/sail-sg/poolformer), [EfficientFormer](https://github.com/snap-research/EfficientFormer), [RepViT](https://github.com/THU-MIG/RepViT), [LSNet](https://github.com/jameslahm/lsnet), [MLLA](https://github.com/LeapLabTHU/MLLA), and [MogaNet](https://github.com/Westlake-AI/MogaNet).

The detection and segmentation pipelines are from [MMCV](https://github.com/open-mmlab/mmcv) ([MMDetection](https://github.com/open-mmlab/mmdetection) and [MMSegmentation](https://github.com/open-mmlab/mmsegmentation)).

Thanks for the great implementations!

## Citation

```BibTeX
@misc{zhao2024recnext,
    title={RecConv: Efficient Recursive Convolutions for Multi-Frequency Representations},
    author={Mingshu Zhao and Yi Luo and Yong Ouyang},
    year={2024},
    eprint={2412.19628},
    archivePrefix={arXiv},
    primaryClass={cs.CV}
}
```