Add files using upload-large-folder tool

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +2 -0

- a_mllm_notebooks/.ipynb_checkpoints/serve-checkpoint.sh +30 -0

- a_mllm_notebooks/langchain/image.jpg +3 -0

- a_mllm_notebooks/lmdeploy/api_server.ipynb +568 -0

- a_mllm_notebooks/lmdeploy/api_server.md +265 -0

- a_mllm_notebooks/lmdeploy/api_server_vl.ipynb +199 -0

- a_mllm_notebooks/lmdeploy/api_server_vl.md +155 -0

- a_mllm_notebooks/lmdeploy/download_md.ipynb +211 -0

- a_mllm_notebooks/lmdeploy/get_started_vl.ipynb +517 -0

- a_mllm_notebooks/lmdeploy/get_started_vl.md +204 -0

- a_mllm_notebooks/lmdeploy/internvl_25.ipynb +355 -0

- a_mllm_notebooks/lmdeploy/kv_quant.ipynb +114 -0

- a_mllm_notebooks/lmdeploy/kv_quant.md +82 -0

- a_mllm_notebooks/lmdeploy/links.txt +8 -0

- a_mllm_notebooks/lmdeploy/lmdeploy_deepseek_vl.ipynb +665 -0

- a_mllm_notebooks/lmdeploy/lmdeploy_info.ipynb +132 -0

- a_mllm_notebooks/lmdeploy/lmdeploy_serve.sh +47 -0

- a_mllm_notebooks/lmdeploy/long_context.ipynb +169 -0

- a_mllm_notebooks/lmdeploy/long_context.md +119 -0

- a_mllm_notebooks/lmdeploy/pipeline.ipynb +570 -0

- a_mllm_notebooks/lmdeploy/pipeline.md +205 -0

- a_mllm_notebooks/lmdeploy/proxy_server.ipynb +248 -0

- a_mllm_notebooks/lmdeploy/proxy_server.md +97 -0

- a_mllm_notebooks/lmdeploy/pytorch_new_model.ipynb +261 -0

- a_mllm_notebooks/lmdeploy/pytorch_new_model.md +181 -0

- a_mllm_notebooks/lmdeploy/tiger.jpeg +0 -0

- a_mllm_notebooks/lmdeploy/turbomind.ipynb +88 -0

- a_mllm_notebooks/lmdeploy/turbomind.md +68 -0

- a_mllm_notebooks/lmdeploy/w4a16.ipynb +174 -0

- a_mllm_notebooks/lmdeploy/w4a16.md +130 -0

- a_mllm_notebooks/lmdeploy/w8a8.ipynb +75 -0

- a_mllm_notebooks/lmdeploy/w8a8.md +55 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/infer-checkpoint.py +167 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/langchain_openai_api-checkpoint.ipynb +0 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/load_synth_pedes-checkpoint.ipynb +96 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/openai_api-checkpoint.ipynb +408 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/ping_server-checkpoint.ipynb +292 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/serve-checkpoint.sh +60 -0

- a_mllm_notebooks/openai/.ipynb_checkpoints/temp-checkpoint.sh +25 -0

- a_mllm_notebooks/openai/combine_chinese_output.ipynb +526 -0

- a_mllm_notebooks/openai/openai_api.ipynb +408 -0

- a_mllm_notebooks/tensorrt-llm/bert/.gitignore +2 -0

- a_mllm_notebooks/tensorrt-llm/bert/README.md +79 -0

- a_mllm_notebooks/tensorrt-llm/bert/base_benchmark/config.json +22 -0

- a_mllm_notebooks/tensorrt-llm/bert/base_with_attention_plugin_benchmark/config.json +22 -0

- a_mllm_notebooks/tensorrt-llm/bert/build.py +354 -0

- a_mllm_notebooks/tensorrt-llm/bert/large_benchmark/config.json +22 -0

- a_mllm_notebooks/tensorrt-llm/bert/large_with_attention_plugin_benchmark/config.json +22 -0

- a_mllm_notebooks/tensorrt-llm/bert/run.py +128 -0

- a_mllm_notebooks/tensorrt-llm/bert/run_remove_input_padding.py +153 -0

.gitattributes

CHANGED

|

@@ -452,3 +452,5 @@ recognize-anything/images/demo/.ipynb_checkpoints/demo4-checkpoint.jpg filter=lf

|

|

| 452 |

recognize-anything/images/demo/.ipynb_checkpoints/demo2-checkpoint.jpg filter=lfs diff=lfs merge=lfs -text

|

| 453 |

a_mllm_notebooks/vllm/cat.jpg filter=lfs diff=lfs merge=lfs -text

|

| 454 |

a_mllm_notebooks/openai/image.jpg filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 452 |

recognize-anything/images/demo/.ipynb_checkpoints/demo2-checkpoint.jpg filter=lfs diff=lfs merge=lfs -text

|

| 453 |

a_mllm_notebooks/vllm/cat.jpg filter=lfs diff=lfs merge=lfs -text

|

| 454 |

a_mllm_notebooks/openai/image.jpg filter=lfs diff=lfs merge=lfs -text

|

| 455 |

+

a_mllm_notebooks/langchain/image.jpg filter=lfs diff=lfs merge=lfs -text

|

| 456 |

+

a_mllm_notebooks/vllm/.ipynb_checkpoints/cat-checkpoint.jpg filter=lfs diff=lfs merge=lfs -text

|

a_mllm_notebooks/.ipynb_checkpoints/serve-checkpoint.sh

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

eval "$(conda shell.bash hook)"

|

| 2 |

+

conda activate lmdeploy

|

| 3 |

+

|

| 4 |

+

MODEL_NAME=Qwen/Qwen2.5-1.5B-Instruct-AWQ

|

| 5 |

+

# PORT_LIST=(2020 2021 2022 2023 2024 2025 2026 2027 2028 2029 2030 2031)

|

| 6 |

+

|

| 7 |

+

# PORT_LIST from 3063 to 3099

|

| 8 |

+

PORT_LIST=( $(seq 19500 1 19590) )

|

| 9 |

+

# PORT_LIST=(9898)

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

# PROXY_URL=0.0.0.0

|

| 13 |

+

# lmdeploy serve proxy --server-name $PROXY_URL --server-port 8080 --strategy \

|

| 14 |

+

# min_observed_latency &

|

| 15 |

+

# "min_expected_latency" \

|

| 16 |

+

# &

|

| 17 |

+

|

| 18 |

+

for PORT in "${PORT_LIST[@]}"; do

|

| 19 |

+

# get random device id from 0 to 3

|

| 20 |

+

# RANDOM_DEVICE_ID=$((RANDOM % 3))

|

| 21 |

+

RANDOM_DEVICE_ID=1

|

| 22 |

+

# CUDA_VISIBLE_DEVICES=$RANDOM_DEVICE_ID \

|

| 23 |

+

# CUDA_VISIBLE_DEVICES=0,1 \

|

| 24 |

+

# CUDA_VISIBLE_DEVICES=2,3 \

|

| 25 |

+

lmdeploy serve api_server $MODEL_NAME \

|

| 26 |

+

--server-port $PORT \

|

| 27 |

+

--backend turbomind \

|

| 28 |

+

--dtype float16 --proxy-url http://0.0.0.0:8080 \

|

| 29 |

+

--cache-max-entry-count 0.1 --tp 1 &

|

| 30 |

+

done

|

a_mllm_notebooks/langchain/image.jpg

ADDED

|

Git LFS Details

|

a_mllm_notebooks/lmdeploy/api_server.ipynb

ADDED

|

@@ -0,0 +1,568 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "c1639fe1",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"# OpenAI Compatible Server\n",

|

| 9 |

+

"\n",

|

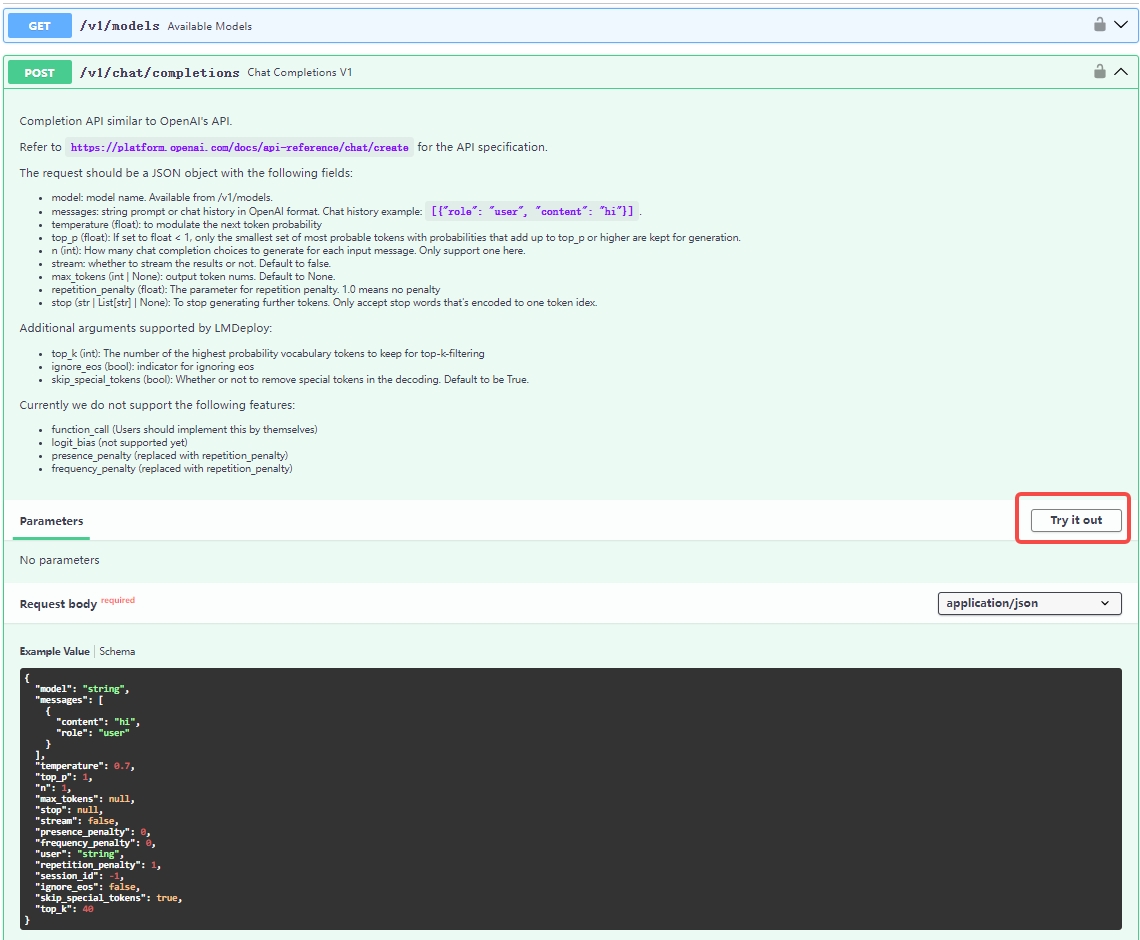

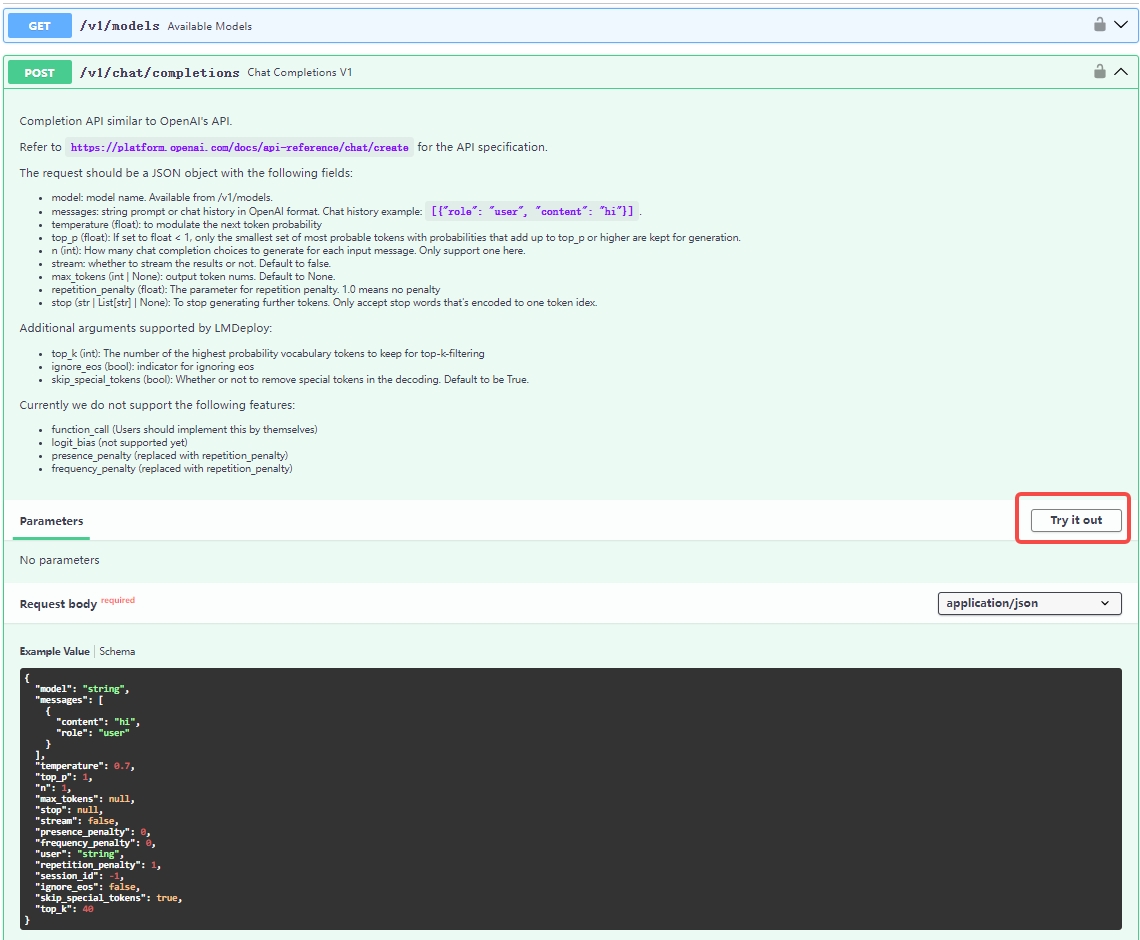

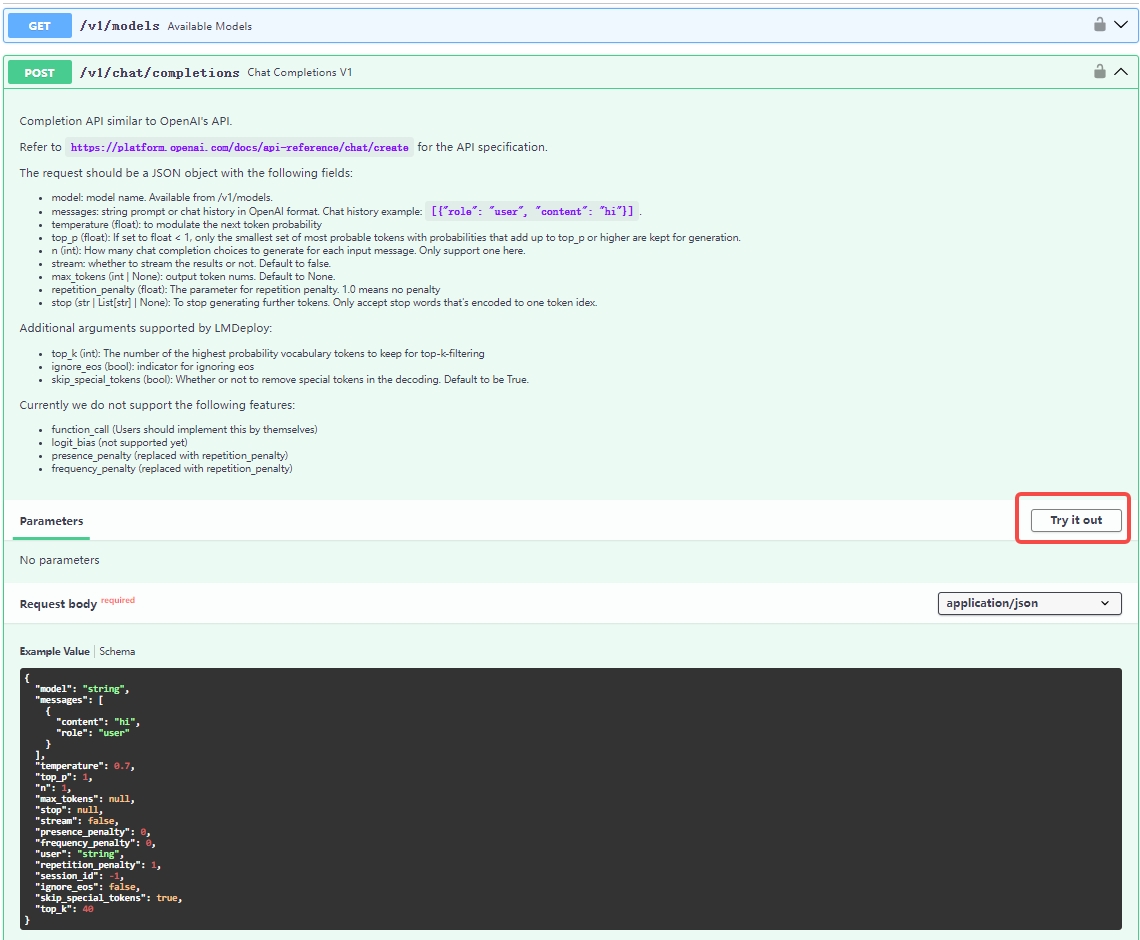

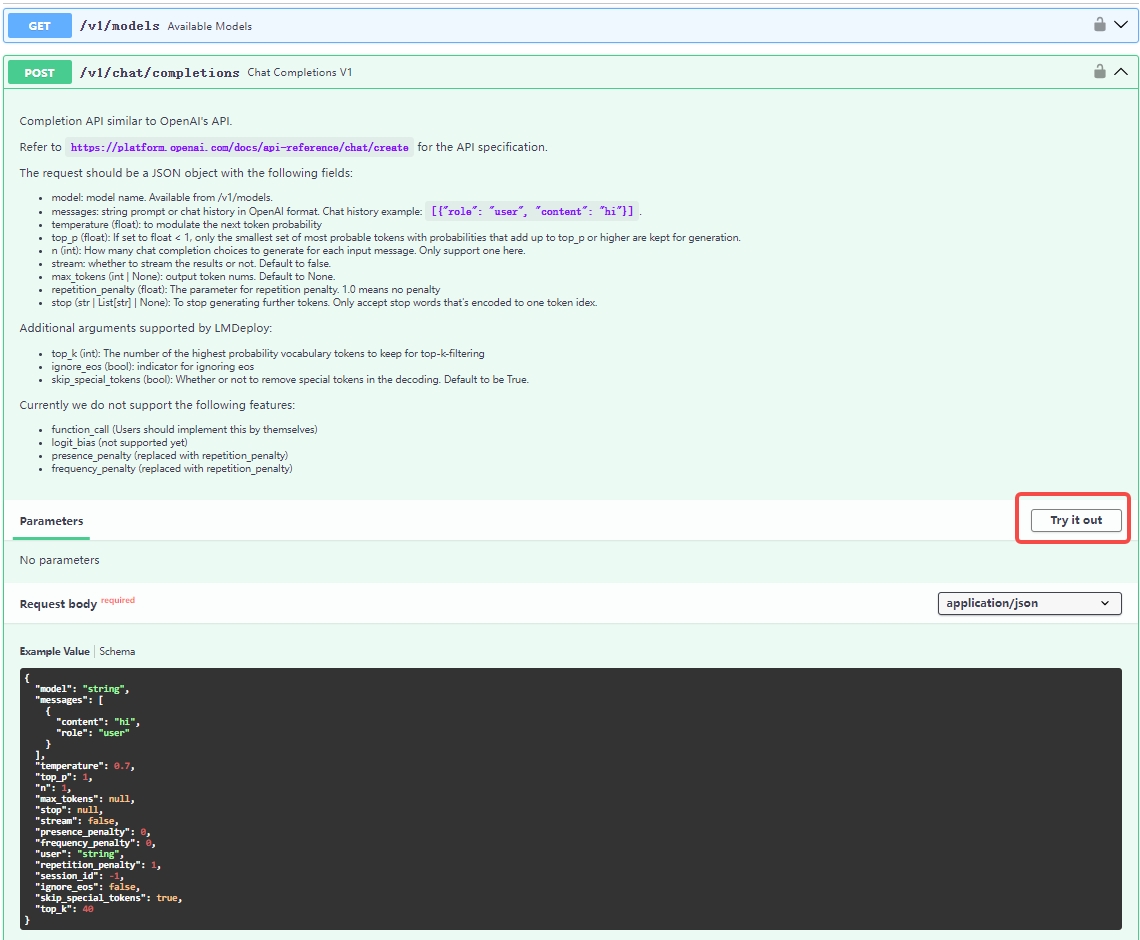

| 10 |

+

"This article primarily discusses the deployment of a single LLM model across multiple GPUs on a single node, providing a service that is compatible with the OpenAI interface, as well as the usage of the service API.\n",

|

| 11 |

+

"For the sake of convenience, we refer to this service as `api_server`. Regarding parallel services with multiple models, please refer to the guide about [Request Distribution Server](proxy_server.md).\n",

|

| 12 |

+

"\n",

|

| 13 |

+

"In the following sections, we will first introduce methods for starting the service, choosing the appropriate one based on your application scenario.\n",

|

| 14 |

+

"\n",

|

| 15 |

+

"Next, we focus on the definition of the service's RESTful API, explore the various ways to interact with the interface, and demonstrate how to try the service through the Swagger UI or LMDeploy CLI tools.\n",

|

| 16 |

+

"\n",

|

| 17 |

+

"Finally, we showcase how to integrate the service into a WebUI, providing you with a reference to easily set up a demonstration demo.\n",

|

| 18 |

+

"\n",

|

| 19 |

+

"## Launch Service\n",

|

| 20 |

+

"\n",

|

| 21 |

+

"Take the [internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat) model hosted on huggingface hub as an example, you can choose one the following methods to start the service.\n",

|

| 22 |

+

"\n",

|

| 23 |

+

"### Option 1: Launching with lmdeploy CLI\n",

|

| 24 |

+

"\n",

|

| 25 |

+

"```shell\n",

|

| 26 |

+

"lmdeploy serve api_server internlm/internlm2_5-7b-chat --server-port 23333\n",

|

| 27 |

+

"```\n",

|

| 28 |

+

"\n",

|

| 29 |

+

"The arguments of `api_server` can be viewed through the command `lmdeploy serve api_server -h`, for instance, `--tp` to set tensor parallelism, `--session-len` to specify the max length of the context window, `--cache-max-entry-count` to adjust the GPU mem ratio for k/v cache etc.\n",

|

| 30 |

+

"\n",

|

| 31 |

+

"### Option 2: Deploying with docker\n",

|

| 32 |

+

"\n",

|

| 33 |

+

"With LMDeploy [official docker image](https://hub.docker.com/r/openmmlab/lmdeploy/tags), you can run OpenAI compatible server as follows:\n",

|

| 34 |

+

"\n",

|

| 35 |

+

"```shell\n",

|

| 36 |

+

"docker run --runtime nvidia --gpus all \\\n",

|

| 37 |

+

" -v ~/.cache/huggingface:/root/.cache/huggingface \\\n",

|

| 38 |

+

" --env \"HUGGING_FACE_HUB_TOKEN=<secret>\" \\\n",

|

| 39 |

+

" -p 23333:23333 \\\n",

|

| 40 |

+

" --ipc=host \\\n",

|

| 41 |

+

" openmmlab/lmdeploy:latest \\\n",

|

| 42 |

+

" lmdeploy serve api_server internlm/internlm2_5-7b-chat\n",

|

| 43 |

+

"```\n",

|

| 44 |

+

"\n",

|

| 45 |

+

"The parameters of `api_server` are the same with that mentioned in \"[option 1](#option-1-launching-with-lmdeploy-cli)\" section\n",

|

| 46 |

+

"\n",

|

| 47 |

+

"### Option 3: Deploying to Kubernetes cluster\n",

|

| 48 |

+

"\n",

|

| 49 |

+

"Connect to a running Kubernetes cluster and deploy the internlm2_5-7b-chat model service with [kubectl](https://kubernetes.io/docs/reference/kubectl/) command-line tool (replace `<your token>` with your huggingface hub token):\n",

|

| 50 |

+

"\n",

|

| 51 |

+

"```shell\n",

|

| 52 |

+

"sed 's/{{HUGGING_FACE_HUB_TOKEN}}/<your token>/' k8s/deployment.yaml | kubectl create -f - \\\n",

|

| 53 |

+

" && kubectl create -f k8s/service.yaml\n",

|

| 54 |

+

"```\n",

|

| 55 |

+

"\n",

|

| 56 |

+

"In the example above the model data is placed on the local disk of the node (hostPath). Consider replacing it with high-availability shared storage if multiple replicas are desired, and the storage can be mounted into container using [PersistentVolume](https://kubernetes.io/docs/concepts/storage/persistent-volumes/).\n",

|

| 57 |

+

"\n",

|

| 58 |

+

"## RESTful API\n",

|

| 59 |

+

"\n",

|

| 60 |

+

"LMDeploy's RESTful API is compatible with the following three OpenAI interfaces:\n",

|

| 61 |

+

"\n",

|

| 62 |

+

"- /v1/chat/completions\n",

|

| 63 |

+

"- /v1/models\n",

|

| 64 |

+

"- /v1/completions\n",

|

| 65 |

+

"\n",

|

| 66 |

+

"Additionally, LMDeploy also defines `/v1/chat/interactive` to support interactive inference. The feature of interactive inference is that there's no need to pass the user conversation history as required by `v1/chat/completions`, since the conversation history will be cached on the server side. This method boasts excellent performance during multi-turn long context inference.\n",

|

| 67 |

+

"\n",

|

| 68 |

+

"You can overview and try out the offered RESTful APIs by the website `http://0.0.0.0:23333` as shown in the below image after launching the service successfully.\n",

|

| 69 |

+

"\n",

|

| 70 |

+

"\n",

|

| 71 |

+

"\n",

|

| 72 |

+

"Or, you can use the LMDeploy's built-in CLI tool to verify the service correctness right from the console.\n",

|

| 73 |

+

"\n",

|

| 74 |

+

"```shell\n",

|

| 75 |

+

"# restful_api_url is what printed in api_server.py, e.g. http://localhost:23333\n",

|

| 76 |

+

"lmdeploy serve api_client ${api_server_url}\n",

|

| 77 |

+

"```\n",

|

| 78 |

+

"\n",

|

| 79 |

+

"If you need to integrate the service into your own projects or products, we recommend the following approach:\n",

|

| 80 |

+

"\n",

|

| 81 |

+

"### Integrate with `OpenAI`\n",

|

| 82 |

+

"\n",

|

| 83 |

+

"Here is an example of interaction with the endpoint `v1/chat/completions` service via the openai package.\n",

|

| 84 |

+

"Before running it, please install the openai package by `pip install openai`"

|

| 85 |

+

]

|

| 86 |

+

},

|

| 87 |

+

{

|

| 88 |

+

"cell_type": "code",

|

| 89 |

+

"execution_count": 1,

|

| 90 |

+

"id": "be8a8067",

|

| 91 |

+

"metadata": {},

|

| 92 |

+

"outputs": [

|

| 93 |

+

{

|

| 94 |

+

"data": {

|

| 95 |

+

"text/plain": [

|

| 96 |

+

"0"

|

| 97 |

+

]

|

| 98 |

+

},

|

| 99 |

+

"execution_count": 1,

|

| 100 |

+

"metadata": {},

|

| 101 |

+

"output_type": "execute_result"

|

| 102 |

+

},

|

| 103 |

+

{

|

| 104 |

+

"name": "stderr",

|

| 105 |

+

"output_type": "stream",

|

| 106 |

+

"text": [

|

| 107 |

+

"Fetching 32 files: 100%|██████████████████████████████████████| 32/32 [00:00<00:00, 8631.92it/s]\n",

|

| 108 |

+

"InternLM2ForCausalLM has generative capabilities, as `prepare_inputs_for_generation` is explicitly overwritten. However, it doesn't directly inherit from `GenerationMixin`. From 👉v4.50👈 onwards, `PreTrainedModel` will NOT inherit from `GenerationMixin`, and this model will lose the ability to call `generate` and other related functions.\n",

|

| 109 |

+

" - If you're using `trust_remote_code=True`, you can get rid of this warning by loading the model with an auto class. See https://huggingface.co/docs/transformers/en/model_doc/auto#auto-classes\n",

|

| 110 |

+

" - If you are the owner of the model architecture code, please modify your model class such that it inherits from `GenerationMixin` (after `PreTrainedModel`, otherwise you'll get an exception).\n",

|

| 111 |

+

" - If you are not the owner of the model architecture class, please contact the model code owner to update it.\n",

|

| 112 |

+

"Convert to turbomind format: 0%| | 0/48 [00:00<?, ?it/s]"

|

| 113 |

+

]

|

| 114 |

+

}

|

| 115 |

+

],

|

| 116 |

+

"source": [

|

| 117 |

+

"command = '''lmdeploy serve api_server \\\n",

|

| 118 |

+

"OpenGVLab/InternVL2_5-26B-AWQ \\\n",

|

| 119 |

+

"--server-port 23333 \\\n",

|

| 120 |

+

"--model-format awq \\\n",

|

| 121 |

+

"--backend turbomind \\\n",

|

| 122 |

+

"--tp 4 \\\n",

|

| 123 |

+

"--dtype float16 \\\n",

|

| 124 |

+

"&\n",

|

| 125 |

+

"'''\n",

|

| 126 |

+

"\n",

|

| 127 |

+

"import os\n",

|

| 128 |

+

"os.system(command)"

|

| 129 |

+

]

|

| 130 |

+

},

|

| 131 |

+

{

|

| 132 |

+

"cell_type": "code",

|

| 133 |

+

"execution_count": 1,

|

| 134 |

+

"id": "063f3c9f",

|

| 135 |

+

"metadata": {},

|

| 136 |

+

"outputs": [],

|

| 137 |

+

"source": [

|

| 138 |

+

"# kill all the process having lmdeploy in the name\n",

|

| 139 |

+

"# !ps aux|grep 'lmdeploy' | awk '{print $2}'| xargs kill -9"

|

| 140 |

+

]

|

| 141 |

+

},

|

| 142 |

+

{

|

| 143 |

+

"cell_type": "code",

|

| 144 |

+

"execution_count": 1,

|

| 145 |

+

"id": "63db3f55",

|

| 146 |

+

"metadata": {},

|

| 147 |

+

"outputs": [

|

| 148 |

+

{

|

| 149 |

+

"name": "stdout",

|

| 150 |

+

"output_type": "stream",

|

| 151 |

+

"text": [

|

| 152 |

+

"Fri Dec 20 08:33:19 2024 \n",

|

| 153 |

+

"+---------------------------------------------------------------------------------------+\n",

|

| 154 |

+

"| NVIDIA-SMI 535.183.01 Driver Version: 535.183.01 CUDA Version: 12.2 |\n",

|

| 155 |

+

"|-----------------------------------------+----------------------+----------------------+\n",

|

| 156 |

+

"| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |\n",

|

| 157 |

+

"| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |\n",

|

| 158 |

+

"| | | MIG M. |\n",

|

| 159 |

+

"|=========================================+======================+======================|\n",

|

| 160 |

+

"| 0 NVIDIA A100-PCIE-40GB Off | 00000000:14:00.0 Off | 0 |\n",

|

| 161 |

+

"| N/A 32C P0 36W / 250W | 33714MiB / 40960MiB | 0% Default |\n",

|

| 162 |

+

"| | | Disabled |\n",

|

| 163 |

+

"+-----------------------------------------+----------------------+----------------------+\n",

|

| 164 |

+

"| 1 NVIDIA A100-PCIE-40GB Off | 00000000:15:00.0 Off | 0 |\n",

|

| 165 |

+

"| N/A 33C P0 38W / 250W | 33934MiB / 40960MiB | 0% Default |\n",

|

| 166 |

+

"| | | Disabled |\n",

|

| 167 |

+

"+-----------------------------------------+----------------------+----------------------+\n",

|

| 168 |

+

"| 2 NVIDIA A100-PCIE-40GB Off | 00000000:39:00.0 Off | 0 |\n",

|

| 169 |

+

"| N/A 32C P0 36W / 250W | 33934MiB / 40960MiB | 0% Default |\n",

|

| 170 |

+

"| | | Disabled |\n",

|

| 171 |

+

"+-----------------------------------------+----------------------+----------------------+\n",

|

| 172 |

+

"| 3 NVIDIA A100-PCIE-40GB Off | 00000000:3A:00.0 Off | 0 |\n",

|

| 173 |

+

"| N/A 33C P0 39W / 250W | 34604MiB / 40960MiB | 0% Default |\n",

|

| 174 |

+

"| | | Disabled |\n",

|

| 175 |

+

"+-----------------------------------------+----------------------+----------------------+\n",

|

| 176 |

+

" \n",

|

| 177 |

+

"+---------------------------------------------------------------------------------------+\n",

|

| 178 |

+

"| Processes: |\n",

|

| 179 |

+

"| GPU GI CI PID Type Process name GPU Memory |\n",

|

| 180 |

+

"| ID ID Usage |\n",

|

| 181 |

+

"|=======================================================================================|\n",

|

| 182 |

+

"+---------------------------------------------------------------------------------------+\n"

|

| 183 |

+

]

|

| 184 |

+

}

|

| 185 |

+

],

|

| 186 |

+

"source": [

|

| 187 |

+

"!nvidia-smi"

|

| 188 |

+

]

|

| 189 |

+

},

|

| 190 |

+

{

|

| 191 |

+

"cell_type": "code",

|

| 192 |

+

"execution_count": 4,

|

| 193 |

+

"id": "0d54a9a2",

|

| 194 |

+

"metadata": {},

|

| 195 |

+

"outputs": [

|

| 196 |

+

{

|

| 197 |

+

"name": "stdout",

|

| 198 |

+

"output_type": "stream",

|

| 199 |

+

"text": [

|

| 200 |

+

"INFO: 127.0.0.1:43526 - \"GET /v1/models HTTP/1.1\" 200 OK\n",

|

| 201 |

+

"INFO: 127.0.0.1:43526 - \"POST /v1/chat/completions HTTP/1.1\" 200 OK\n",

|

| 202 |

+

"ChatCompletion(id='1', choices=[Choice(finish_reason='stop', index=0, logprobs=None, message=ChatCompletionMessage(content='1. Prioritize tasks: Make a list of tasks you need to complete and prioritize them based on their importance and urgency. This will help you focus on the most critical tasks first and ensure that you complete them on time.\\n\\n2. Set realistic deadlines: Set realistic deadlines for each task based on the time required to complete it. This will help you avoid procrastination and ensure that you complete tasks on time.\\n\\n3. Use time management tools: Use time management tools like calendars, to-do lists, and time-tracking apps to help you manage your time more effectively. These tools can help you stay organized, focused, and on track with your tasks and deadlines.', refusal=None, role='assistant', function_call=None, tool_calls=None))], created=1734683216, model='OpenGVLab/InternVL2_5-26B-AWQ', object='chat.completion', service_tier=None, system_fingerprint=None, usage=CompletionUsage(completion_tokens=138, prompt_tokens=27, total_tokens=165, completion_tokens_details=None))\n"

|

| 203 |

+

]

|

| 204 |

+

}

|

| 205 |

+

],

|

| 206 |

+

"source": [

|

| 207 |

+

"from openai import OpenAI\n",

|

| 208 |

+

"\n",

|

| 209 |

+

"client = OpenAI(api_key=\"YOUR_API_KEY\", base_url=\"http://0.0.0.0:23333/v1\")\n",

|

| 210 |

+

"model_name = client.models.list().data[0].id\n",

|

| 211 |

+

"response = client.chat.completions.create(\n",

|

| 212 |

+

" model=model_name,\n",

|

| 213 |

+

" messages=[\n",

|

| 214 |

+

" {\"role\": \"system\", \"content\": \"You are a helpful assistant.\"},\n",

|

| 215 |

+

" {\"role\": \"user\", \"content\": \" provide three suggestions about time management\"},\n",

|

| 216 |

+

" ],\n",

|

| 217 |

+

" temperature=0.8,\n",

|

| 218 |

+

" top_p=0.8,\n",

|

| 219 |

+

")\n",

|

| 220 |

+

"print(response)"

|

| 221 |

+

]

|

| 222 |

+

},

|

| 223 |

+

{

|

| 224 |

+

"cell_type": "markdown",

|

| 225 |

+

"id": "6cad3dc4",

|

| 226 |

+

"metadata": {},

|

| 227 |

+

"source": [

|

| 228 |

+

"If you want to use async functions, may try the following example:"

|

| 229 |

+

]

|

| 230 |

+

},

|

| 231 |

+

{

|

| 232 |

+

"cell_type": "code",

|

| 233 |

+

"execution_count": null,

|

| 234 |

+

"id": "056d55bf",

|

| 235 |

+

"metadata": {},

|

| 236 |

+

"outputs": [],

|

| 237 |

+

"source": [

|

| 238 |

+

"import asyncio\n",

|

| 239 |

+

"from openai import AsyncOpenAI\n",

|

| 240 |

+

"\n",

|

| 241 |

+

"\n",

|

| 242 |

+

"async def main():\n",

|

| 243 |

+

" client = AsyncOpenAI(api_key=\"YOUR_API_KEY\", base_url=\"http://0.0.0.0:23333/v1\")\n",

|

| 244 |

+

" model_cards = await client.models.list()._get_page()\n",

|

| 245 |

+

" response = await client.chat.completions.create(\n",

|

| 246 |

+

" model=model_cards.data[0].id,\n",

|

| 247 |

+

" messages=[\n",

|

| 248 |

+

" {\"role\": \"system\", \"content\": \"You are a helpful assistant.\"},\n",

|

| 249 |

+

" {\n",

|

| 250 |

+

" \"role\": \"user\",\n",

|

| 251 |

+

" \"content\": \" provide three suggestions about time management\",\n",

|

| 252 |

+

" },\n",

|

| 253 |

+

" ],\n",

|

| 254 |

+

" temperature=0.8,\n",

|

| 255 |

+

" top_p=0.8,\n",

|

| 256 |

+

" )\n",

|

| 257 |

+

" print(response)\n",

|

| 258 |

+

"\n",

|

| 259 |

+

"\n",

|

| 260 |

+

"asyncio.run(main())"

|

| 261 |

+

]

|

| 262 |

+

},

|

| 263 |

+

{

|

| 264 |

+

"cell_type": "markdown",

|

| 265 |

+

"id": "1bb5033b",

|

| 266 |

+

"metadata": {},

|

| 267 |

+

"source": [

|

| 268 |

+

"You can invoke other OpenAI interfaces using similar methods. For more detailed information, please refer to the [OpenAI API guide](https://platform.openai.com/docs/guides/text-generation)\n",

|

| 269 |

+

"\n",

|

| 270 |

+

"### Integrate with lmdeploy `APIClient`\n",

|

| 271 |

+

"\n",

|

| 272 |

+

"Below are some examples demonstrating how to visit the service through `APIClient`\n",

|

| 273 |

+

"\n",

|

| 274 |

+

"If you want to use the `/v1/chat/completions` endpoint, you can try the following code:"

|

| 275 |

+

]

|

| 276 |

+

},

|

| 277 |

+

{

|

| 278 |

+

"cell_type": "code",

|

| 279 |

+

"execution_count": 6,

|

| 280 |

+

"id": "cc032c11",

|

| 281 |

+

"metadata": {},

|

| 282 |

+

"outputs": [],

|

| 283 |

+

"source": [

|

| 284 |

+

"server_ip = \"0.0.0.0\"\n",

|

| 285 |

+

"server_port = 23333"

|

| 286 |

+

]

|

| 287 |

+

},

|

| 288 |

+

{

|

| 289 |

+

"cell_type": "code",

|

| 290 |

+

"execution_count": 8,

|

| 291 |

+

"id": "85a8aca4",

|

| 292 |

+

"metadata": {},

|

| 293 |

+

"outputs": [

|

| 294 |

+

{

|

| 295 |

+

"name": "stdout",

|

| 296 |

+

"output_type": "stream",

|

| 297 |

+

"text": [

|

| 298 |

+

"INFO: 127.0.0.1:48416 - \"GET /v1/models HTTP/1.1\" 200 OK\n",

|

| 299 |

+

"INFO: 127.0.0.1:48426 - \"POST /v1/chat/completions HTTP/1.1\" 200 OK\n",

|

| 300 |

+

"{'id': '2', 'object': 'chat.completion', 'created': 1734683311, 'model': 'OpenGVLab/InternVL2_5-26B-AWQ', 'choices': [{'index': 0, 'message': {'role': 'assistant', 'content': \"Hello! It looks like you're testing out the system. How can I assist you today? If you have any questions or need help with something specific, feel free to ask!\", 'tool_calls': None}, 'logprobs': None, 'finish_reason': 'stop'}], 'usage': {'prompt_tokens': 53, 'total_tokens': 90, 'completion_tokens': 37}}\n"

|

| 301 |

+

]

|

| 302 |

+

}

|

| 303 |

+

],

|

| 304 |

+

"source": [

|

| 305 |

+

"from lmdeploy.serve.openai.api_client import APIClient\n",

|

| 306 |

+

"\n",

|

| 307 |

+

"api_client = APIClient(f\"http://{server_ip}:{server_port}\")\n",

|

| 308 |

+

"model_name = api_client.available_models[0]\n",

|

| 309 |

+

"messages = [{\"role\": \"user\", \"content\": \"Say this is a test!\"}]\n",

|

| 310 |

+

"for item in api_client.chat_completions_v1(model=model_name, messages=messages):\n",

|

| 311 |

+

" print(item)"

|

| 312 |

+

]

|

| 313 |

+

},

|

| 314 |

+

{

|

| 315 |

+

"cell_type": "markdown",

|

| 316 |

+

"id": "a3f47b52",

|

| 317 |

+

"metadata": {},

|

| 318 |

+

"source": [

|

| 319 |

+

"For the `/v1/completions` endpoint, you can try:"

|

| 320 |

+

]

|

| 321 |

+

},

|

| 322 |

+

{

|

| 323 |

+

"cell_type": "code",

|

| 324 |

+

"execution_count": 9,

|

| 325 |

+

"id": "4095a0e2",

|

| 326 |

+

"metadata": {},

|

| 327 |

+

"outputs": [

|

| 328 |

+

{

|

| 329 |

+

"name": "stdout",

|

| 330 |

+

"output_type": "stream",

|

| 331 |

+

"text": [

|

| 332 |

+

"INFO: 127.0.0.1:45650 - \"GET /v1/models HTTP/1.1\" 200 OK\n",

|

| 333 |

+

"INFO: 127.0.0.1:45660 - \"POST /v1/completions HTTP/1.1\" 200 OK\n",

|

| 334 |

+

"{'id': '3', 'object': 'text_completion', 'created': 1734683319, 'model': 'OpenGVLab/InternVL2_5-26B-AWQ', 'choices': [{'index': 0, 'text': '. I need help with a math problem. \\n\\nFind the smallest value of 2', 'logprobs': None, 'finish_reason': 'length'}], 'usage': {'prompt_tokens': 2, 'total_tokens': 19, 'completion_tokens': 17}}\n"

|

| 335 |

+

]

|

| 336 |

+

}

|

| 337 |

+

],

|

| 338 |

+

"source": [

|

| 339 |

+

"from lmdeploy.serve.openai.api_client import APIClient\n",

|

| 340 |

+

"\n",

|

| 341 |

+

"api_client = APIClient(f\"http://{server_ip}:{server_port}\")\n",

|

| 342 |

+

"model_name = api_client.available_models[0]\n",

|

| 343 |

+

"for item in api_client.completions_v1(model=model_name, prompt=\"hi\"):\n",

|

| 344 |

+

" print(item)"

|

| 345 |

+

]

|

| 346 |

+

},

|

| 347 |

+

{

|

| 348 |

+

"cell_type": "markdown",

|

| 349 |

+

"id": "d7ac9e5f",

|

| 350 |

+

"metadata": {},

|

| 351 |

+

"source": [

|

| 352 |

+

"As for `/v1/chat/interactive`,we disable the feature by default. Please open it by setting `interactive_mode = True`. If you don't, it falls back to openai compatible interfaces.\n",

|

| 353 |

+

"\n",

|

| 354 |

+

"Keep in mind that `session_id` indicates an identical sequence and all requests belonging to the same sequence must share the same `session_id`.\n",

|

| 355 |

+

"For instance, in a sequence with 10 rounds of chatting requests, the `session_id` in each request should be the same."

|

| 356 |

+

]

|

| 357 |

+

},

|

| 358 |

+

{

|

| 359 |

+

"cell_type": "code",

|

| 360 |

+

"execution_count": 10,

|

| 361 |

+

"id": "4b7c4695",

|

| 362 |

+

"metadata": {},

|

| 363 |

+

"outputs": [

|

| 364 |

+

{

|

| 365 |

+

"name": "stdout",

|

| 366 |

+

"output_type": "stream",

|

| 367 |

+

"text": [

|

| 368 |

+

"INFO: 127.0.0.1:52202 - \"POST /v1/chat/interactive HTTP/1.1\" 200 OK\n",

|

| 369 |

+

"{'text': \"Hello! I'm an AI assistant, here to help you with any questions or tasks you have. How can I assist you today?\", 'tokens': 28, 'input_tokens': 54, 'history_tokens': 0, 'finish_reason': 'stop'}\n",

|

| 370 |

+

"INFO: 127.0.0.1:52218 - \"POST /v1/chat/interactive HTTP/1.1\" 200 OK\n",

|

| 371 |

+

"{'text': 'I was developed by SenseTime, a leading artificial intelligence company. SenseTime focuses on developing advanced AI technologies and applications across various industries. If you have any specific questions or need assistance with something, feel free to ask!', 'tokens': 45, 'input_tokens': 14, 'history_tokens': 82, 'finish_reason': 'stop'}\n",

|

| 372 |

+

"INFO: 127.0.0.1:52228 - \"POST /v1/chat/interactive HTTP/1.1\" 200 OK\n",

|

| 373 |

+

"{'text': \"SenseTime is a prominent artificial intelligence company based in China, known for its cutting-edge research and development in AI technologies. Here are some key points about SenseTime:\\n\\n1. **Founding and Growth**:\\n - SenseTime was founded in 2014 and has since grown to become one of the leading AI companies globally.\\n - It has a significant presence in China and has expanded its operations internationally.\\n\\n2. **Research and Development**:\\n - SenseTime is at the forefront of AI research, focusing on areas such as computer vision, deep learning, and natural language processing.\\n - The company has developed various AI applications, including facial recognition, augmented reality, and autonomous driving technologies.\\n\\n3. **Industry Applications**:\\n - SenseTime's AI technologies are applied across various industries, including healthcare, education, finance, and entertainment.\\n - For example, in healthcare, their AI solutions help in medical imaging and diagnostics.\\n\\n4. **Partnerships and Collaborations**:\\n - SenseTime collaborates with numerous academic institutions, research labs, and industry partners to advance AI technologies.\\n - They have partnerships with major tech companies and organizations to integrate their AI solutions into broader ecosystems.\\n\\n5. **Innovation and Impact**:\\n - SenseTime is committed to driving innovation in AI and making it accessible to a wide range of users.\\n - Their work has a significant impact on improving efficiency, accuracy, and decision-making in various fields.\\n\\n6. **Awards and Recognition**:\\n - SenseTime has received numerous awards and recognitions for its contributions to AI and technology.\\n - The company is often cited in media and industry reports for its advancements and leadership in AI.\\n\\nIf you have any specific questions about SenseTime or need more detailed information, feel free to ask!\", 'tokens': 365, 'input_tokens': 16, 'history_tokens': 141, 'finish_reason': 'stop'}\n",

|

| 374 |

+

"INFO: 127.0.0.1:52230 - \"POST /v1/chat/interactive HTTP/1.1\" 200 OK\n",

|

| 375 |

+

"{'text': \"Certainly! Here's a summary of our conversation so far:\\n\\n1. **Introduction**:\\n - I am an AI assistant developed by SenseTime, a leading AI company.\\n\\n2. **About SenseTime**:\\n - SenseTime is a prominent AI company based in China, founded in 2014.\\n - It focuses on advanced AI technologies, including computer vision, deep learning, and natural language processing.\\n - The company develops AI applications for various industries such as healthcare, education, finance, and entertainment.\\n - SenseTime collaborates with academic institutions, research labs, and industry partners to advance AI technologies.\\n - They have received numerous awards and recognitions for their contributions to AI and technology.\\n\\nIf you need more specific information or have any other questions, feel free to ask!\", 'tokens': 163, 'input_tokens': 20, 'history_tokens': 522, 'finish_reason': 'stop'}\n"

|

| 376 |

+

]

|

| 377 |

+

}

|

| 378 |

+

],

|

| 379 |

+

"source": [

|

| 380 |

+

"from lmdeploy.serve.openai.api_client import APIClient\n",

|

| 381 |

+

"\n",

|

| 382 |

+

"api_client = APIClient(f\"http://{server_ip}:{server_port}\")\n",

|

| 383 |

+

"messages = [\n",

|

| 384 |

+

" \"hi, what's your name?\",\n",

|

| 385 |

+

" \"who developed you?\",\n",

|

| 386 |

+

" \"Tell me more about your developers\",\n",

|

| 387 |

+

" \"Summarize the information we've talked so far\",\n",

|

| 388 |

+

"]\n",

|

| 389 |

+

"for message in messages:\n",

|

| 390 |

+

" for item in api_client.chat_interactive_v1(\n",

|

| 391 |

+

" prompt=message, session_id=1, interactive_mode=True, stream=False\n",

|

| 392 |

+

" ):\n",

|

| 393 |

+

" print(item)"

|

| 394 |

+

]

|

| 395 |

+

},

|

| 396 |

+

{

|

| 397 |

+

"cell_type": "markdown",

|

| 398 |

+

"id": "278265b6",

|

| 399 |

+

"metadata": {},

|

| 400 |

+

"source": [

|

| 401 |

+

"### Tools\n",

|

| 402 |

+

"\n",

|

| 403 |

+

"May refer to [api_server_tools](./api_server_tools.md).\n",

|

| 404 |

+

"\n",

|

| 405 |

+

"### Integrate with Java/Golang/Rust\n",

|

| 406 |

+

"\n",

|

| 407 |

+

"May use [openapi-generator-cli](https://github.com/OpenAPITools/openapi-generator-cli) to convert `http://{server_ip}:{server_port}/openapi.json` to java/rust/golang client.\n",

|

| 408 |

+

"Here is an example:\n",

|

| 409 |

+

"\n",

|

| 410 |

+

"```shell\n",

|

| 411 |

+

"$ docker run -it --rm -v ${PWD}:/local openapitools/openapi-generator-cli generate -i /local/openapi.json -g rust -o /local/rust\n",

|

| 412 |

+

"\n",

|

| 413 |

+

"$ ls rust/*\n",

|

| 414 |

+

"rust/Cargo.toml rust/git_push.sh rust/README.md\n",

|

| 415 |

+

"\n",

|

| 416 |

+

"rust/docs:\n",

|

| 417 |

+

"ChatCompletionRequest.md EmbeddingsRequest.md HttpValidationError.md LocationInner.md Prompt.md\n",

|

| 418 |

+

"DefaultApi.md GenerateRequest.md Input.md Messages.md ValidationError.md\n",

|

| 419 |

+

"\n",

|

| 420 |

+

"rust/src:\n",

|

| 421 |

+

"apis lib.rs models\n",

|

| 422 |

+

"```\n",

|

| 423 |

+

"\n",

|

| 424 |

+

"### Integrate with cURL\n",

|

| 425 |

+

"\n",

|

| 426 |

+

"cURL is a tool for observing the output of the RESTful APIs.\n",

|

| 427 |

+

"\n",

|

| 428 |

+

"- list served models `v1/models`"

|

| 429 |

+

]

|

| 430 |

+

},

|

| 431 |

+

{

|

| 432 |

+

"cell_type": "code",

|

| 433 |

+

"execution_count": 12,

|

| 434 |

+

"id": "a1411963",

|

| 435 |

+

"metadata": {},

|

| 436 |

+

"outputs": [

|

| 437 |

+

{

|

| 438 |

+

"name": "stdout",

|

| 439 |

+

"output_type": "stream",

|

| 440 |

+

"text": [

|

| 441 |

+

"INFO: 127.0.0.1:41826 - \"GET /v1/models HTTP/1.1\" 200 OK\n",

|

| 442 |

+

"{\"object\":\"list\",\"data\":[{\"id\":\"OpenGVLab/InternVL2_5-26B-AWQ\",\"object\":\"model\",\"created\":1734683385,\"owned_by\":\"lmdeploy\",\"root\":\"OpenGVLab/InternVL2_5-26B-AWQ\",\"parent\":null,\"permission\":[{\"id\":\"modelperm-iFPz3naHoQtF4of9cmFLoL\",\"object\":\"model_permission\",\"created\":1734683385,\"allow_create_engine\":false,\"allow_sampling\":true,\"allow_logprobs\":true,\"allow_search_indices\":true,\"allow_view\":true,\"allow_fine_tuning\":false,\"organization\":\"*\",\"group\":null,\"is_blocking\":false}]}]}"

|

| 443 |

+

]

|

| 444 |

+

}

|

| 445 |

+

],

|

| 446 |

+

"source": [

|

| 447 |

+

"# %%bash\n",

|

| 448 |

+

"!curl http://{server_ip}:{server_port}/v1/models"

|

| 449 |

+

]

|

| 450 |

+

},

|

| 451 |

+

{

|

| 452 |

+

"cell_type": "markdown",

|

| 453 |

+

"id": "ce68340d",

|

| 454 |

+

"metadata": {},

|

| 455 |

+

"source": [

|

| 456 |

+

"- chat `v1/chat/completions`"

|

| 457 |

+

]

|

| 458 |

+

},

|

| 459 |

+

{

|

| 460 |

+

"cell_type": "code",

|

| 461 |

+

"execution_count": null,

|

| 462 |

+

"id": "8bc660df",

|

| 463 |

+

"metadata": {},

|

| 464 |

+

"outputs": [],

|

| 465 |

+

"source": [

|

| 466 |

+

"%%bash\n",

|

| 467 |

+

"curl http://{server_ip}:{server_port}/v1/chat/completions \\\n",

|

| 468 |

+

" -H \"Content-Type: application/json\" \\\n",

|

| 469 |

+

" -d '{\n",

|

| 470 |

+

" \"model\": \"internlm-chat-7b\",\n",

|

| 471 |

+

" \"messages\": [{\"role\": \"user\", \"content\": \"Hello! How are you?\"}]\n",

|

| 472 |

+

" }'"

|

| 473 |

+

]

|

| 474 |

+

},

|

| 475 |

+

{

|

| 476 |

+

"cell_type": "markdown",

|

| 477 |

+

"id": "1d5552dd",

|

| 478 |

+

"metadata": {},

|

| 479 |

+

"source": [

|

| 480 |

+

"- text completions `v1/completions`\n",

|

| 481 |

+

"\n",

|

| 482 |

+

"```shell\n",

|

| 483 |

+

"curl http://{server_ip}:{server_port}/v1/completions \\\n",

|

| 484 |

+

" -H 'Content-Type: application/json' \\\n",

|

| 485 |

+

" -d '{\n",

|

| 486 |

+

" \"model\": \"llama\",\n",

|

| 487 |

+

" \"prompt\": \"two steps to build a house:\"\n",

|

| 488 |

+

"}'\n",

|

| 489 |

+

"```\n",

|

| 490 |

+

"\n",

|

| 491 |

+

"- interactive chat `v1/chat/interactive`"

|

| 492 |

+

]

|

| 493 |

+

},

|

| 494 |

+

{

|

| 495 |

+

"cell_type": "code",

|

| 496 |

+

"execution_count": null,

|

| 497 |

+

"id": "082c7709",

|

| 498 |

+

"metadata": {},

|

| 499 |

+

"outputs": [],

|

| 500 |

+

"source": [

|

| 501 |

+

"%%bash\n",

|

| 502 |

+

"curl http://{server_ip}:{server_port}/v1/chat/interactive \\\n",

|

| 503 |

+

" -H \"Content-Type: application/json\" \\\n",

|

| 504 |

+

" -d '{\n",

|

| 505 |

+

" \"prompt\": \"Hello! How are you?\",\n",

|

| 506 |

+

" \"session_id\": 1,\n",

|

| 507 |

+

" \"interactive_mode\": true\n",

|

| 508 |

+

" }'"

|

| 509 |

+

]

|

| 510 |

+

},

|

| 511 |

+

{

|

| 512 |

+

"cell_type": "markdown",

|

| 513 |

+

"id": "ec3c5eca",

|

| 514 |

+

"metadata": {},

|

| 515 |

+

"source": [

|

| 516 |

+

"## Integrate with WebUI\n",

|

| 517 |

+

"\n",

|

| 518 |

+

"```shell\n",

|

| 519 |

+

"# api_server_url is what printed in api_server.py, e.g. http://localhost:23333\n",

|

| 520 |

+

"# server_ip and server_port here are for gradio ui\n",

|

| 521 |

+

"# example: lmdeploy serve gradio http://localhost:23333 --server-name localhost --server-port 6006\n",

|

| 522 |

+

"lmdeploy serve gradio api_server_url --server-name ${gradio_ui_ip} --server-port ${gradio_ui_port}\n",

|

| 523 |

+

"```\n",

|

| 524 |

+

"\n",

|

| 525 |

+

"## FAQ\n",

|

| 526 |

+

"\n",

|

| 527 |

+

"1. When user got `\"finish_reason\":\"length\"`, it means the session is too long to be continued. The session length can be\n",

|

| 528 |

+

" modified by passing `--session_len` to api_server.\n",

|

| 529 |

+

"\n",

|

| 530 |

+

"2. When OOM appeared at the server side, please reduce the `cache_max_entry_count` of `backend_config` when launching the service.\n",

|

| 531 |

+

"\n",

|

| 532 |

+

"3. When the request with the same `session_id` to `/v1/chat/interactive` got a empty return value and a negative `tokens`, please consider setting `interactive_mode=false` to restart the session.\n",

|

| 533 |

+

"\n",

|

| 534 |

+

"4. The `/v1/chat/interactive` api disables engaging in multiple rounds of conversation by default. The input argument `prompt` consists of either single strings or entire chat histories.\n",

|

| 535 |

+

"\n",

|

| 536 |

+

"5. Regarding the stop words, we only support characters that encode into a single index. Furthermore, there may be multiple indexes that decode into results containing the stop word. In such cases, if the number of these indexes is too large, we will only use the index encoded by the tokenizer. If you want use a stop symbol that encodes into multiple indexes, you may consider performing string matching on the streaming client side. Once a successful match is found, you can then break out of the streaming loop.\n",

|

| 537 |

+

"\n",

|

| 538 |

+

"6. To customize a chat template, please refer to [chat_template.md](../advance/chat_template.md)."

|

| 539 |

+

]

|

| 540 |

+

}

|

| 541 |

+

],

|

| 542 |

+

"metadata": {

|

| 543 |

+

"jupytext": {

|

| 544 |

+

"cell_metadata_filter": "-all",

|

| 545 |

+

"main_language": "python",

|

| 546 |

+

"notebook_metadata_filter": "-all"

|

| 547 |

+

},

|

| 548 |

+

"kernelspec": {

|

| 549 |

+

"display_name": "lmdeploy",

|

| 550 |

+

"language": "python",

|

| 551 |

+

"name": "python3"

|

| 552 |

+

},

|

| 553 |

+

"language_info": {

|

| 554 |

+

"codemirror_mode": {

|

| 555 |

+

"name": "ipython",

|

| 556 |

+

"version": 3

|

| 557 |

+

},

|

| 558 |

+

"file_extension": ".py",

|

| 559 |

+

"mimetype": "text/x-python",

|

| 560 |

+

"name": "python",

|

| 561 |

+

"nbconvert_exporter": "python",

|

| 562 |

+

"pygments_lexer": "ipython3",

|

| 563 |

+

"version": "3.8.19"

|

| 564 |

+

}

|

| 565 |

+

},

|

| 566 |

+

"nbformat": 4,

|

| 567 |

+

"nbformat_minor": 5

|

| 568 |

+

}

|

a_mllm_notebooks/lmdeploy/api_server.md

ADDED

|

@@ -0,0 +1,265 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# OpenAI Compatible Server

|

| 2 |

+

|

| 3 |

+

This article primarily discusses the deployment of a single LLM model across multiple GPUs on a single node, providing a service that is compatible with the OpenAI interface, as well as the usage of the service API.

|

| 4 |

+

For the sake of convenience, we refer to this service as `api_server`. Regarding parallel services with multiple models, please refer to the guide about [Request Distribution Server](proxy_server.md).

|

| 5 |

+

|

| 6 |

+

In the following sections, we will first introduce methods for starting the service, choosing the appropriate one based on your application scenario.

|

| 7 |

+

|

| 8 |

+

Next, we focus on the definition of the service's RESTful API, explore the various ways to interact with the interface, and demonstrate how to try the service through the Swagger UI or LMDeploy CLI tools.

|

| 9 |

+

|

| 10 |

+

Finally, we showcase how to integrate the service into a WebUI, providing you with a reference to easily set up a demonstration demo.

|

| 11 |

+

|

| 12 |

+

## Launch Service

|

| 13 |

+

|

| 14 |

+

Take the [internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat) model hosted on huggingface hub as an example, you can choose one the following methods to start the service.

|

| 15 |

+

|

| 16 |

+

### Option 1: Launching with lmdeploy CLI

|

| 17 |

+

|

| 18 |

+

```shell

|

| 19 |

+

lmdeploy serve api_server internlm/internlm2_5-7b-chat --server-port 23333

|

| 20 |

+

```

|

| 21 |

+

|

| 22 |

+

The arguments of `api_server` can be viewed through the command `lmdeploy serve api_server -h`, for instance, `--tp` to set tensor parallelism, `--session-len` to specify the max length of the context window, `--cache-max-entry-count` to adjust the GPU mem ratio for k/v cache etc.

|

| 23 |

+

|

| 24 |

+

### Option 2: Deploying with docker

|

| 25 |

+

|

| 26 |

+

With LMDeploy [official docker image](https://hub.docker.com/r/openmmlab/lmdeploy/tags), you can run OpenAI compatible server as follows:

|

| 27 |

+

|

| 28 |

+

```shell

|

| 29 |

+

docker run --runtime nvidia --gpus all \

|

| 30 |

+

-v ~/.cache/huggingface:/root/.cache/huggingface \

|

| 31 |

+

--env "HUGGING_FACE_HUB_TOKEN=<secret>" \

|

| 32 |

+

-p 23333:23333 \

|

| 33 |

+

--ipc=host \

|

| 34 |

+

openmmlab/lmdeploy:latest \

|

| 35 |

+

lmdeploy serve api_server internlm/internlm2_5-7b-chat

|

| 36 |

+

```

|

| 37 |

+

|

| 38 |

+