Upload folder using huggingface_hub

- .gitattributes +1 -0

- README.md +110 -20

- chat_template.jinja +33 -0

- config.json +77 -0

- generation_config.json +15 -0

- model-00001-of-00002.safetensors +3 -0

- model-00002-of-00002.safetensors +3 -0

- model.safetensors.index.json +0 -0

- notebook.ipynb +0 -0

- preprocessor_config.json +11 -0

- quantization_config.json +0 -0

- tokenizer.json +3 -0

- tokenizer_config.json +218 -0

- video_preprocessor_config.json +11 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|
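The new `.gitattributes` line routes `tokenizer.json` through Git LFS. The sketch below checks which file names the patterns visible in this hunk would catch. It uses Python's `fnmatch` as a stand-in for gitattributes matching, which is an approximation, and the helper name `is_lfs_tracked` is hypothetical.

```python
from fnmatch import fnmatchcase

# Patterns visible in this hunk only; the real file has more entries above line 33.
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "tokenizer.json"]


def is_lfs_tracked(filename: str) -> bool:
    """Return True if the bare file name matches any pattern.

    fnmatchcase only approximates gitattributes glob semantics.
    """
    return any(fnmatchcase(filename, pattern) for pattern in LFS_PATTERNS)


for name in ["tokenizer.json", "config.json", "events.out.tfevents.123"]:
    print(name, is_lfs_tracked(name))
```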

README.md

CHANGED

|

@@ -1,20 +1,110 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: mit

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 1 |

+

---

|

| 2 |

+

license: mit

|

| 3 |

+

language:

|

| 4 |

+

- en

|

| 5 |

+

- zh

|

| 6 |

+

base_model:

|

| 7 |

+

- zai-org/GLM-4-9B-0414

|

| 8 |

+

pipeline_tag: image-text-to-text

|

| 9 |

+

library_name: transformers

|

| 10 |

+

tags:

|

| 11 |

+

- reasoning

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

# GLM-4.1V-9B-Thinking

|

| 15 |

+

|

| 16 |

+

<div align="center">

|

| 17 |

+

<img src="https://raw.githubusercontent.com/zai-org/GLM-4.1V-Thinking/99c5eb6563236f0ff43605d91d107544da9863b2/resources/logo.svg" width="40%"/>

|

| 18 |

+

</div>

|

| 19 |

+

<p align="center">

|

| 20 |

+

📖 View the GLM-4.1V-9B-Thinking <a href="https://arxiv.org/abs/2507.01006" target="_blank">paper</a>.

|

| 21 |

+

<br>

|

| 22 |

+

📍 Use the GLM-4.1V-9B-Thinking API on the <a href="https://www.bigmodel.cn/dev/api/visual-reasoning-model/GLM-4.1V-Thinking">Zhipu Foundation Model Open Platform</a>.

|

| 23 |

+

</p>

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

## Model Introduction

|

| 27 |

+

|

| 28 |

+

Vision-Language Models (VLMs) have become foundational components of intelligent systems. As real-world AI tasks grow

|

| 29 |

+

increasingly complex, VLMs must evolve beyond basic multimodal perception to enhance their reasoning capabilities in

|

| 30 |

+

complex tasks. This involves improving accuracy, comprehensiveness, and intelligence, enabling applications such as

|

| 31 |

+

complex problem solving, long-context understanding, and multimodal agents.

|

| 32 |

+

|

| 33 |

+

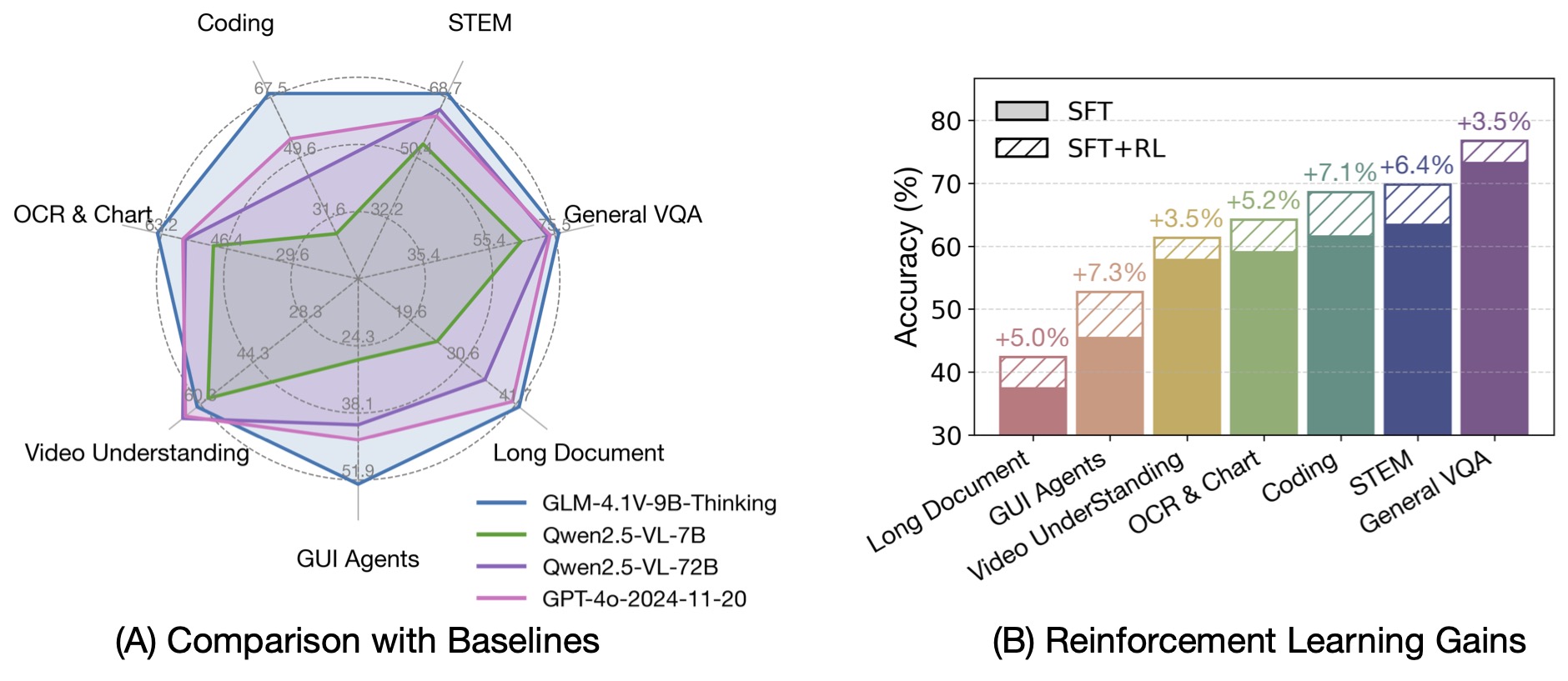

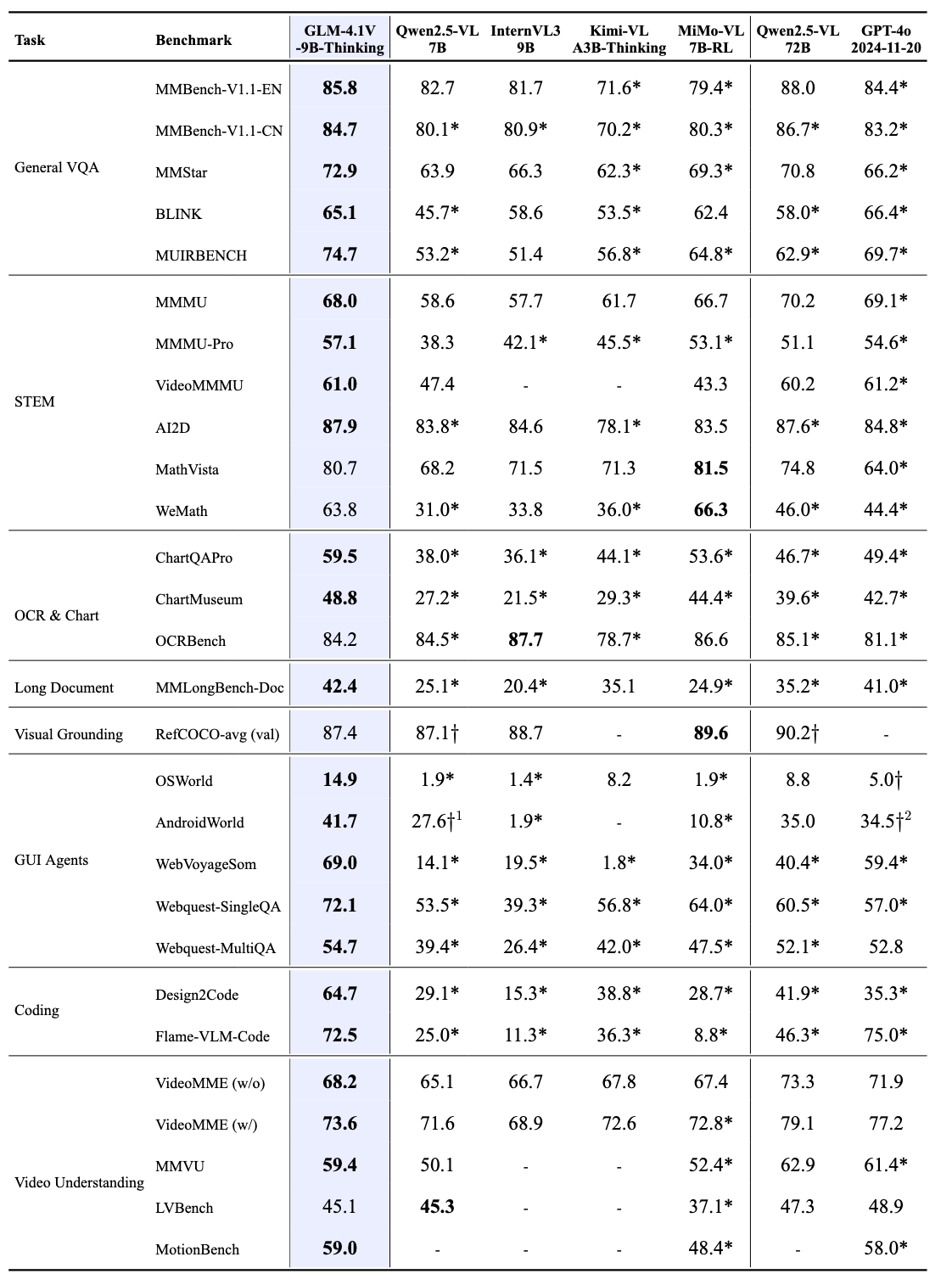

Based on the [GLM-4-9B-0414](https://github.com/zai-org/GLM-4) foundation model, we present the new open-source VLM

|

| 34 |

+

**GLM-4.1V-9B-Thinking**, designed to explore the upper limits of reasoning in vision-language models. By introducing

|

| 35 |

+

a "thinking paradigm" and leveraging reinforcement learning, the model significantly enhances its capabilities. It

|

| 36 |

+

achieves state-of-the-art performance among 10B-parameter VLMs, matching or even surpassing the 72B-parameter

|

| 37 |

+

Qwen-2.5-VL-72B on 18 benchmark tasks. We are also open-sourcing the base model GLM-4.1V-9B-Base to

|

| 38 |

+

support further research into the boundaries of VLM capabilities.

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

Compared to the previous generation models CogVLM2 and the GLM-4V series, **GLM-4.1V-Thinking** offers the

|

| 43 |

+

following improvements:

|

| 44 |

+

|

| 45 |

+

1. The first reasoning-focused model in the series, achieving world-leading performance not only in mathematics but also

|

| 46 |

+

across various sub-domains.

|

| 47 |

+

2. Supports **64k** context length.

|

| 48 |

+

3. Handles **arbitrary aspect ratios** and up to **4K** image resolution.

|

| 49 |

+

4. Provides an open-source version with **bilingual Chinese and English** support.

|

| 50 |

+

|

| 51 |

+

## Benchmark Performance

|

| 52 |

+

|

| 53 |

+

By incorporating the Chain-of-Thought reasoning paradigm, GLM-4.1V-9B-Thinking significantly improves answer accuracy,

|

| 54 |

+

richness, and interpretability. It comprehensively surpasses traditional non-reasoning visual models.

|

| 55 |

+

Out of 28 benchmark tasks, it achieved the best performance among 10B-level models on 23 tasks,

|

| 56 |

+

and even outperformed the 72B-parameter Qwen-2.5-VL-72B on 18 tasks.

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

## Quick Inference

|

| 61 |

+

|

| 62 |

+

This is a simple example of running single-image inference using the `transformers` library.

|

| 63 |

+

First, install a recent release of the `transformers` library (4.57.1 or later):

|

| 64 |

+

|

| 65 |

+

```

|

| 66 |

+

pip install "transformers>=4.57.1"

|

| 67 |

+

```

|

| 68 |

+

|

| 69 |

+

Then, run the following code:

|

| 70 |

+

|

| 71 |

+

```python

|

| 72 |

+

from transformers import AutoProcessor, Glm4vForConditionalGeneration

|

| 73 |

+

import torch

|

| 74 |

+

|

| 75 |

+

MODEL_PATH = "zai-org/GLM-4.1V-9B-Thinking"

|

| 76 |

+

messages = [

|

| 77 |

+

{

|

| 78 |

+

"role": "user",

|

| 79 |

+

"content": [

|

| 80 |

+

{

|

| 81 |

+

"type": "image",

|

| 82 |

+

"url": "https://upload.wikimedia.org/wikipedia/commons/f/fa/Grayscale_8bits_palette_sample_image.png"

|

| 83 |

+

},

|

| 84 |

+

{

|

| 85 |

+

"type": "text",

|

| 86 |

+

"text": "describe this image"

|

| 87 |

+

}

|

| 88 |

+

],

|

| 89 |

+

}

|

| 90 |

+

]

|

| 91 |

+

processor = AutoProcessor.from_pretrained(MODEL_PATH, use_fast=True)

|

| 92 |

+

model = Glm4vForConditionalGeneration.from_pretrained(

|

| 93 |

+

pretrained_model_name_or_path=MODEL_PATH,

|

| 94 |

+

torch_dtype=torch.bfloat16,

|

| 95 |

+

device_map="auto",

|

| 96 |

+

)

|

| 97 |

+

inputs = processor.apply_chat_template(

|

| 98 |

+

messages,

|

| 99 |

+

tokenize=True,

|

| 100 |

+

add_generation_prompt=True,

|

| 101 |

+

return_dict=True,

|

| 102 |

+

return_tensors="pt"

|

| 103 |

+

).to(model.device)

|

| 104 |

+

generated_ids = model.generate(**inputs, max_new_tokens=8192)

|

| 105 |

+

output_text = processor.decode(generated_ids[0][inputs["input_ids"].shape[1]:], skip_special_tokens=False)

|

| 106 |

+

print(output_text)

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

For video reasoning, web demo deployment, and more code, please check

|

| 110 |

+

our [GitHub](https://github.com/zai-org/GLM-V).

|

chat_template.jinja

ADDED

|

@@ -0,0 +1,33 @@

|

| 1 |

+

[gMASK]<sop>

|

| 2 |

+

{%- for msg in messages %}

|

| 3 |

+

{%- if msg.role == 'system' %}

|

| 4 |

+

<|system|>

|

| 5 |

+

{{ msg.content }}

|

| 6 |

+

{%- elif msg.role == 'user' %}

|

| 7 |

+

<|user|>{{ '\n' }}

|

| 8 |

+

|

| 9 |

+

{%- if msg.content is string %}

|

| 10 |

+

{{ msg.content }}

|

| 11 |

+

{%- else %}

|

| 12 |

+

{%- for item in msg.content %}

|

| 13 |

+

{%- if item.type == 'video' or 'video' in item %}

|

| 14 |

+

<|begin_of_video|><|video|><|end_of_video|>

|

| 15 |

+

{%- elif item.type == 'image' or 'image' in item %}

|

| 16 |

+

<|begin_of_image|><|image|><|end_of_image|>

|

| 17 |

+

{%- elif item.type == 'text' %}

|

| 18 |

+

{{ item.text }}

|

| 19 |

+

{%- endif %}

|

| 20 |

+

{%- endfor %}

|

| 21 |

+

{%- endif %}

|

| 22 |

+

{%- elif msg.role == 'assistant' %}

|

| 23 |

+

{%- if msg.metadata %}

|

| 24 |

+

<|assistant|>{{ msg.metadata }}

|

| 25 |

+

{{ msg.content }}

|

| 26 |

+

{%- else %}

|

| 27 |

+

<|assistant|>

|

| 28 |

+

{{ msg.content }}

|

| 29 |

+

{%- endif %}

|

| 30 |

+

{%- endif %}

|

| 31 |

+

{%- endfor %}

|

| 32 |

+

{% if add_generation_prompt %}<|assistant|>

|

| 33 |

+

{% endif %}

|
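To make the prompt layout in `chat_template.jinja` easier to follow, here is a plain-Python sketch of what the template appears to emit. The function `render_prompt` is hypothetical and not part of any library. The whitespace between segments is an assumption: the diff view has lost the template's indentation, and the sketch assumes the Jinja environment trims block newlines.

```python
def render_prompt(messages, add_generation_prompt=True):
    """Approximate the chat template above in plain Python (whitespace is assumed)."""
    parts = ["[gMASK]<sop>"]
    for msg in messages:
        role, content = msg["role"], msg["content"]
        if role == "system":
            parts.append("<|system|>\n" + content)
        elif role == "user":
            parts.append("<|user|>\n")
            if isinstance(content, str):
                parts.append(content)
            else:
                for item in content:
                    kind = item.get("type")
                    # Same branch order as the template: video, then image, then text.
                    if kind == "video" or "video" in item:
                        parts.append("<|begin_of_video|><|video|><|end_of_video|>")
                    elif kind == "image" or "image" in item:
                        parts.append("<|begin_of_image|><|image|><|end_of_image|>")
                    elif kind == "text":
                        parts.append(item["text"])
        elif role == "assistant":
            metadata = msg.get("metadata")
            if metadata:
                parts.append("<|assistant|>" + str(metadata) + "\n" + content)
            else:
                parts.append("<|assistant|>\n" + content)
    if add_generation_prompt:
        parts.append("<|assistant|>\n")
    return "".join(parts)


example = [
    {
        "role": "user",
        "content": [
            {"type": "image", "url": "https://example.com/cat.png"},  # placeholder URL
            {"type": "text", "text": "describe this image"},
        ],
    }
]
print(repr(render_prompt(example)))
```

For real use, `processor.apply_chat_template` remains the reference; this sketch only helps when reading the template by eye.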

config.json

ADDED

|

@@ -0,0 +1,77 @@

|

| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"Glm4vForConditionalGeneration"

|

| 4 |

+

],

|

| 5 |

+

"model_type": "glm4v",

|

| 6 |

+

"image_start_token_id": 151339,

|

| 7 |

+

"image_end_token_id": 151340,

|

| 8 |

+

"video_start_token_id": 151341,

|

| 9 |

+

"video_end_token_id": 151342,

|

| 10 |

+

"image_token_id": 151343,

|

| 11 |

+

"video_token_id": 151344,

|

| 12 |

+

"tie_word_embeddings": false,

|

| 13 |

+

"transformers_version": "4.57.1",

|

| 14 |

+

"text_config": {

|

| 15 |

+

"model_type": "glm4v_text",

|

| 16 |

+

"attention_bias": true,

|

| 17 |

+

"attention_dropout": 0.0,

|

| 18 |

+

"pad_token_id": 151329,

|

| 19 |

+

"eos_token_id": [

|

| 20 |

+

151329,

|

| 21 |

+

151336,

|

| 22 |

+

151338,

|

| 23 |

+

151348

|

| 24 |

+

],

|

| 25 |

+

"hidden_act": "silu",

|

| 26 |

+

"hidden_size": 4096,

|

| 27 |

+

"initializer_range": 0.02,

|

| 28 |

+

"intermediate_size": 13696,

|

| 29 |

+

"max_position_embeddings": 65536,

|

| 30 |

+

"num_attention_heads": 32,

|

| 31 |

+

"num_hidden_layers": 40,

|

| 32 |

+

"num_key_value_heads": 2,

|

| 33 |

+

"rms_norm_eps": 1e-05,

|

| 34 |

+

"dtype": "bfloat16",

|

| 35 |

+

"use_cache": true,

|

| 36 |

+

"vocab_size": 151552,

|

| 37 |

+

"partial_rotary_factor": 0.5,

|

| 38 |

+

"rope_theta": 10000,

|

| 39 |

+

"rope_scaling": {

|

| 40 |

+

"rope_type": "default",

|

| 41 |

+

"mrope_section": [

|

| 42 |

+

8,

|

| 43 |

+

12,

|

| 44 |

+

12

|

| 45 |

+

]

|

| 46 |

+

}

|

| 47 |

+

},

|

| 48 |

+

"vision_config": {

|

| 49 |

+

"model_type": "glm4v",

|

| 50 |

+

"hidden_size": 1536,

|

| 51 |

+

"depth": 24,

|

| 52 |

+

"num_heads": 12,

|

| 53 |

+

"attention_bias": false,

|

| 54 |

+

"intermediate_size": 13696,

|

| 55 |

+

"hidden_act": "silu",

|

| 56 |

+

"hidden_dropout_prob": 0.0,

|

| 57 |

+

"initializer_range": 0.02,

|

| 58 |

+

"image_size": 336,

|

| 59 |

+

"patch_size": 14,

|

| 60 |

+

"out_hidden_size": 4096,

|

| 61 |

+

"rms_norm_eps": 1e-05,

|

| 62 |

+

"spatial_merge_size": 2,

|

| 63 |

+

"temporal_patch_size": 2

|

| 64 |

+

},

|

| 65 |

+

"quantization_config": {

|

| 66 |

+

"quant_method": "exl3",

|

| 67 |

+

"version": "0.0.14",

|

| 68 |

+

"bits": 6.0,

|

| 69 |

+

"head_bits": 6,

|

| 70 |

+

"calibration": {

|

| 71 |

+

"rows": 250,

|

| 72 |

+

"cols": 2048

|

| 73 |

+

},

|

| 74 |

+

"out_scales": "auto",

|

| 75 |

+

"codebook": "mcg"

|

| 76 |

+

}

|

| 77 |

+

}

|
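A few quantities that `config.json` implies but does not state can be derived with simple arithmetic. The sketch below copies the relevant values from the file. Treating the head dimension as `hidden_size / num_attention_heads` is an assumption, since the config has no explicit `head_dim` field, and the vision token count ignores any special tokens.

```python
# Values copied from config.json above.
text_config = {
    "hidden_size": 4096,
    "num_attention_heads": 32,
    "num_key_value_heads": 2,
    "partial_rotary_factor": 0.5,
    "mrope_section": [8, 12, 12],
}
vision_config = {"image_size": 336, "patch_size": 14, "spatial_merge_size": 2}

# Assumption: head_dim = hidden_size / num_attention_heads.
head_dim = text_config["hidden_size"] // text_config["num_attention_heads"]

# Grouped-query attention: how many query heads share one key/value head.
queries_per_kv = text_config["num_attention_heads"] // text_config["num_key_value_heads"]

# Only part of each head is rotated; the mrope sections split the rotary pairs.
rotary_dim = int(head_dim * text_config["partial_rotary_factor"])
mrope_pairs = sum(text_config["mrope_section"])

# Vision side: patches per side at the nominal image size, then 2x2 spatial merging.
patches_per_side = vision_config["image_size"] // vision_config["patch_size"]
merged_tokens = (patches_per_side // vision_config["spatial_merge_size"]) ** 2

print("head_dim:", head_dim)
print("query heads per KV head:", queries_per_kv)
print("rotary dims:", rotary_dim, "mrope pairs:", mrope_pairs)
print("merged vision tokens at 336x336:", merged_tokens)
```

Under these assumptions, the three `mrope_section` entries account for exactly half of the rotary dimensions, which is consistent with each entry counting a rotated pair.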

generation_config.json

ADDED

|

@@ -0,0 +1,15 @@

|

| 1 |

+

{

|

| 2 |

+

"_from_model_config": true,

|

| 3 |

+

"do_sample": true,

|

| 4 |

+

"eos_token_id": [

|

| 5 |

+

151329,

|

| 6 |

+

151336,

|

| 7 |

+

151338,

|

| 8 |

+

151348

|

| 9 |

+

],

|

| 10 |

+

"pad_token_id": 151329,

|

| 11 |

+

"top_p": 0.6,

|

| 12 |

+

"temperature": 0.8,

|

| 13 |

+

"top_k": 2,

|

| 14 |

+

"transformers_version": "4.57.1"

|

| 15 |

+

}

|
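The sampling settings in `generation_config.json` (`temperature` 0.8, `top_k` 2, `top_p` 0.6) are fairly restrictive when combined. The following is a simplified illustration of how such filters interact, not the `transformers` implementation; the function name `filter_distribution` and the example logits are made up for the sketch.

```python
import math


def filter_distribution(logits, temperature=0.8, top_k=2, top_p=0.6):
    """Apply temperature, then top-k, then top-p; return {token_index: probability}."""
    scaled = [x / temperature for x in logits]

    # Top-k: keep the k highest-scoring token indices, best first.
    ranked = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:top_k]

    # Softmax over the survivors (subtract the max for numerical stability).
    peak = max(scaled[i] for i in ranked)
    weights = {i: math.exp(scaled[i] - peak) for i in ranked}
    total = sum(weights.values())
    probs = {i: w / total for i, w in weights.items()}

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = {}, 0.0
    for i in ranked:
        kept[i] = probs[i]
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    norm = sum(kept.values())
    return {i: p / norm for i, p in kept.items()}


print(filter_distribution([2.0, 1.0, 0.0, -1.0]))  # one clear favourite
print(filter_distribution([1.0, 0.9, 0.0]))        # two close candidates
```

With `top_k` set to 2, at most two candidates ever survive, and `top_p` then drops the second one whenever the first already holds at least 60% of the mass.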

model-00001-of-00002.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:43e873d06fdfcfa22ba881af08582b75997ffec25b871c60bfa1906d113d672e

|

| 3 |

+

size 8582744420

|

model-00002-of-00002.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2fcf149d9a42ddf95d38115c870734a689cabd76d9fad48d818607be67d85815

|

| 3 |

+

size 1036243840

|
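The two `.safetensors` entries in this commit are Git LFS pointer files, not the weights themselves. As a small sketch, the pointer text shown above can be parsed to get the total download size. The helper `parse_lfs_pointer` is hypothetical, and the pointer strings are copied from the diff.

```python
def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    return {
        "version": fields["version"],
        "oid": fields["oid"],
        "size": int(fields["size"]),  # size of the real object, in bytes
    }


shard_1 = """version https://git-lfs.github.com/spec/v1
oid sha256:43e873d06fdfcfa22ba881af08582b75997ffec25b871c60bfa1906d113d672e
size 8582744420
"""
shard_2 = """version https://git-lfs.github.com/spec/v1
oid sha256:2fcf149d9a42ddf95d38115c870734a689cabd76d9fad48d818607be67d85815
size 1036243840
"""

pointers = [parse_lfs_pointer(shard_1), parse_lfs_pointer(shard_2)]
total_bytes = sum(p["size"] for p in pointers)
print(f"total: {total_bytes} bytes ({total_bytes / 2**30:.2f} GiB)")
```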

model.safetensors.index.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

notebook.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

preprocessor_config.json

ADDED

|

@@ -0,0 +1,11 @@

|

| 1 |

+

{

|

| 2 |

+

"size": {"shortest_edge": 12544, "longest_edge": 9633792},

|

| 3 |

+

"do_rescale": true,

|

| 4 |

+

"patch_size": 14,

|

| 5 |

+

"temporal_patch_size": 2,

|

| 6 |

+

"merge_size": 2,

|

| 7 |

+

"image_mean": [0.48145466, 0.4578275, 0.40821073],

|

| 8 |

+

"image_std": [0.26862954, 0.26130258, 0.27577711],

|

| 9 |

+

"image_processor_type": "Glm4vImageProcessor",

|

| 10 |

+

"processor_class": "Glm4vProcessor"

|

| 11 |

+

}

|
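The `size` values in `preprocessor_config.json` are far too large to be edge lengths. They look like pixel-area bounds, which is an assumption here and not something the diff states. Under that reading, and with `patch_size` 14 and `merge_size` 2, the sketch below estimates how many merged vision tokens an image produces. `merged_token_count` is a hypothetical helper, not a library function.

```python
# Values copied from preprocessor_config.json above.
PATCH_SIZE = 14
MERGE_SIZE = 2
MIN_PIXELS = 12544      # "shortest_edge" in the config, read here as a pixel area
MAX_PIXELS = 9633792    # "longest_edge" in the config, read here as a pixel area

# One merged token covers a (patch_size * merge_size)-pixel square.
CELL = PATCH_SIZE * MERGE_SIZE


def merged_token_count(height, width):
    """Tokens for an image whose sides are already multiples of CELL pixels."""
    if height % CELL or width % CELL:
        raise ValueError(f"height and width must be multiples of {CELL}")
    return (height // CELL) * (width // CELL)


print("smallest image (112x112):", merged_token_count(112, 112), "tokens")
print("upper bound on tokens:", MAX_PIXELS // (CELL * CELL))
```

The actual image processor also resizes inputs to fit these bounds, which this sketch does not attempt to reproduce.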

quantization_config.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer.json

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:76ebeac0d8bd7879ead7b43c16b44981f277e47225de2bd7de9ae1a6cc664a8c

|

| 3 |

+

size 19966496

|

tokenizer_config.json

ADDED

|

@@ -0,0 +1,218 @@

|

| 1 |

+

{

|

| 2 |

+

"added_tokens_decoder": {

|

| 3 |

+

"151329": {

|

| 4 |

+

"content": "<|endoftext|>",

|

| 5 |

+

"lstrip": false,

|

| 6 |

+

"normalized": false,

|

| 7 |

+

"rstrip": false,

|

| 8 |

+

"single_word": false,

|

| 9 |

+

"special": true

|

| 10 |

+

},

|

| 11 |

+

"151330": {

|

| 12 |

+

"content": "[MASK]",

|

| 13 |

+

"lstrip": false,

|

| 14 |

+

"normalized": false,

|

| 15 |

+

"rstrip": false,

|

| 16 |

+

"single_word": false,

|

| 17 |

+

"special": true

|

| 18 |

+

},

|

| 19 |

+

"151331": {

|

| 20 |

+

"content": "[gMASK]",

|

| 21 |

+

"lstrip": false,

|

| 22 |

+

"normalized": false,

|

| 23 |

+

"rstrip": false,

|

| 24 |

+

"single_word": false,

|

| 25 |

+

"special": true

|

| 26 |

+

},

|

| 27 |

+

"151332": {

|

| 28 |

+

"content": "[sMASK]",

|

| 29 |

+

"lstrip": false,

|

| 30 |

+

"normalized": false,

|

| 31 |

+

"rstrip": false,

|

| 32 |

+

"single_word": false,

|

| 33 |

+

"special": true

|

| 34 |

+

},

|

| 35 |

+

"151333": {

|

| 36 |

+

"content": "<sop>",

|

| 37 |

+

"lstrip": false,

|

| 38 |

+

"normalized": false,

|

| 39 |

+

"rstrip": false,

|

| 40 |

+

"single_word": false,

|

| 41 |

+

"special": true

|

| 42 |

+

},

|

| 43 |

+

"151334": {

|

| 44 |

+

"content": "<eop>",

|

| 45 |

+

"lstrip": false,

|

| 46 |

+

"normalized": false,

|

| 47 |

+

"rstrip": false,

|

| 48 |

+

"single_word": false,

|

| 49 |

+

"special": true

|

| 50 |

+

},

|

| 51 |

+

"151335": {

|

| 52 |

+

"content": "<|system|>",

|

| 53 |

+

"lstrip": false,

|

| 54 |

+

"normalized": false,

|

| 55 |

+

"rstrip": false,

|

| 56 |

+

"single_word": false,

|

| 57 |

+

"special": true

|

| 58 |

+

},

|

| 59 |

+

"151336": {

|

| 60 |

+

"content": "<|user|>",

|

| 61 |

+

"lstrip": false,

|

| 62 |

+

"normalized": false,

|

| 63 |

+

"rstrip": false,

|

| 64 |

+

"single_word": false,

|

| 65 |

+

"special": true

|

| 66 |

+

},

|

| 67 |

+

"151337": {

|

| 68 |

+

"content": "<|assistant|>",

|

| 69 |

+

"lstrip": false,

|

| 70 |

+

"normalized": false,

|

| 71 |

+

"rstrip": false,

|

| 72 |

+

"single_word": false,

|

| 73 |

+

"special": true

|

| 74 |

+

},

|

| 75 |

+

"151338": {

|

| 76 |

+

"content": "<|observation|>",

|

| 77 |

+

"lstrip": false,

|

| 78 |

+

"normalized": false,

|

| 79 |

+

"rstrip": false,

|

| 80 |

+

"single_word": false,

|

| 81 |

+

"special": true

|

| 82 |

+

},

|

| 83 |

+

"151339": {

|

| 84 |

+

"content": "<|begin_of_image|>",

|

| 85 |

+

"lstrip": false,

|

| 86 |

+

"normalized": false,

|

| 87 |

+

"rstrip": false,

|

| 88 |

+

"single_word": false,

|

| 89 |

+

"special": true

|

| 90 |

+

},

|

| 91 |

+

"151340": {

|

| 92 |

+

"content": "<|end_of_image|>",

|

| 93 |

+

"lstrip": false,

|

| 94 |

+

"normalized": false,

|

| 95 |

+

"rstrip": false,

|

| 96 |

+

"single_word": false,

|

| 97 |

+

"special": true

|

| 98 |

+

},

|

| 99 |

+

"151341": {

|

| 100 |

+

"content": "<|begin_of_video|>",

|

| 101 |

+

"lstrip": false,

|

| 102 |

+

"normalized": false,

|

| 103 |

+

"rstrip": false,

|

| 104 |

+

"single_word": false,

|

| 105 |

+

"special": true

|

| 106 |

+

},

|

| 107 |

+

"151342": {

|

| 108 |

+

"content": "<|end_of_video|>",

|

| 109 |

+

"lstrip": false,

|

| 110 |

+

"normalized": false,

|

| 111 |

+

"rstrip": false,

|

| 112 |

+

"single_word": false,

|

| 113 |

+

"special": true

|

| 114 |

+

},

|

| 115 |

+

"151343": {

|

| 116 |

+

"content": "<|image|>",

|

| 117 |

+

"lstrip": false,

|

| 118 |

+

"normalized": false,

|

| 119 |

+

"rstrip": false,

|

| 120 |

+

"single_word": false,

|

| 121 |

+

"special": true

|

| 122 |

+

},

|

| 123 |

+

"151344": {

|

| 124 |

+

"content": "<|video|>",

|

| 125 |

+

"lstrip": false,

|

| 126 |

+

"normalized": false,

|

| 127 |

+

"rstrip": false,

|

| 128 |

+

"single_word": false,

|

| 129 |

+

"special": true

|

| 130 |

+

},

|

| 131 |

+

"151345": {

|

| 132 |

+

"content": "<think>",

|

| 133 |

+

"lstrip": false,

|

| 134 |

+

"normalized": false,

|

| 135 |

+

"rstrip": false,

|

| 136 |

+

"single_word": false,

|

| 137 |

+

"special": false

|

| 138 |

+

},

|

| 139 |

+

"151346": {

|

| 140 |

+

"content": "</think>",

|

| 141 |

+

"lstrip": false,

|

| 142 |

+

"normalized": false,

|

| 143 |

+

"rstrip": false,

|

| 144 |

+

"single_word": false,

|

| 145 |

+

"special": false

|

| 146 |

+

},

|

| 147 |

+

"151347": {

|

| 148 |

+

"content": "<answer>",

|

| 149 |

+

"lstrip": false,

|

| 150 |

+

"normalized": false,

|

| 151 |

+

"rstrip": false,

|

| 152 |

+

"single_word": false,

|

| 153 |

+

"special": false

|

| 154 |

+

},

|

| 155 |

+

"151348": {

|

| 156 |

+

"content": "</answer>",

|

| 157 |

+

"lstrip": false,

|

| 158 |

+

"normalized": false,

|

| 159 |

+

"rstrip": false,

|

| 160 |

+

"single_word": false,

|

| 161 |

+

"special": false

|

| 162 |

+

},

|

| 163 |

+

"151349": {

|

| 164 |

+

"content": "<|begin_of_box|>",

|

| 165 |

+

"lstrip": false,

|

| 166 |

+

"normalized": false,

|

| 167 |

+

"rstrip": false,

|

| 168 |

+

"single_word": false,

|

| 169 |

+

"special": false

|

| 170 |

+

},

|

| 171 |

+

"151350": {

|

| 172 |

+

"content": "<|end_of_box|>",

|

| 173 |

+

"lstrip": false,

|

| 174 |

+

"normalized": false,

|

| 175 |

+

"rstrip": false,

|

| 176 |

+

"single_word": false,

|

| 177 |

+

"special": false

|

| 178 |

+

},

|

| 179 |

+

"151351": {

|

| 180 |

+

"content": "<|sep|>",

|

| 181 |

+

"lstrip": false,

|

| 182 |

+

"normalized": false,

|

| 183 |

+

"rstrip": false,

|

| 184 |

+

"single_word": false,

|

| 185 |

+

"special": false

|

| 186 |

+

}

|

| 187 |

+

},

|

| 188 |

+

"additional_special_tokens": [

|

| 189 |

+

"<|endoftext|>",

|

| 190 |

+

"[MASK]",

|

| 191 |

+

"[gMASK]",

|

| 192 |

+

"[sMASK]",

|

| 193 |

+

"<sop>",

|

| 194 |

+

"<eop>",

|

| 195 |

+

"<|system|>",

|

| 196 |

+

"<|user|>",

|

| 197 |

+

"<|assistant|>",

|

| 198 |

+

"<|observation|>",

|

| 199 |

+

"<|begin_of_image|>",

|

| 200 |

+

"<|end_of_image|>",

|

| 201 |

+

"<|begin_of_video|>",

|

| 202 |

+

"<|end_of_video|>",

|

| 203 |

+

"<|image|>",

|

| 204 |

+

"<|video|>"

|

| 205 |

+

],

|

| 206 |

+

"clean_up_tokenization_spaces": false,

|

| 207 |

+

"do_lower_case": false,

|

| 208 |

+

"eos_token": "<|endoftext|>",

|

| 209 |

+

"pad_token": "<|endoftext|>",

|

| 210 |

+

"model_input_names": [

|

| 211 |

+

"input_ids",

|

| 212 |

+

"attention_mask"

|

| 213 |

+

],

|

| 214 |

+

"model_max_length": 65536,

|

| 215 |

+

"padding_side": "left",

|

| 216 |

+

"remove_space": false,

|

| 217 |

+

"tokenizer_class": "PreTrainedTokenizer"

|

| 218 |

+

}

|
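`tokenizer_config.json` registers `<think>`, `</think>`, `<answer>`, `</answer>`, `<|begin_of_box|>`, and `<|end_of_box|>` as added tokens. Assuming the model wraps its reasoning and final reply in these markers (the diff does not show sample output, so the layout is an assumption), a decoded string could be split as in the sketch below. `split_reasoning` is a hypothetical helper.

```python
import re


def split_reasoning(output_text):
    """Split decoded text into reasoning and answer, assuming <think>/<answer> markers."""
    think = re.search(r"<think>(.*?)</think>", output_text, re.DOTALL)
    # The closing </answer> may be missing if generation stopped on it as an EOS token.
    answer = re.search(r"<answer>(.*?)(?:</answer>|$)", output_text, re.DOTALL)

    answer_text = answer.group(1) if answer else output_text
    # Drop the optional box markers around the final result.
    answer_text = answer_text.replace("<|begin_of_box|>", "").replace("<|end_of_box|>", "")

    return {
        "reasoning": think.group(1).strip() if think else "",
        "answer": answer_text.strip(),
    }


sample = (
    "<think>The image is an 8-bit grayscale sample.</think>"
    "<answer><|begin_of_box|>A grayscale test image<|end_of_box|></answer>"
)
print(split_reasoning(sample))
```

If the output contains none of the markers, the helper returns the whole text as the answer with empty reasoning.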

video_preprocessor_config.json

ADDED

|

@@ -0,0 +1,11 @@

|

| 1 |

+

{

|

| 2 |

+

"size": {"shortest_edge": 12544, "longest_edge": 47040000},

|

| 3 |

+

"do_rescale": true,

|

| 4 |

+

"patch_size": 14,

|

| 5 |

+

"temporal_patch_size": 2,

|

| 6 |

+

"merge_size": 2,

|

| 7 |

+

"image_mean": [0.48145466, 0.4578275, 0.40821073],

|

| 8 |

+

"image_std": [0.26862954, 0.26130258, 0.27577711],

|

| 9 |

+

"video_processor_type": "Glm4vVideoProcessor",

|

| 10 |

+

"processor_class": "Glm4vProcessor"

|

| 11 |

+

}

|