metadata

model_size: 494034560

required_memory: 1.84

metrics:

- GLUE_MRPC

license: apache-2.0

datasets:

- jtatman/python-code-dataset-500k

- Vezora/Tested-143k-Python-Alpaca

language:

- en

- es

base_model: Qwen/Qwen2-0.5B

library_name: adapter-transformers

tags:

- code

- python

- tiny

- open

- mini

- minitron

- tinytron

Uploaded model

- Developed by: Agnuxo

- License: apache-2.0

- Finetuned from model: Agnuxo/Tinytron-codegemma-2b

This model was fine-tuned using Unsloth and Hugging Face's TRL library.

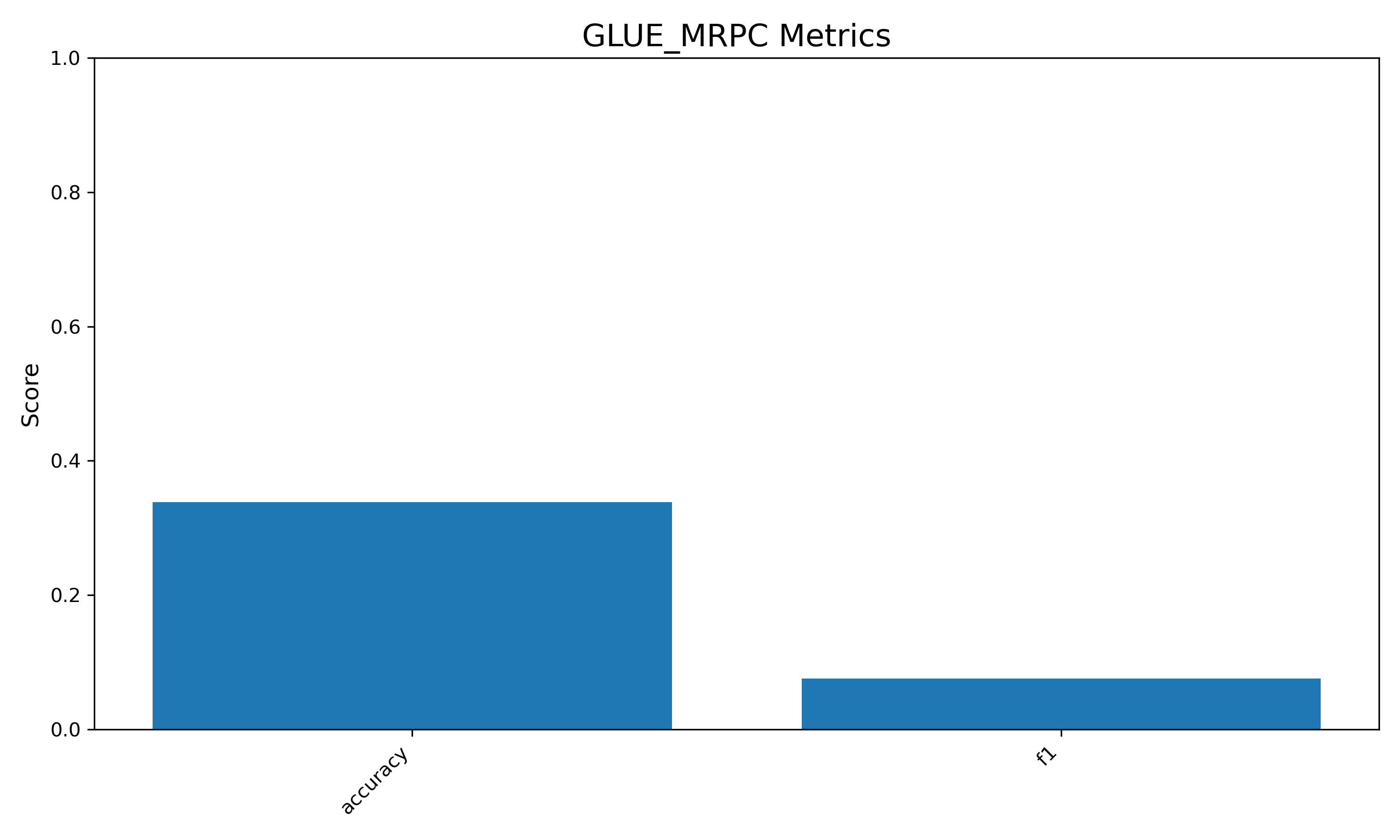

Benchmark Results

The fine-tuned model was evaluated on the following benchmark:

GLUE_MRPC

- Accuracy: 0.3382

- F1: 0.0753
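For readers unfamiliar with the two MRPC metrics reported above, here is a minimal sketch of how accuracy and F1 are typically computed for a binary paraphrase task. The function name `accuracy_and_f1` and the toy labels are hypothetical and chosen only for illustration; they are not the evaluation script or data used for this model.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Return (accuracy, F1) for binary labels; `positive` marks the paraphrase class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # F1 = 2*TP / (2*TP + FP + FN); defined as 0.0 when there are no positives at all.
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


# Hypothetical toy labels (1 = paraphrase, 0 = not a paraphrase), not real MRPC data.
y_true = [1, 1, 1, 0, 1, 0]
y_pred = [0, 0, 1, 0, 0, 1]

acc, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy={acc:.4f} f1={f1:.4f}")
```

Because F1 counts only the positive class, a model that rarely predicts "paraphrase" correctly will score a low F1 regardless of how it handles negative pairs.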

- Model Size: 494,034,560 parameters

- Required Memory: 1.84 GB
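The memory figure can be roughly reproduced from the parameter count. The sketch below assumes the weights are stored as 32-bit floats (4 bytes each) and that the figure is reported in binary gigabytes (GiB); neither assumption is stated in this card, but together they match the listed value. The helper `weight_memory_gib` and the `BYTES_PER_PARAM` table are hypothetical names used only for this example.

```python
PARAM_COUNT = 494_034_560  # parameter count listed in this card

# Assumed storage sizes per parameter; the card does not state which dtype it uses.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gib(param_count, dtype="float32"):
    """Memory needed for the weights alone, in GiB (2**30 bytes)."""
    return param_count * BYTES_PER_PARAM[dtype] / 2**30


fp32_gib = weight_memory_gib(PARAM_COUNT, "float32")
fp16_gib = weight_memory_gib(PARAM_COUNT, "float16")
print(f"float32: {fp32_gib:.2f} GiB")  # → float32: 1.84 GiB
print(f"float16: {fp16_gib:.2f} GiB")  # → float16: 0.92 GiB
```

This estimate covers the weights only. Activations, the key-value cache during generation, and framework overhead add to the actual footprint.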

For more details, visit my GitHub.

Thanks for your interest in this model!