---
license: mit
tags:
- video-classification
- I3D
- action-recognition
- anomaly-detection
datasets:
- kinetics-400
- ucf-crime
model-index:
- name: i3d_ucf_finetuned
  results:
  - task:
      type: video-classification
    dataset:
      name: UCF-Crime
      type: ucf-crime
    metrics:
    - name: Validation Accuracy
      type: accuracy
      value: 0.6667
---

I3D UCF Finetuned

Model Description

This is a finetuned I3D (Inflated 3D ConvNet) model for video classification, based on the i3d_r50 architecture from PyTorchVideo. I3D uses a ResNet-50 backbone whose 2D convolutions are inflated to 3D, so it captures both spatial and temporal features from video. The backbone was pretrained on the Kinetics-400 dataset, which contains roughly 306,000 short video clips across 400 human action classes (e.g., running, dancing, cooking).

The model was finetuned on the UCF-Crime dataset to classify videos into 8 specific categories: arrest, Explosion, Fight, normal, roadaccidents, shooting, Stealing, vandalism. During finetuning, the final fully connected layer was modified to output 8 classes, and a Dropout layer (p=0.3) was added to reduce overfitting. The finetuned weights are stored in i3d_ucf_finetuned.pth (109 MB) and can be downloaded from this repository.

Dataset

Pretraining Dataset

- Kinetics-400: A large-scale dataset of roughly 306,000 video clips covering 400 human action classes. It provides general-purpose features for video understanding, which makes it a common starting point for finetuning.

Finetuning Dataset

- UCF-Crime: A dataset for anomaly detection in videos, containing 1,900 videos (1,610 for training, 290 for testing). The model was finetuned on a subset of UCF-Crime to classify videos into 8 categories: arrest, Explosion, Fight, normal, roadaccidents, shooting, Stealing, vandalism.
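Because the classifier outputs an index rather than a name, the order of the 8 category names matters. The sketch below builds index/label lookups from the label list used in the usage example of this card. The names `LABELS`, `LABEL_TO_INDEX` and `INDEX_TO_LABEL` are hypothetical, and the assumption that this list order matches the indices used during finetuning comes from that usage example, not from a documented training script.

```python
# Label list copied from the usage example in this card.
# Assumption: list position == class index used during finetuning.
LABELS = [
    "arrest", "Explosion", "Fight", "normal",
    "roadaccidents", "shooting", "Stealing", "vandalism",
]

# Hypothetical helper lookups (not part of this repository).
LABEL_TO_INDEX = {name: idx for idx, name in enumerate(LABELS)}
INDEX_TO_LABEL = {idx: name for idx, name in enumerate(LABELS)}

# The names are case-sensitive: "Fight" is a key, "fight" is not.
print(LABEL_TO_INDEX["Fight"])
print(INDEX_TO_LABEL[3])
```

The list happens to be in case-insensitive alphabetical order, which is a quick way to sanity-check it if the labels are ever retyped by hand.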

Performance

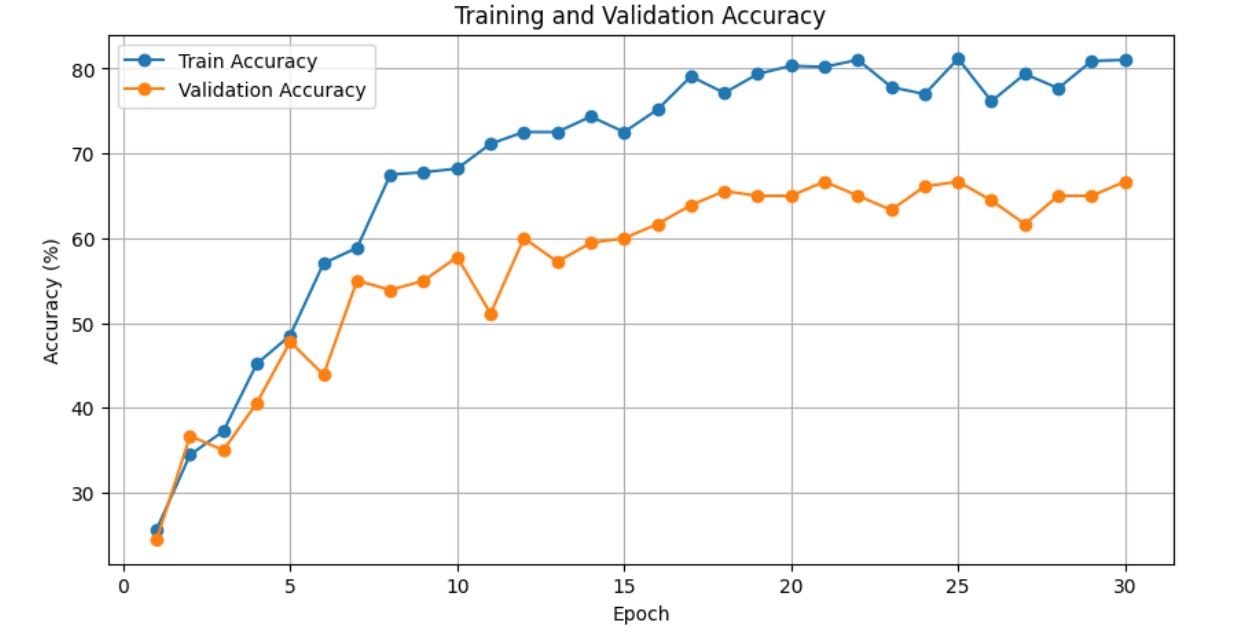

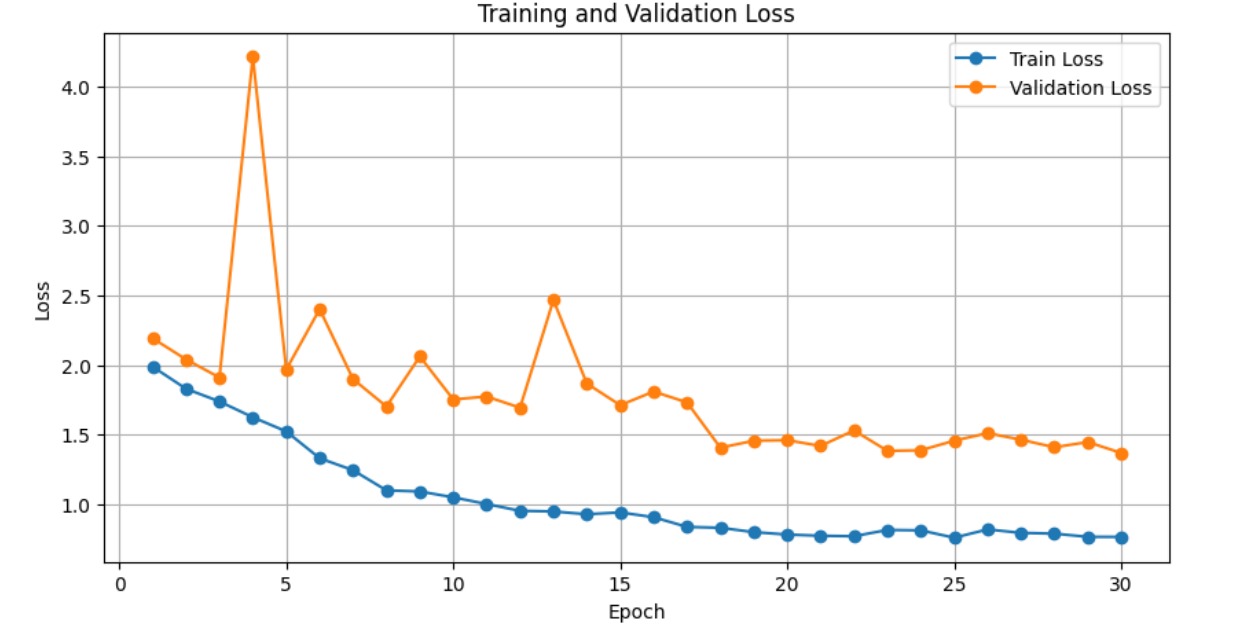

The model was finetuned for 30 epochs. Below are the training and validation performance plots:

Training and Validation Accuracy

- Best Validation Accuracy: ~66.67% (achieved after finetuning on UCF-Crime).

- Training Accuracy: Reached ~81.03%.

Training and Validation Loss

- The training loss decreases steadily, while the validation loss fluctuates. Together with the gap between training accuracy (~81%) and validation accuracy (~67%), this suggests some overfitting and room to improve generalization.

Usage

To use the model for video classification, you can load the weights from this repository using the following code:

import cv2
import numpy as np
import torch
import torch.nn as nn
from huggingface_hub import hf_hub_download

# Define the model
def load_i3d_ucf_finetuned(repo_id="Ahmeddawood0001/i3d_ucf_finetuned", filename="i3d_ucf_finetuned.pth"):
    class I3DClassifier(nn.Module):
        def __init__(self, num_classes):
            super().__init__()
            # I3D ResNet-50 backbone from PyTorchVideo (Kinetics-400 weights)
            self.i3d = torch.hub.load('facebookresearch/pytorchvideo', 'i3d_r50', pretrained=True)
            self.dropout = nn.Dropout(0.3)
            # Replace the final projection layer with an 8-class head
            self.i3d.blocks[6].proj = nn.Linear(2048, num_classes)

        def forward(self, x):
            x = self.i3d(x)
            x = self.dropout(x)  # no effect in eval mode
            return x

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = I3DClassifier(num_classes=8).to(device)
    weights_path = hf_hub_download(repo_id=repo_id, filename=filename)
    # map_location lets weights saved on a GPU load on a CPU-only machine
    model.load_state_dict(torch.load(weights_path, map_location=device))
    model.eval()
    return model

# Define frame extraction function
def extract_frames(video_path, max_frames=32, frame_size=(224, 224)):
    cap = cv2.VideoCapture(video_path)
    frames = []
    # Read up to max_frames frames from the start of the video
    while len(frames) < max_frames:
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        frame = cv2.resize(frame, frame_size)
        frames.append(frame)
    cap.release()
    # Fail clearly instead of raising IndexError on frames[-1] below
    if not frames:
        raise ValueError(f"Could not read any frames from {video_path}")
    # Pad short videos by repeating the last frame
    while len(frames) < max_frames:
        frames.append(frames[-1])
    frames = np.stack(frames)  # (T, H, W, C)
    frames = torch.from_numpy(frames).permute(0, 3, 1, 2).float() / 255.0  # (T, C, H, W)
    frames = frames.permute(1, 0, 2, 3)  # (C, T, H, W)
    return frames

# Define classification function
def classify_video(video_path, model, labels):
    # Use the device the model lives on rather than relying on a global
    device = next(model.parameters()).device
    frames = extract_frames(video_path)
    frames = frames.unsqueeze(0).to(device)  # add batch dimension: (1, C, T, H, W)
    with torch.no_grad():
        outputs = model(frames)
        probabilities = torch.softmax(outputs, dim=1)
    predicted_idx = torch.argmax(probabilities, dim=1).item()
    predicted_label = labels[predicted_idx]
    confidence = probabilities[0, predicted_idx].item()
    return predicted_label, confidence

# Example usage
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
labels = ["arrest", "Explosion", "Fight", "normal", "roadaccidents", "shooting", "Stealing", "vandalism"]
model = load_i3d_ucf_finetuned()
video_path = "path/to/your/video.mp4"  # Replace with your video path
predicted_label, confidence = classify_video(video_path, model, labels)
print(f"Video: {video_path}")
print(f"Predicted Label: {predicted_label}")
print(f"Confidence: {confidence:.4f}")
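
The `extract_frames` function above reads only the first `max_frames` frames, so for a long clip the model sees just the opening moments. One possible alternative, shown here only as a sketch, is to spread the sampled frames evenly over the whole clip. The helper name `uniform_frame_indices` is hypothetical and not part of this repository. It is also an assumption that evenly spaced sampling suits this model: the sampling used during finetuning is not documented here, so predictions may change.

```python
def uniform_frame_indices(total_frames, num_samples=32):
    """Return num_samples frame indices spread evenly over a clip.

    Hypothetical helper (not part of this repository). Indices run from 0
    to total_frames - 1. Clips shorter than num_samples get repeated
    indices, which acts like padding by frame repetition.
    """
    if total_frames <= 0:
        raise ValueError("total_frames must be positive")
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    if num_samples == 1:
        return [0]
    # Spacing between consecutive samples, in (possibly fractional) frames
    step = (total_frames - 1) / (num_samples - 1)
    return [round(i * step) for i in range(num_samples)]


print(uniform_frame_indices(10, 4))
```

With OpenCV, the total frame count can be read with `cap.get(cv2.CAP_PROP_FRAME_COUNT)` and a specific frame requested with `cap.set(cv2.CAP_PROP_POS_FRAMES, idx)` before `cap.read()`. The reported count can be approximate for some containers, so a failed read should still be handled.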