Text Difficulty Regression Model

A regression model that predicts a difficulty score for an input text. Predicted scores can be mapped to CEFR levels.

Model Details

Frozen BERT layers (base model: TurkuNLP/bert-base-finnish-uncased-v1) with a regression head on top. Trained on a mix of manually annotated datasets (more details on the data will follow) and data translated from Russian into Finnish.

How to Get Started with the Model

Use the code below to get started with the model.

from torch import nn
from transformers import BertModel, BertPreTrainedModel


class CustomModel(BertPreTrainedModel):
    """BERT encoder with a two-layer regression head that outputs a single score."""

    def __init__(self, config, load_path=None, use_auth_token: str = None):
        super().__init__(config)
        self.bert = BertModel(config)
        # Regression head: hidden_size -> 128 -> 1
        self.pre_classifier = nn.Linear(config.hidden_size, 128)
        self.dropout = nn.Dropout(0.2)
        self.classifier = nn.Linear(128, 1)
        self.activation = nn.ReLU()

        # Kaiming initialization for the head weights, zeros for the biases
        nn.init.kaiming_uniform_(self.pre_classifier.weight, nonlinearity='relu')
        nn.init.kaiming_uniform_(self.classifier.weight, nonlinearity='relu')
        if self.pre_classifier.bias is not None:
            nn.init.constant_(self.pre_classifier.bias, 0)
        if self.classifier.bias is not None:
            nn.init.constant_(self.classifier.bias, 0)

    def forward(
        self,
        input_ids,
        labels=None,
        attention_mask=None,
        token_type_ids=None,
        position_ids=None,
    ):
        outputs = self.bert(
            input_ids,
            attention_mask=attention_mask,
            token_type_ids=token_type_ids,
            position_ids=position_ids,
        )

        # Pooled [CLS] representation -> linear -> ReLU -> dropout -> score
        pooled_output = outputs.pooler_output
        pooled_output = self.pre_classifier(pooled_output)
        pooled_output = self.activation(pooled_output)
        pooled_output = self.dropout(pooled_output)
        logits = self.classifier(pooled_output)

        # Return (loss, logits); the loss is None when no labels are given
        if labels is not None:
            loss_fn = nn.MSELoss()
            loss = loss_fn(logits.view(-1), labels.view(-1))
            return loss, logits
        else:
            return None, logits

# Inference
import torch
from safetensors.torch import load_file
from transformers import AutoConfig, AutoTokenizer

# `model_path` (a local directory containing the model files) and `text`
# (the input string) must be defined before running this snippet.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Load the tokenizer and config of the fine-tuned model
tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
config = AutoConfig.from_pretrained(model_path, trust_remote_code=True)
config.num_labels = 1

# Load the custom model and its fine-tuned weights
model = CustomModel(config)
state_dict = load_file(f'{model_path}/model.safetensors')
model.load_state_dict(state_dict)
model.to(device)
model.eval()

inputs = tokenizer(text, return_tensors="pt", padding=True, truncation=True)
inputs = {key: value.to(device) for key, value in inputs.items()}

with torch.no_grad():
    _, logits = model(
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        token_type_ids=inputs["token_type_ids"],
    )

To map the predicted score to a CEFR level, use:

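reg2cl2 = {
    "0.0": "A1", "1.0": "A1", "1.5": "A1-A2", "2.0": "A2",
    "2.5": "A2-B1", "3.0": "B1", "3.5": "B1-B2", "4.0": "B2",
    "4.5": "B2-C1", "5.0": "C1", "5.5": "C1-C2", "6.0": "C2"
}

score = logits.item()
# Round to the nearest whole level and clamp to the range covered by the
# mapping, so that out-of-range predictions do not raise a KeyError.
level = min(max(round(score), 0), 6)
print("Predicted output (logits):", score, reg2cl2[str(float(level))])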
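The mapping contains half-step keys such as "2.5", which rounding to a whole number never reaches. If the intermediate levels are wanted, the score can be rounded to the nearest 0.5 instead. The following is a minimal sketch under that assumption; the helper name `score_to_cefr` is hypothetical, and treating every score below 1.0 as A1 is an assumption based on the "0.0" and "1.0" entries both mapping to A1.

```python
# Same mapping as in the model card
reg2cl2 = {
    "0.0": "A1", "1.0": "A1", "1.5": "A1-A2", "2.0": "A2",
    "2.5": "A2-B1", "3.0": "B1", "3.5": "B1-B2", "4.0": "B2",
    "4.5": "B2-C1", "5.0": "C1", "5.5": "C1-C2", "6.0": "C2"
}


def score_to_cefr(score: float) -> str:
    """Map a raw regression score to a CEFR label in half-level steps.

    Hypothetical helper: rounds to the nearest 0.5 and clamps the result
    to [1.0, 6.0], so scores below 1.0 become A1 and scores above 6.0
    become C2.
    """
    half_step = round(score * 2) / 2
    half_step = min(max(half_step, 1.0), 6.0)
    return reg2cl2[str(half_step)]


for example_score in (-0.4, 2.4, 3.1, 7.3):
    print(example_score, score_to_cefr(example_score))
```

With a model output, the call would be `score_to_cefr(logits.item())`.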

Training Details

Training Hyperparameters

- num_warmup_steps: int(0.1 * num_training_steps)

- num_train_epochs: 24.0

- batch_size: 16

- weight_decay: 0.01

- adam_beta1: 0.9

- adam_beta2: 0.99

- adam_epsilon: 1e-8

- max_grad_norm: 1.0

- fp16: True

- early_stopping: True
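The warmup length in the list above depends on the total number of training steps, which the card does not state. The sketch below shows how the two values relate for a given dataset size. The dataset size of 1000 examples is purely hypothetical, one optimizer step per batch is assumed (no gradient accumulation), and the linear warmup followed by linear decay is an assumption, since the card does not name the scheduler.

```python
import math


def count_training_steps(num_examples: int, batch_size: int = 16, num_epochs: int = 24) -> int:
    """Total optimizer steps, assuming one step per batch."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * num_epochs


def lr_multiplier(step: int, num_warmup_steps: int, num_training_steps: int) -> float:
    """Factor applied to the base learning rate at a given step.

    Assumed schedule: linear warmup from 0 to 1, then linear decay to 0.
    """
    if step < num_warmup_steps:
        return step / max(1, num_warmup_steps)
    remaining = num_training_steps - step
    return max(0.0, remaining / max(1, num_training_steps - num_warmup_steps))


# Hypothetical dataset size, for illustration only
num_training_steps = count_training_steps(num_examples=1000)
num_warmup_steps = int(0.1 * num_training_steps)

print("training steps:", num_training_steps)
print("warmup steps:", num_warmup_steps)
print("multiplier halfway through warmup:", lr_multiplier(num_warmup_steps // 2, num_warmup_steps, num_training_steps))
```

Because early stopping is enabled, a run may end before `num_training_steps` is reached, in which case the decay phase is cut short.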

Learning rates

# Define separate learning rates
lr_bert = 2e-5        # Learning rate for BERT layers
lr_classifier = 1e-3  # Learning rate for the classifier

optimizer = torch.optim.AdamW([
    {"params": model.bert.parameters(), "lr": lr_bert},  # BERT layers
    {"params": model.classifier.parameters(), "lr": lr_classifier},
    {"params": model.pre_classifier.parameters(), "lr": lr_classifier},
])

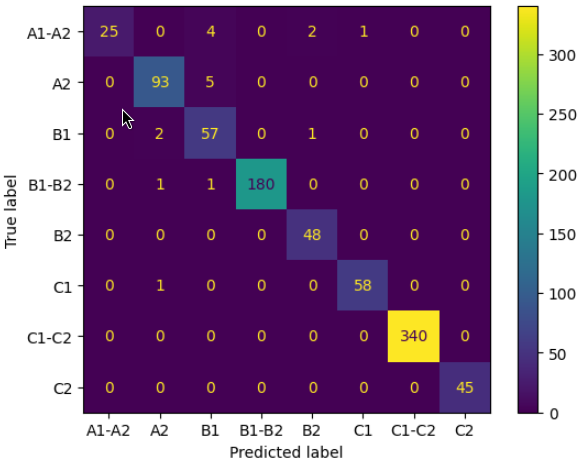

Evaluation on test set

Citation

Please refer to this repo when using the model.


Model tree for Askinkaty/FinBERT_text_difficulty

Base model: TurkuNLP/bert-base-finnish-uncased-v1