AntiCheatPT_256

This model is the best-performing transformer-based model from the thesis "AntiCheatPT: A Transformer-Based Approach to Cheat Detection in Competitive Computer Games" by Mille Mei Zhen Loo and Gert Luzkov.

The thesis can be found here

Code: Here

Results

| Metric | Value |
|---|---|
| Accuracy | 0.8917 |
| ROC AUC | 0.9336 |
| Precision | 0.8513 |
| Recall | 0.6313 |
| Specificity | 0.9678 |
| F1 | 0.7250 |
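
The F1 value in the table is the harmonic mean of the reported precision and recall. As a quick sanity check, the minimal sketch below recomputes it from those two rounded values; the function name `f1_score` is chosen here for illustration and is not taken from the thesis code.

```python
# Reported test-set values, copied from the results table.
precision = 0.8513
recall = 0.6313


def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


f1 = f1_score(precision, recall)
print(f"F1 = {f1:.4f}")  # F1 = 0.7250
```

The gap between precision and recall means the model flags fewer cheaters than exist in the test data, but most of the players it does flag are cheaters.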

Model architecture

| Component | Value |
|---|---|
| Context window size | 256 |
| Transformer layers | 4 |
| Attention heads | 1 |
| Transformer feedforward dimension | 176 |
| Loss function | Binary cross-entropy (`BCEWithLogitsLoss`) |
| Optimiser | AdamW (learning rate = 1e-4) |
| Scheduler | StepLR (gamma = 0.5, step size = 10) |
| Batch size | 128 |
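
To make the scheduler row concrete, the sketch below reproduces the step-decay rule that PyTorch's `StepLR` applies: the learning rate is multiplied by gamma once every `step_size` scheduler steps. It is written in plain Python rather than with PyTorch, and it assumes the scheduler is stepped once per epoch, which the model card does not state. The helper name `step_lr` is hypothetical.

```python
# Values taken from the architecture table.
INITIAL_LR = 1e-4  # AdamW learning rate
GAMMA = 0.5        # StepLR multiplicative decay factor
STEP_SIZE = 10     # StepLR period


def step_lr(epoch: int,
            initial_lr: float = INITIAL_LR,
            gamma: float = GAMMA,
            step_size: int = STEP_SIZE) -> float:
    """Learning rate in effect at a given epoch under step decay.

    Assumes one scheduler step per epoch (an assumption, not confirmed
    by the model card).
    """
    return initial_lr * gamma ** (epoch // step_size)


# Print the rate at a few epochs around the decay boundaries.
for epoch in (0, 9, 10, 19, 20, 30):
    print(f"epoch {epoch:2d}: lr = {step_lr(epoch):.2e}")
```

Under that assumption the rate stays at 1e-4 for epochs 0 to 9, drops to 5e-5 at epoch 10, and halves again every 10 epochs after that.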

Data

The input data used for this model was the Context_window_256 dataset based on the CS2CD dataset.

Model testing

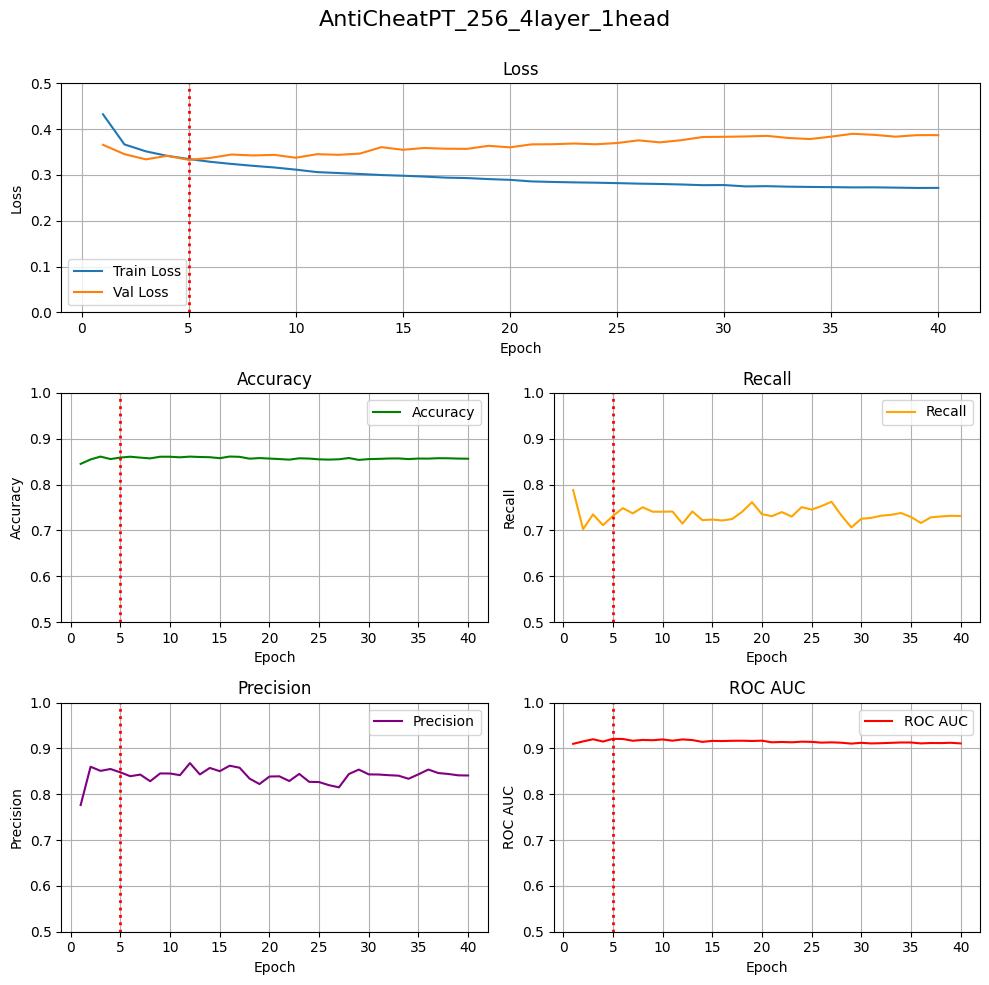

Various validation metrics of training can be seen below:

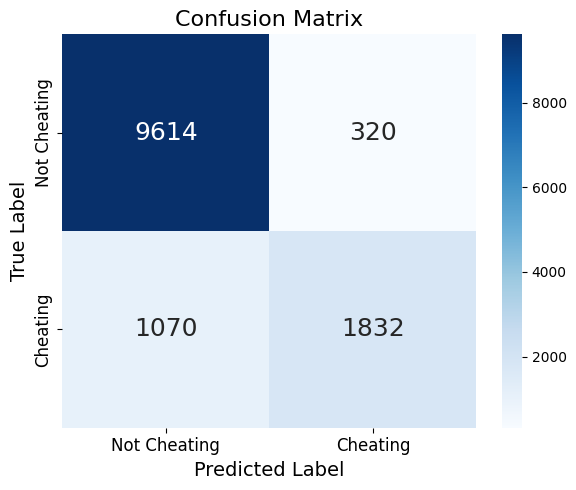

The model confusion matrix on test data can be seen below:

Usage notes

- The dataset is UTF-8 encoded.

- Researchers should cite this dataset appropriately in publications.

Application

- Cheat detection

Acknowledgements

A big heartfelt thanks to Paolo Burelli for supervising the project.
