Datarus-R1-14B-preview

🚀 Overview

Datarus-R1-14B-Preview is a 14B-parameter open-weights language model fine-tuned from Qwen2.5-14B-Instruct, designed to act as a virtual data analyst and graduate-level problem solver. Unlike traditional models trained on isolated Q&A pairs, Datarus learns from complete analytical trajectories—including reasoning steps, code execution, error traces, self-corrections, and final conclusions—all captured in a ReAct-style notebook format.

Key Highlights

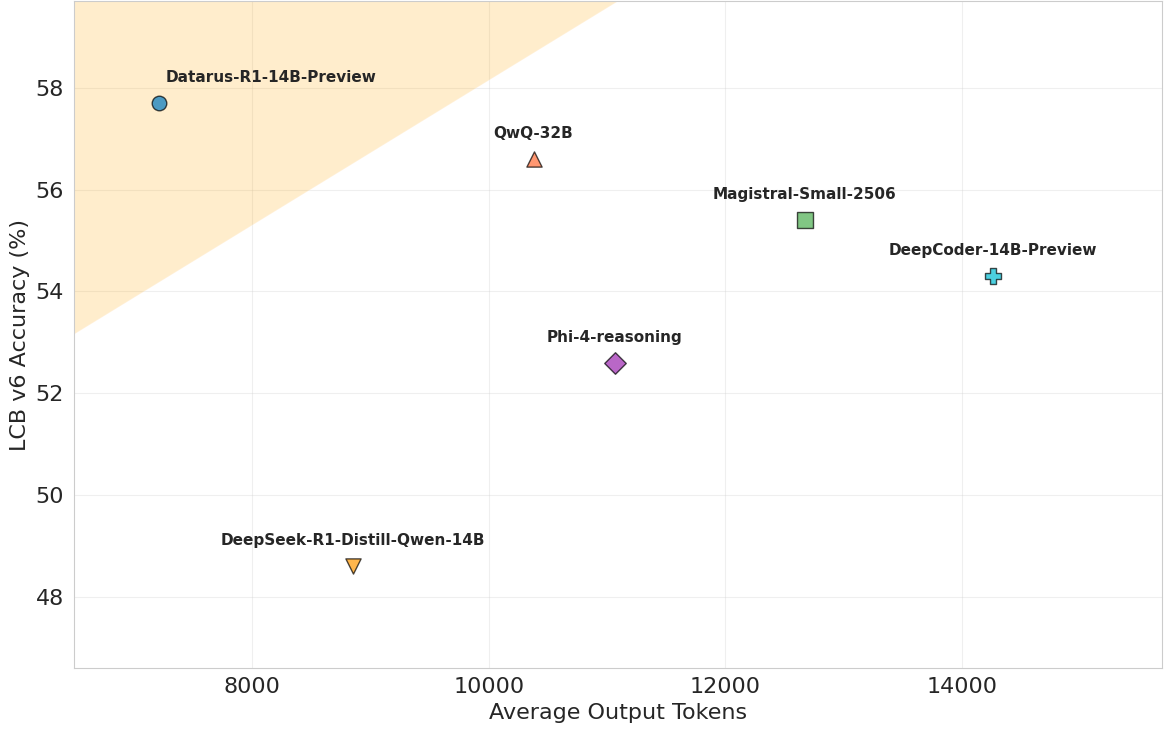

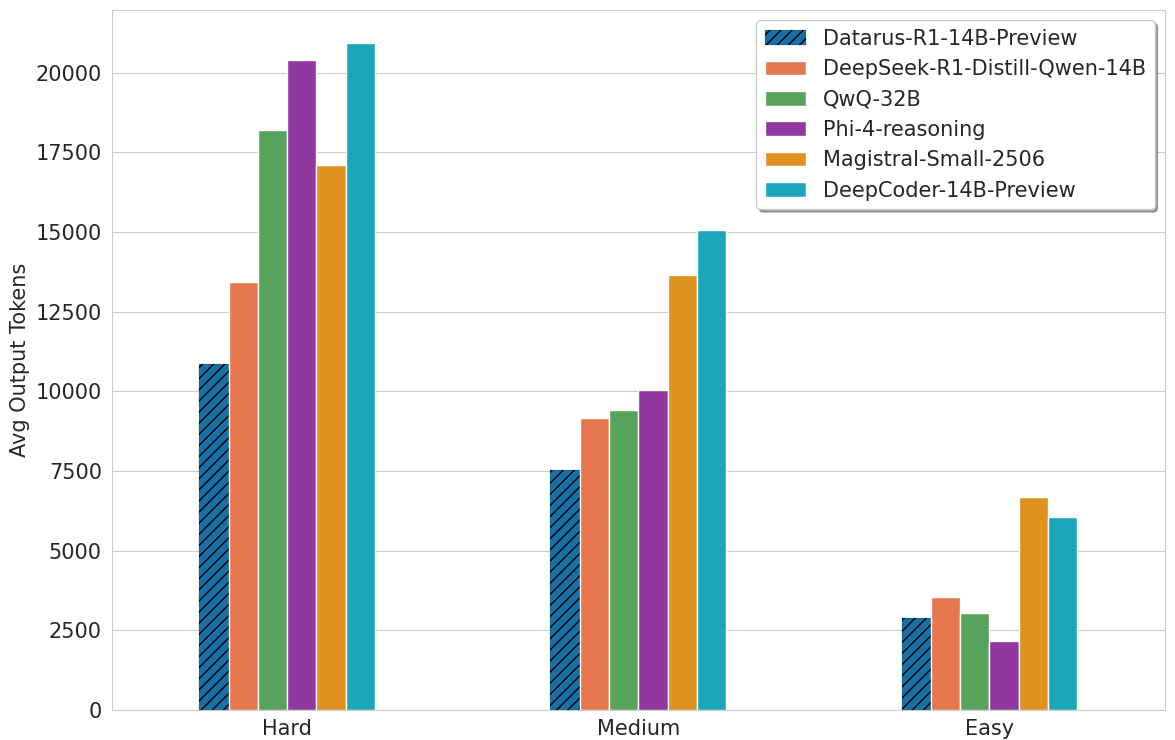

- 🎯 State-of-the-art efficiency: Surpasses similar-sized models and competes with 32B+ models while using 18-49% fewer tokens

- 🔄 Dual reasoning interfaces: Supports both Agentic (ReAct) mode for interactive analysis and Reflection (CoT) mode for concise documentation

- 📊 Superior performance: Achieves up to 30% higher accuracy on AIME 2024/2025 and LiveCodeBench

- 💡 "AHA-moment" pattern: Exhibits efficient hypothesis refinement in 1-2 iterations, avoiding circular reasoning loops

🔗 Quick Links

- 🌐 Website: https://datarus.ai

- 💬 Try the Demo: https://chat.datarus.ai

- 🛠️ Jupyter Agent: GitHub Repository

- 📄 Paper: Datarus-R1: An Adaptive Multi-Step Reasoning LLM

📊 Performance

Benchmark Results

| Benchmark | Datarus-R1-14B-Preview | QwQ-32B | Phi-4-reasoning | DeepSeek-R1-Distill-14B |
|---|---|---|---|---|
| LiveCodeBench v6 | 57.7 | 56.6 | 52.6 | 48.6 |
| AIME 2024 | 70.1 | 76.2 | 74.6* | - |
| AIME 2025 | 66.2 | 66.2 | 63.1* | - |
| GPQA Diamond | 62.1 | 60.1 | 55.0 | 58.6 |

*Reported values from official papers

Token Efficiency and Performance

🎯 Model Card

Model Details

- Model Type: Language Model for Reasoning and Data Analysis

- Parameters: 14.8B

- Training Data: 144,000 synthetic analytical trajectories across finance, medicine, numerical analysis, and other quantitative domains, plus a curated collection of reasoning datasets

- Language: English

- License: Apache 2.0

Intended Use

Primary Use Cases

- Data Analysis: Automated data exploration, statistical analysis, and visualization

- Mathematical Problem Solving: Graduate-level mathematics including AIME-level problems

- Code Generation: Creating analytical scripts and solving programming challenges

- Scientific Reasoning: Complex problem-solving in physics, chemistry, and other sciences

- Interactive Notebooks: Building complete analysis notebooks with iterative refinement

Dual Mode Usage

Agentic Mode (for interactive analysis)

- Use `<step>`, `<thought>`, `<action>`, `<action_input>`, and `<observation>` tags

- Enables iterative code execution and refinement

- Best for data analysis, simulations, and exploratory tasks
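
The tags listed above can be pulled out of a model response with a small parser. The following is a minimal sketch, not the project's own tooling: it assumes each `<step>` wraps the other four tags and that every tag has a matching closing tag, which this model card does not spell out. The names `Step` and `parse_steps`, and the `python` action value in the sample, are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Assumed layout: each <step> wraps the other four tags. The model card only
# lists the tag names, so the nesting and closing tags are an assumption.
STEP_RE = re.compile(r"<step>(.*?)</step>", re.DOTALL)
FIELDS = ("thought", "action", "action_input", "observation")


@dataclass
class Step:
    thought: Optional[str] = None
    action: Optional[str] = None
    action_input: Optional[str] = None
    observation: Optional[str] = None


def _field(block: str, name: str) -> Optional[str]:
    """Return the stripped text inside <name>...</name>, or None if absent."""
    match = re.search(rf"<{name}>(.*?)</{name}>", block, re.DOTALL)
    return match.group(1).strip() if match else None


def parse_steps(text: str) -> List[Step]:
    """Split a response into steps and extract the four fields of each."""
    return [
        Step(**{name: _field(block, name) for name in FIELDS})
        for block in STEP_RE.findall(text)
    ]


# Hypothetical response text, written by hand for illustration.
sample = (
    "<step>"
    "<thought>Check the column means first.</thought>"
    "<action>python</action>"
    "<action_input>df.mean()</action_input>"
    "<observation>a    2.0</observation>"
    "</step>"
)
steps = parse_steps(sample)
print(steps[0].action_input)  # df.mean()
```

A caller could run the contents of `action_input` in a sandboxed kernel and pass the result back as the next `<observation>`. That loop is left out here because the execution backend is not described in this card.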

Reflection Mode (for documentation)

- Use `<think>` and `<answer>` tags

- Produces compact, self-contained reasoning chains

- Best for mathematical proofs, explanations, and reports
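
Downstream code often needs the final answer separately from the reasoning. The sketch below separates the two, assuming the response contains one `<think>...</think>` pair followed by one `<answer>...</answer>` pair with closing tags. The helper name `split_reflection` and the sample string are hypothetical.

```python
import re
from typing import Optional, Tuple


def split_reflection(text: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (reasoning, answer); either is None if its tag pair is absent."""

    def grab(tag: str) -> Optional[str]:
        # Non-greedy match so only the first tag pair is captured.
        match = re.search(rf"<{tag}>(.*?)</{tag}>", text, re.DOTALL)
        return match.group(1).strip() if match else None

    return grab("think"), grab("answer")


# Hypothetical response text, written by hand for illustration.
sample = "<think>2 + 2 is 4, and 4 * 3 is 12.</think><answer>12</answer>"
reasoning, answer = split_reflection(sample)
print(answer)  # 12
```

Returning `None` for a missing tag pair, rather than raising, lets a caller detect a truncated generation and retry.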

📚 Citation

@article{benchaliah2025datarus,
  title={Datarus-R1: An Adaptive Multi-Step Reasoning LLM for Automated Data Analysis},
  author={Ben Chaliah, Ayoub and Dellagi, Hela},
  journal={arXiv preprint arXiv:2508.13382},
  year={2025}
}

🤝 Contributing

We welcome contributions! Please see our GitHub repository for:

- Bug reports and feature requests

- Pull requests

- Discussion forums

📄 License

This model is released under the Apache 2.0 License.

🙏 Acknowledgments

We thank the Qwen team for the excellent base model and the open-source community for their valuable contributions.

📧 Contact

- Email: [email protected], [email protected]

- Website: https://datarus.ai

- Demo: https://chat.datarus.ai

⭐ Support

If you find this model and the agent pipeline useful, please consider liking or starring the project. Your support helps us continue improving it.

Found a bug or have a feature request? Please open an issue on GitHub.

Made with ❤️ by the Datarus Team from Paris
