Model description

ThermalGuard-v1_1 is a specialized language model based on Qwen3-4B, fine-tuned with LoRA (Low-Rank Adaptation) and fully merged for direct deployment. It excels in materials science domains, particularly:

- Thermal Barrier Coatings (TBCs)
- High-Entropy Alloys (HEAs)
- High-Temperature Oxidation

This model has been enhanced with technical knowledge about advanced materials for high-temperature applications, including composition design, microstructure characterization, performance evaluation, and failure mechanisms.

Model Variants

We provide the following versions for different deployment scenarios:

- Full Precision Model (ThermalGuard-v1_1)
  - Original merged model (fp16)
- Quantized Version (ThermalGuard-v1_1-q8.gguf)
  - 8-bit quantized GGUF format
  - Reduced memory footprint (ideal for consumer hardware)
  - Intended for LM Studio and other applications that load GGUF files
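The memory difference between the two variants can be estimated with simple arithmetic. The sketch below is a rough estimate of weight storage only (it ignores the KV cache and activations). It assumes a nominal 4 billion parameters, taken from the "4B" in the base model name, and assumes the q8 file uses a Q8_0-style layout, which stores each block of 32 weights as 32 int8 values plus one fp16 scale (34 bytes). Neither assumption is stated in this card.

```python
# Rough weight-storage estimate for the two variants.
# Assumptions (not stated in this model card): a nominal 4e9 parameters,
# and a Q8_0-style layout of 34 bytes per block of 32 weights.

NOMINAL_PARAMS = 4_000_000_000


def fp16_weight_bytes(n_params: int) -> int:
    """fp16 stores each weight in 2 bytes."""
    return n_params * 2


def q8_0_weight_bytes(n_params: int) -> int:
    """Q8_0 stores 32 int8 weights plus one fp16 scale per block (34 bytes)."""
    n_blocks = -(-n_params // 32)  # ceiling division
    return n_blocks * 34


def to_gb(n_bytes: int) -> float:
    """Convert bytes to decimal gigabytes."""
    return n_bytes / 1e9


if __name__ == "__main__":
    print(f"fp16: ~{to_gb(fp16_weight_bytes(NOMINAL_PARAMS)):.2f} GB")
    print(f"q8:   ~{to_gb(q8_0_weight_bytes(NOMINAL_PARAMS)):.2f} GB")
```

Under these assumptions the quantized file needs a little over half the weight storage of the fp16 model; the real file sizes depend on the exact parameter count and on which tensors were quantized.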

Intended uses & limitations

Intended Uses

- Technical documentation generation for high-temperature materials
- Research assistance in materials science
- Answering technical questions about TBCs, HEAs, and oxidation behavior
- Literature review support for materials engineering
- Educational tool for materials science students

Limitations

- The model's knowledge extends only to its training data cutoff, so it may not capture very recent advances in the field.
- It should not be used for critical material design decisions without independent verification.
- Performance may vary on highly specialized sub-topics.
- The model is optimized only for Chinese (中文).

Training data

The model was fine-tuned on a combination of:

- Technical literature about:
  - Thermal barrier coatings (YSZ, gadolinium zirconate, etc.)
  - High-entropy alloy systems (CoCrFeMnNi, refractory HEAs, etc.)
  - High-temperature oxidation mechanisms
- Curated datasets:
  - ShareGPT conversations with materials science focus

| Metric                | Value  |
|-----------------------|--------|
| Total Conversations   | 18,284 |
| Avg. Turns per Conv.  | 2.00   |
| Max Turns             | 2      |
| Avg. Chars per Turn   | 191.98 |
| User Turns            | 18,284 |
| Assistant Turns       | 18,284 |

Total input tokens: 17,473,536

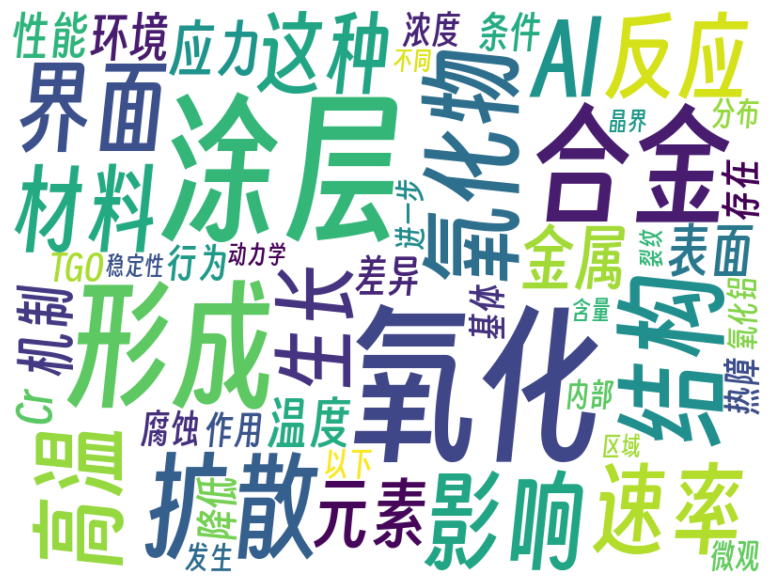

- Keyword cloud
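The metrics in the table above can be recomputed from ShareGPT-style records. The sketch below assumes the common ShareGPT layout, in which each record holds a `conversations` list of `{"from": "human" | "gpt", "value": ...}` entries. The field names used by this particular dataset are not documented here, and the two sample records are invented purely for illustration.

```python
from statistics import mean

# Hypothetical sample in the common ShareGPT layout; the field names
# ("conversations", "from", "value") are assumed, not confirmed by this card.
sample = [
    {"conversations": [
        {"from": "human", "value": "What is YSZ?"},
        {"from": "gpt", "value": "Yttria-stabilized zirconia."},
    ]},
    {"conversations": [
        {"from": "human", "value": "Name one HEA."},
        {"from": "gpt", "value": "CoCrFeMnNi."},
    ]},
]


def conversation_stats(records):
    """Compute the same metrics as the table above for a list of records."""
    turns_per_conv = [len(r["conversations"]) for r in records]
    all_turns = [t for r in records for t in r["conversations"]]
    return {
        "total_conversations": len(records),
        "avg_turns_per_conv": mean(turns_per_conv),
        "max_turns": max(turns_per_conv),
        "avg_chars_per_turn": mean(len(t["value"]) for t in all_turns),
        "user_turns": sum(t["from"] == "human" for t in all_turns),
        "assistant_turns": sum(t["from"] == "gpt" for t in all_turns),
    }


if __name__ == "__main__":
    for name, value in conversation_stats(sample).items():
        print(f"{name}: {value}")
```

An average and maximum of exactly 2 turns, as reported in the table, means every conversation is a single question followed by a single answer.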

Training procedure

Training hyperparameters

- Base Model: Qwen3-4B
- Fine-tuning Method: LoRA (Low-Rank Adaptation), later fully merged
- Learning Rate: 0.0001
- Batch Size: 2 (effective size 4 with gradient accumulation)
- Epochs: 3
- Optimizer: AdamW (β₁=0.9, β₂=0.999, ε=1e-08)
- Scheduler: Cosine learning rate schedule
- Mixed Precision: Native AMP
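To make the batch-size and scheduler settings concrete, the sketch below derives the gradient accumulation factor and an approximate optimizer step count, then evaluates a plain cosine decay at a few points. It assumes a single device, that all 18,284 conversations were used for training, and that there was no warmup phase; none of these is stated in this card, so the step counts are approximate.

```python
import math

# Values taken from the hyperparameter list and the dataset table.
NUM_CONVERSATIONS = 18_284
PER_DEVICE_BATCH = 2
EFFECTIVE_BATCH = 4
EPOCHS = 3
PEAK_LR = 1e-4

# Assumptions: a single device, every conversation used for training,
# and no warmup phase (none of these is stated in the card).
GRAD_ACCUM_STEPS = EFFECTIVE_BATCH // PER_DEVICE_BATCH
STEPS_PER_EPOCH = math.ceil(NUM_CONVERSATIONS / EFFECTIVE_BATCH)
TOTAL_STEPS = STEPS_PER_EPOCH * EPOCHS


def cosine_lr(step: int, total_steps: int = TOTAL_STEPS, peak: float = PEAK_LR) -> float:
    """Cosine decay from `peak` at step 0 to 0 at `total_steps`."""
    progress = min(step, total_steps) / total_steps
    return 0.5 * peak * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    print("gradient accumulation steps:", GRAD_ACCUM_STEPS)
    print("optimizer steps per epoch:", STEPS_PER_EPOCH)
    print("total optimizer steps:", TOTAL_STEPS)
    for step in (0, TOTAL_STEPS // 2, TOTAL_STEPS):
        print(step, f"{cosine_lr(step):.2e}")
```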

Framework versions

- PEFT 0.15.2

- Transformers 4.52.4

- PyTorch 2.5.1+cu124

- Datasets 3.6.0

- Tokenizers 0.21.1

Usage Example (Merged Model)

For Full Precision Model

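```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_path = "your-org/ThermalGuard-v1_1"  # Merged model directory

# The LoRA weights are already merged, so the standard loaders are sufficient.
tokenizer = AutoTokenizer.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(model_path, device_map="auto")

# Chinese prompt: "Please explain the role and application scenarios of thermal barrier coatings."
input_text = "请解释热障涂层的作用和应用场景。"
inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

# Generate up to 200 new tokens, then decode the full sequence (prompt plus completion).
outputs = model.generate(**inputs, max_new_tokens=200)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```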

Key Changes for Merged Model:

- No PEFT dependency – The LoRA adapter has been fully merged into the base model.

- Direct loading – Use standard from_pretrained without LoRA-specific wrappers.

- Simplified deployment – Works like any standalone Hugging Face model.
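Merging folds the low-rank update into the base weight, W' = W + (alpha / r) · B · A, which is why the adapter matrices are no longer needed at inference. The pure-Python sketch below illustrates this on a toy matrix. The rank, alpha, and all values are hypothetical (this card does not state the LoRA rank or alpha), and the helper names are for illustration only.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w, lora_b, lora_a, alpha, rank):
    """Return W + (alpha / rank) * B @ A as a new matrix."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Toy 2x2 base weight with a rank-1 adapter (all values are illustrative).
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]   # shape (out_features, rank)
A = [[0.5, -0.5]]    # shape (rank, in_features)
ALPHA, RANK = 2, 1

W_merged = merge_lora(W, B, A, ALPHA, RANK)

# The merged weight gives the same output as base plus adapter on this input.
x = [[3.0], [4.0]]
base_plus_adapter = [
    [b + (ALPHA / RANK) * d]
    for (b,), (d,) in zip(matmul(W, x), matmul(B, matmul(A, x)))
]
merged_output = matmul(W_merged, x)

if __name__ == "__main__":
    print(W_merged)
    print(merged_output == base_plus_adapter)
```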

For Quantized GGUF Model (LM Studio/etc.)

- Download ThermalGuard-v1_1-q8.gguf
- Load in compatible applications:
  - LM Studio
  - llama.cpp
- Prompt Template: ChatML, Qwen3
- Temperature: 0.7
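ChatML wraps each turn in `<|im_start|>` and `<|im_end|>` markers. Applications such as LM Studio normally apply the template automatically, so the sketch below only illustrates the basic layout for anyone assembling prompts by hand. The helper name `build_chatml_prompt` is hypothetical, and the full Qwen3 template includes additional handling that is not reproduced here.

```python
def build_chatml_prompt(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts in plain ChatML."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Leave the assistant turn open so the model writes the reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    # Chinese prompt: "Please explain the role and application scenarios of thermal barrier coatings."
    messages = [{"role": "user", "content": "请解释热障涂层的作用和应用场景。"}]
    print(build_chatml_prompt(messages))
```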

Disclaimer

This model (ThermalGuard) is a research-oriented AI tool independently developed for materials science applications. The model's outputs should be considered as informational suggestions rather than professional advice, and users are advised to verify critical materials science information through authoritative sources.
