fix

not me putting the readme in the commit msg 😅

README.md

CHANGED

|

@@ -12,4 +12,68 @@ language:

|

|

| 12 |

- ru

|

| 13 |

base_model:

|

| 14 |

- HuggingFaceTB/SmolLM3-3B-Base

|

| 15 |

-

---

|

| 12 |

- ru

|

| 13 |

base_model:

|

| 14 |

- HuggingFaceTB/SmolLM3-3B-Base

|

| 15 |

+

---

|

| 16 |

+

|

| 17 |

+

# SmolLM3 Checkpoints

|

| 18 |

+

|

| 19 |

+

We are releasing intermediate checkpoints of SmolLM3 to enable further research.

|

| 20 |

+

|

| 21 |

+

## Pre-training

|

| 22 |

+

|

| 23 |

+

We release a checkpoint every 40,000 steps, which corresponds to 94.4B tokens.

|

| 24 |

+

The GBS (Global Batch Size) in tokens for SmolLM3-3B is 2,359,296. To calculate the number of tokens from a given step:

|

| 25 |

+

|

| 26 |

+

```python

|

| 27 |

+

nb_tokens = nb_step * GBS

|

| 28 |

+

```
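As a worked check of the formula above, the following sketch plugs in the GBS stated in this README and reproduces the 94.4B figure for one 40,000-step interval. The helper name `tokens_at_step` is illustrative only and is not part of any released code.

```python
GBS = 2_359_296  # global batch size in tokens, as stated above


def tokens_at_step(nb_step: int, gbs: int = GBS) -> int:
    """Return the number of tokens seen after `nb_step` training steps."""
    return nb_step * gbs


if __name__ == "__main__":
    # One checkpoint interval during pre-training.
    print(f"{tokens_at_step(40_000) / 1e9:.1f}B tokens")  # 94.4B tokens
```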

|

| 29 |

+

|

| 30 |

+

### Training Stages

|

| 31 |

+

|

| 32 |

+

**Stage 1:** Steps 0 to 3,450,000 (86 checkpoints)

|

| 33 |

+

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/stage1_8T.yaml)

|

| 34 |

+

|

| 35 |

+

**Stage 2:** Steps 3,450,000 to 4,200,000 (19 checkpoints)

|

| 36 |

+

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/stage2_8T_9T.yaml)

|

| 37 |

+

|

| 38 |

+

**Stage 3:** Steps 4,200,000 to 4,720,000 (13 checkpoints)

|

| 39 |

+

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/stage3_9T_11T.yaml)
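The per-stage checkpoint counts above are consistent with the 40,000-step interval. The sketch below assumes that checkpoints fall on exact multiples of 40,000 steps and that a boundary step belongs to the stage it closes. Both are assumptions made for illustration, and the names `STAGES` and `checkpoint_steps` are hypothetical.

```python
CHECKPOINT_EVERY = 40_000  # pre-training checkpoint interval, in steps

# (start_step, end_step) per stage, as listed above.
STAGES = {
    "stage1": (0, 3_450_000),
    "stage2": (3_450_000, 4_200_000),
    "stage3": (4_200_000, 4_720_000),
}


def checkpoint_steps(start: int, end: int, every: int = CHECKPOINT_EVERY) -> list[int]:
    """Return the multiples of `every` in the half-open interval (start, end]."""
    first = (start // every + 1) * every
    return list(range(first, end + 1, every))


if __name__ == "__main__":
    for name, (start, end) in STAGES.items():
        steps = checkpoint_steps(start, end)
        print(name, len(steps), "checkpoints, from step", steps[0], "to", steps[-1])
```

Under these assumptions the counts come out to 86, 19, and 13, matching the figures given for the three stages.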

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

### Long Context Extension

|

| 44 |

+

|

| 45 |

+

For the two additional stages that extend the context length to 64k, we save a checkpoint every 4,000 steps (9.4B tokens), for a total of 10 checkpoints:

|

| 46 |

+

|

| 47 |

+

**Long Context 4k to 32k**

|

| 48 |

+

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/long_context_4k_to_32k.yaml)

|

| 49 |

+

|

| 50 |

+

**Long Context 32k to 64k**

|

| 51 |

+

[config](https://huggingface.co/datasets/HuggingFaceTB/smollm3-configs/blob/main/long_context_32k_to_64k.yaml)

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

## Post-training

|

| 56 |

+

|

| 57 |

+

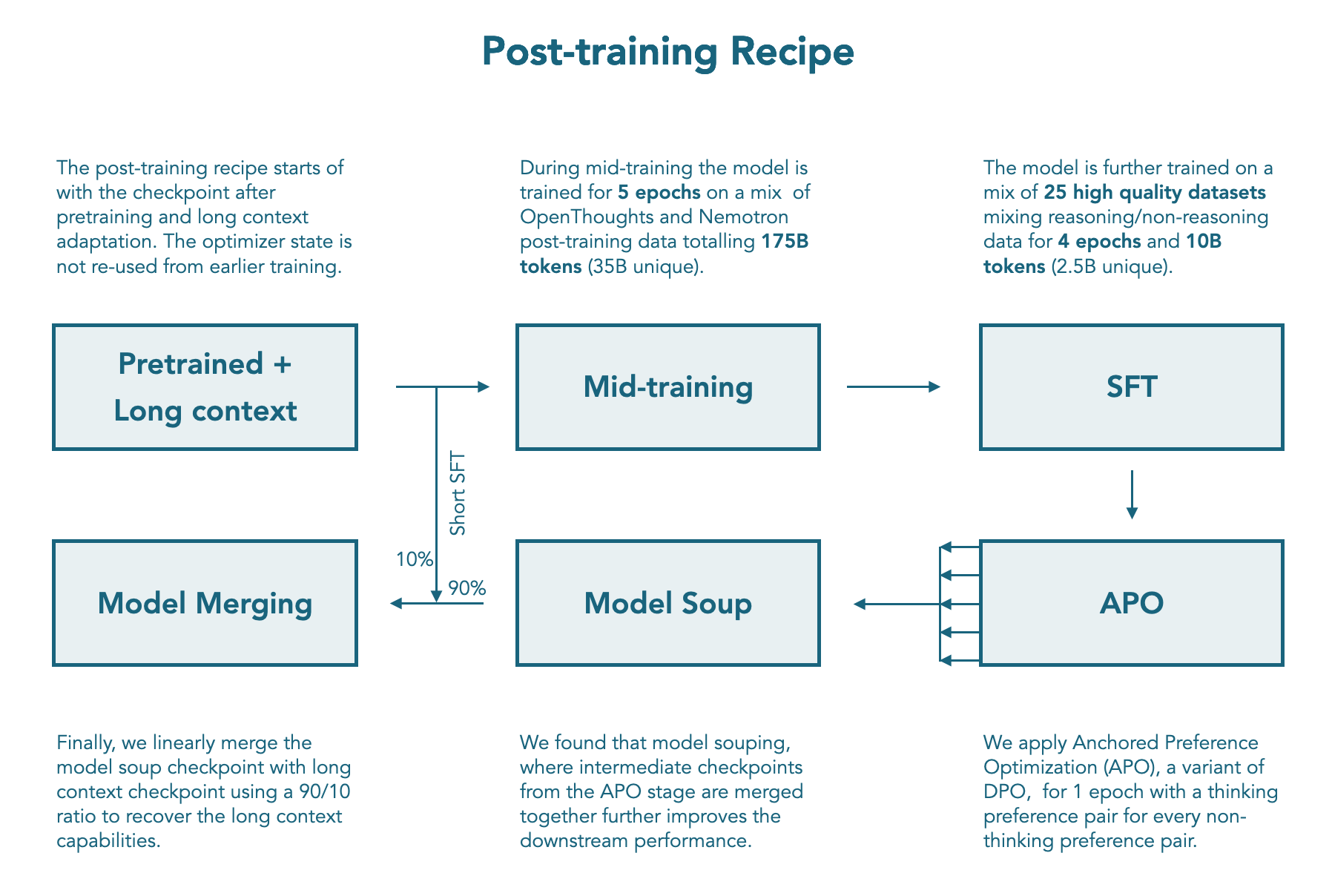

We release checkpoints after each stage of our post-training recipe: mid-training, SFT, APO soup, and LC expert.

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

## How to Load a Checkpoint

|

| 62 |

+

|

| 63 |

+

```python

|

| 64 |

+

# pip install transformers

|

| 65 |

+

import torch

|

| 66 |

+

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 67 |

+

checkpoint = "HuggingFaceTB/SmolLM3-3B-checkpoints"

|

| 68 |

+

revision = "stage1-step-40000"  # replace with the revision you want

|

| 69 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "mps" if hasattr(torch.backends, "mps") and torch.backends.mps.is_available() else "cpu")

|

| 70 |

+

tokenizer = AutoTokenizer.from_pretrained(checkpoint, revision=revision)

|

| 71 |

+

model = AutoModelForCausalLM.from_pretrained(checkpoint, revision=revision).to(device)

|

| 72 |

+

inputs = tokenizer.encode("Gravity is", return_tensors="pt").to(device)

|

| 73 |

+

outputs = model.generate(inputs)

|

| 74 |

+

print(tokenizer.decode(outputs[0]))

|

| 75 |

+

```
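The loading snippet above needs a valid `revision` name. One possible way to discover which revisions exist is to query the Hub with `list_repo_refs` from `huggingface_hub`. This sketch assumes the checkpoints are published as branches and that names follow the `stage1-step-40000` pattern used above. Neither assumption is confirmed by this README, and the helper names are hypothetical.

```python
def step_of(name: str) -> int:
    """Trailing integer of a name such as 'stage1-step-40000'; -1 if absent."""
    tail = name.rsplit("-", 1)[-1]
    return int(tail) if tail.isdigit() else -1


def filter_revisions(names, prefix):
    """Keep names starting with `prefix`, ordered by their trailing step number."""
    return sorted((n for n in names if n.startswith(prefix)), key=step_of)


def list_checkpoint_revisions(repo_id: str) -> list[str]:
    """Return branch names of a Hub repo (assumes revisions are branches)."""
    from huggingface_hub import list_repo_refs  # pip install huggingface_hub

    refs = list_repo_refs(repo_id)
    return [ref.name for ref in refs.branches]
```

Calling `list_checkpoint_revisions("HuggingFaceTB/SmolLM3-3B-checkpoints")` requires network access. Its result can then be passed to `filter_revisions(names, "stage1-")` to narrow the list to a single stage.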

|

| 76 |

+

|

| 77 |

+

## License

|

| 78 |

+

|

| 79 |

+

[Apache 2.0](https://www.apache.org/licenses/LICENSE-2.0)

|