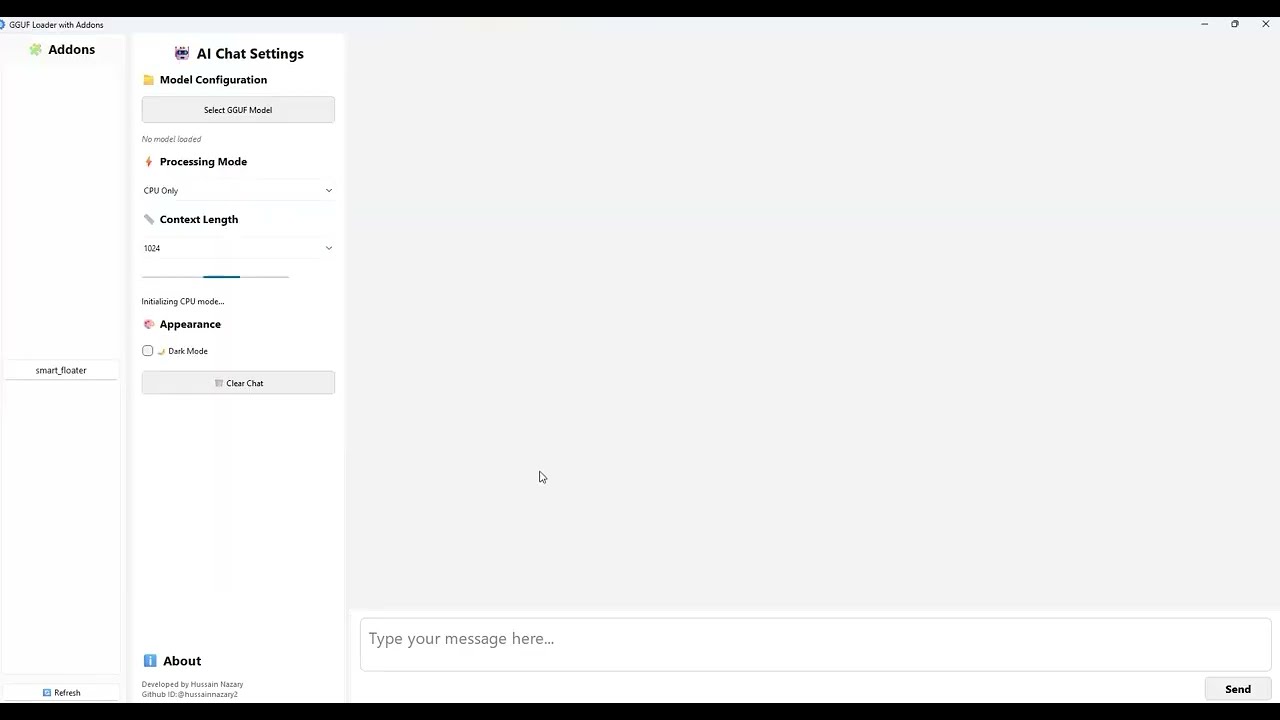

GGUF Loader is a GUI-first, plugin-based launcher for running local LLMs — featuring a built-in floating assistant that gives you instant AI access on top of any window.

🧠 Problem

Open-source LLMs are becoming faster and smarter, but the tools around them are still broken. Most setups:

- Require command-line knowledge

- Force you to manage models across multiple platforms

- Offer no way to extend them with features like summarization or RAG

- Offer no native way to interact with AI while you work

This makes local AI powerful in theory, but unusable for most people in practice.

💡 Solution

GGUF Loader brings everything together:

- One-click model loading with GGUF support

- A modern desktop interface anyone can use

- A built-in floating button that keeps the assistant available on top of any window as you work

- A growing plugin system — inspired by Blender — so users can extend, customize, and share features

From loading a Mistral model to summarizing your documents or running agents over folders, GGUF Loader is designed to be your personal AI operating system.

🎯 Vision

We believe the future of AI is local, modular, and user-owned.

GGUF Loader is building the user interface layer for that future — a plug-and-play AI desktop engine that works fully offline, built around your workflows and privacy.

🔧 What’s Next

- Public plugin SDK

- Pro features: drag-and-drop RAG builder, context memory, advanced floating tools

- Addon marketplace (free and paid)

- Community system for sharing tools

🛠️ Dev Philosophy

- 💻 Local-first, no cloud dependency

- 🧩 Modular and hackable

- 🖱️ Usable by non-devs

- ⚡ Fast to launch, fast to load

GGUF Loader is open-source and in active development.

If you're an investor, contributor, or power user, get in touch or star the repo to follow progress.

Update (v2.0.1): GGUF Loader now includes a powerful floating assistant button that lets you interact with any text instantly, system-wide. It also supports an addon system, so developers can extend its capabilities with plugins. These features make GGUF Loader even more flexible and interactive for local AI workflows. Try the new version now!
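This README does not document the addon API itself, so the Python sketch below only illustrates the general registry pattern that plugin systems of this kind commonly build on: each addon registers a function under a name, and the host app dispatches the user's selected text to it. Every name here (`ADDONS`, `register_addon`, `run_addon`, and the two sample addons) is hypothetical and is not GGUF Loader's actual interface.

```python
"""Generic addon-registry pattern (hypothetical; NOT GGUF Loader's real addon API)."""
from typing import Callable, Dict

# Hypothetical registry: addon name -> function that transforms selected text.
ADDONS: Dict[str, Callable[[str], str]] = {}


def register_addon(name: str):
    """Decorator that records a function in ADDONS under the given name."""
    def decorator(func: Callable[[str], str]) -> Callable[[str], str]:
        if name in ADDONS:
            raise ValueError(f"addon {name!r} is already registered")
        ADDONS[name] = func
        return func
    return decorator


@register_addon("word_count")
def word_count(text: str) -> str:
    # Counts whitespace-separated tokens in the selected text.
    return f"{len(text.split())} words"


@register_addon("shout")
def shout(text: str) -> str:
    return text.upper()


def run_addon(name: str, selected_text: str) -> str:
    """Dispatch selected text to a registered addon, as a host app might."""
    try:
        addon = ADDONS[name]
    except KeyError:
        raise ValueError(f"unknown addon {name!r}; available: {sorted(ADDONS)}") from None
    return addon(selected_text)


if __name__ == "__main__":
    print(run_addon("word_count", "local models, local data"))
```

The decorator keeps each addon self-contained: defining the function is enough to make it available, and the host only needs the registry to list or run addons.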

🧠 GGUF Loader Quickstart

📦 1. Install GGUF Loader via pip

```bash
pip install ggufloader
```

🚀 2. Launch the App

After installation, run the following command in your terminal:

```bash
ggufloader
```

This will start the GGUF Loader interface. You can now load and chat with any GGUF model locally.
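If your shell reports that `ggufloader` is not found, the usual causes are that the package went into a different Python environment or that pip's scripts directory is not on your PATH. The Python sketch below is not part of GGUF Loader; it is a hypothetical helper (`check_install` is an illustrative name) that uses only the standard library to check both conditions for the interpreter you run it with.

```python
"""Check that GGUF Loader is installed and launchable (illustrative sketch, not part of GGUF Loader)."""
from __future__ import annotations

import shutil
import sys
from importlib import metadata


def check_install(dist_name: str = "ggufloader", command: str = "ggufloader") -> list[str]:
    """Return a list of problems found; an empty list means both checks passed."""
    problems: list[str] = []
    try:
        # Is the pip distribution installed for the interpreter running this script?
        version = metadata.version(dist_name)
        print(f"{dist_name} {version} is installed for {sys.executable}")
    except metadata.PackageNotFoundError:
        problems.append(f"{dist_name!r} is not installed for {sys.executable}; try: pip install {dist_name}")
    # Can the shell resolve the launcher command on PATH?
    if shutil.which(command) is None:
        problems.append(f"{command!r} is not on PATH; add pip's scripts directory to PATH")
    return problems


if __name__ == "__main__":
    issues = check_install()
    print("\n".join(issues) if issues else "all checks passed; run: ggufloader")
```

Save it as, for example, `check_install.py` and run it with the same `python` whose `pip` you used to install GGUF Loader.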

🧩 🎬 Demo Video: Addon System + Floating Tool in Local LLM (v2.0.1 Update)

Discover how to supercharge your local AI workflows using the new floating addon system! No coding needed. Works offline. Let us know if you'd like support for GUI launching, a system tray icon, or keyboard shortcuts.

🔽 Download GGUF Models

⚡ Click a link below to download the model file directly (no Hugging Face page in between).
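A failed download can leave you with an HTML error page saved under a `.gguf` name instead of a model. As an optional check before loading a file, the Python sketch below (not part of GGUF Loader) reads the fixed header that the GGUF format defines: the 4-byte magic `GGUF`, a uint32 version, then uint64 tensor and metadata key/value counts. It assumes the little-endian layout of GGUF version 2 or newer, and it only inspects the header, so it does not prove the rest of the file is intact. The names `parse_gguf_header` and `read_gguf_header` are illustrative.

```python
"""Check that a downloaded file looks like a GGUF model (illustrative sketch)."""
import struct
import sys
from typing import NamedTuple

HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def parse_gguf_header(head: bytes) -> GGUFHeader:
    """Parse the fixed GGUF header, assuming little-endian layout and version >= 2."""
    if head[:4] != b"GGUF":
        # A failed download often saves an HTML page instead of the model.
        raise ValueError(f"missing GGUF magic bytes (file starts with {head[:4]!r})")
    if len(head) < HEADER_SIZE:
        raise ValueError("file is too short to contain a GGUF header")
    version, tensor_count, kv_count = struct.unpack("<IQQ", head[4:HEADER_SIZE])
    if version < 2:
        # Older v1 files used a different header layout; this sketch does not handle them.
        raise ValueError(f"GGUF version {version} is not supported by this sketch")
    return GGUFHeader(version, tensor_count, kv_count)


def read_gguf_header(path: str) -> GGUFHeader:
    """Read only the first HEADER_SIZE bytes, so even multi-gigabyte models check instantly."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))


if __name__ == "__main__":
    for model_path in sys.argv[1:]:
        try:
            print(f"{model_path}: {read_gguf_header(model_path)}")
        except (OSError, ValueError) as err:
            print(f"{model_path}: not a usable GGUF file ({err})")
```

Run it as `python check_gguf.py path/to/model.gguf`; the script name is just an example.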

🧠 GPT-OSS Models (Open Source GPTs)

High-quality, Apache 2.0-licensed, reasoning-focused models for local and enterprise use.