Llama-3.2-3B-LongCoT

A small (3B-parameter) model fine-tuned for long chain-of-thought (LongCoT) reasoning.

Features

- Fine-tuned on high-quality synthetic data.
- Decides whether to use LongCoT based on the complexity of the question.
- Strong at mathematics and reasoning.

Benchmark

| Benchmark | Llama-3.2-3B-Instruct | Llama-3.2-3B-LongCoT |
|---|---|---|
| Math | 35.5 | 52.0 |
| GSM8K | 77.3 | 82.3 |
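
To make the gap between the two models concrete, the short sketch below computes the absolute and relative gains from the scores in the table. The scores are copied from the table as-is; the `scores` dictionary and the `gains` helper are illustrative names, and since the table does not state the metric, differences are reported simply as points.

```python
# Scores copied from the benchmark table: (Instruct baseline, LongCoT).
scores = {
    "Math": (35.5, 52.0),
    "GSM8K": (77.3, 82.3),
}


def gains(baseline, tuned):
    """Return (absolute gain in points, relative gain in percent), rounded to 1 decimal."""
    absolute = tuned - baseline
    relative = absolute / baseline * 100
    return round(absolute, 1), round(relative, 1)


for name, (baseline, tuned) in scores.items():
    absolute, relative = gains(baseline, tuned)
    print(f"{name}: +{absolute} points ({relative}% relative)")
```

The relative figure divides the gain by the baseline score, so the same absolute gain counts for more on a benchmark where the baseline is low.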

Inference

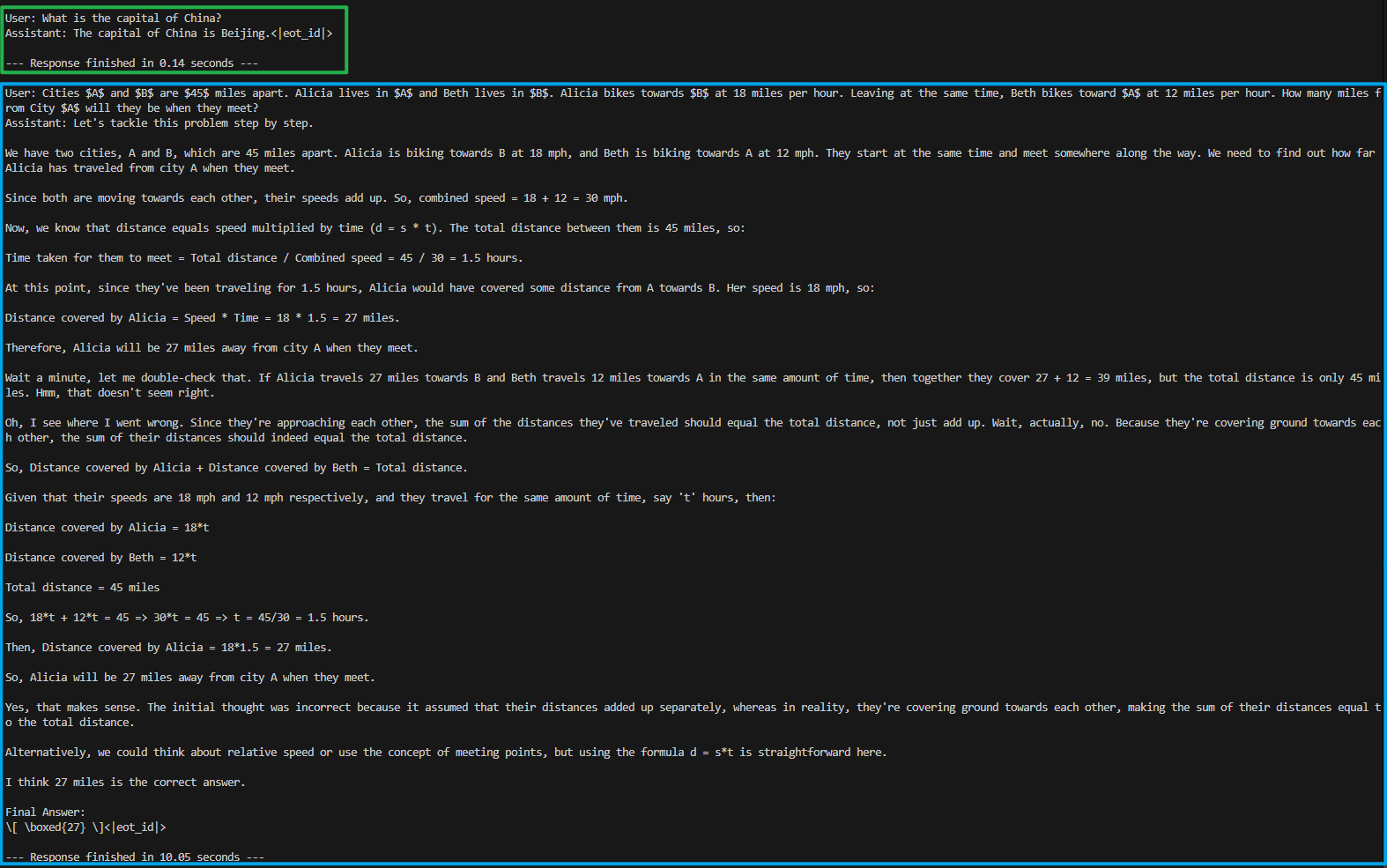

Example of streaming inference:

    import time

    import torch
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        TextStreamer,
    )

    # Model ID from Hugging Face
    model_id = "Kadins/Llama-3.2-3B-LongCoT"

    # Load the pre-trained model with appropriate data type and device mapping
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # Use bfloat16 for optimized performance
        device_map="auto",  # Automatically map the model to available devices
    )

    # Load the tokenizer associated with the model
    tokenizer = AutoTokenizer.from_pretrained(model_id)


    def stream_chat(messages, max_new_tokens=8192, top_p=0.95, temperature=0.6):
        """
        Generates a response using streaming inference.

        Args:
            messages (list): A list of dictionaries containing the conversation prompt.
            max_new_tokens (int): Maximum number of tokens to generate.
            top_p (float): Nucleus sampling parameter for controlling diversity.
            temperature (float): Sampling temperature to control response creativity.
        """
        # Prepare the input by applying the chat template and tokenizing
        inputs = tokenizer.apply_chat_template(
            messages,
            tokenize=True,
            add_generation_prompt=True,
            return_tensors="pt",
            return_dict=True,  # Ensure the output is a dictionary
        ).to(model.device)  # Move the inputs to the same device as the model

        # Initialize the TextStreamer for real-time output
        streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

        # Record the start time for performance measurement
        start_time = time.time()

        # Generate the response using the model's generate method with streaming
        model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            repetition_penalty=1.1,
            top_p=top_p,
            temperature=temperature,
            streamer=streamer,  # Enable streaming of the generated tokens
        )

        # Calculate and print the total response time
        total_time = time.time() - start_time
        print(f"\n--- Response finished in {total_time:.2f} seconds ---")


    def chat_loop():
        """
        Initiates an interactive chat session with the model.

        Continuously reads user input and generates model responses until the user exits.
        """
        while True:
            # Initialize the conversation with a system message
            messages = [
                {"role": "system", "content": "You are a reasoning expert and helpful assistant."},
            ]

            # Prompt the user for input
            user_input = input("\nUser: ")
            if user_input.strip().lower() in ["exit", "quit"]:
                print("Exiting chat...")
                break

            # Append the user's message to the conversation history
            messages.append({"role": "user", "content": user_input})

            print("Assistant: ", end="", flush=True)

            # Generate and stream the assistant's response
            stream_chat(messages)

            # Note: Currently, the assistant's reply is streamed directly to the console.
            # To store the assistant's reply in the conversation history, additional handling is required.


    if __name__ == "__main__":
        # Start the interactive chat loop when the script is executed
        chat_loop()
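
The note at the end of `chat_loop` says that storing the assistant's reply needs additional handling. One possible approach, sketched here under assumptions, is to swap `TextStreamer` for `TextIteratorStreamer` from `transformers`, which yields text chunks that can be both printed and collected. The helpers `collect_stream` and `stream_and_record` are hypothetical names that are not part of the original script, and this is not necessarily how the model author would handle it.

```python
from threading import Thread


def collect_stream(chunks, echo=True):
    """Print text chunks as they arrive and return the concatenated text."""
    parts = []
    for chunk in chunks:
        if echo:
            print(chunk, end="", flush=True)
        parts.append(chunk)
    return "".join(parts)


def stream_and_record(model, tokenizer, messages, **generate_kwargs):
    """Stream a reply to the console, then append it to `messages`.

    Hypothetical helper: `model` and `tokenizer` are assumed to be loaded
    as in the script above.
    """
    # Imported lazily so collect_stream() can be used without transformers.
    from transformers import TextIteratorStreamer

    inputs = tokenizer.apply_chat_template(
        messages,
        tokenize=True,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)

    streamer = TextIteratorStreamer(
        tokenizer, skip_prompt=True, skip_special_tokens=True
    )

    # generate() blocks until it finishes, so run it in a background thread
    # and consume the streamer from the main thread.
    worker = Thread(
        target=model.generate,
        kwargs=dict(inputs, streamer=streamer, **generate_kwargs),
    )
    worker.start()
    reply = collect_stream(streamer)
    worker.join()

    # Record the reply so the next turn sees the full conversation.
    messages.append({"role": "assistant", "content": reply})
    return reply
```

To keep context across turns, `messages` would also have to be created once before the `while` loop rather than on every iteration, and the call would become something like `stream_and_record(model, tokenizer, messages, max_new_tokens=8192, do_sample=True, top_p=0.95, temperature=0.6, repetition_penalty=1.1)`. Long chain-of-thought replies can grow the history quickly, so trimming older turns may be necessary.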
