metadata

base_model:

- Qwen/Qwen3-14B-Base

datasets:

- MegaScience/MegaScience

language:

- en

license: apache-2.0

metrics:

- accuracy

pipeline_tag: text-generation

library_name: transformers

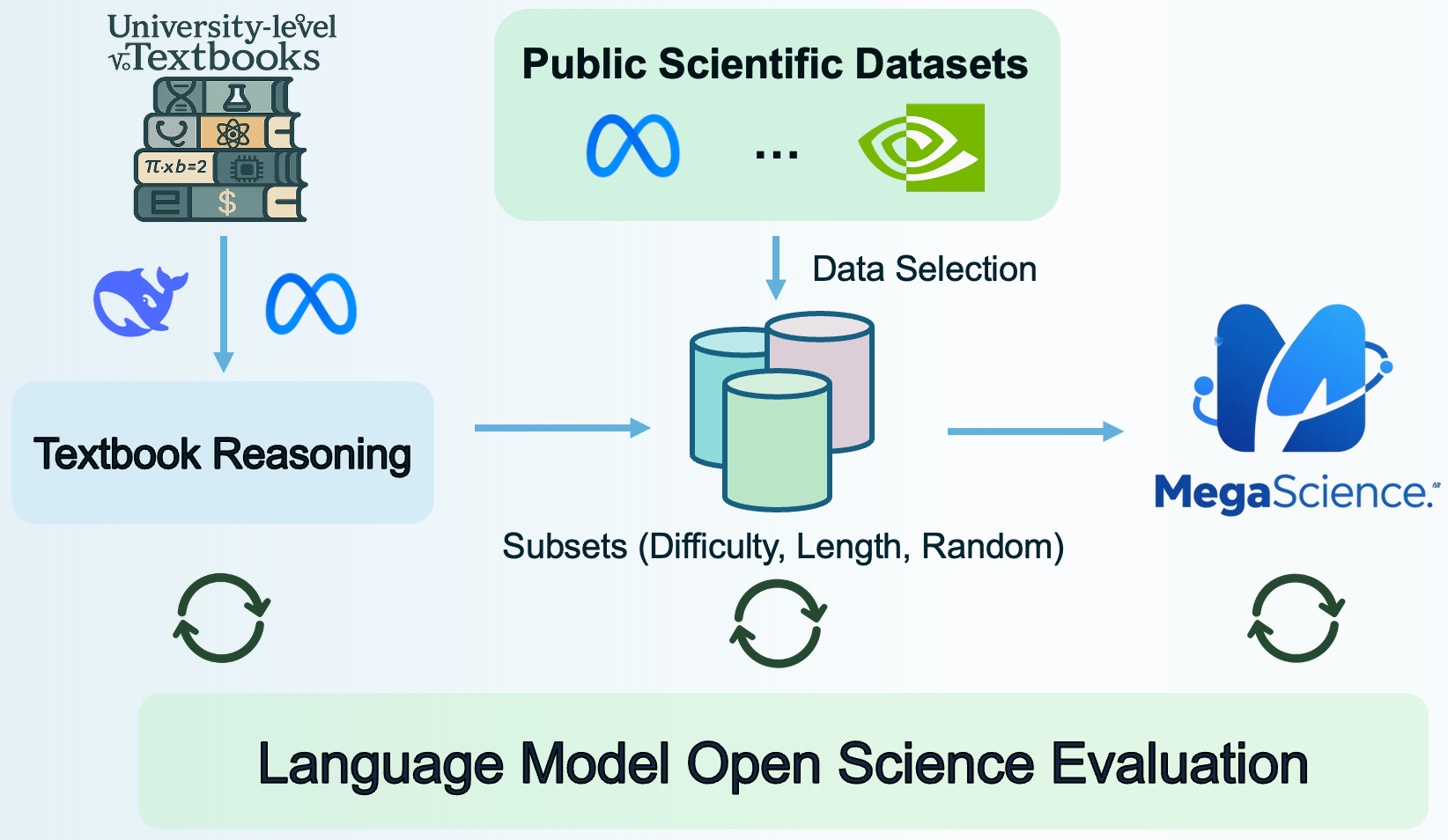

MegaScience: Pushing the Frontiers of Post-Training Datasets for Science Reasoning

This repository contains the Qwen3-14B-MegaScience model, a large language model fine-tuned on the MegaScience dataset for enhanced scientific reasoning.

Project Link: https://huggingface.co/MegaScience (Hugging Face Organization for MegaScience project)

Code Repository: https://github.com/GAIR-NLP/lm-open-science-evaluation

Usage

You can use this model with the transformers library for text generation:

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

model_id = "MegaScience/Qwen3-14B-MegaScience"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16, # or torch.float16 if bfloat16 is not supported

device_map="auto"

)

messages = [

{"role": "system", "content": "You are a helpful and knowledgeable assistant."},

{"role": "user", "content": "Explain the concept of quantum entanglement in simple terms."}

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer(text, return_tensors="pt").to(model.device)

generated_ids = model.generate(

model_inputs.input_ids,

max_new_tokens=512,

do_sample=True,

temperature=0.7,

top_p=0.9,

eos_token_id=tokenizer.eos_token_id,

)

response = tokenizer.decode(generated_ids[0][model_inputs.input_ids.shape[1]:], skip_special_tokens=True)

print(response)

Qwen3-14B-MegaScience

Training Recipe

- LR: 5e-6

- LR Schedule: Cosine

- Batch Size: 512

- Max Length: 4,096

- Warm Up Ratio: 0.05

- Epochs: 3

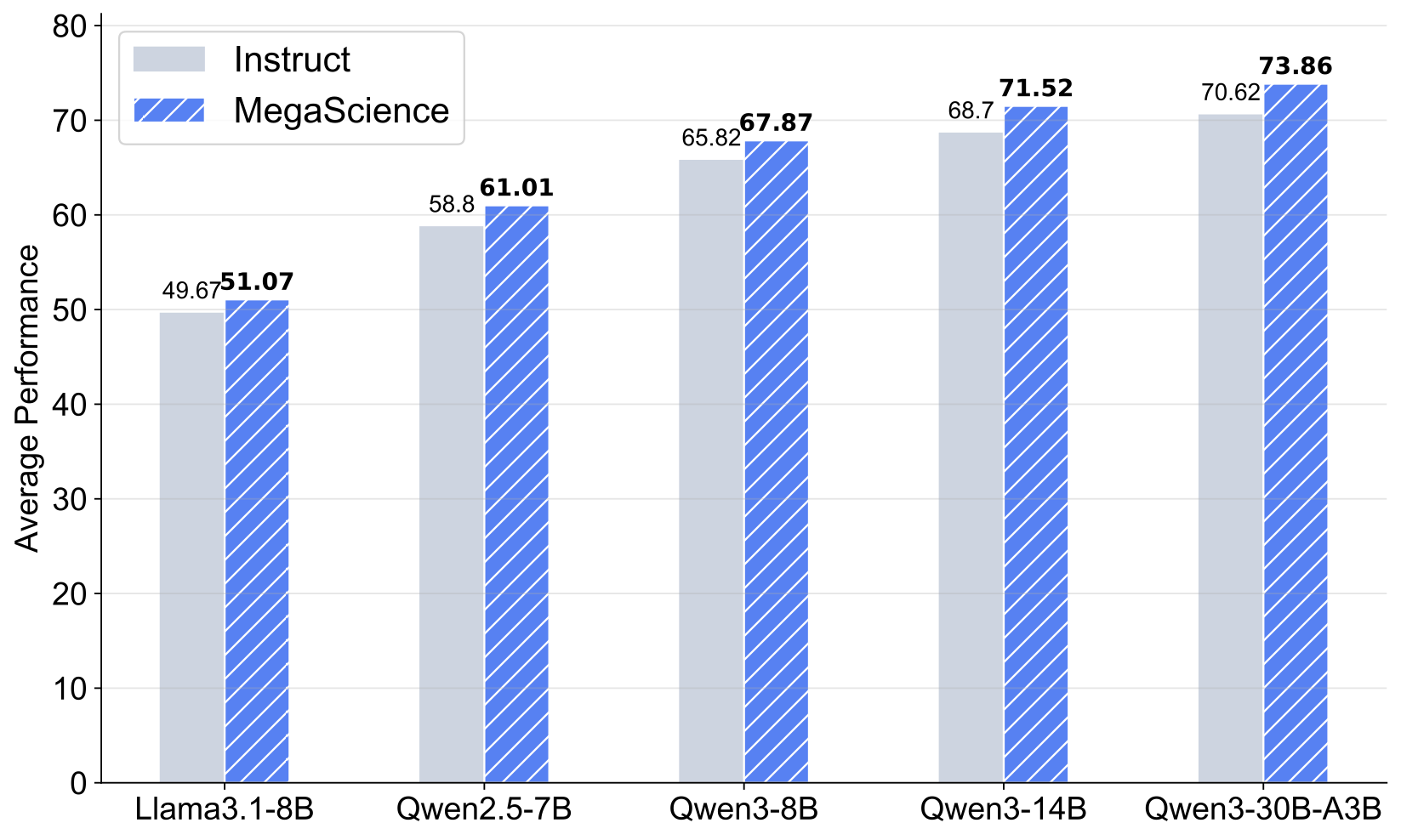

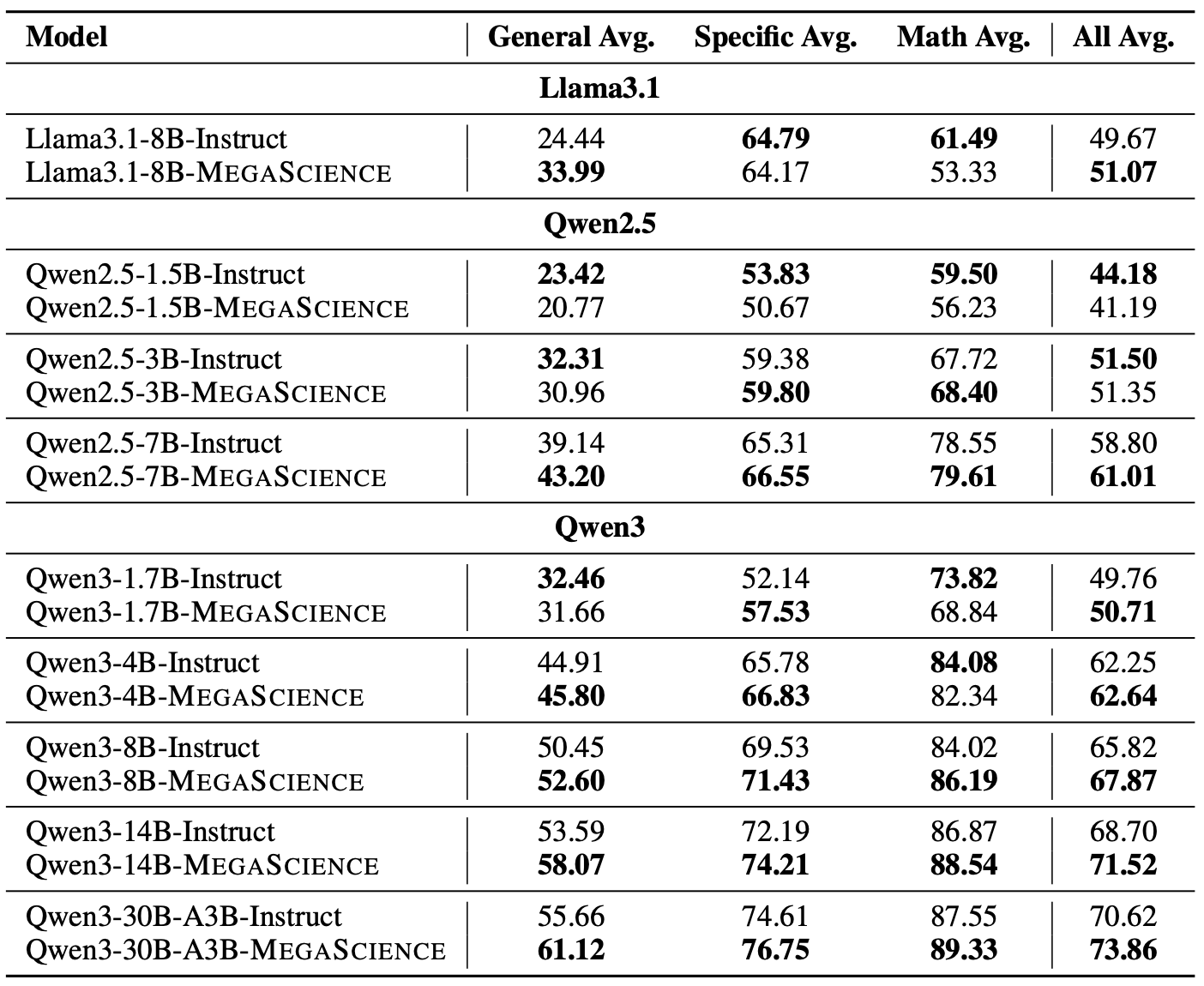

Evaluation Results

More about MegaScience

Citation

If you use our dataset or find our work useful, please cite

@article{fan2025megascience,

title={MegaScience: Pushing the Frontiers of Post-Training Datasets for Science Reasoning},

author={Fan, Run-Ze and Wang, Zengzhi and Liu, Pengfei},

year={2025},

journal={arXiv preprint arXiv:2507.16812},

url={https://arxiv.org/abs/2507.16812}

}