Aryabhata 1.0: An Exam-Focused Language Model for JEE Math

Overview

Aryabhata 1.0 is a 7B-parameter small language model for mathematics, developed by Physics Wallah AI Research and optimized for high-stakes Indian competitive exams such as JEE Main. Despite its compact size, Aryabhata 1.0 achieves state-of-the-art performance on exam-centric reasoning tasks with high token efficiency and low inference cost.

🚧 Aryabhata 1.0 is an experimental release. We are actively seeking feedback; please share it in the Discussion tab of this repo.

🧠 Key Features

- Architecture: 7B-parameter causal decoder-based model.
- Exam-Centric Optimization: Specifically tuned for JEE-level mathematics reasoning.
- High Accuracy:
  - 86% on the JEE Main January 2025 session.
  - 90.2% on the JEE Main April 2025 session.
- Token Efficiency: Operates effectively within a ~2K-token window, compared with the ~8K tokens required by other reasoning models.
- Compute Efficient: Trained on a 1x2 NVIDIA H100 GPU setup using an optimized pipeline.

🛠️ Training Details

- Training Data: ~130K problem-solution pairs curated from proprietary Physics Wallah exam datasets.
- Training Pipeline:
  - Model Merging
  - Rejection Sampling
  - Supervised Fine-Tuning (SFT)
  - Reinforcement Learning with Verifiable Rewards (RLVR)

🔀 Model Merging

We began with model merging (a weighted average of model parameters) to build a strong initialization, Aryabhata 0.5, that combines the capabilities of diverse models:

- Qwen 2.5 Math: A robust math-centric LLM with solid symbolic math foundations.
- Ace Math: An enhanced version of Qwen 2.5 Math, fine-tuned by NVIDIA for improved accuracy on mathematics benchmarks.
- DeepSeek R1 Distill Qwen: A long-form reasoning model fine-tuned on reasoning traces distilled from DeepSeek R1.
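A minimal sketch of weighted-average merging, assuming all source models share the same architecture and parameter names. The card does not publish the merge coefficients, so any weights passed in are placeholders, and plain Python lists stand in for the tensors a real checkpoint would hold. `weighted_average_merge` is a hypothetical helper, not a library function.

```python
from typing import Dict, List

# Hypothetical "state dict": parameter name -> flat list of floats.
# Real checkpoints hold tensors; lists keep this sketch dependency-free.
StateDict = Dict[str, List[float]]


def weighted_average_merge(models: List[StateDict], weights: List[float]) -> StateDict:
    """Merge same-architecture models by averaging each parameter.

    Weights are normalized to sum to 1, so every merged parameter is a
    convex combination of the corresponding source parameters.
    """
    if not models or len(models) != len(weights):
        raise ValueError("need at least one model and one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    coeffs = [w / total for w in weights]

    names = models[0].keys()
    for m in models[1:]:
        if m.keys() != names:
            raise ValueError("all models must have the same parameter names")

    merged: StateDict = {}
    for name in names:
        length = len(models[0][name])
        if any(len(m[name]) != length for m in models):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise weighted sum across models.
        merged[name] = [
            sum(c * m[name][i] for c, m in zip(coeffs, models))
            for i in range(length)
        ]
    return merged
```

With weights `[1, 1, 2]`, the third model contributes half of every parameter: merging `{"w": [1.0, 2.0]}`, `{"w": [3.0, 4.0]}`, and `{"w": [5.0, 6.0]}` gives `{"w": [3.5, 4.5]}`.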

📚 Data Curation + Rejection Sampling

We extracted ~250K raw questions from Physics Wallah's internal database and applied aggressive filtering and cleaning:

- Removed: diagram-based, non-English, and option-heavy questions.
- Kept: questions matching the distribution of JEE Main 2019–2024.

Final curated dataset: ~130K high-quality questions.

For each question:

- Generated 4 chain-of-thought (CoT) solutions using Aryabhata 0.5.
- Retained only those leading to the correct final answer.
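The filtering step above can be sketched as follows. This is a minimal illustration, not the pipeline itself: the card does not say how correctness was checked, so `generate` and `is_correct` are hypothetical callables supplied by the caller, and the `"question"`/`"answer"` keys are assumed field names.

```python
from typing import Callable, Dict, List


def rejection_sample(
    questions: List[Dict[str, str]],
    generate: Callable[[str, int], List[str]],
    is_correct: Callable[[str, str], bool],
    num_samples: int = 4,
) -> List[Dict[str, str]]:
    """Keep only sampled CoTs whose final answer is judged correct.

    questions:  items with hypothetical keys "question" and "answer".
    generate:   hypothetical sampler returning num_samples CoTs for a question.
    is_correct: hypothetical checker comparing a CoT's final answer to the key.
    """
    kept: List[Dict[str, str]] = []
    for item in questions:
        for cot in generate(item["question"], num_samples):
            # Discard any CoT whose final answer does not match the answer key.
            if is_correct(cot, item["answer"]):
                kept.append({"question": item["question"], "cot": cot})
    return kept
```

A question for which none of the sampled CoTs is correct contributes nothing to the output, which is why the resulting SFT set covers fewer questions than the curated pool.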

Resulting Dataset:

- ~100K questions
- ~350K high-quality CoTs

We used this dataset for SFT.

🎯 Reinforcement Learning with Verifiable Rewards (RLVR)

We used a custom in-house variant of Group Relative Policy Optimization (GRPO), adapted for math-specific reward functions, with two changes:

- Removed the KL-divergence penalty
- Removed clipping
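A minimal sketch of the resulting objective, assuming the standard GRPO formulation (rewards standardized within the group of completions sampled for one question, per-token importance ratios averaged over each completion) with the two modifications listed above. The in-house variant is not published, so the function names, the population standard deviation, and `eps` are assumptions. Plain floats stand in for autograd tensors, so this only computes the loss value; a real trainer would compute it on tensors and backpropagate.

```python
import math
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Standardize rewards within one group of completions for the same question."""
    n = len(rewards)
    mean = sum(rewards) / n
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / n)  # population std
    return [(r - mean) / (std + eps) for r in rewards]


def grpo_loss_no_kl_no_clip(
    new_logps: Sequence[Sequence[float]],
    old_logps: Sequence[Sequence[float]],
    rewards: Sequence[float],
) -> float:
    """GRPO-style surrogate loss with the KL penalty and ratio clipping removed.

    new_logps / old_logps: per-token log-probabilities of each completion under
    the current policy and under the policy that sampled it.
    rewards: one verifiable reward per completion, e.g. 1.0 if the final
    answer is correct, else 0.0.
    """
    advantages = group_relative_advantages(rewards)
    total = 0.0
    for adv, new_seq, old_seq in zip(advantages, new_logps, old_logps):
        # Per-token importance ratio; with no clipping it enters the loss as-is.
        ratios = [math.exp(n - o) for n, o in zip(new_seq, old_seq)]
        # Average over the tokens of this completion.
        total += adv * sum(ratios) / len(ratios)
    # Average over the group and negate, since optimizers minimize.
    return -total / len(rewards)
```

If every completion in a group gets the same reward, all advantages are zero and that group contributes no learning signal; with no KL term, nothing else pulls the policy toward the reference model.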

We applied RLVR to the remaining ~30K questions, i.e., those not included in the ~100K-question SFT dataset.

This multi-phase training strategy allows Aryabhata 1.0 to capture pedagogy-aligned reasoning patterns, making it highly effective for solving real student queries in mathematics.

📊 Performance Highlights

Evaluation Setup

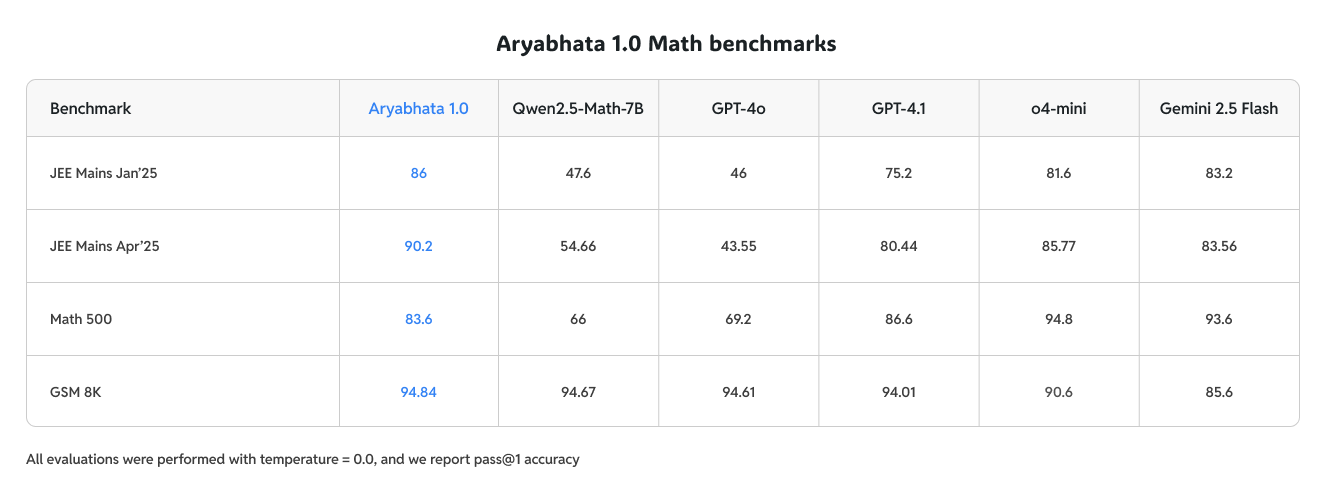

All evaluations were performed with temperature = 0.0, and we report pass@1 accuracy.

Evaluation Datasets

We evaluated the model on two sets of official JEE Main 2025 mathematics papers:

- January Session: 10 question papers containing 250 questions.
- April Session: 9 question papers containing 225 questions.
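With temperature 0.0 the model produces a single greedy answer per question, so pass@1 is the fraction of questions graded correct. Assuming accuracy is computed over all questions in a session (with equal-sized papers this matches averaging per paper), the reported figures correspond approximately to the counts below; this is arithmetic on the numbers above, not a published breakdown:

```latex
\begin{align*}
\text{pass@1} &= \frac{1}{N}\sum_{i=1}^{N} \mathbb{1}\left[\text{answer } i \text{ graded correct}\right] \\
\text{January: } 0.86 \times 250 &= 215 \text{ of } 250 \text{ questions} \\
\text{April: } 0.902 \times 225 &= 202.95 \approx 203 \text{ of } 225 \text{ questions}
\end{align*}
```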

Each paper includes a mix of:

- Multiple-Choice Questions (MCQs) with one correct option
- Numeric Answer Type (NAT) questions requiring precise numerical responses

Evaluation Metric

We used a composite evaluation metric to reflect real-world grading rigor and reduce false positives:

- Float Match
  - Compares predicted and target answers within a tolerance (±1e-9)
  - Handles rounding artifacts and small numerical errors robustly
- String Match
  - Used for symbolic answers (e.g., fractions, radicals)
  - Uses strict exact match: predictions must match the ground truth character-for-character
- LLM-as-Judge (GPT-4o-mini)
  - Used to check mathematical equivalence for answers in ambiguous formats
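The card does not state how the three checks are combined. A minimal sketch, assuming they run as a cascade: a float comparison when both answers parse as numbers (decisive in that case, so a numeric mismatch is never overturned), otherwise strict string equality, otherwise an optional judge. The `judge` argument is a hypothetical stand-in for a GPT-4o-mini call, no real API client is used, and `composite_match` is an illustrative name.

```python
from typing import Callable, Optional


def _parse_float(s: str) -> Optional[float]:
    """Return s as a float, or None if it is not a plain number."""
    try:
        return float(s.strip())
    except ValueError:
        return None


def composite_match(
    pred: str,
    target: str,
    judge: Optional[Callable[[str, str], bool]] = None,
    tol: float = 1e-9,
) -> bool:
    p, t = _parse_float(pred), _parse_float(target)
    if p is not None and t is not None:
        # Float match: both answers are numeric, so compare within tolerance.
        return abs(p - t) <= tol
    if pred == target:
        # String match: strict, character-for-character equality.
        return True
    if judge is not None:
        # Hypothetical LLM-as-judge fallback for equivalent answers in other formats.
        return judge(pred, target)
    return False
```

Calling the judge only after the cheaper checks fail keeps LLM calls to the genuinely ambiguous cases, such as `\frac{1}{2}` versus `0.5`.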

🔹 Accuracy Comparison Across Models

Aryabhata achieves the best accuracy on JEE Main mathematics among the models compared, on par with frontier models

🔹 Accuracy vs Token Usage

Aryabhata is on par with frontier models in terms of accuracy vs token usage

🔧 Intended Use

Primary Use Cases:

- Competitive exam preparation (JEE Main-level mathematics problems)
- Question answering and doubt-solving systems
- Educational tutoring and concept explanation

💡 How to Use

🧪 Using with 🤗 Transformers

from transformers import AutoTokenizer, AutoModelForCausalLM, GenerationConfig

model_id = "PhysicsWallahAI/Aryabhata-1.0"

tokenizer = AutoTokenizer.from_pretrained(model_id)
# torch_dtype="auto" loads the weights in the dtype stored in the checkpoint
model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")

# Define stop strings
stop_strings = ["<|im_end|>", "<|end|>", "<im_start|>", "```python\n", "<|im_start|>", "]}}]}}]"]

def strip_bad_tokens(s, stop_strings):
    # Remove a stop string left at the end of the decoded text, if any
    for suffix in stop_strings:
        if s.endswith(suffix):
            return s[:-len(suffix)]
    return s

# Create generation config (sampling options such as temperature and top_p apply when do_sample=True)
generation_config = GenerationConfig(
    max_new_tokens=4096,
    stop_strings=stop_strings,
)

query = 'Find all the values of \\sqrt[3]{1}'
messages = [{'role': 'system', 'content': 'Think step-by-step; put only the final answer inside \\boxed{}.'},
            {'role': 'user', 'content': query}]

text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)

inputs = tokenizer([text], return_tensors="pt").to(model.device)
# generate() needs the tokenizer to match stop_strings
outputs = model.generate(**inputs, generation_config=generation_config, tokenizer=tokenizer)

# Decode only the newly generated tokens, not the echoed prompt
new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
print(strip_bad_tokens(tokenizer.decode(new_tokens, skip_special_tokens=True), stop_strings))

⚡ Using with vLLM

To run the model efficiently using vLLM:

from vllm import LLM, SamplingParams

# Initialize model (downloads from Hugging Face if not local)
llm = LLM(model="PhysicsWallahAI/Aryabhata-1.0")

# Define prompt and sampling configuration
query = 'Find all the values of \\sqrt[3]{1}'
messages = [{'role': 'system', 'content': 'Think step-by-step; put only the final answer inside \\boxed{}.'},
            {'role': 'user', 'content': query}]

sampling_params = SamplingParams(temperature=0.0, max_tokens=4*1024, stop=["<|im_end|>", "<|end|>", "<im_start|>", "```python\n", "<|im_start|>", "]}}]}}]"])

# Run inference
results = llm.chat(messages, sampling_params)

# Print result
print(results[0].outputs[0].text.strip())
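Both examples ask the model to put only its final answer inside `\boxed{}`. To grade or display just that answer, a small helper can pull it out of the generated text. This is a minimal sketch assuming the answer sits in the last `\boxed{...}` of the output; `extract_boxed_answer` is a hypothetical helper, not part of Transformers or vLLM.

```python
from typing import Optional


def extract_boxed_answer(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in text, or None.

    Braces are matched by depth, so nested groups such as \\frac{1}{2} survive.
    """
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    depth = 1
    chars = []
    for ch in text[start + len(marker):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                # Reached the brace that closes \boxed{
                return "".join(chars).strip()
        chars.append(ch)
    return None  # the closing brace was never found
```

For example, it can be applied to the vLLM output as `extract_boxed_answer(results[0].outputs[0].text)`.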

🚀 Roadmap

Aryabhata 2.0 (Upcoming):

- Extending domain coverage to Physics and Chemistry
- Supporting JEE Advanced, NEET, and the Foundation syllabus
- Further optimization for affordability and accuracy in real-time deployments

🤝 Citation

If you use this model, please cite:

@misc{Aryabhata2025,
  title = {Aryabhata 1.0: A compact, exam-focused language model tailored for mathematics in Indian competitive exams, especially JEE Main.},
  author = {{Physics Wallah AI Research}},
  year = {2025},
  note = {\url{https://huggingface.co/PhysicsWallahAI/Aryabhata-1.0}},
}
