Add assistant mask support to Qwen3-0.6B-Base

Enable Assistant Token Masking for Qwen3-0.6B-Base

This pull request introduces support for assistant token masking in Qwen models by incorporating the {% generation %} tag within the chat template.

HuggingFace Transformers supports returning a mask of the tokens generated by the assistant in the return_assistant_tokens_mask argument of tokenizer.apply_chat_template (see huggingface/transformers#30650). Unfortunately, a lot of LLMs don't support this feature yet even though it's been a year since it was added.

🛠️ Chat Template Proposed Change

--- tokenizer_config.json (original)

+++ tokenizer_config.json (modified)

@@ -17,33 +17,40 @@

{%- set ns = namespace(multi_step_tool=true, last_query_index=messages|length - 1) %}

{%- for message in messages[::-1] %}

{%- set index = (messages|length - 1) - loop.index0 %}

- {%- if ns.multi_step_tool and message.role == "user" and not(message.content.startswith('<tool_response>') and message.content.endswith('</tool_response>')) %}

+ {%- if ns.multi_step_tool and message.role == "user" and message.content is string and not(message.content.startswith('<tool_response>') and message.content.endswith('</tool_response>')) %}

{%- set ns.multi_step_tool = false %}

{%- set ns.last_query_index = index %}

{%- endif %}

{%- endfor %}

{%- for message in messages %}

+ {%- if message.content is string %}

+ {%- set content = message.content %}

+ {%- else %}

+ {%- set content = '' %}

+ {%- endif %}

{%- if (message.role == "user") or (message.role == "system" and not loop.first) %}

- {{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }}

+ {{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}

{%- elif message.role == "assistant" %}

- {%- set content = message.content %}

{%- set reasoning_content = '' %}

- {%- if message.reasoning_content is defined and message.reasoning_content is not none %}

+ {%- if message.reasoning_content is string %}

{%- set reasoning_content = message.reasoning_content %}

{%- else %}

- {%- if '</think>' in message.content %}

- {%- set content = message.content.split('</think>')[-1].lstrip('\n') %}

- {%- set reasoning_content = message.content.split('</think>')[0].rstrip('\n').split('<think>')[-1].lstrip('\n') %}

+ {%- if '</think>' in content %}

+ {%- set reasoning_content = content.split('</think>')[0].rstrip('\n').split('<think>')[-1].lstrip('\n') %}

+ {%- set content = content.split('</think>')[-1].lstrip('\n') %}

{%- endif %}

{%- endif %}

+

+ {{- '<|im_start|>' + message.role }}

+ {% generation %}

{%- if loop.index0 > ns.last_query_index %}

{%- if loop.last or (not loop.last and reasoning_content) %}

- {{- '<|im_start|>' + message.role + '\n<think>\n' + reasoning_content.strip('\n') + '\n</think>\n\n' + content.lstrip('\n') }}

+ {{- '<think>\n' + reasoning_content.strip('\n') + '\n</think>\n\n' + content.lstrip('\n') }}

{%- else %}

- {{- '<|im_start|>' + message.role + '\n' + content }}

+ {{- content }}

{%- endif %}

{%- else %}

- {{- '<|im_start|>' + message.role + '\n' + content }}

+ {{- content }}

{%- endif %}

{%- if message.tool_calls %}

{%- for tool_call in message.tool_calls %}

@@ -64,13 +71,14 @@

{{- '}\n</tool_call>' }}

{%- endfor %}

{%- endif %}

- {{- '<|im_end|>\n' }}

+ {{- '<|im_end|>' }}

+ {% endgeneration %}

{%- elif message.role == "tool" %}

{%- if loop.first or (messages[loop.index0 - 1].role != "tool") %}

{{- '<|im_start|>user' }}

{%- endif %}

{{- '\n<tool_response>\n' }}

- {{- message.content }}

+ {{- content }}

{{- '\n</tool_response>' }}

{%- if loop.last or (messages[loop.index0 + 1].role != "tool") %}

{{- '<|im_end|>\n' }}

Why This is Important

As an example, distinguishing between tokens generated by the assistant and those originating from the user or environment is critical for various advanced applications. A prime example is multi-turn Reinforcement Learning (RL) training.

Currently, in frameworks like VeRL, identifying actor-generated tokens often requires manual reconstruction from the model's output. With this change to chat template, this process should be significantly simplified by leveraging existing solutions and not reinventing the wheel.

It would be great if Qwen models supported this feature, as they are widely used in the RL community.

🚀 Usage Example

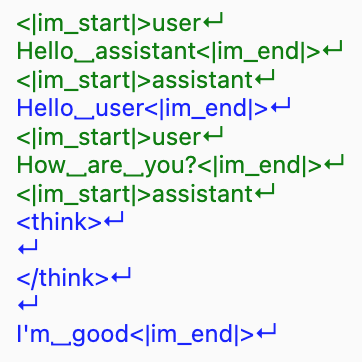

The following demonstrates how to retrieve the assistant token mask:

import transformers

tokenizer = transformers.AutoTokenizer.from_pretrained("Qwen/Qwen3-0.6B-Base")

conversation = [

{"role": "user", "content": "Hello assistant"},

{"role": "assistant", "content": "Hello user"},

{"role": "user", "content": "How are you?"},

{"role": "assistant", "content": "I'm good"},

]

tokenized_output = tokenizer.apply_chat_template(

conversation,

return_assistant_tokens_mask=True,

return_dict=True,

)

print("Tokenized Output with Assistant Mask:")

print(tokenized_output)

# BEFORE

# {'input_ids': [151644, 872, 198, 9707, 17847, 151645, 198, 151644, 77091, 198, 9707, 1196, 151645, 198, 151644, 872, 198, 4340, 525, 498, 30, 151645, 198, 151644, 77091, 198, 151667, 271, 151668, 271, 40, 2776, 1661, 151645, 198],

# 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

# 'assistant_masks': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

# }

# AFTER

# {'input_ids': [151644, 872, 198, 9707, 17847, 151645, 198, 151644, 77091, 198, 9707, 1196, 151645, 198, 151644, 872, 198, 4340, 525, 498, 30, 151645, 198, 151644, 77091, 198, 151667, 271, 151668, 271, 40, 2776, 1661, 151645, 198],

# 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

# 'assistant_masks': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1]

# }

Visualizing the mask helps understand which parts of the input correspond to the assistant's generation:

Testing

- Verified template works with both tool and non-tool scenarios

- Verified works with reasoning content