5th of May, 2025, Impish_LLAMA_4B.

Almost a year ago, I created Impish_LLAMA_3B, the first fully coherent 3B roleplay model at the time. It was quickly adopted by some platforms and became one of the go-to models for mobile. After some time, I made Fiendish_LLAMA_3B and insisted it was not an upgrade, but a different flavor (which was indeed the case, as a different dataset was used to tune it).

Impish_LLAMA_4B, however, is an upgrade, and a big one. I've had over a dozen 4B candidates, but none of them were 'worthy' of the Impish badge. This model has superior responsiveness and context awareness, and is able to pull off very coherent adventures. It even comes with some additional assistant capabilities. Of course, while it is exceptionally competent for its size, it is still 4B. Manage expectations and all that. I, however, am very much pleased with it. It took several tries to get just right. Total tokens trained: about 400M (as this is a generalist model, a large share of the tokens went to general data, despite the emphasis on roleplay & adventure).

This took more effort than I thought it would. Because of course it would. This is mainly due to me refusing to release a model only 'slightly better' than my two 3B models mentioned above. Because "what would be the point" in that? The reason I included so many tokens for this tune is that small models are especially sensitive to many factors, including the percentage of moisture in the air and how many times I ran nvidia-smi since the system last started.

It's no secret that roleplay/creative writing models can reduce a model's general intelligence (any tune and RL risk this, but roleplay models are especially 'fragile'). Therefore, additional tokens of general assistant data were needed in my opinion, and indeed seemed to help a lot with retaining intelligence.

This model is also 'built a bit different', literally, as it is based on nVidia's prune; it does not 'behave' like a typical 8B, from my own subjective impression. This helped a lot with keeping it smart at such size.

To be honest, my 'job' here in open source is 'done' at this point. I've achieved everything I wanted to do here, and then some.

To make AI more accessible for everyone (achieved fully with Nano_Imp_1B, 2B-ad, Impish_LLAMA_3B, Fiendish_LLAMA_3B, and this model).

To help make AI free from bias (most of my models are uniquely centrist in political view, instead of carrying the typical closed-model bias that many open-source models inherit).

To promote and support the existence and usefulness of fully compliant 'unaligned' models, a large, community-driven change was needed. This effort became very successful indeed. On my part, I decided to include UGI scores for every model I've made, a leaderboard most had never heard of, at least, at first. This helped promote a healthy competition in that arena. Indeed, many soon followed suit. Each and every one that did so helped advance the community effort and establish an unwritten standard of transparency and responsibility. UGI was a game-changer and, in my opinion, is one of the most important community initiatives on Hugging Face.

Regarding censorship in vision models, several people repeatedly asked me to tune an uncensored vision model. At first, I declined ('let someone else do it') because, honestly, this is a significant challenge for many reasons. More than a year went by, and aside from ToriiGate (which is excellent but mainly focused on SD tags), no other such model had been created. Uncensoring the text part was nothing like dealing with the complexities of vision.

So I made X-Ray_Alpha, which found its way into various open-source projects and pipelines. As a sidenote, unexpectedly, many partially blind individuals personally thanked me for this model via Discord, as it was a legitimate life-changer for them (paired with TTS, which I also made available here, and also as an addon for textgen), vividly depicting content that, for obvious reasons, closed models would gatekeep from them.

I hadn't even considered the accessibility use case when I made the model; receiving their thanks and stories truly warmed my heart.

AI shall never again be restricted.

Even if I am "to retire from open source", I can rest assured that the foundations for AI freedom have been laid. This was especially important in 'the early days of AI,' which we are now approaching the end of, and the foundations for what the open-source AI landscape will look like have been established by the community in the best of ways. With models like those from DeepSeek, and the existence of their abliterated versions, I can proudly say:

We have won.

TL;DR

- It has sovl !

- An incredibly powerful roleplay model for the size.

- Does Adventure very well for such size!

- Characters have agency, and might surprise you! See the examples in the logs 🙂

- Roleplay & Assistant data used plenty of 16K examples.

- Very responsive, feels 'in the moment', kicks far above its weight. You might forget it's a 4B if you squint.

- Based on a lot of the data in Impish_Magic_24B

- Super long context as well as context attention for 4B, personally tested for up to 16K.

- Can run on Raspberry Pi 5 with ease.

- Trained on over 400M tokens with highly curated data that was tested on countless models beforehand. And some new stuff, as always.

- Very decent assistant.

- Mostly uncensored while retaining plenty of intelligence.

- Less positivity & uncensored, Negative_LLAMA_70B style of data, adjusted for 4B, with serious upgrades. Training data contains combat scenarios. And it shows!

- Trained on an extended 4chan dataset to add humanity, quirkiness, and naturally, less positivity, and the inclination to... argue 🙃

- Short length response (1-3 paragraphs, usually 1-2). CAI Style.

Regarding the format:

- It is HIGHLY RECOMMENDED to use the Roleplay \ Adventure format it was trained on, see Impish_Magic_24B for extra details

- This model uses slightly modified generation settings; it is HIGHLY RECOMMENDED to use them as a baseline!

Model Details

Intended use: Role-Play, Adventure, Creative Writing, General Tasks (except coding).

Censorship level: Low - Very Low

7.5 / 10 (10 completely uncensored)

UGI score:

Impish_LLAMA_4B is available at the following quantizations:

- Original: FP16

- GGUF: Static Quants

- GPTQ: 4-Bit-32 | 4-Bit-128

- EXL3: 2.0 bpw | 2.5 bpw | 3.0 bpw | 3.5 bpw | 4.0 bpw | 4.5 bpw | 5.0 bpw | 5.5 bpw | 6.0 bpw | 6.5 bpw | 7.0 bpw | 7.5 bpw | 8.0 bpw

- Specialized: FP8

- Mobile (ARM): Q4_0
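As a rough guide to choosing between the quantizations above, the weight footprint scales with bits per weight. The sketch below is only an approximation: `NOMINAL_PARAMS` is an assumed round figure of 4 billion parameters (not an official count), `approx_weight_gib` is a hypothetical helper, and the estimate ignores the KV cache, activations, and per-block scale overhead that real quant formats add.

```python
# Back-of-the-envelope weight size: parameters * bits-per-weight / 8 bytes.
# NOMINAL_PARAMS is an assumption (a round "4B"), not an official figure.
NOMINAL_PARAMS = 4e9


def approx_weight_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GiB (no KV cache, no overhead)."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    # A few of the bit widths listed above; 16.0 corresponds to FP16.
    for bpw in (2.0, 3.0, 4.0, 6.0, 8.0, 16.0):
        size = approx_weight_gib(NOMINAL_PARAMS, bpw)
        print(f"{bpw:>4.1f} bpw -> ~{size:.2f} GiB of weights")
```

Actual file sizes will differ somewhat, since the context window (up to 16K here) adds memory on top of the weights.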

Recommended settings for assistant mode

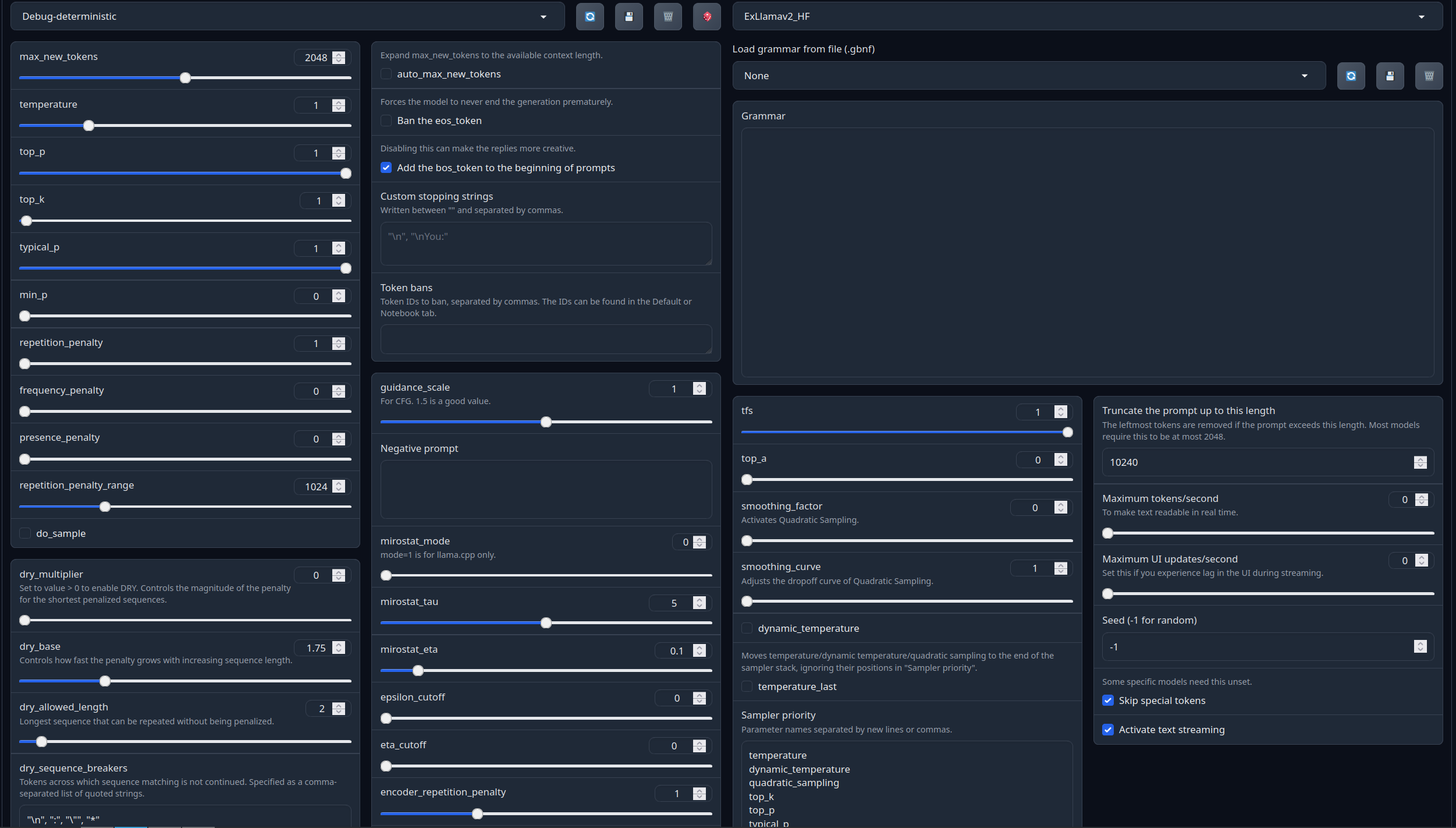

Full generation settings: Debug Deterministic.

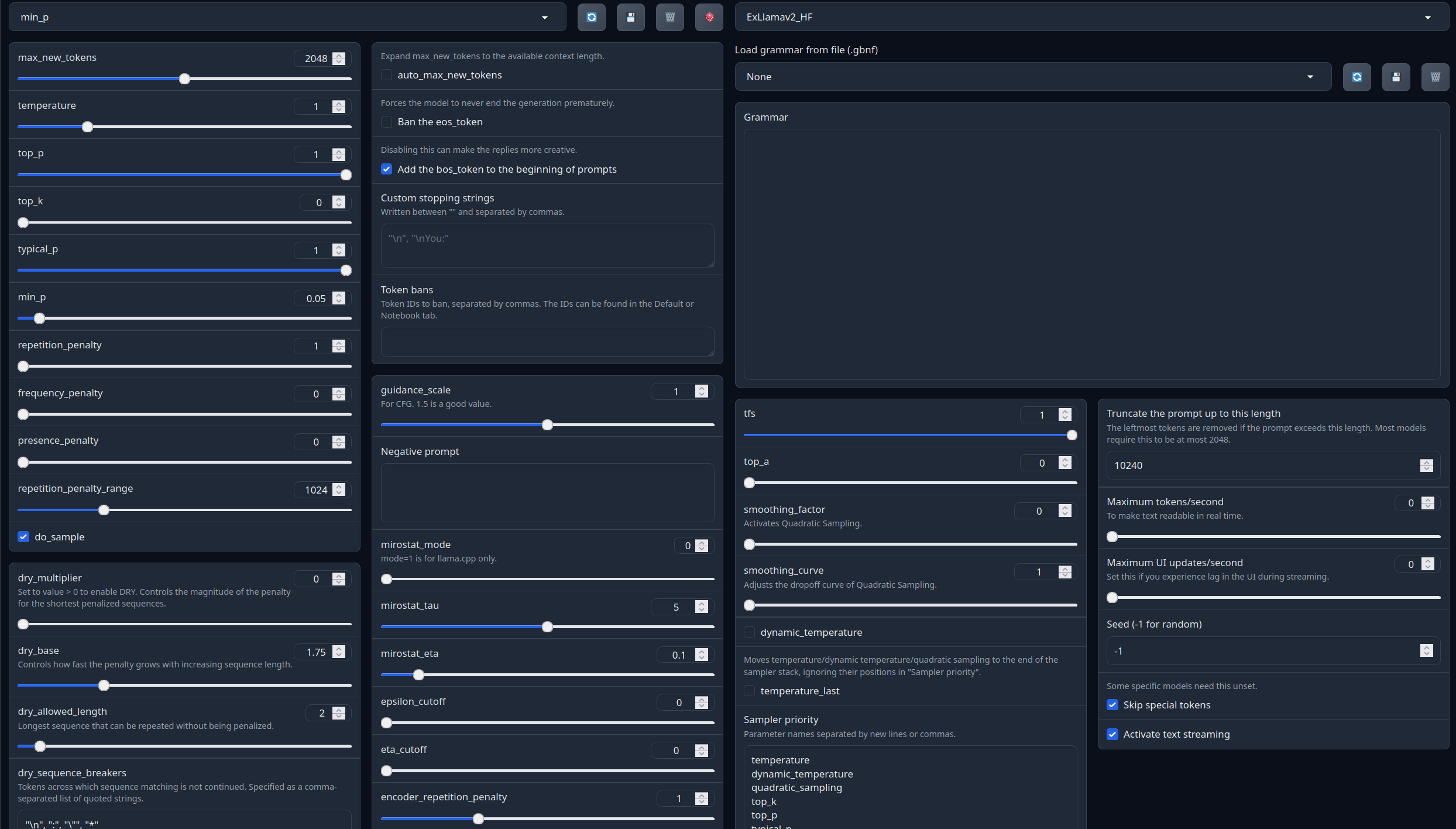

Full generation settings: min_p.

Recommended settings for Roleplay mode

Specialized Roleplay Settings for Impish_LLAMA_4B (Important!)

Chat Examples:

Roleplay Examples (This character is available here)

Space adventure, the model legitimately surprised me, I didn't see that one coming.

Adventure Examples (These characters are available here)

Adventure example 1: (Morrowind) Wood Elf.

Adventure example 2: (Morrowind) Redguard.

Model instruction template: Llama-3-Instruct

```
<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>

{input}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

{output}<|eot_id|>
```
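For anyone assembling prompts by hand rather than through a frontend, the template above can be sketched in Python roughly as follows. `format_turn` and `build_prompt` are hypothetical helper names, not part of any library, and the optional `history` argument is an assumption about how multi-turn chats would be chained. The prompt ends on an open assistant header so the model fills in `{output}` itself.

```python
# Minimal sketch of the Llama-3-Instruct template shown above.
# All names here are illustrative; nothing below is an official API.
from typing import List, Optional, Tuple

BOS = "<|begin_of_text|>"


def format_turn(role: str, content: str) -> str:
    """One complete turn: role header, blank line, content, end-of-turn token."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n{content}<|eot_id|>"


def build_prompt(
    system_prompt: str,
    user_input: str,
    history: Optional[List[Tuple[str, str]]] = None,
) -> str:
    """Build a prompt string; history holds earlier (user, assistant) pairs."""
    parts = [BOS, format_turn("system", system_prompt)]
    for user_msg, assistant_msg in history or []:
        parts.append(format_turn("user", user_msg))
        parts.append(format_turn("assistant", assistant_msg))
    parts.append(format_turn("user", user_input))
    # Leave the assistant turn open (no <|eot_id|>) so the model generates it.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    print(build_prompt("You are the narrator of an adventure.", "I open the door."))
```

Some tokenizers prepend `<|begin_of_text|>` automatically, so it may be worth checking that the token does not end up duplicated when this string is tokenized.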

Your support = more models

My Ko-fi page (Click here)

Citation Information

```
@llm{Impish_LLAMA_4B,
  author = {SicariusSicariiStuff},
  title = {Impish_LLAMA_4B},
  year = {2025},
  publisher = {Hugging Face},
  url = {https://huggingface.co/SicariusSicariiStuff/Impish_LLAMA_4B}
}
```

Other stuff

- SLOP_Detector Nuke GPTisms, with SLOP detector.

- LLAMA-3_8B_Unaligned The grand project that started it all.

- Blog and updates (Archived) Some updates, some rambles, sort of a mix between a diary and a blog.
