TokLIP: Marry Visual Tokens to CLIP for Multimodal Comprehension and Generation

Welcome to the official code repository for "TokLIP: Marry Visual Tokens to CLIP for Multimodal Comprehension and Generation".

Your star means a lot to us in developing this project! ⭐⭐⭐

📰 News

- [2025/08/18] 🚀 Check our latest results on arXiv (PDF)!

- [2025/08/18] 🔥 We release TokLIP XL with 512 resolution 🤗 TokLIP_XL_512!

- [2025/08/05] 🔥 We release the training code!

- [2025/06/05] 🔥 We release the code and models!

- [2025/05/09] 🚀 Our paper is available on arXiv!

👀 Introduction

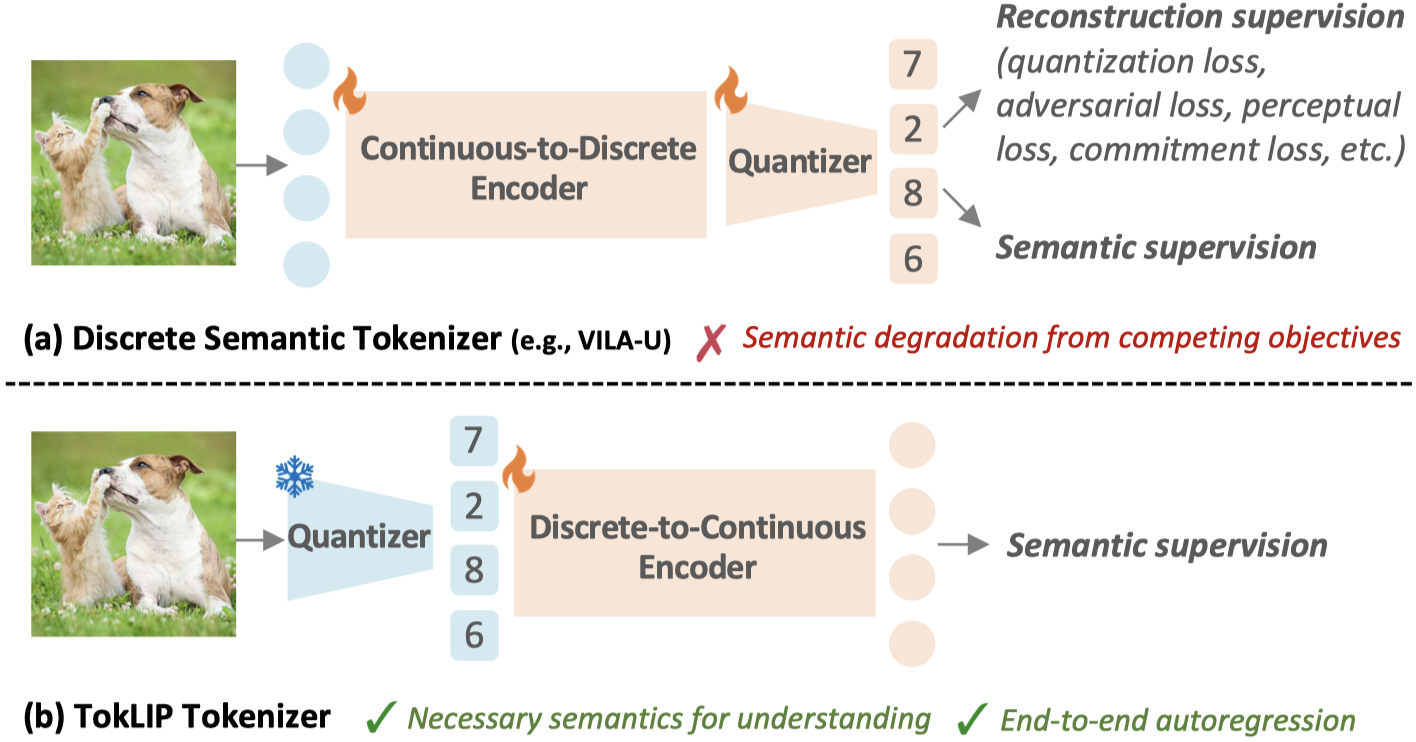

We introduce TokLIP, a visual tokenizer that enhances comprehension by semanticizing vector-quantized (VQ) tokens and incorporating CLIP-level semantics while enabling end-to-end multimodal autoregressive training with standard VQ tokens.

TokLIP integrates a low-level discrete VQ tokenizer with a ViT-based token encoder to capture high-level continuous semantics.

Unlike previous approaches (e.g., VILA-U) that discretize high-level features, TokLIP disentangles training objectives for comprehension and generation, allowing the direct application of advanced VQ tokenizers without the need for tailored quantization operations.

🔧 Installation

conda create -n toklip python=3.10 -y

conda activate toklip

git clone https://github.com/TencentARC/TokLIP

pip install --upgrade pip

pip install -r requirements.txt

⚙️ Usage

Model Weight

| Model | Resolution | VQGAN | IN Top1 | COCO TR@1 | COCO IR@1 | Weight |

|---|---|---|---|---|---|---|

| TokLIP-S | 256 | LlamaGen | 76.4 | 64.06 | 48.46 | 🤗 TokLIP_S_256 |

| TokLIP-L | 384 | LlamaGen | 80.0 | 68.00 | 52.87 | 🤗 TokLIP_L_384 |

| TokLIP-XL | 512 | IBQ | 80.8 | 69.40 | 53.77 | 🤗 TokLIP_XL_512 |

Training

Please refer to img2dataset to prepare the WebDataset required for training. You may choose datasets such as CC3M, CC12M, or LAION.

Prepare the teacher models using

src/covert.py:cd src TIMM_MODEL='original' python covert.py --model_name 'ViT-SO400M-16-SigLIP2-256' --save_path './model/siglip2-so400m-vit-l16-256.pt' TIMM_MODEL='original' python covert.py --model_name 'ViT-SO400M-16-SigLIP2-384' --save_path './model/siglip2-so400m-vit-l16-384.pt'Train TokLIP using the scripts

src\train_toklip_256.shandsrc\train_toklip_384.sh. You need to set--train-dataand--train-num-samplesarguments accordingly.

Evaluation

Please first download the TokLIP model weights.

We provide the evaluation scripts for ImageNet classification and MSCOCO Retrieval in src\test_toklip_256.sh, src\test_toklip_384.sh, and src\test_toklip_512.sh.

Please revise the --pretrained, --imagenet-val, and --coco-dir with your specific paths.

Inference

We provide the inference example in src/inference.py.

cd src

python inference.py --model-config 'ViT-SO400M-16-SigLIP2-384-toklip' --pretrained 'YOUR_TOKLIP_PATH'

Model Usage

We provide build_toklip_encoder function in src/create_toklip.py, you could directly load TokLIP with model, image_size, and model_path parameters.

🔜 TODOs

- Release training codes.

- Release TokLIP-XL with 512 resolution.

📂 Contact

If you have further questions, please open an issue or contact [email protected].

Discussions and potential collaborations are also welcome.

🙏 Acknowledgement

This repo is built upon the following projects:

We thank the authors for their codes.

📝 Citation

Please cite our work if you use our code or discuss our findings in your own research:

@article{lin2025toklip,

title={Toklip: Marry visual tokens to clip for multimodal comprehension and generation},

author={Lin, Haokun and Wang, Teng and Ge, Yixiao and Ge, Yuying and Lu, Zhichao and Wei, Ying and Zhang, Qingfu and Sun, Zhenan and Shan, Ying},

journal={arXiv preprint arXiv:2505.05422},

year={2025}

}

- Downloads last month

- 32

Model tree for TencentARC/TokLIP

Base model

google/siglip2-so400m-patch16-256