👥 Authors

Song Fei1*, Tian Ye1*‡, Lei Zhu1,2†

1The Hong Kong University of Science and Technology (Guangzhou)

2The Hong Kong University of Science and Technology

*Equal Contribution, ‡Project Leader, †Corresponding Author

🌟 What is LucidFlux?

LucidFlux is a framework for high-fidelity image restoration across a wide range of degradations, without requiring textual captions. It combines a Flux-based DiT backbone with a Light-weight Condition Module and SigLIP semantic alignment, which enables caption-free guidance while preserving structural and semantic consistency. On RealSR and RealLQ250 it achieves the best score on most of the no-reference quality metrics reported in the quantitative results.

📊 Performance Benchmarks

📈 Quantitative Results

| Benchmark | Metric | ResShift | StableSR | SinSR | SeeSR | DreamClear | SUPIR | LucidFlux (Ours) |
|---|---|---|---|---|---|---|---|---|
| RealSR | CLIP-IQA+ ↑ | 0.5005 | 0.4408 | 0.5416 | 0.6731 | 0.5331 | 0.5640 | 0.7074 |
| | Q-Align ↑ | 3.1045 | 2.5087 | 3.3615 | 3.6073 | 3.0044 | 3.4682 | 3.7555 |
| | MUSIQ ↑ | 49.50 | 39.98 | 57.95 | 67.57 | 49.48 | 55.68 | 70.20 |
| | MANIQA ↑ | 0.2976 | 0.2356 | 0.3753 | 0.5087 | 0.3092 | 0.3426 | 0.5437 |
| | NIMA ↑ | 4.7026 | 4.3639 | 4.8282 | 4.8957 | 4.4948 | 4.6401 | 5.1072 |
| | CLIP-IQA ↑ | 0.5283 | 0.3521 | 0.6601 | 0.6993 | 0.5390 | 0.4857 | 0.6783 |
| | NIQE ↓ | 9.0674 | 6.8733 | 6.4682 | 5.4594 | 5.2873 | 5.2819 | 4.2893 |
| RealLQ250 | CLIP-IQA+ ↑ | 0.5529 | 0.5804 | 0.6054 | 0.7034 | 0.6810 | 0.6532 | 0.7406 |
| | Q-Align ↑ | 3.6318 | 3.5586 | 3.7451 | 4.1423 | 4.0640 | 4.1347 | 4.3935 |
| | MUSIQ ↑ | 59.50 | 57.25 | 65.45 | 70.38 | 67.08 | 65.81 | 73.01 |
| | MANIQA ↑ | 0.3397 | 0.2937 | 0.4230 | 0.4895 | 0.4400 | 0.3826 | 0.5589 |
| | NIMA ↑ | 5.0624 | 5.0538 | 5.2397 | 5.3146 | 5.2200 | 5.0806 | 5.4836 |
| | CLIP-IQA ↑ | 0.6129 | 0.5160 | 0.7166 | 0.7063 | 0.6950 | 0.5767 | 0.7122 |
| | NIQE ↓ | 6.6326 | 4.6236 | 5.4425 | 4.4383 | 3.8700 | 3.6591 | 3.6742 |

🎭 Gallery & Examples

🎨 LucidFlux Gallery

🔍 Comparison with Open-Source Methods

[Image grid: LQ input compared with SinSR, SeeSR, SUPIR, DreamClear, and Ours]

💼 Comparison with Commercial Models

[Image grid: LQ input compared with HYPIR, Topaz, SeeDream 4.0, Gemini-NanoBanana, GPT-4o, and Ours]

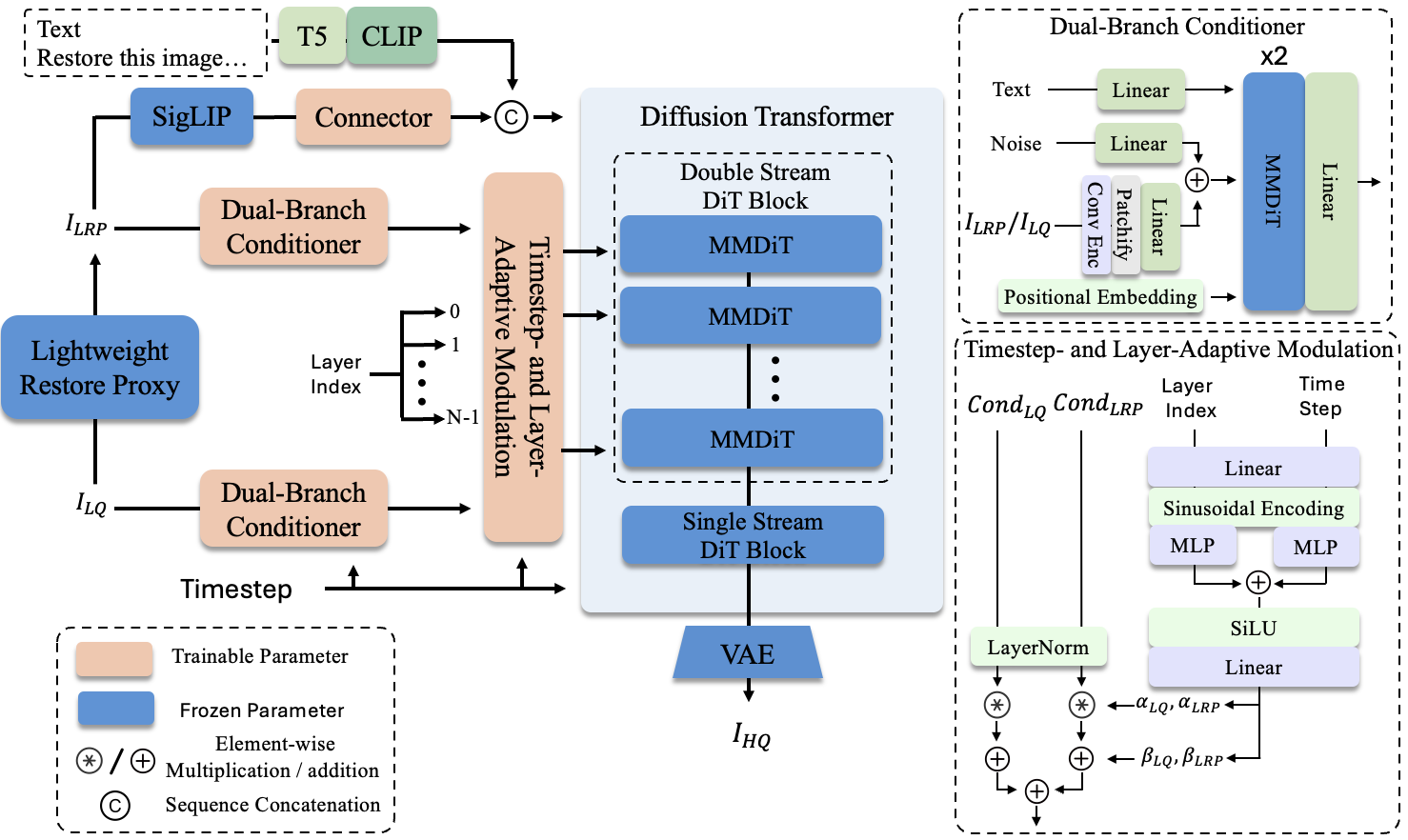

🏗️ Model Architecture

Caption-Free Universal Image Restoration with a Large-Scale Diffusion Transformer

Our unified framework consists of four critical components in the training workflow:

🔤 Scaling Up Real-world High-Quality Data for Universal Image Restoration

🎨 Two Parallel Light-weight Condition Module Branches for Low-Quality Image Conditioning

🎯 Timestep and Layer-Adaptive Condition Injection

🔄 Semantic Priors from SigLIP for Caption-Free Semantic Alignment

🚀 Quick Start

🔧 Installation

    # Clone the repository
    git clone https://github.com/W2GenAI-Lab/LucidFlux.git
    cd LucidFlux

    # Create conda environment
    conda create -n lucidflux python=3.9
    conda activate lucidflux

    # Install dependencies
    pip install -r requirements.txt
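If installation or inference misbehaves, one thing worth ruling out is that the `lucidflux` environment is not the active one. The sketch below is not part of the LucidFlux repository: `is_expected_python` is a hypothetical helper name, and it only inspects a version string passed to it, checking for the 3.9 release requested in the `conda create` command.

```shell
# Hypothetical helper (not shipped with LucidFlux): succeeds only when the
# given `python --version` output reports a 3.9.x interpreter, the version
# requested by `conda create -n lucidflux python=3.9`.
is_expected_python() {
  case "$1" in
    "Python 3.9."*) return 0 ;;  # e.g. "Python 3.9.18"
    *) return 1 ;;               # any other version, or empty input
  esac
}
```

For example, `is_expected_python "$(python --version 2>&1)" || echo "activate the lucidflux environment first"` prints a reminder when a different interpreter is first on the `PATH`.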

Inference

Prepare models in 2 steps, then run a single command.

- Log in to Hugging Face (required for the gated FLUX.1-dev model). Skip this step if you are already logged in.

      python -m tools.hf_login --token "$HF_TOKEN"

- Download the required weights to fixed paths and export the environment variables.

      # FLUX.1-dev (flow + ae), SwinIR prior, T5, CLIP, SigLIP, and the LucidFlux checkpoint go to ./weights
      python -m tools.download_weights --dest weights

      # Exports FLUX_DEV_FLOW/FLUX_DEV_AE to your shell
      source weights/env.sh
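Before launching inference, it can help to confirm that `source weights/env.sh` actually took effect in the current shell. The following is a minimal sketch, not part of the repository: `check_weights_env` is a hypothetical helper, and it assumes that `FLUX_DEV_FLOW` and `FLUX_DEV_AE` (the two variables named above) hold filesystem paths to the downloaded weights.

```shell
# Hypothetical pre-flight check (not shipped with LucidFlux).
# Assumption: FLUX_DEV_FLOW and FLUX_DEV_AE contain filesystem paths.
# Returns 0 if both are set and exist, 1 otherwise.
check_weights_env() {
  cwe_status=0
  for cwe_name in FLUX_DEV_FLOW FLUX_DEV_AE; do
    # Read the value of the variable whose name is stored in cwe_name.
    eval "cwe_path=\${$cwe_name:-}"
    if [ -z "$cwe_path" ]; then
      echo "missing: $cwe_name is not set (was weights/env.sh sourced?)" >&2
      cwe_status=1
    elif [ ! -e "$cwe_path" ]; then
      echo "missing: $cwe_name points to '$cwe_path', which does not exist" >&2
      cwe_status=1
    else
      echo "ok: $cwe_name=$cwe_path"
    fi
  done
  return "$cwe_status"
}
```

With this defined, `check_weights_env && bash inference.sh` would start inference only if both paths resolve.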

Run inference (uses fixed relative paths):

    bash inference.sh

Precomputed LucidFlux results on RealSR and RealLQ250 are also available on Hugging Face: LucidFlux.

🪪 License

The provided code and pre-trained weights are licensed under the FLUX.1 [dev] license.

🙏 Acknowledgments

This code is based on FLUX. Some code is adapted from DreamClear and x-flux. We thank the authors for their awesome work.

🏛️ Thanks to our affiliated institutions for their support.

🤝 Special thanks to the open-source community for inspiration.

📬 Contact

For any questions or inquiries, please reach out to us:

- Song Fei: [email protected]
- Tian Ye: [email protected]
