bert-base-cased-ChemTok-ZN250K-V1

This model is a fine-tuned version of bert-base-cased on 250K SMILES strings from the ZN15 dataset. It achieves the following results on the evaluation set:

- Loss: 0.1640

Model description

This domain adaptation of bert-base-cased was trained on 250K molecular SMILES strings, with the following tokens added to the tokenizer vocabulary:

new_tokens = ["[C@H]","[C@@H]","(F)","(Cl)","c1","c2","(O)","N#C","(=O)",
              "([N+]([O-])=O)","[O-]","(OC)","(C)","[NH3+]","(I)","[Na+]","C#N"]

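To illustrate what the added tokens change, here is a minimal pure-Python sketch of how a SMILES string could be pre-split so that each added token stays whole. It is a simplification, not the tokenizer shipped with this model: the function name `split_on_added_tokens` and the longest-match-first rule are assumptions made for illustration, and the unmatched segments would still go through the regular WordPiece step.

```python
# Added tokens as listed in this model card.
NEW_TOKENS = ["[C@H]", "[C@@H]", "(F)", "(Cl)", "c1", "c2", "(O)", "N#C", "(=O)",
              "([N+]([O-])=O)", "[O-]", "(OC)", "(C)", "[NH3+]", "(I)", "[Na+]", "C#N"]


def split_on_added_tokens(smiles, added_tokens=NEW_TOKENS):
    """Split `smiles` into (text, is_added_token) pieces.

    Hypothetical helper: scans left to right and, at each position,
    prefers the longest added token that matches there.
    """
    ordered = sorted(added_tokens, key=len, reverse=True)  # longest first
    pieces = []
    buffer = ""  # characters not covered by any added token
    i = 0
    while i < len(smiles):
        match = next((t for t in ordered if smiles.startswith(t, i)), None)
        if match is None:
            buffer += smiles[i]
            i += 1
            continue
        if buffer:
            pieces.append((buffer, False))
            buffer = ""
        pieces.append((match, True))
        i += len(match)
    if buffer:
        pieces.append((buffer, False))
    return pieces


print(split_on_added_tokens("C[C@H](O)C#N"))
```

In Transformers, tokens like these are typically registered with `tokenizer.add_tokens(new_tokens)` followed by `model.resize_token_embeddings(len(tokenizer))`; the card does not state the exact steps used here.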
Intended uses & limitations

It is intended as a base for fine-tuning classification models on drug-related tasks, and for masked-token prediction (generative unmasking) on SMILES strings.
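A minimal sketch of the unmasking use case, assuming the model is loaded through the Transformers `fill-mask` pipeline. The helper names `build_masked_input` and `predict_masked` are hypothetical, and the `model_id` argument must be the full Hub path of this model, which the card does not spell out.

```python
def build_masked_input(smiles, target, mask_token="[MASK]"):
    """Replace the first occurrence of `target` in `smiles` with the mask token.

    "[MASK]" is the mask token of bert-base-cased; adjust it if the
    tokenizer you load reports a different `mask_token`.
    """
    if target not in smiles:
        raise ValueError(f"{target!r} does not occur in {smiles!r}")
    return smiles.replace(target, mask_token, 1)


def predict_masked(smiles_with_mask, model_id, top_k=5):
    """Return the pipeline's top-k candidates for the masked position.

    `model_id` is an assumption supplied by the caller (the Hub path
    or a local directory containing this model).
    """
    # Imported lazily so build_masked_input works without transformers installed.
    from transformers import pipeline

    unmasker = pipeline("fill-mask", model=model_id)
    return unmasker(smiles_with_mask, top_k=top_k)


masked = build_masked_input("CC(=O)Oc1ccccc1", "(=O)")
print(masked)
```

Calling `predict_masked(masked, model_id=...)` downloads the model and returns a list of dictionaries with `token_str`, `score`, and `sequence` fields for each candidate.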

Training and evaluation data

More information needed

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 42

- optimizer: AdamW (OptimizerNames.ADAMW_TORCH) with betas=(0.9,0.999) and epsilon=1e-08; no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 30
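As a rough illustration of what the linear scheduler implies for the learning rate over the run, the sketch below decays from 2e-05 to zero across the 49710 optimizer steps reported in the results table (1657 steps per epoch for 30 epochs). It assumes zero warmup steps, since no warmup setting is listed above; the function name `linear_lr` is hypothetical.

```python
BASE_LR = 2e-5
STEPS_PER_EPOCH = 1657   # step count at epoch 1.0 in the results table
NUM_EPOCHS = 30
TOTAL_STEPS = STEPS_PER_EPOCH * NUM_EPOCHS


def linear_lr(step, base_lr=BASE_LR, total_steps=TOTAL_STEPS, warmup_steps=0):
    """Learning rate at `step` under linear warmup followed by linear decay.

    warmup_steps=0 is an assumption; the card lists no warmup setting.
    """
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for epoch in (0, 10, 20, 30):
    print(epoch, linear_lr(epoch * STEPS_PER_EPOCH))
```

Under these assumptions the learning rate halves by epoch 15 and reaches zero at the final step, which is consistent with the shrinking loss improvements late in training.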

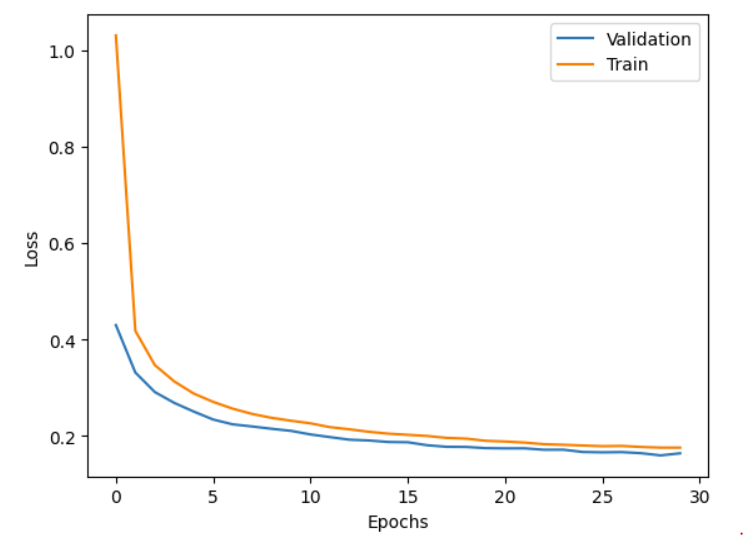

Training results

| Training Loss | Epoch | Step | Validation Loss |
|---|---|---|---|
| 1.0308 | 1.0 | 1657 | 0.4300 |
| 0.4181 | 2.0 | 3314 | 0.3316 |
| 0.3471 | 3.0 | 4971 | 0.2911 |
| 0.3131 | 4.0 | 6628 | 0.2686 |
| 0.288 | 5.0 | 8285 | 0.2506 |
| 0.2707 | 6.0 | 9942 | 0.2341 |
| 0.2567 | 7.0 | 11599 | 0.2241 |
| 0.2457 | 8.0 | 13256 | 0.2197 |
| 0.2376 | 9.0 | 14913 | 0.2149 |
| 0.2316 | 10.0 | 16570 | 0.2106 |
| 0.2262 | 11.0 | 18227 | 0.2032 |
| 0.2183 | 12.0 | 19884 | 0.1976 |
| 0.2138 | 13.0 | 21541 | 0.1923 |
| 0.2087 | 14.0 | 23198 | 0.1907 |
| 0.2048 | 15.0 | 24855 | 0.1875 |
| 0.2024 | 16.0 | 26512 | 0.1869 |
| 0.2 | 17.0 | 28169 | 0.1808 |
| 0.196 | 18.0 | 29826 | 0.1775 |
| 0.1945 | 19.0 | 31483 | 0.1773 |
| 0.19 | 20.0 | 33140 | 0.1748 |
| 0.1885 | 21.0 | 34797 | 0.1743 |
| 0.1863 | 22.0 | 36454 | 0.1744 |
| 0.1829 | 23.0 | 38111 | 0.1714 |
| 0.1817 | 24.0 | 39768 | 0.1715 |
| 0.1802 | 25.0 | 41425 | 0.1668 |
| 0.1788 | 26.0 | 43082 | 0.1661 |
| 0.1793 | 27.0 | 44739 | 0.1665 |
| 0.1772 | 28.0 | 46396 | 0.1642 |
| 0.1758 | 29.0 | 48053 | 0.1597 |
| 0.1758 | 30.0 | 49710 | 0.1640 |
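The validation losses above can be read as perplexities, which some readers find easier to compare. The sketch below assumes the reported loss is a mean cross-entropy in nats over masked tokens (the usual convention for masked-language-model training in Transformers, though the card does not say so explicitly); the values are copied from the table.

```python
import math

# Validation loss per epoch (epochs 1 to 30), copied from the results table.
VALIDATION_LOSS = [
    0.4300, 0.3316, 0.2911, 0.2686, 0.2506, 0.2341, 0.2241, 0.2197, 0.2149, 0.2106,
    0.2032, 0.1976, 0.1923, 0.1907, 0.1875, 0.1869, 0.1808, 0.1775, 0.1773, 0.1748,
    0.1743, 0.1744, 0.1714, 0.1715, 0.1668, 0.1661, 0.1665, 0.1642, 0.1597, 0.1640,
]


def perplexity(loss):
    """Perplexity for a mean cross-entropy loss given in nats."""
    return math.exp(loss)


# Epochs are 1-indexed in the table, list positions are 0-indexed.
best_epoch = min(range(len(VALIDATION_LOSS)), key=VALIDATION_LOSS.__getitem__) + 1
final_loss = VALIDATION_LOSS[-1]

print(f"final epoch: loss {final_loss:.4f}, perplexity {perplexity(final_loss):.3f}")
print(f"best epoch:  {best_epoch}, loss {VALIDATION_LOSS[best_epoch - 1]:.4f}")
```

Note that the headline loss of 0.1640 is the final-epoch value; the lowest validation loss in the table, 0.1597, occurs one epoch earlier.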

Framework versions

- Transformers 4.51.3

- Pytorch 2.6.0+cu124

- Datasets 3.5.0

- Tokenizers 0.21.1
