GGUF quantized and fp8 scaled versions of LTX-Video

ggc x2

GGUF file(s) available. Select which one to use:

- ltxv-2b-0.9.6-dev-q4_0.gguf

- ltxv-2b-0.9.6-dev-q8_0.gguf

- ltxv-2b-0.9.6-distilled-q4_0.gguf

- ltxv-2b-0.9.6-distilled-q8_0.gguf

Enter your choice (1 to 4): _

run it with gguf-node via comfyui

- drag ltx-video-2b-v0.9.1-r2-q4_0.gguf (1.09GB) to > ./ComfyUI/models/diffusion_models

- drag t5xxl_fp16-q4_0.gguf (2.9GB) to > ./ComfyUI/models/text_encoders

- drag ltxv_vae_fp32-f16.gguf (838MB) to > ./ComfyUI/models/vae
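
The three placements above can also be scripted. A minimal sketch, assuming the files were already downloaded into a local folder; `place_models` and its arguments are hypothetical names for illustration, not part of gguf-node or ComfyUI, and the target subfolders are the ones listed above:

```python
import shutil
from pathlib import Path

# File name -> ComfyUI models subfolder, as listed above.
PLACEMENT = {
    "ltx-video-2b-v0.9.1-r2-q4_0.gguf": "diffusion_models",
    "t5xxl_fp16-q4_0.gguf": "text_encoders",
    "ltxv_vae_fp32-f16.gguf": "vae",
}


def place_models(download_dir, comfy_root="./ComfyUI"):
    """Copy each known file from download_dir into its ComfyUI models subfolder.

    Returns the destination paths that were written; files that are not
    present in download_dir are skipped.
    """
    written = []
    for name, subfolder in PLACEMENT.items():
        src = Path(download_dir) / name
        if not src.is_file():
            continue  # not downloaded yet
        dest_dir = Path(comfy_root) / "models" / subfolder
        dest_dir.mkdir(parents=True, exist_ok=True)
        dest = dest_dir / name
        shutil.copy2(src, dest)
        written.append(dest)
    return written
```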

run it straight (no installation needed way)

- run the .bat file in the main directory (assuming you are using the gguf-node pack below)

- drag the workflow json file (below) to > your browser

workflow

- example workflow for gguf (see demo above)

- example workflow for the original safetensors

review

- q2_k gguf is super fast but not usable; keep it for testing only

- surprisingly, 0.9_fp8_e4m3fn and 0.9-vae_fp8_e4m3fn are working pretty well

- mix-and-match is possible; you could mix the vae(s) available with different model file(s) here; test which combination works best

- you could opt to use the t5xxl scaled safetensors or t5xxl gguf (more quantized versions of t5xxl can be found here) as text encoder

- new set of enhanced vae (from fp8 to fp32) added in this pack; the low ram version gguf vae is also available right away; upgrade your node for the new feature: gguf vae loader

- gguf-node is available (see details here) for running the new features (the point below might not be directly related to the model)

- you are able to make your own fp8_e4m3fn scaled safetensors and/or convert it to gguf with the new node via comfyui

run it with diffusers🧨 (alternative 1)

    import torch
    from transformers import T5EncoderModel
    from diffusers import LTXPipeline, GGUFQuantizationConfig, LTXVideoTransformer3DModel
    from diffusers.utils import export_to_video

    model_path = (
        "https://huggingface.co/calcuis/ltxv-gguf/blob/main/ltx-video-2b-v0.9-q8_0.gguf"
    )

    transformer = LTXVideoTransformer3DModel.from_single_file(
        model_path,
        quantization_config=GGUFQuantizationConfig(compute_dtype=torch.bfloat16),
        torch_dtype=torch.bfloat16,
    )

    text_encoder = T5EncoderModel.from_pretrained(
        "calcuis/ltxv-gguf",
        gguf_file="t5xxl_fp16-q4_0.gguf",
        torch_dtype=torch.bfloat16,
    )

    pipe = LTXPipeline.from_pretrained(
        "callgg/ltxv-decoder",
        text_encoder=text_encoder,
        transformer=transformer,
        torch_dtype=torch.bfloat16
    ).to("cuda")

    prompt = "A woman with long brown hair and light skin smiles at another woman with long blonde hair. The woman with brown hair wears a black jacket and has a small, barely noticeable mole on her right cheek. The camera angle is a close-up, focused on the woman with brown hair's face. The lighting is warm and natural, likely from the setting sun, casting a soft glow on the scene. The scene appears to be real-life footage"
    negative_prompt = "worst quality, inconsistent motion, blurry, jittery, distorted"

    video = pipe(
        prompt=prompt,
        negative_prompt=negative_prompt,
        width=704,
        height=480,
        num_frames=25,
        num_inference_steps=50,
    ).frames[0]

    export_to_video(video, "output.mp4", fps=24)
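
The call above uses width=704, height=480 and num_frames=25. LTX-Video is documented upstream as working on a width and height divisible by 32 and a frame count of the form 8k + 1, and these values satisfy both. A minimal sketch of a helper that snaps other values down to that grid; `snap_ltx_dims` is a hypothetical name, not part of diffusers:

```python
def snap_ltx_dims(width, height, num_frames):
    """Round down so that width % 32 == 0, height % 32 == 0 and num_frames % 8 == 1.

    Values too small to round down are raised to the minimum (32, 32, 1).
    """
    w = max(32, (width // 32) * 32)
    h = max(32, (height // 32) * 32)
    f = max(1, ((num_frames - 1) // 8) * 8 + 1)
    return w, h, f


# The values used above are already on the grid and come back unchanged.
print(snap_ltx_dims(704, 480, 25))
# Off-grid values are rounded down to the nearest valid combination.
print(snap_ltx_dims(720, 500, 30))
```

Rounding down rather than up keeps memory use at or below what was requested, which seems the safer default on low-vram setups; rounding up would be an equally valid choice.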

run it with gguf-connector (alternative 2)

- simply execute the command below in console/terminal

- note: on first launch, it will pull the model file(s) to the local cache automatically; after that you can opt to run it entirely offline, i.e., from the local URL http://127.0.0.1:7860 with the lazy webui

- upgraded the base model from 0.9 to 0.9.6 distilled for better results

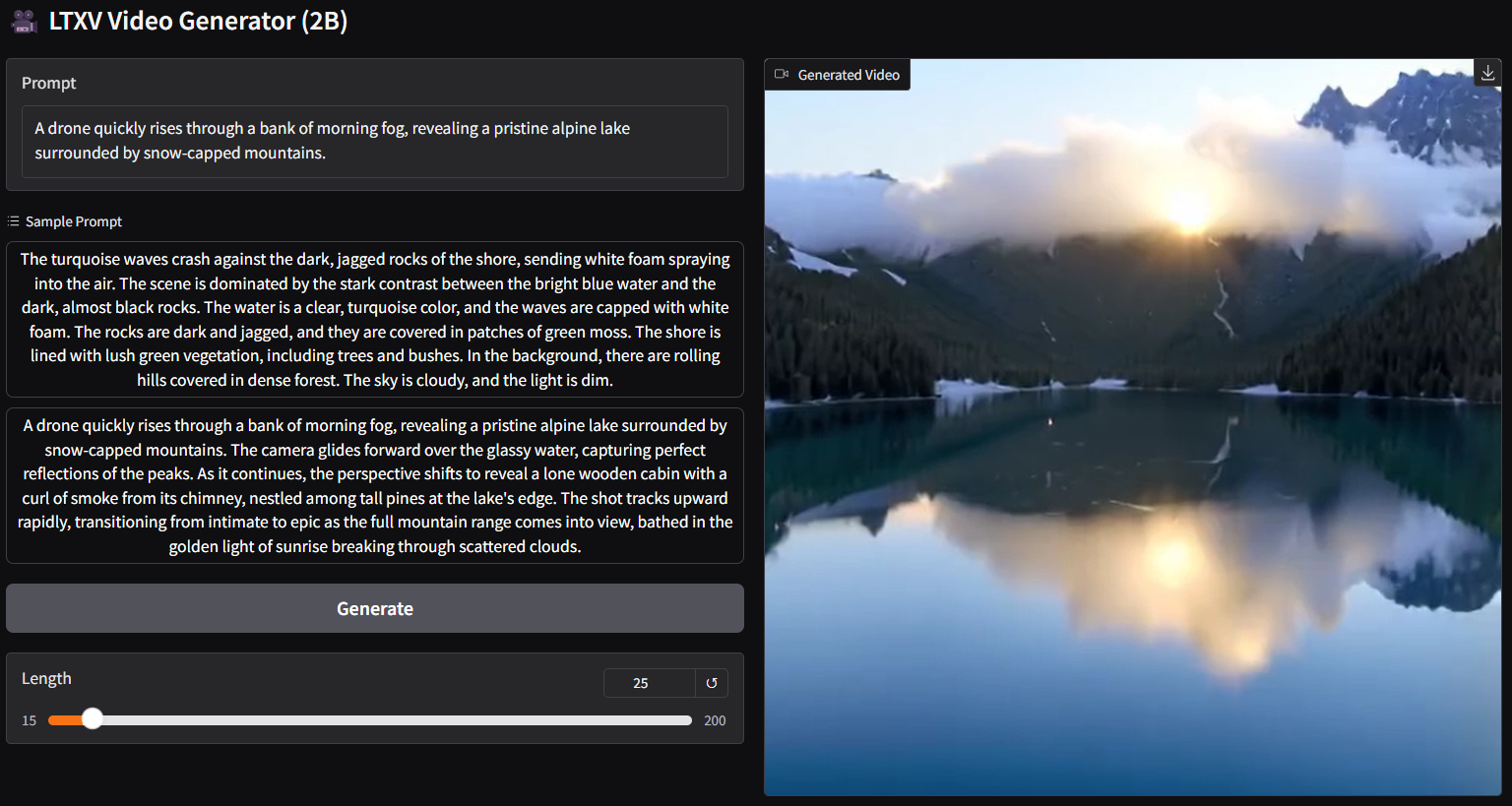

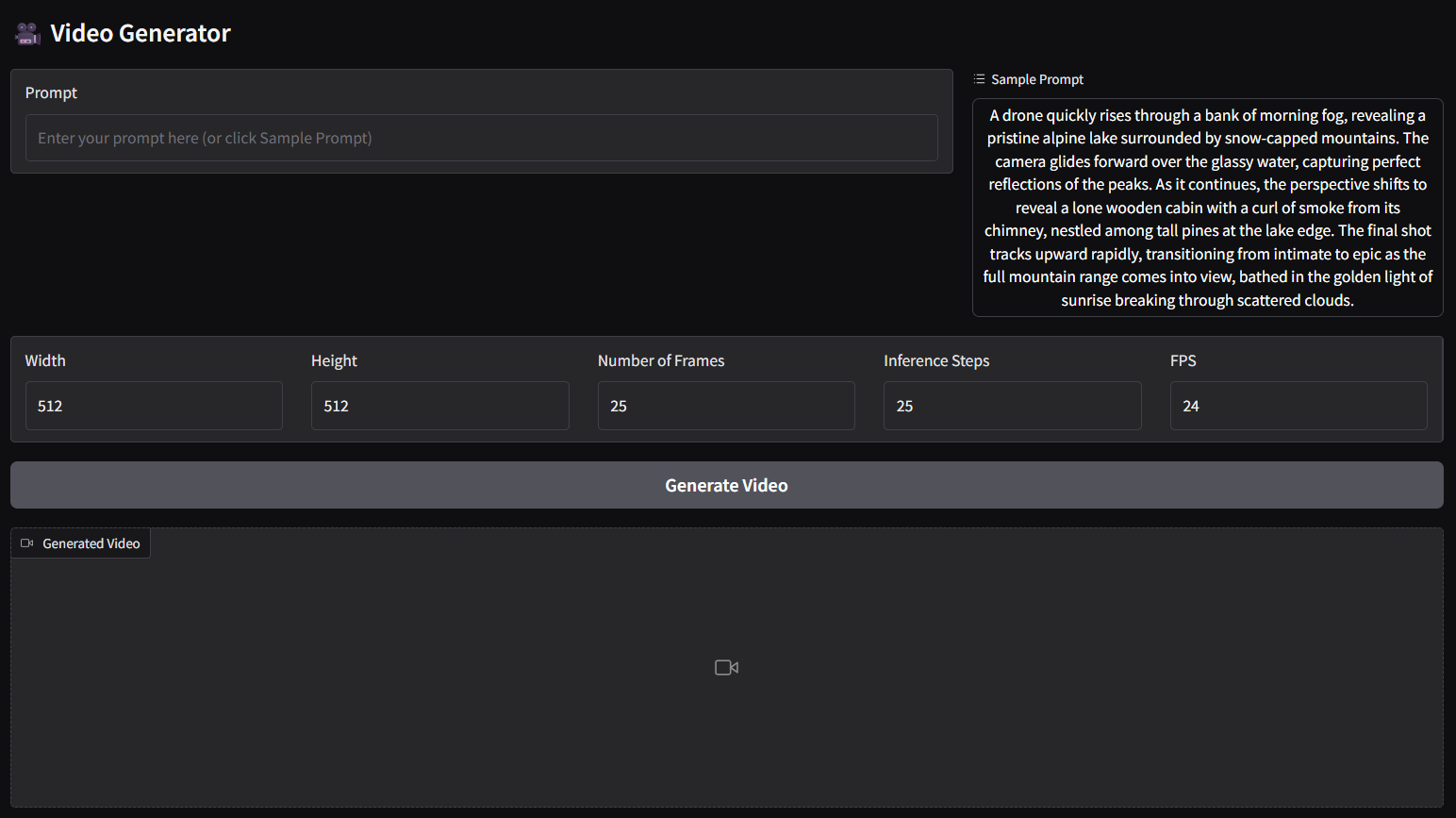

ggc vg

- the above command is for text to video (t2v) panel

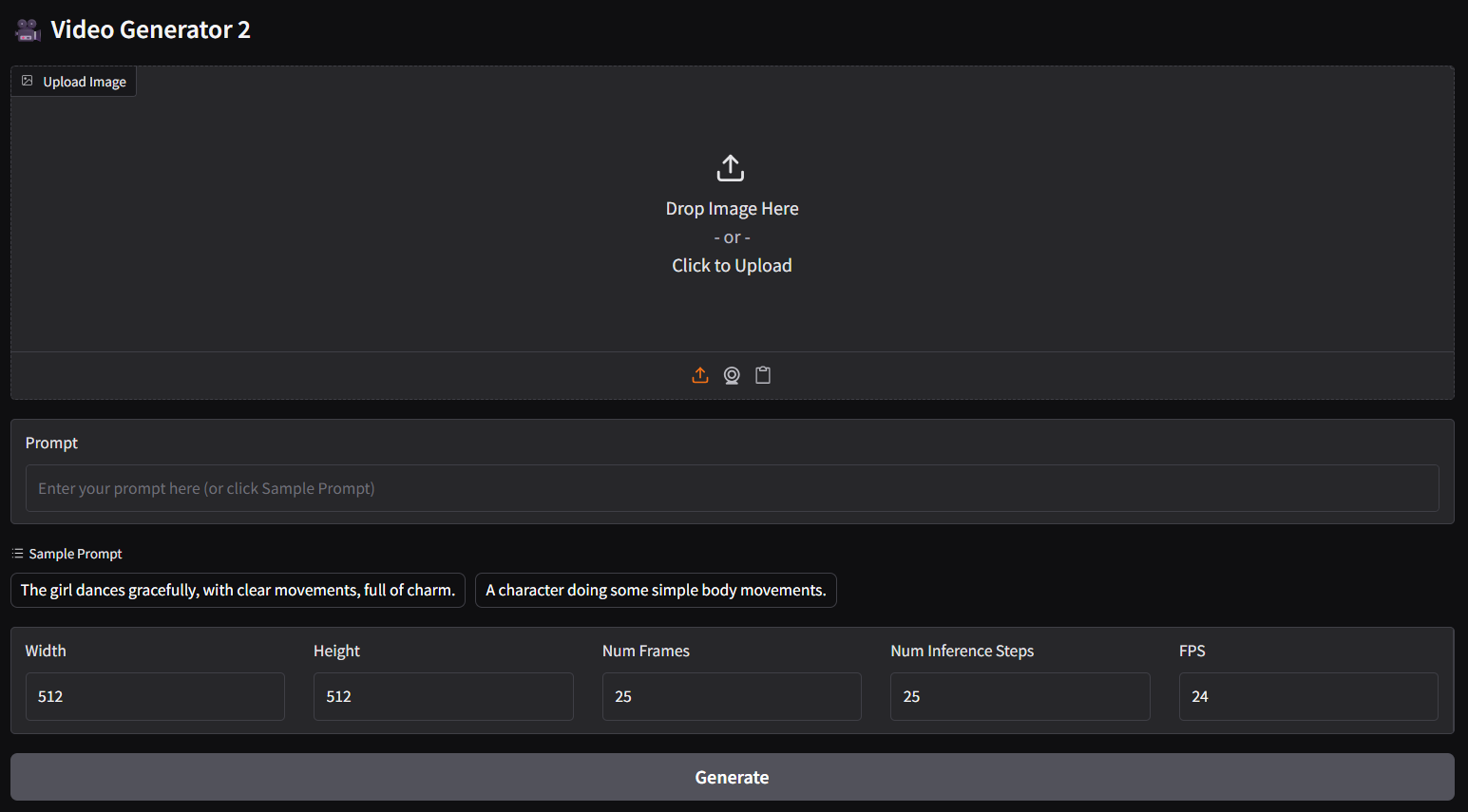

- for image-text to video (i2v) panel, please execute:

ggc v1
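
Once either command is running, the lazy webui is served at the local URL mentioned in the note above. A minimal sketch for checking from a script that it is up, using only the Python standard library; `webui_is_up` is a hypothetical helper, not part of gguf-connector, and the default port 7860 is taken from that note:

```python
import urllib.error
import urllib.request


def webui_is_up(url="http://127.0.0.1:7860", timeout=2.0):
    """Return True if an HTTP server answers at url, False if the connection fails."""
    # Bypass any system proxy settings, since the target is a local address.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # a server answered, even if with an error status
    except (urllib.error.URLError, OSError):
        return False  # refused, timed out, or otherwise unreachable
```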

reference

- base model from lightricks

- comfyui from comfyanonymous

- comfyui-gguf from city96

- gguf-comfy pack

- gguf-connector (pypi)

- gguf-node (pypi|repo|pack)

- Prompt

- A drone quickly rises through a bank of morning fog, revealing a pristine alpine lake surrounded by snow-capped mountains. The camera glides forward over the glassy water, capturing perfect reflections of the peaks. As it continues, the perspective shifts to reveal a lone wooden cabin with a curl of smoke from its chimney, nestled among tall pines at the lake's edge. The final shot tracks upward rapidly, transitioning from intimate to epic as the full mountain range comes into view, bathed in the golden light of sunrise breaking through scattered clouds.

- Negative Prompt

- low quality, worst quality, deformed, distorted, disfigured, motion smear, motion artifacts, fused fingers, bad anatomy, weird hand, ugly

- Prompt

- The turquoise waves crash against the dark, jagged rocks of the shore, sending white foam spraying into the air. The scene is dominated by the stark contrast between the bright blue water and the dark, almost black rocks. The water is a clear, turquoise color, and the waves are capped with white foam. The rocks are dark and jagged, and they are covered in patches of green moss. The shore is lined with lush green vegetation, including trees and bushes. In the background, there are rolling hills covered in dense forest. The sky is cloudy, and the light is dim.

- Prompt

- The camera pans across a cityscape of tall buildings with a circular building in the center. The camera moves from left to right, showing the tops of the buildings and the circular building in the center. The buildings are various shades of gray and white, and the circular building has a green roof. The camera angle is high, looking down at the city. The lighting is bright, with the sun shining from the upper left, casting shadows from the buildings. The scene is computer-generated imagery.

- Prompt

- Drone view of waves crashing against the rugged cliffs along Big Sur’s garay point beach. The crashing blue waters create white-tipped waves, while the golden light of the setting sun illuminates the rocky shore. A small island with a lighthouse sits in the distance, and green shrubbery covers the cliff’s edge. The steep drop from the road down to the beach is a dramatic feat, with the cliff’s edges jutting out over the sea. This is a view that captures the raw beauty of the coast and the rugged landscape of the Pacific Coast Highway.
