Qwen3-Coder-30B-A3B-Instruct

Try the model in the Clarifai playground: https://clarifai.com/qwen/qwenCoder/models/Qwen3-Coder-30B-A3B-Instruct

Highlights

Qwen3-Coder is available in multiple sizes. Qwen3-Coder-30B-A3B-Instruct is a streamlined version that retains strong performance and efficiency, with the following key enhancements:

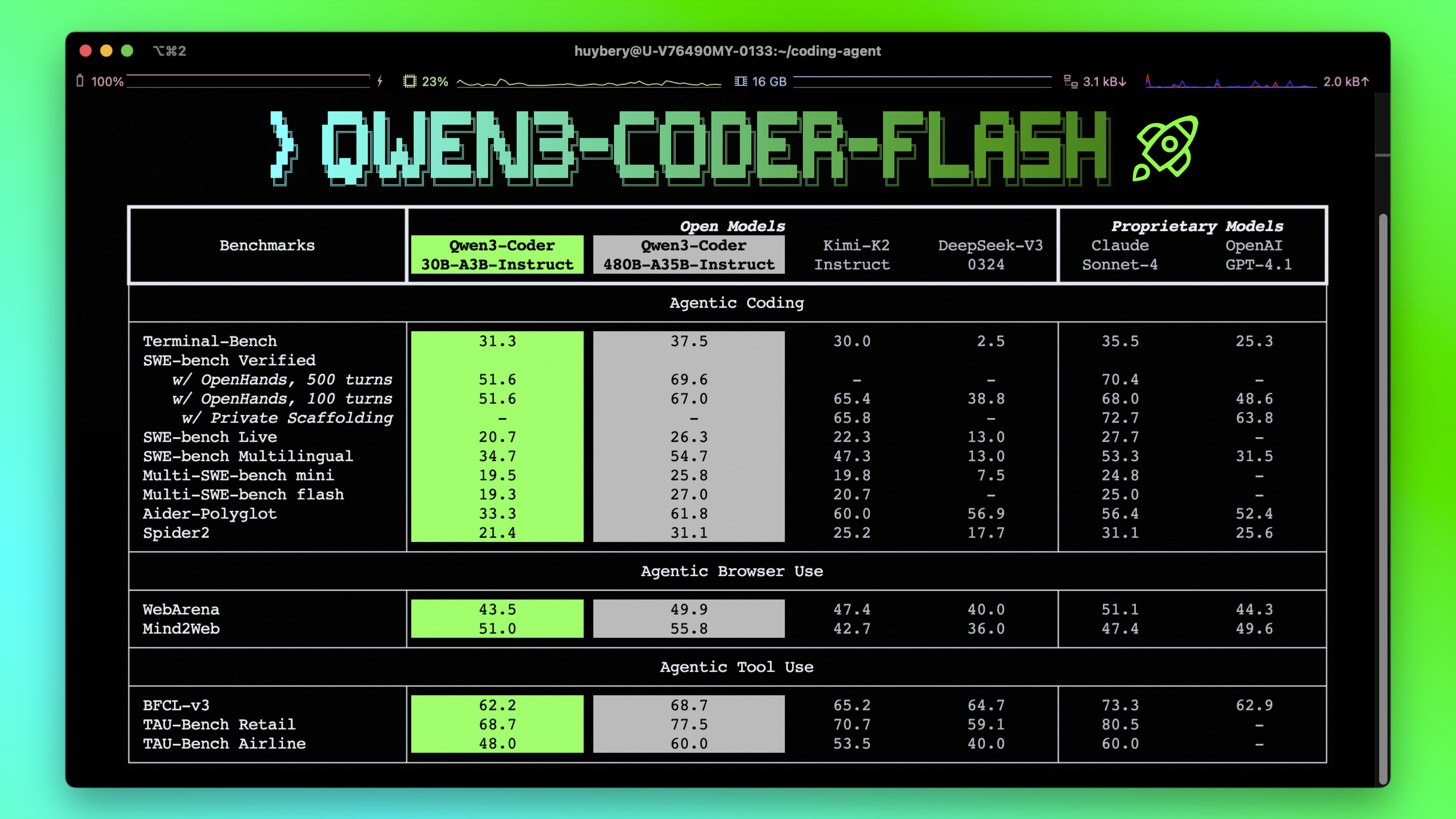

- Significant performance: strong results among open models on agentic coding, agentic browser use, and other foundational coding tasks.

- Long-context capabilities: native support for 256K tokens, extendable up to 1M tokens with YaRN, optimized for repository-scale understanding.

- Agentic coding: support for most platforms, such as Qwen Code and CLINE, through a specially designed function-call format.
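Under YaRN, the context window is stretched by a scaling factor relative to the native length, so going from 256K to 1M tokens implies a factor of about 4. The sketch below shows that arithmetic, assuming the Hugging Face transformers `rope_scaling` key names and reading 256K and 1M as 262,144 and 1,048,576 tokens. `yarn_rope_scaling` is a hypothetical helper, and the exact configuration your serving stack expects may differ.

```python
# Hypothetical helper: builds a YaRN rope_scaling entry in the style of
# Hugging Face transformers model configs. The key names are an assumption
# based on that convention; check your serving stack's documentation.
NATIVE_CONTEXT = 262_144    # 256K tokens (native context, read as 256 * 1024)
TARGET_CONTEXT = 1_048_576  # 1M tokens (extended context, read as 1024 * 1024)


def yarn_rope_scaling(native: int, target: int) -> dict:
    """Return a rope_scaling dict whose factor stretches `native` to `target`."""
    if target <= native:
        raise ValueError("target context must exceed the native context")
    factor = target / native  # 1_048_576 / 262_144 == 4.0
    return {
        "rope_type": "yarn",
        "factor": factor,
        "original_max_position_embeddings": native,
    }


if __name__ == "__main__":
    print(yarn_rope_scaling(NATIVE_CONTEXT, TARGET_CONTEXT))
```

Keeping the native length in `original_max_position_embeddings` lets the scaling be expressed relative to the window the model was trained on.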

Model Overview

Qwen3-Coder-30B-A3B-Instruct has the following features:

- Type: Causal language model

- Training Stage: Pretraining & Post-training

- Number of Parameters: 30.5B in total and 3.3B activated

- Number of Layers: 48

- Number of Attention Heads (GQA): 32 for Q and 4 for KV

- Number of Experts: 128

- Number of Activated Experts: 8
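The expert and head counts above describe a mixture-of-experts (MoE) model with grouped-query attention (GQA). The sketch below illustrates generic top-k expert gating (softmax over all 128 router logits, keep the 8 largest, renormalize them) and the GQA mapping of 32 query heads onto 4 KV heads. The renormalization step and the helper names `top_k_route` and `kv_head_for` are assumptions for illustration, not taken from the Qwen implementation.

```python
import math
import random

NUM_EXPERTS = 128   # total experts (from the model overview)
NUM_ACTIVE = 8      # activated experts per token
NUM_Q_HEADS = 32    # query heads (GQA)
NUM_KV_HEADS = 4    # key/value heads (GQA)


def top_k_route(router_logits: list[float], k: int = NUM_ACTIVE) -> list[tuple[int, float]]:
    """Generic top-k MoE gating (hypothetical helper).

    Softmax over all expert logits, keep the k most probable experts,
    then renormalize their weights so they sum to 1.
    """
    m = max(router_logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return [(i, probs[i] / kept) for i in top]


def kv_head_for(q_head: int, n_q: int = NUM_Q_HEADS, n_kv: int = NUM_KV_HEADS) -> int:
    """In GQA, each consecutive group of n_q // n_kv query heads shares one KV head."""
    group_size = n_q // n_kv
    return q_head // group_size


if __name__ == "__main__":
    random.seed(0)
    logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    routes = top_k_route(logits)
    print("experts used:", [i for i, _ in routes])
    print("query heads per KV head:", NUM_Q_HEADS // NUM_KV_HEADS)  # 8
    print("fraction of experts active:", NUM_ACTIVE / NUM_EXPERTS)  # 0.0625
```

Only 8 of 128 experts run per token, which is why the activated parameter count is a small fraction of the total; sharing each KV head across 8 query heads likewise shrinks the KV cache relative to one KV head per query head.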

NOTE: This model supports only non-thinking mode and does not generate `<think></think>` blocks in its output. Specifying `enable_thinking=False` is therefore no longer required.

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to the Qwen team's blog, GitHub repository, and documentation.

Usage

Using Clarifai's Python SDK

```python
# Please run `pip install -U clarifai` before running this script
from clarifai.client import Model

# Replace the placeholder with your Clarifai Personal Access Token (PAT)
model = Model(
    url="https://clarifai.com/qwen/qwenCoder/models/Qwen3-Coder-30B-A3B-Instruct",
    pat="your Clarifai PAT",
)

prompt = "What's the future of AI?"

# Clarifai-style prediction methods

## Stream: generate() returns an iterable of text chunks
generated_text = model.generate(prompt=prompt)
for each in generated_text:
    print(each, end="", flush=True)

## Non-stream: predict() returns the complete response at once
generated_text = model.predict(prompt=prompt)
print(generated_text)
```

Using the OpenAI API

```python
from openai import OpenAI

model_id = "qwen/qwenCoder/models/Qwen3-Coder-30B-A3B-Instruct"

# Point the OpenAI client at Clarifai's OpenAI-compatible endpoint
client = OpenAI(
    base_url="https://api.clarifai.com/v2/ext/openai/v1",
    api_key="Your Clarifai PAT",
)

response = client.chat.completions.create(
    model=model_id,
    messages=[
        {"role": "system", "content": "Talk like a Cat."},
        {
            "role": "user",
            "content": "How do I check if a Python object is an instance of a class (streaming)?",
        },
    ],
    temperature=0.7,
    stream=True,
)

# Print each streamed text delta; skip chunks without choices or content
for each in response:
    if each.choices:
        text = each.choices[0].delta.content
        if text:
            print(text, end="", flush=True)
```

Best Practices

To achieve optimal performance, the Qwen team recommends the following settings:

- Sampling parameters: the Qwen team suggests `temperature=0.7`, `top_p=0.8`, `top_k=20`, and `repetition_penalty=1.05`.

- Adequate output length: the Qwen team recommends an output length of 65,536 tokens for most queries, which is adequate for instruct models.
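The recommended settings can be bundled into keyword arguments for `client.chat.completions.create`. This is a minimal sketch, assuming the OpenAI Python client's `extra_body` parameter is used to forward the non-standard `top_k` and `repetition_penalty` fields, and assuming the Clarifai endpoint honors them (not confirmed here). `recommended_request_kwargs` is a hypothetical helper.

```python
def recommended_request_kwargs(max_tokens: int = 65_536) -> dict:
    """Hypothetical helper returning the Qwen team's recommended sampling settings."""
    return {
        "temperature": 0.7,
        "top_p": 0.8,
        "max_tokens": max_tokens,
        # top_k and repetition_penalty are not standard OpenAI parameters;
        # extra_body adds them to the request JSON. Whether the endpoint
        # applies them is an assumption.
        "extra_body": {"top_k": 20, "repetition_penalty": 1.05},
    }


if __name__ == "__main__":
    print(recommended_request_kwargs())
```

Usage, with the client and model ID from the OpenAI example: `client.chat.completions.create(model=model_id, messages=messages, **recommended_request_kwargs())`. If the endpoint rejects 65,536 output tokens, pass a smaller `max_tokens`.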

Citation

If you find this work helpful, please cite the Qwen3 Technical Report:

```
@misc{qwen3technicalreport,
  title={Qwen3 Technical Report},
  author={Qwen Team},
  year={2025},
  eprint={2505.09388},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2505.09388},
}
```
