url

stringlengths 53

56

| repository_url

stringclasses 1

value | labels_url

stringlengths 67

70

| comments_url

stringlengths 62

65

| events_url

stringlengths 60

63

| html_url

stringlengths 41

46

| id

int64 450k

1.69B

| node_id

stringlengths 18

32

| number

int64 1

2.72k

| title

stringlengths 1

209

| user

dict | labels

list | state

stringclasses 1

value | locked

bool 2

classes | assignee

null | assignees

sequence | milestone

null | comments

sequence | created_at

timestamp[s] | updated_at

timestamp[s] | closed_at

timestamp[s] | author_association

stringclasses 3

values | active_lock_reason

stringclasses 2

values | body

stringlengths 0

104k

⌀ | reactions

dict | timeline_url

stringlengths 62

65

| performed_via_github_app

null | state_reason

stringclasses 2

values | draft

bool 2

classes | pull_request

dict |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/coleifer/peewee/issues/2519 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2519/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2519/comments | https://api.github.com/repos/coleifer/peewee/issues/2519/events | https://github.com/coleifer/peewee/issues/2519 | 1,113,659,655 | I_kwDOAA7yGM5CYRkH | 2,519 | drop_tables does not delete "schema" tables in MySQL | {

"login": "ascaron37",

"id": 8329544,

"node_id": "MDQ6VXNlcjgzMjk1NDQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/8329544?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ascaron37",

"html_url": "https://github.com/ascaron37",

"followers_url": "https://api.github.com/users/ascaron37/followers",

"following_url": "https://api.github.com/users/ascaron37/following{/other_user}",

"gists_url": "https://api.github.com/users/ascaron37/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ascaron37/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ascaron37/subscriptions",

"organizations_url": "https://api.github.com/users/ascaron37/orgs",

"repos_url": "https://api.github.com/users/ascaron37/repos",

"events_url": "https://api.github.com/users/ascaron37/events{/privacy}",

"received_events_url": "https://api.github.com/users/ascaron37/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"So the reason the drop doesn't work is because Peewee, when \"safe=True\" (the default), attempts to check if the table exists in the current db. To get this working, you can just specify `safe=False`:\r\n\r\n```python\r\n# This will skip the check that the table exists, and then issue the\r\n# appropriate drop table.\r\ndb1.drop_tables([Model2], safe=False)\r\n```",

"Perfect, thank you!"

] | 2022-01-25T09:48:21 | 2022-01-25T18:14:51 | 2022-01-25T15:40:33 | NONE | null | I have a problem testing my application with MySQL. I have two separate databases on one MySQL server, say DB1 and DB2. I create a DB Object with `db1 = MySQLDatabase('DB1', ...)`. All my models from DB2 have a schema attribute in the inner Meta class:

```

class DBModel(Model):

class Meta:

database = db1

class Model2(DBModel):

id = AutoField()

class Meta:

schema = 'DB2'

```

In the application this works well, but for testing purposes I want to create and drop all tables. All models from the first DB get created and deleted properly. My problem now is that creating the tables in the second DB works as expected, but drop_tables does not drop the tables from the second DB.

```

db1.create_tables((Model2,)) # creates the table in the second DB

db1.drop_tables((Model2,)) # does not drop tables from second DB

```

Is my usage of the schema attribute a lucky incident from the standpoint that it works in my application? Can I force drop_tables to delete also the tables from the other schemas? | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2519/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2519/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2518 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2518/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2518/comments | https://api.github.com/repos/coleifer/peewee/issues/2518/events | https://github.com/coleifer/peewee/issues/2518 | 1,111,184,135 | I_kwDOAA7yGM5CO1MH | 2,518 | PooledMySQLDatabase MaxConnectionsExceeded | {

"login": "Ahern542968",

"id": 32890883,

"node_id": "MDQ6VXNlcjMyODkwODgz",

"avatar_url": "https://avatars.githubusercontent.com/u/32890883?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Ahern542968",

"html_url": "https://github.com/Ahern542968",

"followers_url": "https://api.github.com/users/Ahern542968/followers",

"following_url": "https://api.github.com/users/Ahern542968/following{/other_user}",

"gists_url": "https://api.github.com/users/Ahern542968/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Ahern542968/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Ahern542968/subscriptions",

"organizations_url": "https://api.github.com/users/Ahern542968/orgs",

"repos_url": "https://api.github.com/users/Ahern542968/repos",

"events_url": "https://api.github.com/users/Ahern542968/events{/privacy}",

"received_events_url": "https://api.github.com/users/Ahern542968/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"http://docs.peewee-orm.com/en/latest/peewee/playhouse.html#pool\r\n\r\nYou are not managing your connections.\r\n\r\nCall connect at the beginning of a request/thread, and close() at the end. This ensures that your conns are returned to the pool."

] | 2022-01-22T02:08:23 | 2022-01-22T13:10:42 | 2022-01-22T13:10:42 | NONE | null | I use PooledMySQLDatabase for a pool , when I with atomic for create , but case MaxConnectionsExceeded

```

def logic_create_event_item(operate_dict):

user_id = operate_dict["user_id"]

content_type = "event"

event_id = operate_dict["event_id"]

operate_type = "create"

user_operate_log = UserOperateLog.get_or_none(

UserOperateLog.user_id == user_id, UserOperateLog.content_type == content_type,

UserOperateLog.event_id == event_id, UserOperateLog.operate_type == operate_type)

if user_operate_log:

return

with settings.DB.atomic() as txn:

try:

user_operate_log = UserOperateLog()

user_operate_log.user_id = user_id

user_operate_log.content_type = content_type

user_operate_log.content_id = event_id

user_operate_log.operate_type = operate_type

user_operate_log.content = "创建埋点"

user_operate_log.save(force_insert=True)

except Exception as e:

txn.rollback()

raise e

```

| {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2518/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2518/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2517 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2517/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2517/comments | https://api.github.com/repos/coleifer/peewee/issues/2517/events | https://github.com/coleifer/peewee/issues/2517 | 1,110,727,085 | I_kwDOAA7yGM5CNFmt | 2,517 | Select with complex where statement don't transform correctly | {

"login": "spacialhufman",

"id": 33603355,

"node_id": "MDQ6VXNlcjMzNjAzMzU1",

"avatar_url": "https://avatars.githubusercontent.com/u/33603355?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/spacialhufman",

"html_url": "https://github.com/spacialhufman",

"followers_url": "https://api.github.com/users/spacialhufman/followers",

"following_url": "https://api.github.com/users/spacialhufman/following{/other_user}",

"gists_url": "https://api.github.com/users/spacialhufman/gists{/gist_id}",

"starred_url": "https://api.github.com/users/spacialhufman/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/spacialhufman/subscriptions",

"organizations_url": "https://api.github.com/users/spacialhufman/orgs",

"repos_url": "https://api.github.com/users/spacialhufman/repos",

"events_url": "https://api.github.com/users/spacialhufman/events{/privacy}",

"received_events_url": "https://api.github.com/users/spacialhufman/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Peewee will generate that `0 = 1` when you write an expression that evalutes to `WHERE x IN ()` (which is always false):\r\n\r\n```python\r\n.where(Model.field.in_([]))\r\n```\r\n\r\nSo in your example, at least one of your lists is empty, or alternatively your `tickets` iterator is a generator or something and returns a list, but when iterated the 2nd time it is consumed and returns an empty list."

] | 2022-01-21T17:36:05 | 2022-01-21T17:44:28 | 2022-01-21T17:44:28 | NONE | null | I was building a complex query in my app which is describe below:

`query = (self.repository.getSelect((

fieldId,

fieldReceivedAt,

fieldMac,

fieldCode,

fieldDomain,

fieldStatus

))

.where(

fieldId.between(initialId, endId + 10000) &

SystemEventModel.Message.contains('done query') &

self.__getCodeFuncField().in_([t.code for t in tickets]) &

SystemEventModel.FromHost.in_([t.mac for t in tickets])

)

.order_by(fieldCode))`

The sintax seems correct by when I run the application, the ORM makes my query look like below:

`SELECT t1.ID AS id, t1.ReceivedAt AS received_at, t1.FromHost AS mac, TRIM(SUBSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 11), 1, INSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 11), ' '))) AS code, TRIM(IF((INSTR(t1.Message, 'dns name exists') > 0), 'dns name exists, but no appropriate record', SUBSTR(TRIM(SUBSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 100), INSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 100), ' '), 100)), 1, INSTR(TRIM(SUBSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 100), INSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 100), ' '), 100)), ' ')))) AS domain, TRIM(IF((INSTR(t1.Message, 'dns name exists') > 0), 1, IF((INSTR(t1.Message, 'dns does not exist') > 0), 0, 2))) AS status FROM SystemEvents AS t1 WHERE ((((id BETWEEN 23706618 AND 23726554) AND (t1.Message LIKE '%done query%')) AND (TRIM(SUBSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 11), 1, INSTR(SUBSTR(t1.Message, INSTR(t1.Message, '#'), 11), ' '))) IN ('#118729', '#118730', '#12485651', '#12485652', '#12485653', '#129837753', '#129837754', '#1301639'))) AND (0 = 1)) ORDER BY code`

Why the last where was transformed into `(0 = 1)` if it is a **where in** statement?

Any help would be appreciated.

Thanks | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2517/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2517/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2516 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2516/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2516/comments | https://api.github.com/repos/coleifer/peewee/issues/2516/events | https://github.com/coleifer/peewee/issues/2516 | 1,107,573,649 | I_kwDOAA7yGM5CBDuR | 2,516 | generate_models from DatabaseProxy ValueError | {

"login": "LLjiahai",

"id": 30220658,

"node_id": "MDQ6VXNlcjMwMjIwNjU4",

"avatar_url": "https://avatars.githubusercontent.com/u/30220658?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/LLjiahai",

"html_url": "https://github.com/LLjiahai",

"followers_url": "https://api.github.com/users/LLjiahai/followers",

"following_url": "https://api.github.com/users/LLjiahai/following{/other_user}",

"gists_url": "https://api.github.com/users/LLjiahai/gists{/gist_id}",

"starred_url": "https://api.github.com/users/LLjiahai/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/LLjiahai/subscriptions",

"organizations_url": "https://api.github.com/users/LLjiahai/orgs",

"repos_url": "https://api.github.com/users/LLjiahai/repos",

"events_url": "https://api.github.com/users/LLjiahai/events{/privacy}",

"received_events_url": "https://api.github.com/users/LLjiahai/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"In this case, just instantiate the introspector directly - or pass it the underlying db instance:\r\n\r\n```python\r\n# Option 1:\r\nintrospector = Introspector(MySQLMetadata(database))\r\nintrospector.generate_models()\r\n\r\n# Option 2:\r\nmodels = generate_models(db.obj)\r\n```",

"I gave this another look and made a better fix. Going forward introspection will work with a proxy just fine."

] | 2022-01-19T02:42:34 | 2022-01-19T23:55:00 | 2022-01-19T16:22:41 | NONE | null | db = DatabaseProxy()

db.initialize(RetryMySQLDatabase.get_db_instance(**kw_config))

models = generate_models(db)

ValueError: Introspection not supported for <peewee.DatabaseProxy object at 0x000002859D87FE40> | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2516/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2516/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2515 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2515/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2515/comments | https://api.github.com/repos/coleifer/peewee/issues/2515/events | https://github.com/coleifer/peewee/issues/2515 | 1,106,872,487 | I_kwDOAA7yGM5B-Yin | 2,515 | How to get all data from my Model? | {

"login": "peepo5",

"id": 72892531,

"node_id": "MDQ6VXNlcjcyODkyNTMx",

"avatar_url": "https://avatars.githubusercontent.com/u/72892531?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/peepo5",

"html_url": "https://github.com/peepo5",

"followers_url": "https://api.github.com/users/peepo5/followers",

"following_url": "https://api.github.com/users/peepo5/following{/other_user}",

"gists_url": "https://api.github.com/users/peepo5/gists{/gist_id}",

"starred_url": "https://api.github.com/users/peepo5/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/peepo5/subscriptions",

"organizations_url": "https://api.github.com/users/peepo5/orgs",

"repos_url": "https://api.github.com/users/peepo5/repos",

"events_url": "https://api.github.com/users/peepo5/events{/privacy}",

"received_events_url": "https://api.github.com/users/peepo5/received_events",

"type": "User",

"site_admin": false

} | [] | closed | true | null | [] | null | [

"You need to read the documentation.\r\n\r\nTake 10 or 15 minutes and go through the quick start.\r\n\r\nhttp://docs.peewee-orm.com/en/latest/peewee/quickstart.html#retrieving-data",

"So I have to iterate over the select to get any results? Your answer to my question did not help.",

"http://docs.peewee-orm.com/en/latest/peewee/querying.html#selecting-a-single-record\r\n\r\nhttp://docs.peewee-orm.com/en/latest/peewee/querying.html#selecting-multiple-records",

"To answer my own question, yes you must iterate. @coleifer are there any ways of not iterating to save time? like recieving an array of classes instead of just something to iterate upon. Thanks. And please give some explanation rather than just sending a link, thank you.",

"https://docs.python.org/3/library/functions.html#func-list"

] | 2022-01-18T12:50:29 | 2022-01-18T20:08:55 | 2022-01-18T12:57:12 | NONE | off-topic | My class looks like this:

```py

class WaitPayment(BaseModel):

txn_id = TextField(unique=True, primary_key=True)

discord_id = TextField()

ms_expires = DecimalField()

```

and I want to recieve all of its records as a dict. However, when I use `WaitPayment.select()` or `WaitPayment.select().dicts()`, it returns:

```sql

SELECT "t1"."txn_id", "t1"."discord_id", "t1"."ms_expires" FROM "waitpayment" AS "t1"

```

in the terminal, which is not what I want. I do not want the sql query, I want the result. Any help? Thanks. | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2515/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2515/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2514 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2514/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2514/comments | https://api.github.com/repos/coleifer/peewee/issues/2514/events | https://github.com/coleifer/peewee/issues/2514 | 1,105,342,191 | I_kwDOAA7yGM5B4i7v | 2,514 | Field argument 'help_text' seem have not realized in MySQL... | {

"login": "xfl12345",

"id": 17960863,

"node_id": "MDQ6VXNlcjE3OTYwODYz",

"avatar_url": "https://avatars.githubusercontent.com/u/17960863?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/xfl12345",

"html_url": "https://github.com/xfl12345",

"followers_url": "https://api.github.com/users/xfl12345/followers",

"following_url": "https://api.github.com/users/xfl12345/following{/other_user}",

"gists_url": "https://api.github.com/users/xfl12345/gists{/gist_id}",

"starred_url": "https://api.github.com/users/xfl12345/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/xfl12345/subscriptions",

"organizations_url": "https://api.github.com/users/xfl12345/orgs",

"repos_url": "https://api.github.com/users/xfl12345/repos",

"events_url": "https://api.github.com/users/xfl12345/events{/privacy}",

"received_events_url": "https://api.github.com/users/xfl12345/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"The `help_text` and `verbose_name` are used for integrations with flask-peewee (or other user-facing applications) and do not have any impact on the schema."

] | 2022-01-17T04:03:44 | 2022-01-17T14:23:27 | 2022-01-17T14:23:27 | NONE | null | I think the 'help_text' parameter may become the 'comment' value in MySQL.

It did not work as i expect.

Neigther of 'verbose_name' parameter.

I would appreciate if the little feature has been realized.

Thanks a lot.

(BTW: peewee is so much easier to use than SQLAlchemy!!!Can't help coding with peewee.) | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2514/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2514/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2513 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2513/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2513/comments | https://api.github.com/repos/coleifer/peewee/issues/2513/events | https://github.com/coleifer/peewee/issues/2513 | 1,101,868,467 | I_kwDOAA7yGM5BrS2z | 2,513 | Unreliable hybrid_fields order in model_to_dict(model, extra_attrs=hybrid_fields) bug? | {

"login": "neutralvibes",

"id": 26578830,

"node_id": "MDQ6VXNlcjI2NTc4ODMw",

"avatar_url": "https://avatars.githubusercontent.com/u/26578830?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/neutralvibes",

"html_url": "https://github.com/neutralvibes",

"followers_url": "https://api.github.com/users/neutralvibes/followers",

"following_url": "https://api.github.com/users/neutralvibes/following{/other_user}",

"gists_url": "https://api.github.com/users/neutralvibes/gists{/gist_id}",

"starred_url": "https://api.github.com/users/neutralvibes/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/neutralvibes/subscriptions",

"organizations_url": "https://api.github.com/users/neutralvibes/orgs",

"repos_url": "https://api.github.com/users/neutralvibes/repos",

"events_url": "https://api.github.com/users/neutralvibes/events{/privacy}",

"received_events_url": "https://api.github.com/users/neutralvibes/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"~~Despite spending a few hours on it, a slap from myself helped me realise that perhaps OrderedDict isn't being used, despite database fields seemingly reproduced in reliable in order.~~ Nope, dict keys became reliably ordered from python 3.7.",

"Dict keys are not sorted -- exactly. This is not an issue in my opinion, this is just the nature of dictionaries. A dictionary is an inherently unordered data-structure, and while later Python preserves the insert order, it's not something to rely upon.\r\n\r\nYou already have your list of attrs - so you probably want to do something like: `table.append([getattr(model, attr) for attr in list_of_attrs])`. Or just pull the keys out of the resulting dict in the order you need them.",

"First, many thanks for reviewing.\r\n\r\nDicts have guaranteed order as of python 3.7 so can be relied upon. \r\n I have looked at the underlyng code and the reason is you convert the input to a `Set`. The reason for the lack of stability is because of a shortcut you use to convert the input.\r\n\r\n~~~python\r\n_clone_set = lambda s: set(s) if s else set()\r\n~~~\r\n\r\nSets still do not have this assurance, and I wonder if using a List would be better saving on converting many rows of data for the sake of it. I bow to your superior knowledge so shall leave it there.\r\n\r\nThanks again.",

"We don't bow to anyone in Peewee!\r\n\r\nI think my recommendation to you is the same:\r\n\r\n* either do not use `model_to_dict()` and just grab the attrs you want explicitly\r\n* or, once you have the dict back, pull the fields out of it explicitly using a list comprehension or the like"

] | 2022-01-13T14:38:27 | 2022-01-13T16:26:25 | 2022-01-13T16:11:07 | NONE | null | # Hybrid fields output order from model_to_dict - bug?

## Problem

- Python Version: 3.7.6

I have a case where a gui table requires row data in tuple format. This must be ordered correctly in relation to the provided headers. The data is supplied by a model with a couple of hybrid fields.

I am finding that irrespective of the order provided to the `extra_attrs` parameter of `model_to_dict`, while the order of the data directly from the database is predictable, the order of the resultant hybrid fields attached is not in my case.

## Example

Here is an example missing definitions but, I hope gives enough to demonstate with a fictious Person table where `status` and `age` are hybrids getting their data from other Person fields.

~~~python

# Fields to list, * denotes hybrid field

display_list = [ 'id', 'name', '*status', '*age' ]

# Get hybrids list

hybrid_fields = [ f for f in display_list if f.startswith('*') ]

# Printing shows supplied order consistently

print(f'hybrids: {hybrid_fields}')

# Output: hybrids: ['status', 'age']

# Grab list of non hybrid fields to extract

only_prep = [ f for f in display_list if not f.startswith('*') ]

# Get attributes for `only_list` from table class

only_list = ( attrgetter(*only_prep)(Person) ) if len(only_prep) else ()

print(only_list)

# Output: (<AutoField: Person.id>, <CharField: Person.name>)

table_data = [] # to hold return from encapsulating function

for rec in Person.select():

row = model_to_dict( rec, only=only_list, extra_attrs=hybrid_fields )

print( row.keys() )

# Expected: dict_keys(['id', 'name', 'status','age']) ....and so on

# Sometimes on python restart: dict_keys(['id', 'name', 'age', 'status']) .... notice the change in the last two.

table_data.append( tuple(row.values()) )

~~~

## Tales of the unexpected

What is bizzare is that while python is running the order returned seems fixed. Run the program from scratch a few times after a period then it can alternate.

## Advice appreciated

Not sure if I'm doing something wrong. Tried making `hybrid_fields ` a tuple with no joy. Any suggestions would be appreciated as I am not sure if this is a bug or an interface with the keyboard problem.

| {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2513/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2513/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2512 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2512/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2512/comments | https://api.github.com/repos/coleifer/peewee/issues/2512/events | https://github.com/coleifer/peewee/issues/2512 | 1,097,482,999 | I_kwDOAA7yGM5BakL3 | 2,512 | potential raw_unicode_escape error when debugging customized BINARY field | {

"login": "est",

"id": 23570,

"node_id": "MDQ6VXNlcjIzNTcw",

"avatar_url": "https://avatars.githubusercontent.com/u/23570?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/est",

"html_url": "https://github.com/est",

"followers_url": "https://api.github.com/users/est/followers",

"following_url": "https://api.github.com/users/est/following{/other_user}",

"gists_url": "https://api.github.com/users/est/gists{/gist_id}",

"starred_url": "https://api.github.com/users/est/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/est/subscriptions",

"organizations_url": "https://api.github.com/users/est/orgs",

"repos_url": "https://api.github.com/users/est/repos",

"events_url": "https://api.github.com/users/est/events{/privacy}",

"received_events_url": "https://api.github.com/users/est/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Did you not read the [code comment](https://github.com/coleifer/peewee/blob/master/peewee.py#L658-L661)? This is not part of the public API.",

"> Did you not read the code comment? This is not part of the public API.\r\n\r\nHi coleifer, I am using `logger.debug(\"sql is %s\", query)` with one of the parameter as a customized mysql BINARY field. I think it fits the code comment of \"This function is intended for debugging or logging purposes ONLY\".\r\n\r\nUnless there's another way to debug the SQL I am unaware of?\r\n",

"The correct way to do this is:\r\n\r\n```python\r\n\r\nquery = MyModel.select().where(MyModel.foo == 'bar')\r\nsql, params = query.sql()\r\n```\r\n\r\nYou can inspect the sql string, which contains proper placeholders for the parameters, and the parameters themselves."

] | 2022-01-10T05:49:22 | 2022-01-10T16:27:42 | 2022-01-10T14:01:50 | NONE | null | Hi,

I created a BINARY field following the doc

https://docs.peewee-orm.com/en/latest/peewee/models.html#creating-a-custom-field

When debugging the SQL, peewee will try `utf8` or `raw_unicode_escape` the `bytes()` parameter, at one time my bytes happens to be `b"\x0ee\xd4\xee\xff\xc0\x02r\xc2\\U0'\x00"`, which will error in [_query_val_transform](https://github.com/coleifer/peewee/blob/master/peewee.py#L683)

```

UnicodeDecodeError

'rawunicodeescape' codec can't decode bytes in position 9-11: truncated \UXXXXXXXX escape

```

I think it's because we assume the in SQL parameter, `bytes()` are displayable strings but unfortunately it will fail when there's an illegal "\U" literal inside the string. | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2512/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2512/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2511 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2511/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2511/comments | https://api.github.com/repos/coleifer/peewee/issues/2511/events | https://github.com/coleifer/peewee/issues/2511 | 1,095,551,899 | I_kwDOAA7yGM5BTMub | 2,511 | Joined and grouped foreign key values are not cached | {

"login": "conqp",

"id": 3766192,

"node_id": "MDQ6VXNlcjM3NjYxOTI=",

"avatar_url": "https://avatars.githubusercontent.com/u/3766192?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/conqp",

"html_url": "https://github.com/conqp",

"followers_url": "https://api.github.com/users/conqp/followers",

"following_url": "https://api.github.com/users/conqp/following{/other_user}",

"gists_url": "https://api.github.com/users/conqp/gists{/gist_id}",

"starred_url": "https://api.github.com/users/conqp/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/conqp/subscriptions",

"organizations_url": "https://api.github.com/users/conqp/orgs",

"repos_url": "https://api.github.com/users/conqp/repos",

"events_url": "https://api.github.com/users/conqp/events{/privacy}",

"received_events_url": "https://api.github.com/users/conqp/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Please consult the docs here: http://docs.peewee-orm.com/en/latest/peewee/relationships.html#avoiding-the-n-1-problem\r\n\r\nFurthermore, you cannot go from \"many\" -> \"many\" in a single go without using prefetch. You can join \"upwards\" no problem, e.g., select all Systems and also grab their Deployment:\r\n\r\n```python\r\n# This executes in a single query.\r\nquery = System.select(System, Deployment).join(Deployment)\r\nfor system in query:\r\n print(system.id, system.deployment.annotation)\r\n```\r\n\r\nWe cannot do the reverse automatically, e.g., get deployments and all their systems, because of SQL's tabular nature.\r\n\r\nSo you can either:\r\n\r\n1. Use `prefetch()` (although I strongly advise profiling this beforehand to see if it actually provides any measurable improvements)\r\n2. Use N+1, e.g., for each deployment also query its systems.\r\n\r\nAlso note that in your first query you are not actually aggregating anything, and the query probably doesn't do what you think it does.\r\n\r\nThe docs provide many examples: http://docs.peewee-orm.com/en/latest/peewee/relationships.html#avoiding-the-n-1-problem\r\n\r\nLastly, queries generated by accessing a backref are not cached -- accessing the backref creates a new query. If you want to iterate over a backref multiple times you can just assign it to a local variable:\r\n\r\n```python\r\n\r\nuser = User.get(User.username == 'charlie')\r\ntweets = user.tweets\r\n# Query is only evaluated once:\r\nfor t in tweets: pass\r\nfor t in tweets: pass\r\n```"

] | 2022-01-06T17:54:30 | 2022-01-06T19:10:06 | 2022-01-06T19:06:58 | CONTRIBUTOR | null | I have a case where I want to select a one-to-many relation.

According to the [docs](http://docs.peewee-orm.com/en/latest/peewee/querying.html#aggregating-records), this can be done with appropriate selects, joins and group-bys.

However, peewee does not cache query, but executes it again, if I access the backref:

```python

from logging import DEBUG, basicConfig

basicConfig(level=DEBUG)

from hwdb import System, Deployment

select = Deployment.select(System, Deployment).join(System, on=System.deployment == Deployment.id).where(Deployment.id == 354).group_by(Deployment)

dep = select.get()

print(dep.systems)

print(list(dep.systems))

```

Result:

```python

DEBUG:peewee:('SELECT `t1`.`id`, `t1`.`Group`, `t1`.`deployment`, `t1`.`dataset`, `t1`.`openvpn`, `t1`.`ipv6address`, `t1`.`pubkey`, `t1`.`created`, `t1`.`configured`, `t1`.`fitted`, `t1`.`operating_system`, `t1`.`monitor`, `t1`.`serial_number`, `t1`.`model`, `t1`.`last_sync`, `t2`.`id`, `t2`.`customer`, `t2`.`type`, `t2`.`connection`, `t2`.`address`, `t2`.`lpt_address`, `t2`.`scheduled`, `t2`.`annotation`, `t2`.`testing`, `t2`.`timestamp` FROM `hwdb`.`deployment` AS `t2` INNER JOIN `hwdb`.`system` AS `t1` ON (`t1`.`deployment` = `t2`.`id`) WHERE (`t2`.`id` = %s) GROUP BY `t2`.`id`, `t2`.`customer`, `t2`.`type`, `t2`.`connection`, `t2`.`address`, `t2`.`lpt_address`, `t2`.`scheduled`, `t2`.`annotation`, `t2`.`testing`, `t2`.`timestamp` LIMIT %s OFFSET %s', [354, 1, 0])

SELECT `t1`.`id`, `t1`.`Group`, `t1`.`deployment`, `t1`.`dataset`, `t1`.`openvpn`, `t1`.`ipv6address`, `t1`.`pubkey`, `t1`.`created`, `t1`.`configured`, `t1`.`fitted`, `t1`.`operating_system`, `t1`.`monitor`, `t1`.`serial_number`, `t1`.`model`, `t1`.`last_sync` FROM `hwdb`.`system` AS `t1` WHERE (`t1`.`deployment` = 354)

DEBUG:peewee:('SELECT `t1`.`id`, `t1`.`Group`, `t1`.`deployment`, `t1`.`dataset`, `t1`.`openvpn`, `t1`.`ipv6address`, `t1`.`pubkey`, `t1`.`created`, `t1`.`configured`, `t1`.`fitted`, `t1`.`operating_system`, `t1`.`monitor`, `t1`.`serial_number`, `t1`.`model`, `t1`.`last_sync` FROM `hwdb`.`system` AS `t1` WHERE (`t1`.`deployment` = %s)', [354])

[<System: 36>, <System: 359>]

```

As you can see from the last debug output, peewee executes another query to retrieve the related records.

Shouldn't it have cached them from the initial join?

Vanilla peewee test script:

```python

from configparser import ConfigParser

from datetime import datetime

from logging import DEBUG, basicConfig

from peewee import Model, MySQLDatabase

from peewee import BooleanField, CharField, DateField, DateTimeField, ForeignKeyField, FixedCharField

basicConfig(level=DEBUG)

CONFIG = ConfigParser()

CONFIG.read('/usr/local/etc/hwdb.conf')

DATABASE = MySQLDatabase(

'hwdb',

host='localhost',

user='hwdb',

passwd=CONFIG.get('db', 'passwd')

)

class Deployment(Model):

"""A customer-specific deployment of a terminal."""

class Meta:

database = DATABASE

schema = database.database

scheduled = DateField(null=True)

annotation = CharField(255, null=True)

testing = BooleanField(default=False)

timestamp = DateTimeField(null=True)

class System(Model):

"""A physical computer system out in the field."""

class Meta:

database = DATABASE

schema = database.database

deployment = ForeignKeyField(

Deployment, null=True, column_name='deployment', backref='systems',

on_delete='SET NULL', on_update='CASCADE', lazy_load=False)

pubkey = FixedCharField(44, null=True, unique=True)

created = DateTimeField(default=datetime.now)

configured = DateTimeField(null=True)

fitted = BooleanField(default=False)

monitor = BooleanField(null=True)

serial_number = CharField(255, null=True)

model = CharField(255, null=True) # Hardware model.

last_sync = DateTimeField(null=True)

select = Deployment.select(System, Deployment).join(System, on=System.deployment == Deployment.id).where(Deployment.id == 354).group_by(Deployment)

dep = select.get()

print(dep.systems)

print(list(dep.systems))

```

Result:

```python

DEBUG:peewee:('SELECT `t1`.`id`, `t1`.`deployment`, `t1`.`pubkey`, `t1`.`created`, `t1`.`configured`, `t1`.`fitted`, `t1`.`monitor`, `t1`.`serial_number`, `t1`.`model`, `t1`.`last_sync`, `t2`.`id`, `t2`.`scheduled`, `t2`.`annotation`, `t2`.`testing`, `t2`.`timestamp` FROM `hwdb`.`deployment` AS `t2` INNER JOIN `hwdb`.`system` AS `t1` ON (`t1`.`deployment` = `t2`.`id`) WHERE (`t2`.`id` = %s) GROUP BY `t2`.`id`, `t2`.`scheduled`, `t2`.`annotation`, `t2`.`testing`, `t2`.`timestamp` LIMIT %s OFFSET %s', [354, 1, 0])

SELECT `t1`.`id`, `t1`.`deployment`, `t1`.`pubkey`, `t1`.`created`, `t1`.`configured`, `t1`.`fitted`, `t1`.`monitor`, `t1`.`serial_number`, `t1`.`model`, `t1`.`last_sync` FROM `hwdb`.`system` AS `t1` WHERE (`t1`.`deployment` = 354)

DEBUG:peewee:('SELECT `t1`.`id`, `t1`.`deployment`, `t1`.`pubkey`, `t1`.`created`, `t1`.`configured`, `t1`.`fitted`, `t1`.`monitor`, `t1`.`serial_number`, `t1`.`model`, `t1`.`last_sync` FROM `hwdb`.`system` AS `t1` WHERE (`t1`.`deployment` = %s)', [354])

[<System: 36>, <System: 359>]

```

If I remove the select and join, it still works:

```python

select = Deployment.select().where(Deployment.id == 354).group_by(Deployment)

```

It seems like `lazy_load=False` is being entirely ignored. | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2511/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2511/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2510 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2510/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2510/comments | https://api.github.com/repos/coleifer/peewee/issues/2510/events | https://github.com/coleifer/peewee/issues/2510 | 1,094,163,850 | I_kwDOAA7yGM5BN52K | 2,510 | table_exists(MyModel) should raise error | {

"login": "jonashaag",

"id": 175722,

"node_id": "MDQ6VXNlcjE3NTcyMg==",

"avatar_url": "https://avatars.githubusercontent.com/u/175722?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jonashaag",

"html_url": "https://github.com/jonashaag",

"followers_url": "https://api.github.com/users/jonashaag/followers",

"following_url": "https://api.github.com/users/jonashaag/following{/other_user}",

"gists_url": "https://api.github.com/users/jonashaag/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jonashaag/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jonashaag/subscriptions",

"organizations_url": "https://api.github.com/users/jonashaag/orgs",

"repos_url": "https://api.github.com/users/jonashaag/repos",

"events_url": "https://api.github.com/users/jonashaag/events{/privacy}",

"received_events_url": "https://api.github.com/users/jonashaag/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Thanks, this method now accepts a model class."

] | 2022-01-05T09:45:29 | 2022-01-05T13:41:17 | 2022-01-05T13:41:06 | CONTRIBUTOR | null | Misuse of `table_exists` API always returns `False`:

```py

db.table_exists(MyModel)

```

IMO this should either raise an error, or be identical to

```py

db.table_exists(MyModel.table_name)

``` | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2510/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2510/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2509 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2509/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2509/comments | https://api.github.com/repos/coleifer/peewee/issues/2509/events | https://github.com/coleifer/peewee/issues/2509 | 1,092,086,129 | I_kwDOAA7yGM5BF-lx | 2,509 | Add ModelSelect to __all__ for linting | {

"login": "1kastner",

"id": 5236165,

"node_id": "MDQ6VXNlcjUyMzYxNjU=",

"avatar_url": "https://avatars.githubusercontent.com/u/5236165?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/1kastner",

"html_url": "https://github.com/1kastner",

"followers_url": "https://api.github.com/users/1kastner/followers",

"following_url": "https://api.github.com/users/1kastner/following{/other_user}",

"gists_url": "https://api.github.com/users/1kastner/gists{/gist_id}",

"starred_url": "https://api.github.com/users/1kastner/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/1kastner/subscriptions",

"organizations_url": "https://api.github.com/users/1kastner/orgs",

"repos_url": "https://api.github.com/users/1kastner/repos",

"events_url": "https://api.github.com/users/1kastner/events{/privacy}",

"received_events_url": "https://api.github.com/users/1kastner/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"I'm going to pass - I don't really see a need to export this."

] | 2022-01-02T19:35:36 | 2022-01-04T14:28:11 | 2022-01-04T14:28:11 | NONE | null | First of all thank you very much for creating this project and sharing it under the MIT license!

Recently I have started to rely more on type-hinting in my projects to have better tool support and in some cases making my intentions more obvious for the readers of the code. While doing that, I got the warning that ModelSelect is not part of the official interface as it is not listed in `__all__`. However, I would like to pass a query which is not yet executed to another method or function and only there execute it. Thus my request to add `ModelSelect` to `__all__` and treat it as an official part of the API.

Of course there could be design choices or other ideas that contradict this. In that case I would love to learn more about the future intentions of how this project will continue. | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2509/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2509/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2508 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2508/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2508/comments | https://api.github.com/repos/coleifer/peewee/issues/2508/events | https://github.com/coleifer/peewee/issues/2508 | 1,091,487,002 | I_kwDOAA7yGM5BDsUa | 2,508 | how to search by pair of columns | {

"login": "sherrrrr",

"id": 31510228,

"node_id": "MDQ6VXNlcjMxNTEwMjI4",

"avatar_url": "https://avatars.githubusercontent.com/u/31510228?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sherrrrr",

"html_url": "https://github.com/sherrrrr",

"followers_url": "https://api.github.com/users/sherrrrr/followers",

"following_url": "https://api.github.com/users/sherrrrr/following{/other_user}",

"gists_url": "https://api.github.com/users/sherrrrr/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sherrrrr/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sherrrrr/subscriptions",

"organizations_url": "https://api.github.com/users/sherrrrr/orgs",

"repos_url": "https://api.github.com/users/sherrrrr/repos",

"events_url": "https://api.github.com/users/sherrrrr/events{/privacy}",

"received_events_url": "https://api.github.com/users/sherrrrr/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Here is an example (tested with mariadb 10.6):\r\n\r\n```python\r\nclass Reg(Model):\r\n key = CharField()\r\n value = TextField()\r\n\r\nReg.insert_many([('k%02d' % i, 'v%02d' % i) for i in range(100)]).execute()\r\ncols = Tuple(Reg.key, Reg.value)\r\nvalues = [('k02', 'v02'), ('k04', 'v04'), ('k06', 'v06')]\r\nquery = (Reg.select()\r\n .where(cols.in_(values))\r\n .order_by(Reg.key))\r\nfor reg in query:\r\n print(reg.key, reg.value)\r\n\r\n# Prints:\r\n# k02 v02\r\n# k04 v04\r\n# k06 v06\r\n```"

] | 2021-12-31T09:08:14 | 2021-12-31T14:29:40 | 2021-12-31T14:28:25 | NONE | null | How can I write something like this using peewee in mysql?

```

SELECT whatever

FROM t

WHERE (col1, col2)

IN ((val1a, val2a), (val1b, val2b), ...) ;

``` | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2508/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2508/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2507 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2507/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2507/comments | https://api.github.com/repos/coleifer/peewee/issues/2507/events | https://github.com/coleifer/peewee/issues/2507 | 1,090,305,263 | I_kwDOAA7yGM5A_Lzv | 2,507 | Does not accept pandas dataframe int/float columns with NA | {

"login": "ashishkumarazuga",

"id": 78608338,

"node_id": "MDQ6VXNlcjc4NjA4MzM4",

"avatar_url": "https://avatars.githubusercontent.com/u/78608338?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ashishkumarazuga",

"html_url": "https://github.com/ashishkumarazuga",

"followers_url": "https://api.github.com/users/ashishkumarazuga/followers",

"following_url": "https://api.github.com/users/ashishkumarazuga/following{/other_user}",

"gists_url": "https://api.github.com/users/ashishkumarazuga/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ashishkumarazuga/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ashishkumarazuga/subscriptions",

"organizations_url": "https://api.github.com/users/ashishkumarazuga/orgs",

"repos_url": "https://api.github.com/users/ashishkumarazuga/repos",

"events_url": "https://api.github.com/users/ashishkumarazuga/events{/privacy}",

"received_events_url": "https://api.github.com/users/ashishkumarazuga/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"This error is being thrown by your database, via your database driver, and is not raised by Peewee."

] | 2021-12-29T06:42:55 | 2021-12-29T13:49:30 | 2021-12-29T13:49:30 | NONE | null | The pandas dataframe containing columns which are int64 or float64 in nature, containing NA or NaN are not accepted by peewee. And throws a `DataError('integer out of range\n')`, during an insert.

| {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2507/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2507/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2506 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2506/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2506/comments | https://api.github.com/repos/coleifer/peewee/issues/2506/events | https://github.com/coleifer/peewee/issues/2506 | 1,090,269,083 | I_kwDOAA7yGM5A_C-b | 2,506 | distutils has been deprecated in Python 3.10 | {

"login": "tirkarthi",

"id": 3972343,

"node_id": "MDQ6VXNlcjM5NzIzNDM=",

"avatar_url": "https://avatars.githubusercontent.com/u/3972343?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tirkarthi",

"html_url": "https://github.com/tirkarthi",

"followers_url": "https://api.github.com/users/tirkarthi/followers",

"following_url": "https://api.github.com/users/tirkarthi/following{/other_user}",

"gists_url": "https://api.github.com/users/tirkarthi/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tirkarthi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tirkarthi/subscriptions",

"organizations_url": "https://api.github.com/users/tirkarthi/orgs",

"repos_url": "https://api.github.com/users/tirkarthi/repos",

"events_url": "https://api.github.com/users/tirkarthi/events{/privacy}",

"received_events_url": "https://api.github.com/users/tirkarthi/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Steve Dower @zooba, a Microsoft employee, is railroading through this idiotic pep. From what I can see there are quite a few people who disagree with his decision:\r\n\r\nhttps://discuss.python.org/t/pep-632-deprecate-distutils-module/5134/29\r\n\r\nHe comes off as a smug, smarmy nitwit who cannot appreciate that real world users will be affected by this decision. Once again, Microsoft employees shitting up the party? Or just another petty tyrant who found a source of narcissistic supply in open source? You decide.",

"As much as I sympathize with the reaction, the code should be updated in the future. It seems that the pep isn't going away.",

"Fallback to the vendored distutils inside setuptools for now: 7ab5e401d85c1eec7aafbbe2fdf3c0344f82422b",

"Anyone who stumbles upon this and can find examples of prior art for projects that build C extensions and are going through a similar migration, I'd appreciate links. I glanced around at a few projects I'm familiar with, and all of them are still on `distutils`."

] | 2021-12-29T04:56:21 | 2021-12-30T01:02:47 | 2021-12-29T20:47:49 | NONE | null | https://www.python.org/dev/peps/pep-0632/#migration-advice

https://github.com/coleifer/peewee/blob/5a55933ed28b261fbe100568125fffe0ba854b99/setup.py#L5-L8 | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2506/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2506/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2505 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2505/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2505/comments | https://api.github.com/repos/coleifer/peewee/issues/2505/events | https://github.com/coleifer/peewee/issues/2505 | 1,083,846,770 | I_kwDOAA7yGM5AmjBy | 2,505 | formatargspec was removed in Python 3.11 | {

"login": "tirkarthi",

"id": 3972343,

"node_id": "MDQ6VXNlcjM5NzIzNDM=",

"avatar_url": "https://avatars.githubusercontent.com/u/3972343?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tirkarthi",

"html_url": "https://github.com/tirkarthi",

"followers_url": "https://api.github.com/users/tirkarthi/followers",

"following_url": "https://api.github.com/users/tirkarthi/following{/other_user}",

"gists_url": "https://api.github.com/users/tirkarthi/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tirkarthi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tirkarthi/subscriptions",

"organizations_url": "https://api.github.com/users/tirkarthi/orgs",

"repos_url": "https://api.github.com/users/tirkarthi/repos",

"events_url": "https://api.github.com/users/tirkarthi/events{/privacy}",

"received_events_url": "https://api.github.com/users/tirkarthi/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"That doesn't matter. The stdlib-provided mock is used for any python 3 or newer, and that old version is vendored for Python 2.x users.",

"Got it, sorry for the noise."

] | 2021-12-18T14:43:19 | 2021-12-19T01:41:07 | 2021-12-19T01:01:30 | NONE | null | https://github.com/coleifer/peewee/blob/5a55933ed28b261fbe100568125fffe0ba854b99/tests/libs/mock.py#L190

https://docs.python.org/3.11/whatsnew/3.11.html#removed

> the formatargspec function, deprecated since Python 3.5; use the inspect.signature() function and Signature object directly. | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2505/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2505/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2504 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2504/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2504/comments | https://api.github.com/repos/coleifer/peewee/issues/2504/events | https://github.com/coleifer/peewee/issues/2504 | 1,083,132,854 | I_kwDOAA7yGM5Aj0u2 | 2,504 | PostgresqlExtDatabase. Model with JSONField raise exception on any action with search (get, get_or_create, etc.) | {

"login": "olvinroght",

"id": 49984942,

"node_id": "MDQ6VXNlcjQ5OTg0OTQy",

"avatar_url": "https://avatars.githubusercontent.com/u/49984942?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/olvinroght",

"html_url": "https://github.com/olvinroght",

"followers_url": "https://api.github.com/users/olvinroght/followers",

"following_url": "https://api.github.com/users/olvinroght/following{/other_user}",

"gists_url": "https://api.github.com/users/olvinroght/gists{/gist_id}",

"starred_url": "https://api.github.com/users/olvinroght/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/olvinroght/subscriptions",

"organizations_url": "https://api.github.com/users/olvinroght/orgs",

"repos_url": "https://api.github.com/users/olvinroght/repos",

"events_url": "https://api.github.com/users/olvinroght/events{/privacy}",

"received_events_url": "https://api.github.com/users/olvinroght/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Refer to the docs: https://www.postgresql.org/docs/current/datatype-json.html\r\n\r\nBut, simply, you cannot compare `json` values. I am not aware of any reason why you would want to use `json` over `jsonb`, so I would suggest that you just use `BinaryJSONField` (which additionally supports indexing).\r\n\r\nIf you really want to use `JSONField` then you should not use `get_or_create()` but instead use `insert(...).on_conflict()` and rely on a unique constraint."

] | 2021-12-17T10:50:01 | 2021-12-17T14:04:18 | 2021-12-17T14:02:59 | NONE | null | ```python

from playhouse.postgres_ext import PostgresqlExtDatabase, JSONField

from peewee import Model, CharField

database = PostgresqlExtDatabase("Test", user="user", password="password",

host="localhost", port=5432, autorollback=True)

class TestJSONModel(Model):

name = CharField(unique=True)

json_value = JSONField()

class Meta:

database = database

TestJSONModel.create_table()

model, created = TestJSONModel.get_or_create(

name="test_json_model_1",

json_value={"key1": "value1"}

)

```

It raises an exception:

```none

Traceback (most recent call last):

File "\venv\lib\site-packages\peewee.py", line 3160, in execute_sql

cursor.execute(sql, params or ())

psycopg2.errors.UndefinedFunction: ERROR: operator does not exist: json = json

LINE 1: ..."name" = 'test_json_model_1'), ("t1"."json_value" = CAST('{"...

^

HINT: No operator matches the given name and argument type(s). You might need to add explicit type casts.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "\test.py", line 24, in <module>

model, created = TestJSONModel.select(

File "\venv\lib\site-packages\peewee.py", line 1911, in inner

return method(self, database, *args, **kwargs)

File "\venv\lib\site-packages\peewee.py", line 1982, in execute

return self._execute(database)

File "\venv\lib\site-packages\peewee.py", line 2155, in _execute

cursor = database.execute(self)

File "\venv\lib\site-packages\playhouse\postgres_ext.py", line 490, in execute

cursor = self.execute_sql(sql, params, commit=commit)

File "\venv\lib\site-packages\peewee.py", line 3167, in execute_sql

self.commit()

File "\venv\lib\site-packages\peewee.py", line 2933, in __exit__

reraise(new_type, new_type(exc_value, *exc_args), traceback)

File "\venv\lib\site-packages\peewee.py", line 191, in reraise

raise value.with_traceback(tb)

File "\venv\lib\site-packages\peewee.py", line 3160, in execute_sql

cursor.execute(sql, params or ())

peewee.ProgrammingError: ERROR: operator does not exist: json = json

LINE 1: ..."name" = 'test_json_model_1'), ("t1"."json_value" = CAST('{"...

^

HINT: No operator matches the given name and argument type(s). You might need to add explicit type casts.

```

Same happens on any other query where `json_value` comparison used. Code above produces next query:

```sql

SELECT ("t1"."name" = %s), ("t1"."json_value" = CAST(%s AS json)) FROM "testjsonmodel" AS "t1"

```

As far as I understood, it's not possible to compare JSON fields, only their string values, so it will be good if peewee handles it internally instead of raising an exception.

This happens only with `JSONField`, `BinaryJSONField` works well. There's [a question](https://stackoverflow.com/q/32843213/10824407) on Stack Overflow with same issue.

| {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2504/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2504/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2503 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2503/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2503/comments | https://api.github.com/repos/coleifer/peewee/issues/2503/events | https://github.com/coleifer/peewee/issues/2503 | 1,079,774,294 | I_kwDOAA7yGM5AXAxW | 2,503 | how to convert peewee column field_type to db column_type | {

"login": "LLjiahai",

"id": 30220658,

"node_id": "MDQ6VXNlcjMwMjIwNjU4",

"avatar_url": "https://avatars.githubusercontent.com/u/30220658?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/LLjiahai",

"html_url": "https://github.com/LLjiahai",

"followers_url": "https://api.github.com/users/LLjiahai/followers",

"following_url": "https://api.github.com/users/LLjiahai/following{/other_user}",

"gists_url": "https://api.github.com/users/LLjiahai/gists{/gist_id}",

"starred_url": "https://api.github.com/users/LLjiahai/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/LLjiahai/subscriptions",

"organizations_url": "https://api.github.com/users/LLjiahai/orgs",

"repos_url": "https://api.github.com/users/LLjiahai/repos",

"events_url": "https://api.github.com/users/LLjiahai/events{/privacy}",

"received_events_url": "https://api.github.com/users/LLjiahai/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 2021-12-14T13:41:15 | 2021-12-14T14:34:46 | 2021-12-14T14:34:46 | NONE | null | ps: field_a = peewee .CharField(max_length=255) to mysql column_type varchar(255) | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2503/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2503/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2502 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2502/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2502/comments | https://api.github.com/repos/coleifer/peewee/issues/2502/events | https://github.com/coleifer/peewee/issues/2502 | 1,077,359,121 | I_kwDOAA7yGM5ANzIR | 2,502 | AttributeError: 'Expression' object has no attribute 'execute' | {

"login": "thisnugroho",

"id": 49790011,

"node_id": "MDQ6VXNlcjQ5NzkwMDEx",

"avatar_url": "https://avatars.githubusercontent.com/u/49790011?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thisnugroho",

"html_url": "https://github.com/thisnugroho",

"followers_url": "https://api.github.com/users/thisnugroho/followers",

"following_url": "https://api.github.com/users/thisnugroho/following{/other_user}",

"gists_url": "https://api.github.com/users/thisnugroho/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thisnugroho/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thisnugroho/subscriptions",

"organizations_url": "https://api.github.com/users/thisnugroho/orgs",

"repos_url": "https://api.github.com/users/thisnugroho/repos",

"events_url": "https://api.github.com/users/thisnugroho/events{/privacy}",

"received_events_url": "https://api.github.com/users/thisnugroho/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

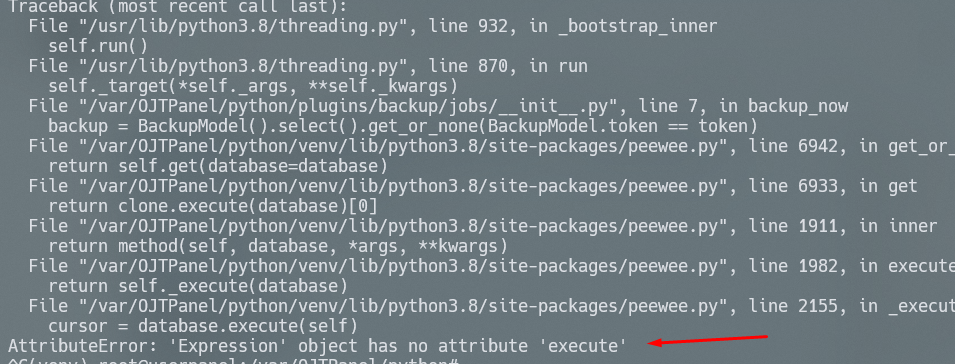

"The `get_or_none()`, when used on a select query, does not accept any parameters -- though the error message is unhelpful here.\r\n\r\nIn your code you are:\r\n\r\n1. `SomeModel.select()` which creates a `ModelSelect` query\r\n2. Call the `get_or_none()` method on the `ModelSelect` query\r\n\r\nI think what you intended to do is this:\r\n\r\n```python\r\n\r\nSomeModel.select().where(SomeModel.field == value).get_or_none()\r\n```\r\n\r\nAlternatively, you can use the *shortcut* method on model, which *does* accept an expression:\r\n\r\n```python\r\n\r\nSomeModel.get_or_none(SomeModel.field == value)\r\n```\r\n\r\n* http://docs.peewee-orm.com/en/latest/peewee/api.html#Model.get_or_none"

] | 2021-12-11T02:11:25 | 2021-12-11T12:25:38 | 2021-12-11T12:25:38 | NONE | null | It's appear after upgrading from version 3.14.4 to peewee latest version 3.14.8,

i decide to upgrade because i want to using `Model.get_or_none()` function, it's not available in previous version.

and the error appear i'm trying to running this:

```python

SomeModel().select().get_or_none(SomeModel.field== value)

```

my configuration looklike this:

```python

db = MySQLDatabase(

env("DB_DATABASE"),

user=env("DB_USERNAME"),

password=env("DB_PASSWORD"),

host=env("DB_HOST"),

port=int(env("DB_PORT")),

)

```

is there something that i need to adjust ? or i'm just missing something.

screenshoot:

details

OS:Ubuntu 20.04

MYSQL Version = 8.0.27 | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2502/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2502/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2501 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2501/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2501/comments | https://api.github.com/repos/coleifer/peewee/issues/2501/events | https://github.com/coleifer/peewee/pull/2501 | 1,076,661,651 | PR_kwDOAA7yGM4vq1ly | 2,501 | Allow to use peewee.SQL from `from_` | {

"login": "d0d0",

"id": 553292,

"node_id": "MDQ6VXNlcjU1MzI5Mg==",

"avatar_url": "https://avatars.githubusercontent.com/u/553292?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/d0d0",

"html_url": "https://github.com/d0d0",

"followers_url": "https://api.github.com/users/d0d0/followers",

"following_url": "https://api.github.com/users/d0d0/following{/other_user}",

"gists_url": "https://api.github.com/users/d0d0/gists{/gist_id}",

"starred_url": "https://api.github.com/users/d0d0/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/d0d0/subscriptions",

"organizations_url": "https://api.github.com/users/d0d0/orgs",

"repos_url": "https://api.github.com/users/d0d0/repos",

"events_url": "https://api.github.com/users/d0d0/events{/privacy}",

"received_events_url": "https://api.github.com/users/d0d0/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"I don't really like this solution. The join will not correctly set-up the model graph, so the best solution in my opinion is to write:\r\n\r\n```python\r\nq = User.select(User, Tweet).from_(SQL(f'`users` as `t1` USE INDEX(`user_id`) ')).join(Tweet)\r\nfor x in q.objects():\r\n # assume username is on User table, and content is on Tweet table.\r\n print(x.username, x.content)\r\n```"

] | 2021-12-10T09:58:34 | 2021-12-10T17:46:55 | 2021-12-10T17:46:54 | NONE | null | **Problem:**

Tried to apply custom SQL to `from_` function from this example https://github.com/coleifer/peewee/issues/1289#issuecomment-307128658 However in my query, I use some joins.

Here is example

```python

q = User.select(User, Tweet).from_(SQL(f'`users` as `t1` USE INDEX(`user_id`) ')).join(Tweet)

for x in q:

print(x)

```

But without fix, exception is thrown

```

Traceback (most recent call last):

File "JetBrains\PyCharm2021.2\scratches\scratch_71.py", line 27, in <module>

for file in q:

File "venv\lib\site-packages\peewee.py", line 4408, in next

self.cursor_wrapper.iterate()

File "venv\lib\site-packages\peewee.py", line 4325, in iterate

self.initialize() # Lazy initialization.

File "venv\lib\site-packages\peewee.py", line 7587, in initialize

if curr not in self.joins:

TypeError: unhashable type: 'SQL'

```

**Solution**

When initializing ModelCursorWrapper skip SQL instances | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2501/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2501/timeline | null | null | false | {

"url": "https://api.github.com/repos/coleifer/peewee/pulls/2501",

"html_url": "https://github.com/coleifer/peewee/pull/2501",

"diff_url": "https://github.com/coleifer/peewee/pull/2501.diff",

"patch_url": "https://github.com/coleifer/peewee/pull/2501.patch",

"merged_at": null

} |

https://api.github.com/repos/coleifer/peewee/issues/2500 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2500/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2500/comments | https://api.github.com/repos/coleifer/peewee/issues/2500/events | https://github.com/coleifer/peewee/issues/2500 | 1,074,152,643 | I_kwDOAA7yGM5ABkTD | 2,500 | Query "where not in empty list" does not work as expected | {

"login": "skontar",

"id": 10827040,

"node_id": "MDQ6VXNlcjEwODI3MDQw",

"avatar_url": "https://avatars.githubusercontent.com/u/10827040?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/skontar",

"html_url": "https://github.com/skontar",

"followers_url": "https://api.github.com/users/skontar/followers",

"following_url": "https://api.github.com/users/skontar/following{/other_user}",

"gists_url": "https://api.github.com/users/skontar/gists{/gist_id}",

"starred_url": "https://api.github.com/users/skontar/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/skontar/subscriptions",

"organizations_url": "https://api.github.com/users/skontar/orgs",

"repos_url": "https://api.github.com/users/skontar/repos",

"events_url": "https://api.github.com/users/skontar/events{/privacy}",

"received_events_url": "https://api.github.com/users/skontar/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"The problem is python operator precedence. The following work as expected:\r\n\r\n```python\r\n\r\nPerson.select().where(Person.name.not_in([]))\r\n\r\nPerson.select().where(~(Person.name << []))\r\n\r\nPerson.select().where(~(Person.name.in_([])))\r\n```",

"Thanks for reply. I see now, I got confused by SQL operator precedence. \r\nWould it not be better to translate to `WHERE (NOT \"t1\".\"name\" IN ())` for empty list, similarly as it does for non-empty list?\r\n\r\nI understand the reasoning and the problem, but I think this can potentially mask the issue until the list becomes empty one day and return unexpected results."

] | 2021-12-08T08:43:36 | 2021-12-08T15:59:19 | 2021-12-08T15:33:57 | NONE | null | I was using equivalent of `.where(~Person.name << some_list)` and it behaves unexpectedly when the list is empty. See below example.

```python

import peewee

from peewee import *

db = SqliteDatabase(':memory:')

class Person(Model):

name = CharField()

class Meta:

database = db

db.create_tables([Person])

Person(name="Alice").save()

Person(name="Bob").save()

Person(name="Cliff").save()

q = Person.select().where(Person.name << ['Bob'])

print(q)

# SELECT "t1"."id", "t1"."name" FROM "person" AS "t1" WHERE ("t1"."name" IN ('Bob'))

print(list(q.tuples()))

# [(2, 'Bob')]

q = Person.select().where(~Person.name << ['Bob'])

print(q)

# SELECT "t1"."id", "t1"."name" FROM "person" AS "t1" WHERE (NOT "t1"."name" IN ('Bob'))

print(list(q.tuples()))

# [(1, 'Alice'), (3, 'Cliff')]

q = Person.select().where(Person.name << [])

print(q)

# SELECT "t1"."id", "t1"."name" FROM "person" AS "t1" WHERE (0 = 1)

print(list(q.tuples()))

# []

q = Person.select().where(~Person.name << [])

print(q)

# SELECT "t1"."id", "t1"."name" FROM "person" AS "t1" WHERE (0 = 1)

# Expected: SELECT "t1"."id", "t1"."name" FROM "person" AS "t1" WHERE (1 = 1) ??? Maybe?

print(list(q.tuples()))

# []

# Expected: [(1, 'Alice'), (2, 'Bob'), (3, 'Cliff')]

print(peewee.__version__)

# 3.14.8

print(peewee.sqlite3.sqlite_version)

# 3.34.1

``` | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2500/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2500/timeline | null | completed | null | null |

https://api.github.com/repos/coleifer/peewee/issues/2499 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2499/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2499/comments | https://api.github.com/repos/coleifer/peewee/issues/2499/events | https://github.com/coleifer/peewee/pull/2499 | 1,073,938,441 | PR_kwDOAA7yGM4vh8NM | 2,499 | Add BlackSheep integration | {

"login": "q0w",

"id": 43147888,

"node_id": "MDQ6VXNlcjQzMTQ3ODg4",

"avatar_url": "https://avatars.githubusercontent.com/u/43147888?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/q0w",

"html_url": "https://github.com/q0w",

"followers_url": "https://api.github.com/users/q0w/followers",

"following_url": "https://api.github.com/users/q0w/following{/other_user}",

"gists_url": "https://api.github.com/users/q0w/gists{/gist_id}",

"starred_url": "https://api.github.com/users/q0w/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/q0w/subscriptions",

"organizations_url": "https://api.github.com/users/q0w/orgs",

"repos_url": "https://api.github.com/users/q0w/repos",

"events_url": "https://api.github.com/users/q0w/events{/privacy}",

"received_events_url": "https://api.github.com/users/q0w/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"I really can't keep up with these new async-framework-of-the-day things. Additionally, connection handling doesn't work the way you might expect with async, so this is probably incorrect anyways."

] | 2021-12-08T02:20:08 | 2021-12-09T08:27:38 | 2021-12-08T23:43:29 | NONE | null | null | {

"url": "https://api.github.com/repos/coleifer/peewee/issues/2499/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/coleifer/peewee/issues/2499/timeline | null | null | false | {

"url": "https://api.github.com/repos/coleifer/peewee/pulls/2499",

"html_url": "https://github.com/coleifer/peewee/pull/2499",

"diff_url": "https://github.com/coleifer/peewee/pull/2499.diff",

"patch_url": "https://github.com/coleifer/peewee/pull/2499.patch",

"merged_at": null

} |

https://api.github.com/repos/coleifer/peewee/issues/2498 | https://api.github.com/repos/coleifer/peewee | https://api.github.com/repos/coleifer/peewee/issues/2498/labels{/name} | https://api.github.com/repos/coleifer/peewee/issues/2498/comments | https://api.github.com/repos/coleifer/peewee/issues/2498/events | https://github.com/coleifer/peewee/issues/2498 | 1,071,183,911 | I_kwDOAA7yGM4_2Pgn | 2,498 | Peewee 2 does not work with python 3.10 | {

"login": "ribx",

"id": 4598953,

"node_id": "MDQ6VXNlcjQ1OTg5NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4598953?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ribx",

"html_url": "https://github.com/ribx",

"followers_url": "https://api.github.com/users/ribx/followers",

"following_url": "https://api.github.com/users/ribx/following{/other_user}",

"gists_url": "https://api.github.com/users/ribx/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ribx/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ribx/subscriptions",

"organizations_url": "https://api.github.com/users/ribx/orgs",

"repos_url": "https://api.github.com/users/ribx/repos",

"events_url": "https://api.github.com/users/ribx/events{/privacy}",

"received_events_url": "https://api.github.com/users/ribx/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"That version is not receiving patches, unfortunately. Your best bet is to fork it and patch it. You won't lose sync with upstream if you stay on 2.x."

] | 2021-12-04T11:06:22 | 2021-12-04T13:11:43 | 2021-12-04T13:11:42 | NONE | null | The problem is, that the exports of the `collections` modules were moved to `collections.abc`:

```

docker run --rm -ti python:3.10 sh -c 'pip install cython &>/dev/null && pip install peewee==2.10.2'

....

Collecting peewee==2.10.2

Downloading peewee-2.10.2.tar.gz (516 kB)

|████████████████████████████████| 516 kB 3.7 MB/s

ERROR: Command errored out with exit status 1: