| Unnamed: 0 (int64, 0 to 832k) | id (float64, 2.49B to 32.1B) | type (string, 1 class) | created_at (string, length 19) | repo (string, length 4 to 112) | repo_url (string, length 33 to 141) | action (string, 3 classes) | title (string, length 1 to 1.02k) | labels (string, length 4 to 1.54k) | body (string, length 1 to 262k) | index (string, 17 classes) | text_combine (string, length 95 to 262k) | label (string, 2 classes) | text (string, length 96 to 252k) | binary_label (int64, 0 to 1) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

204,841 | 7,091,419,575 | IssuesEvent | 2018-01-12 13:00:37 | cilium/cilium | https://api.github.com/repos/cilium/cilium | closed | Cilium Monitor Not Working | area/monitor kind/bug need-more-info priority/high | current cilium stable, minikube version: v0.22.3

```

root@minikube:/# cilium version

Cilium 1.0.0-rc2 2f33f7a Wed, 6 Dec 2017 15:22:37 -0800 go version go1.9 linux/amd64

```

Cilium status is fine:

```

root@minikube:/# cilium status

KVStore: Ok Etcd: http://127.0.0.1:2379 - (Leader) 3.1.5

ContainerRuntime: Ok

Kubernetes: Ok OK

Kubernetes APIs: ["core/v1::Node", "CustomResourceDefinition", "cilium/v2::CiliumNetworkPolicy", "extensions/v1beta1::NetworkPolicy", "networking.k8s.io/v1::NetworkPolicy", "core/v1::Service", "core/v1::Endpoint", "extensions/v1beta1::Ingress"]

Cilium: Ok OK

NodeMonitor: Listening for events on 2 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

Known cluster nodes:

minikube (localhost):

Primary Address: 192.168.99.100

Type: InternalIP

AllocRange: 10.15.0.0/16

```

```

root@minikube:/# cilium monitor

Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

Error: unable to connect to monitor dial unix /var/run/cilium/monitor.sock: connect: connection refused

```

There is no gops output:

```

root@minikube:/# gops

1006 701 gops go1.9 /usr/local/bin/gops

255 1 cilium-node-monitor go1.9 /usr/bin/cilium-node-monitor

262 1 cilium-health * go1.9 /usr/bin/cilium-health

1 0 cilium-agent * go1.9 /usr/bin/cilium-agent

root@minikube:/# gops stack 255

couldn't get port by PID: dial tcp 127.0.0.1:0: getsockopt: connection refused

```

I was told there are no logs for cilium-node-monitor, but I did confirm via "ps" that it was running:

```

root@minikube:/# ps -Af | grep cilium

root 1 0 0 01:26 ? 00:00:02 cilium-agent --debug=false -t vxlan --kvstore etcd --kvstore-opt etcd.config=/var/lib/etcd-config/etcd.config --disable-ipv4=false

root 255 1 0 01:27 ? 00:00:00 cilium-node-monitor

root 262 1 0 01:27 ? 00:00:01 cilium-health -d

root 908 701 0 01:33 ? 00:00:00 grep cilium

``` | 1.0 | Cilium Monitor Not Working - current cilium stable, minikube version: v0.22.3

```

root@minikube:/# cilium version

Cilium 1.0.0-rc2 2f33f7a Wed, 6 Dec 2017 15:22:37 -0800 go version go1.9 linux/amd64

```

Cilium status is fine:

```

root@minikube:/# cilium status

KVStore: Ok Etcd: http://127.0.0.1:2379 - (Leader) 3.1.5

ContainerRuntime: Ok

Kubernetes: Ok OK

Kubernetes APIs: ["core/v1::Node", "CustomResourceDefinition", "cilium/v2::CiliumNetworkPolicy", "extensions/v1beta1::NetworkPolicy", "networking.k8s.io/v1::NetworkPolicy", "core/v1::Service", "core/v1::Endpoint", "extensions/v1beta1::Ingress"]

Cilium: Ok OK

NodeMonitor: Listening for events on 2 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

Known cluster nodes:

minikube (localhost):

Primary Address: 192.168.99.100

Type: InternalIP

AllocRange: 10.15.0.0/16

```

```

root@minikube:/# cilium monitor

Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

Error: unable to connect to monitor dial unix /var/run/cilium/monitor.sock: connect: connection refused

```

There is no gops output:

```

root@minikube:/# gops

1006 701 gops go1.9 /usr/local/bin/gops

255 1 cilium-node-monitor go1.9 /usr/bin/cilium-node-monitor

262 1 cilium-health * go1.9 /usr/bin/cilium-health

1 0 cilium-agent * go1.9 /usr/bin/cilium-agent

root@minikube:/# gops stack 255

couldn't get port by PID: dial tcp 127.0.0.1:0: getsockopt: connection refused

```

I was told there are no logs for cilium-node-monitor, but I did confirm via "ps" that it was running:

```

root@minikube:/# ps -Af | grep cilium

root 1 0 0 01:26 ? 00:00:02 cilium-agent --debug=false -t vxlan --kvstore etcd --kvstore-opt etcd.config=/var/lib/etcd-config/etcd.config --disable-ipv4=false

root 255 1 0 01:27 ? 00:00:00 cilium-node-monitor

root 262 1 0 01:27 ? 00:00:01 cilium-health -d

root 908 701 0 01:33 ? 00:00:00 grep cilium

``` | non_test | cilium monitor not working current cilium stable minikube version root minikube cilium version cilium wed dec go version linux cilium status is fine root minikube cilium status kvstore ok etcd leader containerruntime ok kubernetes ok ok kubernetes apis cilium ok ok nodemonitor listening for events on cpus with of shared memory cilium health daemon ok known cluster nodes minikube localhost primary address type internalip allocrange root minikube cilium monitor listening for events on cpus with of shared memory press ctrl c to quit error unable to connect to monitor dial unix var run cilium monitor sock connect connection refused there is not gops output root minikube gops gops usr local bin gops cilium node monitor usr bin cilium node monitor cilium health usr bin cilium health cilium agent usr bin cilium agent root minikube gops stack couldn t get port by pid dial tcp getsockopt connection refused i was told there are no logs for cilium node monitor but i did confirm via ps that it was running root minikube ps af grep cilium root cilium agent debug false t vxlan kvstore etcd kvstore opt etcd config var lib etcd config etcd config disable false root cilium node monitor root cilium health d root grep cilium | 0 |
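The `connection refused` error in the record above means the socket file exists but nothing is accepting connections on it. A minimal sketch of how that condition could be probed from Python follows; the helper name `unix_socket_accepts` is an illustration and not part of Cilium, and the only detail taken from the report is the socket path.

```python
import socket


def unix_socket_accepts(path: str, timeout: float = 1.0) -> bool:
    """Return True if a process is listening on the Unix stream socket at `path`."""
    sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    try:
        sock.connect(path)
        return True
    except OSError:
        # Covers ConnectionRefusedError (socket file present, no listener)
        # and FileNotFoundError (socket file missing).
        return False
    finally:
        sock.close()


if __name__ == "__main__":
    # Path copied from the error message in the report.
    print(unix_socket_accepts("/var/run/cilium/monitor.sock"))
```

A `False` result while `ps` still shows `cilium-node-monitor` running would be consistent with the symptom described in the report, although it does not explain why the listener is missing.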

8,394 | 6,535,815,873 | IssuesEvent | 2017-08-31 15:47:22 | Patternslib/Patterns | https://api.github.com/repos/Patternslib/Patterns | closed | Bumper update interval | Pattern bumper Performance | Bumpers move rather choppy when the user scrolls. This can be solved with some CSS tricks with position sticky. Except position sticky is only applicable for certain cases and it's taken out recent versions of Chrome again, setting us back to square one.

Is the smoothness of the bumper related to the interval in which it measures/updates the position? If so could we increase this interval so that bumpers become smooth in all browsers?

| True | Bumper update interval - Bumpers move rather choppy when the user scrolls. This can be solved with some CSS tricks with position sticky. Except position sticky is only applicable for certain cases and it's taken out recent versions of Chrome again, setting us back to square one.

Is the smoothness of the bumper related to the interval in which it measures/updates the position? If so could we increase this interval so that bumpers become smooth in all browsers?

| non_test | bumper update interval bumpers move rather choppy when the user scrolls this can be solved with some css tricks with position sticky except position sticky is only applicable for certain cases and it s taken out recent versions of chrome again setting us back to square one is the smoothness of the bumper related to the interval in which it measures updates the position if so could we increase this interval so that bumpers become smooth in all browsers | 0 |

84,708 | 16,539,217,454 | IssuesEvent | 2021-05-27 14:55:07 | HansenBerlin/altenheim-kalender | https://api.github.com/repos/HansenBerlin/altenheim-kalender | opened | POC calendar import | CODE EPIC | Initially just as a POC (a single class is enough for now), the possibility of importing calendars (Google etc.) should be checked. The framework may already provide this; check the docs or ask @dannyneup, who has looked into it. | 1.0 | POC calendar import - Initially just as a POC (a single class is enough for now), the possibility of importing calendars (Google etc.) should be checked. The framework may already provide this; check the docs or ask @dannyneup, who has looked into it. | non_test | poc calendar import initially just as a poc a single class is enough for now the possibility of importing calendars google etc should be checked the framework may already provide this check the docs or ask dannyneup who has looked into it | 0 |

323,116 | 27,695,754,218 | IssuesEvent | 2023-03-14 01:58:12 | owncast/owncast | https://api.github.com/repos/owncast/owncast | closed | Flaky test: Browser notification modal content | backlog tests | ### Share your bug report, feature request, or comment.

The "Notifications not supported" message gets marked as changed in UI tests even when it has not changed.

Example:

Story: https://owncast.online/components/?path=%2Fstory%2Fowncast-modals-browser-notifications--basic | 1.0 | Flaky test: Browser notification modal content - ### Share your bug report, feature request, or comment.

The "Notifications not supported" message gets marked as changed in UI tests even when it has not changed.

Example:

Story: https://owncast.online/components/?path=%2Fstory%2Fowncast-modals-browser-notifications--basic | test | flaky test browser notification modal content share your bug report feature request or comment the notifications not supported message gets marked as changed in ui tests even when it has not changed example story | 1 |

50,045 | 6,050,845,033 | IssuesEvent | 2017-06-12 22:02:49 | rancher/rancher | https://api.github.com/repos/rancher/rancher | closed | Failed host doesn't have delete in detail's menu | area/host area/ui kind/bug status/resolved status/to-test | **Rancher versions:** 6/8

**Steps to Reproduce:**

1. Create a host that will fail

2. Click on Host name after it's error

3. Click on menu

**Results:** No actions available. Should be same as main host page menu. Which has machine config, delete and view in api

| 1.0 | Failed host doesn't have delete in detail's menu - **Rancher versions:** 6/8

**Steps to Reproduce:**

1. Create a host that will fail

2. Click on Host name after it's error

3. Click on menu

**Results:** No actions available. Should be same as main host page menu. Which has machine config, delete and view in api

| test | failed host doesn t have delete in detail s menu rancher versions steps to reproduce create a host that will fail click on host name after it s error click on menu results no actions available should be same as main host page menu which has machine config delete and view in api | 1 |

816,838 | 30,614,082,340 | IssuesEvent | 2023-07-24 00:10:42 | umatt-ece/new-members-package | https://api.github.com/repos/umatt-ece/new-members-package | opened | GitHub Navigation | high priority | # GitHub Navigation

## Description

Create a GitHub Navigation tutorial to introduce members who may not be familiar. Additionally, specify team policies for managing repositories, teams, projects, and issues.

## Tasks

- [ ] Create GitHub.md tutorial.

## Linked Issues

## Additional Notes

| 1.0 | GitHub Navigation - # GitHub Navigation

## Description

Create a GitHub Navigation tutorial to introduce members who may not be familiar. Additionally, specify team policies for managing repositories, teams, projects, and issues.

## Tasks

- [ ] Create GitHub.md tutorial.

## Linked Issues

## Additional Notes

| non_test | github navigation github navigation description create a github navigation tutorial to introduce members who may not be familiar additionally specify team policies for managing repositories teams projects and issues tasks create github md tutorial linked issues additional notes | 0 |

165,354 | 13,999,597,077 | IssuesEvent | 2020-10-28 11:03:48 | crazycapivara/mapboxer | https://api.github.com/repos/crazycapivara/mapboxer | closed | Move api example to examples folder | documentation | Move all api examples from `inst/api-reference` to `examples/api-reference` | 1.0 | Move api example to examples folder - Move all api examples from `inst/api-reference` to `examples/api-reference` | non_test | move api example to examples folder move all api examples from inst api reference to examples api reference | 0 |

68,207 | 14,914,580,742 | IssuesEvent | 2021-01-22 15:36:12 | AlexRogalskiy/object-mappers-playground | https://api.github.com/repos/AlexRogalskiy/object-mappers-playground | opened | CVE-2013-4002 (High) detected in xercesImpl-2.9.1.jar | security vulnerability | ## CVE-2013-4002 - High Severity Vulnerability

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/vulnerability_details.png' width=19 height=20> Vulnerable Library - <b>xercesImpl-2.9.1.jar</b></p></summary>

<p>Xerces2 is the next generation of high performance, fully compliant XML parsers in the

Apache Xerces family. This new version of Xerces introduces the Xerces Native Interface (XNI),

a complete framework for building parser components and configurations that is extremely

modular and easy to program.</p>

<p>Path to dependency file: object-mappers-playground/modules/objectmappers-all/pom.xml</p>

<p>Path to vulnerable library: /home/wss-scanner/.m2/repository/xerces/xercesImpl/2.9.1/xercesImpl-2.9.1.jar,/home/wss-scanner/.m2/repository/xerces/xercesImpl/2.9.1/xercesImpl-2.9.1.jar</p>

<p>

Dependency Hierarchy:

- milyn-smooks-all-1.7.1.jar (Root Library)

- milyn-commons-1.7.1.jar

- :x: **xercesImpl-2.9.1.jar** (Vulnerable Library)

<p>Found in HEAD commit: <a href="https://github.com/AlexRogalskiy/object-mappers-playground/commit/ccc3fe199db8575ce11d67be41855d33c5e718ab">ccc3fe199db8575ce11d67be41855d33c5e718ab</a></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> Vulnerability Details</summary>

<p>

XMLscanner.java in Apache Xerces2 Java Parser before 2.12.0, as used in the Java Runtime Environment (JRE) in IBM Java 5.0 before 5.0 SR16-FP3, 6 before 6 SR14, 6.0.1 before 6.0.1 SR6, and 7 before 7 SR5 as well as Oracle Java SE 7u40 and earlier, Java SE 6u60 and earlier, Java SE 5.0u51 and earlier, JRockit R28.2.8 and earlier, JRockit R27.7.6 and earlier, Java SE Embedded 7u40 and earlier, and possibly other products allows remote attackers to cause a denial of service via vectors related to XML attribute names.

<p>Publish Date: 2013-07-23

<p>URL: <a href=https://vuln.whitesourcesoftware.com/vulnerability/CVE-2013-4002>CVE-2013-4002</a></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/cvss3.png' width=19 height=20> CVSS 2 Score Details (<b>7.1</b>)</summary>

<p>

Base Score Metrics not available</p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/suggested_fix.png' width=19 height=20> Suggested Fix</summary>

<p>

<p>Type: Upgrade version</p>

<p>Origin: <a href="https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2013-4002">https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2013-4002</a></p>

<p>Release Date: 2013-07-23</p>

<p>Fix Resolution: xerces:xercesImpl:Xerces-J_2_12_0</p>

</p>

</details>

<p></p>

***

Step up your Open Source Security Game with WhiteSource [here](https://www.whitesourcesoftware.com/full_solution_bolt_github) | True | CVE-2013-4002 (High) detected in xercesImpl-2.9.1.jar - ## CVE-2013-4002 - High Severity Vulnerability

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/vulnerability_details.png' width=19 height=20> Vulnerable Library - <b>xercesImpl-2.9.1.jar</b></p></summary>

<p>Xerces2 is the next generation of high performance, fully compliant XML parsers in the

Apache Xerces family. This new version of Xerces introduces the Xerces Native Interface (XNI),

a complete framework for building parser components and configurations that is extremely

modular and easy to program.</p>

<p>Path to dependency file: object-mappers-playground/modules/objectmappers-all/pom.xml</p>

<p>Path to vulnerable library: /home/wss-scanner/.m2/repository/xerces/xercesImpl/2.9.1/xercesImpl-2.9.1.jar,/home/wss-scanner/.m2/repository/xerces/xercesImpl/2.9.1/xercesImpl-2.9.1.jar</p>

<p>

Dependency Hierarchy:

- milyn-smooks-all-1.7.1.jar (Root Library)

- milyn-commons-1.7.1.jar

- :x: **xercesImpl-2.9.1.jar** (Vulnerable Library)

<p>Found in HEAD commit: <a href="https://github.com/AlexRogalskiy/object-mappers-playground/commit/ccc3fe199db8575ce11d67be41855d33c5e718ab">ccc3fe199db8575ce11d67be41855d33c5e718ab</a></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> Vulnerability Details</summary>

<p>

XMLscanner.java in Apache Xerces2 Java Parser before 2.12.0, as used in the Java Runtime Environment (JRE) in IBM Java 5.0 before 5.0 SR16-FP3, 6 before 6 SR14, 6.0.1 before 6.0.1 SR6, and 7 before 7 SR5 as well as Oracle Java SE 7u40 and earlier, Java SE 6u60 and earlier, Java SE 5.0u51 and earlier, JRockit R28.2.8 and earlier, JRockit R27.7.6 and earlier, Java SE Embedded 7u40 and earlier, and possibly other products allows remote attackers to cause a denial of service via vectors related to XML attribute names.

<p>Publish Date: 2013-07-23

<p>URL: <a href=https://vuln.whitesourcesoftware.com/vulnerability/CVE-2013-4002>CVE-2013-4002</a></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/cvss3.png' width=19 height=20> CVSS 2 Score Details (<b>7.1</b>)</summary>

<p>

Base Score Metrics not available</p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/suggested_fix.png' width=19 height=20> Suggested Fix</summary>

<p>

<p>Type: Upgrade version</p>

<p>Origin: <a href="https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2013-4002">https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2013-4002</a></p>

<p>Release Date: 2013-07-23</p>

<p>Fix Resolution: xerces:xercesImpl:Xerces-J_2_12_0</p>

</p>

</details>

<p></p>

***

Step up your Open Source Security Game with WhiteSource [here](https://www.whitesourcesoftware.com/full_solution_bolt_github) | non_test | cve high detected in xercesimpl jar cve high severity vulnerability vulnerable library xercesimpl jar is the next generation of high performance fully compliant xml parsers in the apache xerces family this new version of xerces introduces the xerces native interface xni a complete framework for building parser components and configurations that is extremely modular and easy to program path to dependency file object mappers playground modules objectmappers all pom xml path to vulnerable library home wss scanner repository xerces xercesimpl xercesimpl jar home wss scanner repository xerces xercesimpl xercesimpl jar dependency hierarchy milyn smooks all jar root library milyn commons jar x xercesimpl jar vulnerable library found in head commit a href vulnerability details xmlscanner java in apache java parser before as used in the java runtime environment jre in ibm java before before before and before as well as oracle java se and earlier java se and earlier java se and earlier jrockit and earlier jrockit and earlier java se embedded and earlier and possibly other products allows remote attackers to cause a denial of service via vectors related to xml attribute names publish date url a href cvss score details base score metrics not available suggested fix type upgrade version origin a href release date fix resolution xerces xercesimpl xerces j step up your open source security game with whitesource | 0 |
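The advisory above says versions before 2.12.0 are affected, and the detected jar is 2.9.1. A small sketch of that comparison follows, assuming plain dotted numeric version strings; the function names are made up for illustration. Comparing the raw strings would give the wrong answer here, because `"2.9.1" < "2.12.0"` is false lexicographically.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '2.9.1' into (2, 9, 1)."""
    return tuple(int(part) for part in text.split("."))


# First fixed release according to the advisory text.
FIXED_IN = parse_version("2.12.0")


def is_affected(version: str) -> bool:
    """Return True if `version` is older than the first fixed release."""
    return parse_version(version) < FIXED_IN


if __name__ == "__main__":
    print(is_affected("2.9.1"))
```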

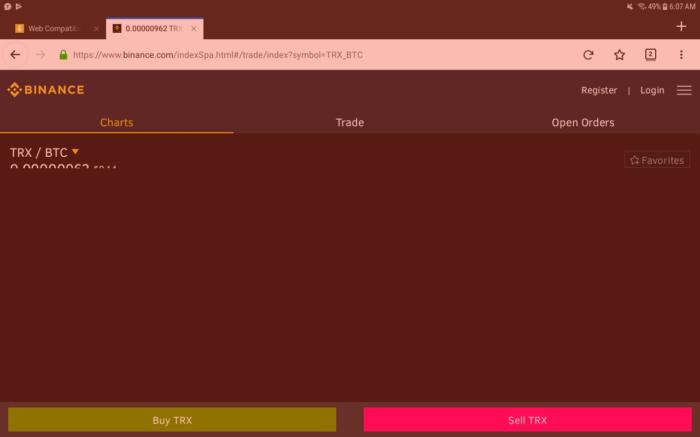

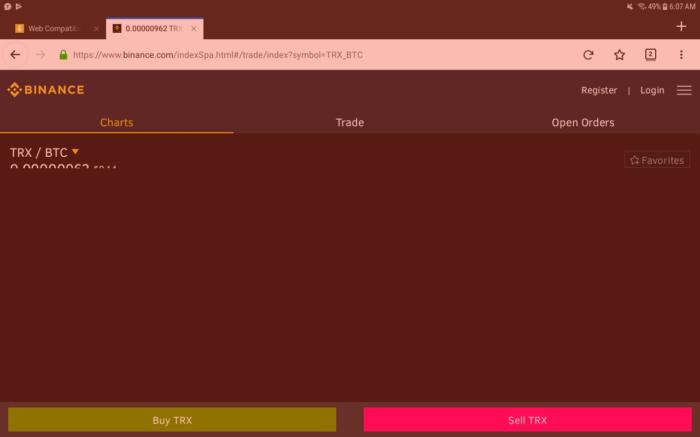

252,363 | 8,035,026,341 | IssuesEvent | 2018-07-30 01:34:16 | webcompat/web-bugs | https://api.github.com/repos/webcompat/web-bugs | closed | www.binance.com - site is not usable | browser-firefox-mobile-tablet priority-normal | <!-- @browser: Firefox Mobile (Tablet) 57.0 -->

<!-- @ua_header: Mozilla/5.0 (Android 7.1.1; Tablet; rv:57.0) Gecko/57.0 Firefox/57.0 -->

<!-- @reported_with: web -->

**URL**: https://www.binance.com/indexSpa.html#/trade/index?symbol=TRX_BTC

**Browser / Version**: Firefox Mobile (Tablet) 57.0

**Operating System**: Android 7.1.1

**Tested Another Browser**: Yes

**Problem type**: Site is not usable

**Description**: doesn't render properly

**Steps to Reproduce**:

1. Go to binance.com

2. Click on any coin

3. Graphs fail to render properly

Works fine on latest Chrome for Android

[](https://webcompat.com/uploads/2018/1/3f89a31b-17c4-4460-a229-08bdbdf87bcd.jpeg)

_From [webcompat.com](https://webcompat.com/) with ❤️_ | 1.0 | www.binance.com - site is not usable - <!-- @browser: Firefox Mobile (Tablet) 57.0 -->

<!-- @ua_header: Mozilla/5.0 (Android 7.1.1; Tablet; rv:57.0) Gecko/57.0 Firefox/57.0 -->

<!-- @reported_with: web -->

**URL**: https://www.binance.com/indexSpa.html#/trade/index?symbol=TRX_BTC

**Browser / Version**: Firefox Mobile (Tablet) 57.0

**Operating System**: Android 7.1.1

**Tested Another Browser**: Yes

**Problem type**: Site is not usable

**Description**: doesn't render properly

**Steps to Reproduce**:

1. Go to binance.com

2. Click on any coin

3. Graphs fail to render properly

Works fine on latest Chrome for Android

[](https://webcompat.com/uploads/2018/1/3f89a31b-17c4-4460-a229-08bdbdf87bcd.jpeg)

_From [webcompat.com](https://webcompat.com/) with ❤️_ | non_test | site is not usable url browser version firefox mobile tablet operating system android tested another browser yes problem type site is not usable description doesn t render properly steps to reproduce go to binance com click on any coin graphs fail to render properly works fine on latest chrome for android from with ❤️ | 0 |

88,274 | 17,532,384,600 | IssuesEvent | 2021-08-12 00:16:22 | backdrop/backdrop-issues | https://api.github.com/repos/backdrop/backdrop-issues | closed | Get run-tests.sh script with profile cache running on newer environments | type - bug report status - has pull request pr - needs testing pr - needs code review | **Description of the bug**

Currently running tests via script run-tests.sh _with profile cache on_ doesn't work on newer environments, namely mysql 5.7+ or mariadb 10.2.2+.

These db versions with settings like listed below choke on the `alter table` to MyISAM in backdrop_web_test_case_cache.php.

Message:

`ERROR: SQLSTATE[42000]: Syntax error or access violation: 1071 Specified key was too long; max key length is 1000 bytes

`

**Steps To Reproduce**

Make sure your db is new enough (on Ubuntu Bionic, Debian Buster...) and has following variables set:

- innodb_large_prefix ON

- innodb_default_row_format dynamic

- innodb_file_per_table ON

- innodb_file_format Barracuda

Then download and install Backdrop (via install.sh or however).

Then go to the Backdrop root directory and try to run the tests with profile caching (param --cache):

`./core/scripts/run-tests.sh --url http://localhost/ --force --cache BlockTestCase`

**Actual behavior**

PDO Exception and no tests run at all.

**Expected behavior**

Tests running.

**Additional information**

I ran into this issue when trying to get Simpletests working with travisCI, but now found a way to reproduce.

Here's the [Zulipchat thread](https://backdrop.zulipchat.com/#narrow/stream/218635-Backdrop/topic/pdo.20when.20running.20tests) with my question.

Joseph Flatt provided a trick: setting `$test->prepareCache(TRUE)` in run-tests.sh bypasses the table conversions to MyISAM - and then the tests run without problem. But patching around in core is no real solution, of course.

**Please note:**

Variable innodb_large_prefix is deprecated in both, mysql and mariadb, and large prefixes will be the default setting after this variable has gone.

See details to the large prefix variable for [MySQL](https://dev.mysql.com/doc/refman/5.7/en/innodb-parameters.html#sysvar_innodb_large_prefix) and [MariaDB](https://mariadb.com/kb/en/innodb-system-variables/#innodb_large_prefix). | 1.0 | Get run-tests.sh script with profile cache running on newer environments - **Description of the bug**

Currently running tests via script run-tests.sh _with profile cache on_ doesn't work on newer environments, namely mysql 5.7+ or mariadb 10.2.2+.

These db versions with settings like listed below choke on the `alter table` to MyISAM in backdrop_web_test_case_cache.php.

Message:

`ERROR: SQLSTATE[42000]: Syntax error or access violation: 1071 Specified key was too long; max key length is 1000 bytes

`

**Steps To Reproduce**

Make sure your db is new enough (on Ubuntu Bionic, Debian Buster...) and has following variables set:

- innodb_large_prefix ON

- innodb_default_row_format dynamic

- innodb_file_per_table ON

- innodb_file_format Barracuda

Then download and install Backdrop (via install.sh or however).

Then go to the Backdrop root directory and try to run the tests with profile caching (param --cache):

`./core/scripts/run-tests.sh --url http://localhost/ --force --cache BlockTestCase`

**Actual behavior**

PDO Exception and no tests run at all.

**Expected behavior**

Tests running.

**Additional information**

I ran into this issue when trying to get Simpletests working with travisCI, but now found a way to reproduce.

Here's the [Zulipchat thread](https://backdrop.zulipchat.com/#narrow/stream/218635-Backdrop/topic/pdo.20when.20running.20tests) with my question.

Joseph Flatt provided a trick: setting `$test->prepareCache(TRUE)` in run-tests.sh bypasses the table conversions to MyISAM - and then the tests run without problem. But patching around in core is no real solution, of course.

**Please note:**

Variable innodb_large_prefix is deprecated in both, mysql and mariadb, and large prefixes will be the default setting after this variable has gone.

See details to the large prefix variable for [MySQL](https://dev.mysql.com/doc/refman/5.7/en/innodb-parameters.html#sysvar_innodb_large_prefix) and [MariaDB](https://mariadb.com/kb/en/innodb-system-variables/#innodb_large_prefix). | non_test | get run tests sh script with profile cache running on newer environments description of the bug currently running tests via script run tests sh with profile cache on doesn t work on newer environments namely mysql or mariadb these db versions with settings like listed below choke on the alter table to myisam in backdrop web test case cache php message error sqlstate syntax error or access violation specified key was too long max key length is bytes steps to reproduce make sure your db is new enough on ubuntu bionic debian buster and has following variables set innodb large prefix on innodb default row format dynamic innodb file per table on innodb file format barracuda then download and install backdrop via install sh or however then go to the backdrop root directory and try to run the tests with profile caching param cache core scripts run tests sh url force cache blocktestcase actual behavior pdo exception and no tests run at all expected behavior tests running additional information i ran into this issue when trying to get simpletests working with travisci but now found a way to reproduce here s the with my question joseph flatt provided a trick setting test preparecache true in run tests sh bypasses the table conversions to myisam and then the tests run without problem but patching around in core is no real solution of course please note variable innodb large prefix is deprecated in both mysql and mariadb and large prefixes will be the default setting after this variable has gone see details to the large prefix variable for and | 0 |
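The 1071 error in the record above comes down to byte arithmetic on the indexed columns. The sketch below is a rough approximation only: it assumes a `utf8mb4` character set (up to 4 bytes per character) and a hypothetical 255-character indexed column, neither of which is stated in the report, and it ignores per-column length bytes. The 1000-byte limit is the one quoted in the error message; 3072 bytes is the InnoDB index prefix limit with the DYNAMIC row format.

```python
MYISAM_MAX_KEY_BYTES = 1000  # limit quoted in the error message
INNODB_LARGE_PREFIX_BYTES = 3072  # InnoDB limit with DYNAMIC row format


def index_key_bytes(column_chars, bytes_per_char=4):
    """Approximate index key size for character columns of the given lengths."""
    return sum(column_chars) * bytes_per_char


def fits(column_chars, limit, bytes_per_char=4):
    """Return True if the approximate key size is within `limit` bytes."""
    return index_key_bytes(column_chars, bytes_per_char) <= limit


if __name__ == "__main__":
    key = [255]  # hypothetical single indexed column of 255 characters
    print(index_key_bytes(key))
    print(fits(key, MYISAM_MAX_KEY_BYTES))
    print(fits(key, INNODB_LARGE_PREFIX_BYTES))
```

Under these assumptions a key that is valid in InnoDB with large prefixes would exceed the MyISAM limit, which would match the failure on the `alter table` conversion.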

130,860 | 27,777,797,053 | IssuesEvent | 2023-03-16 18:30:27 | patternfly/pf-codemods | https://api.github.com/repos/patternfly/pf-codemods | closed | Tooltip - reference prop renamed to triggerRef | codemod | Follow up to breaking change PR https://github.com/patternfly/patternfly-react/pull/8733

Tooltip's reference prop was renamed to triggerRef

_Required actions:_

1. Build codemod

2. Build test

3. Update readme with description & example | 1.0 | Tooltip - reference prop renamed to triggerRef - Follow up to breaking change PR https://github.com/patternfly/patternfly-react/pull/8733

Tooltip's reference prop was renamed to triggerRef

_Required actions:_

1. Build codemod

2. Build test

3. Update readme with description & example | non_test | tooltip reference prop renamed to triggerref follow up to breaking change pr tooltip s reference prop was renamed to triggerref required actions build codemod build test update readme with description example | 0 |
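The rename described above can be illustrated with a text-level sketch. This is not how pf-codemods implements its rules (those operate on the parsed source); it is a simplified regular-expression stand-in, and it would miss a `reference` prop that appears after a prop value containing `>`.

```python
import re

# Match a `reference` prop inside an opening <Tooltip ...> tag.
_TOOLTIP_REFERENCE = re.compile(r"(<Tooltip\b[^>]*?)\breference(\s*=)")


def rename_tooltip_reference(source: str) -> str:
    """Rename Tooltip's `reference` prop to `triggerRef` in JSX source text."""
    return _TOOLTIP_REFERENCE.sub(r"\1triggerRef\2", source)


if __name__ == "__main__":
    before = '<Tooltip content="hi" reference={buttonRef} />'
    print(rename_tooltip_reference(before))
```

Other components that have a `reference` prop are left untouched, because the pattern only matches inside a `<Tooltip` opening tag.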

285,371 | 24,661,865,337 | IssuesEvent | 2022-10-18 07:22:42 | kartoza/ckanext-dalrrd-emc-dcpr | https://api.github.com/repos/kartoza/ckanext-dalrrd-emc-dcpr | opened | Do linked resources work via CSW? | test | Using QGIS MetaSearch, test that EMC records that have valid OGC resource links return those links in a valid way in the response, such that you can load them directly into QGIS.

Use existing records in the EMC that you've identified to have these links, else create your own testing records with valid links | 1.0 | Do linked resources work via CSW? - Using QGIS MetaSearch, test that EMC records that have valid OGC resource links return those links in a valid way in the response, such that you can load them directly into QGIS.

Use existing records in the EMC that you've identified to have these links, else create your own testing records with valid links | test | do linked resources work via csw using qgis metasearch test that emc records that have valid ogc resource links return those links in a valid way in the response such that you can load them directly into qgis use existing records in the emc that you ve identified to have these links else create your own testing records with valid links | 1 |

562,367 | 16,658,230,805 | IssuesEvent | 2021-06-05 22:58:30 | eclipse/lyo | https://api.github.com/repos/eclipse/lyo | closed | Bintray shutting down; need to check our POMs | Component: N/A (project-wide) Priority: Critical Type: Maintenance | https://news.ycombinator.com/item?id=26016505

I think Shaclex was the only dependency we fetched via Bintray. Need to migrate before May 1, better yet Feb 28. | 1.0 | Bintray shutting down; need to check our POMs - https://news.ycombinator.com/item?id=26016505

I think Shaclex was the only dependency we fetched via Bintray. Need to migrate before May 1, better yet Feb 28. | non_test | bintray shutting down need to check our poms i think shaclex was the only dependency we fetched via bintray need to migrate before may better yet feb | 0 |
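Checking the POMs for Bintray-hosted repositories, as the record above asks, could be sketched as follows. The helper name and the sample POM are invented for illustration and are not taken from the Lyo repositories; the sketch simply collects every `<url>` element whose text mentions `bintray.com`.

```python
import xml.etree.ElementTree as ET


def bintray_urls(pom_xml: str) -> list:
    """Return the text of all <url> elements in a POM that point at Bintray."""
    root = ET.fromstring(pom_xml)
    urls = []
    for element in root.iter():
        # Tags carry the Maven namespace, e.g. "{http://maven.apache.org/POM/4.0.0}url".
        local_name = element.tag.rsplit("}", 1)[-1]
        text = (element.text or "").strip()
        if local_name == "url" and "bintray.com" in text:
            urls.append(text)
    return urls


if __name__ == "__main__":
    # Made-up POM fragment with one Bintray repository and one other repository.
    sample = """
    <project xmlns="http://maven.apache.org/POM/4.0.0">
      <repositories>
        <repository><id>a</id><url>https://dl.bintray.com/example/maven</url></repository>
        <repository><id>b</id><url>https://repo.maven.apache.org/maven2</url></repository>
      </repositories>
    </project>
    """
    print(bintray_urls(sample))
```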

198,485 | 22,659,645,823 | IssuesEvent | 2022-07-02 01:11:04 | nvenkatesh1/SCA_test_JS | https://api.github.com/repos/nvenkatesh1/SCA_test_JS | closed | extract-text-webpack-plugin-1.0.1.tgz: 1 vulnerabilities (highest severity is: 7.8) - autoclosed | security vulnerability | <details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/vulnerability_details.png' width=19 height=20> Vulnerable Library - <b>extract-text-webpack-plugin-1.0.1.tgz</b></p></summary>

<p></p>

<p>Path to dependency file: /package.json</p>

<p>Path to vulnerable library: /node_modules/webpack/node_modules/async/package.json,/app/compilers/react-compiler/node_modules/async/package.json,/node_modules/istanbul/node_modules/async/package.json,/node_modules/extract-text-webpack-plugin/node_modules/async/package.json,/node_modules/handlebars/node_modules/async/package.json</p>

<p>

<p>Found in HEAD commit: <a href="https://github.com/nvenkatesh1/SCA_test_JS/commit/eb47eeefc02a252a76628fec10a3c26aacb34024">eb47eeefc02a252a76628fec10a3c26aacb34024</a></p></details>

## Vulnerabilities

| CVE | Severity | <img src='https://whitesource-resources.whitesourcesoftware.com/cvss3.png' width=19 height=20> CVSS | Dependency | Type | Fixed in | Remediation Available |

| ------------- | ------------- | ----- | ----- | ----- | --- | --- |

| [CVE-2021-43138](https://vuln.whitesourcesoftware.com/vulnerability/CVE-2021-43138) | <img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> High | 7.8 | async-1.5.2.tgz | Transitive | N/A | ❌ |

## Details

<details>

<summary><img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> CVE-2021-43138</summary>

### Vulnerable Library - <b>async-1.5.2.tgz</b></p>

<p>Higher-order functions and common patterns for asynchronous code</p>

<p>Library home page: <a href="https://registry.npmjs.org/async/-/async-1.5.2.tgz">https://registry.npmjs.org/async/-/async-1.5.2.tgz</a></p>

<p>Path to dependency file: /package.json</p>

<p>Path to vulnerable library: /node_modules/webpack/node_modules/async/package.json,/app/compilers/react-compiler/node_modules/async/package.json,/node_modules/istanbul/node_modules/async/package.json,/node_modules/extract-text-webpack-plugin/node_modules/async/package.json,/node_modules/handlebars/node_modules/async/package.json</p>

<p>

Dependency Hierarchy:

- extract-text-webpack-plugin-1.0.1.tgz (Root Library)

- :x: **async-1.5.2.tgz** (Vulnerable Library)

<p>Found in HEAD commit: <a href="https://github.com/nvenkatesh1/SCA_test_JS/commit/eb47eeefc02a252a76628fec10a3c26aacb34024">eb47eeefc02a252a76628fec10a3c26aacb34024</a></p>

<p>Found in base branch: <b>master</b></p>

</p>

<p></p>

### Vulnerability Details

<p>

In Async before 2.6.4 and 3.x before 3.2.2, a malicious user can obtain privileges via the mapValues() method, aka lib/internal/iterator.js createObjectIterator prototype pollution.

<p>Publish Date: 2022-04-06

<p>URL: <a href=https://vuln.whitesourcesoftware.com/vulnerability/CVE-2021-43138>CVE-2021-43138</a></p>

</p>

<p></p>

### CVSS 3 Score Details (<b>7.8</b>)

<p>

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Local

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: Required

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: High

- Integrity Impact: High

- Availability Impact: High

</p>

For more information on CVSS3 Scores, click <a href="https://www.first.org/cvss/calculator/3.0">here</a>.

</p>

<p></p>

### Suggested Fix

<p>

<p>Type: Upgrade version</p>

<p>Origin: <a href="https://nvd.nist.gov/vuln/detail/CVE-2021-43138">https://nvd.nist.gov/vuln/detail/CVE-2021-43138</a></p>

<p>Release Date: 2022-04-06</p>

<p>Fix Resolution: async - v3.2.2</p>

</p>

<p></p>

</details> | True | extract-text-webpack-plugin-1.0.1.tgz: 1 vulnerabilities (highest severity is: 7.8) - autoclosed - <details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/vulnerability_details.png' width=19 height=20> Vulnerable Library - <b>extract-text-webpack-plugin-1.0.1.tgz</b></p></summary>

<p></p>

<p>Path to dependency file: /package.json</p>

<p>Path to vulnerable library: /node_modules/webpack/node_modules/async/package.json,/app/compilers/react-compiler/node_modules/async/package.json,/node_modules/istanbul/node_modules/async/package.json,/node_modules/extract-text-webpack-plugin/node_modules/async/package.json,/node_modules/handlebars/node_modules/async/package.json</p>

<p>

<p>Found in HEAD commit: <a href="https://github.com/nvenkatesh1/SCA_test_JS/commit/eb47eeefc02a252a76628fec10a3c26aacb34024">eb47eeefc02a252a76628fec10a3c26aacb34024</a></p></details>

## Vulnerabilities

| CVE | Severity | <img src='https://whitesource-resources.whitesourcesoftware.com/cvss3.png' width=19 height=20> CVSS | Dependency | Type | Fixed in | Remediation Available |

| ------------- | ------------- | ----- | ----- | ----- | --- | --- |

| [CVE-2021-43138](https://vuln.whitesourcesoftware.com/vulnerability/CVE-2021-43138) | <img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> High | 7.8 | async-1.5.2.tgz | Transitive | N/A | ❌ |

## Details

<details>

<summary><img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> CVE-2021-43138</summary>

### Vulnerable Library - <b>async-1.5.2.tgz</b></p>

<p>Higher-order functions and common patterns for asynchronous code</p>

<p>Library home page: <a href="https://registry.npmjs.org/async/-/async-1.5.2.tgz">https://registry.npmjs.org/async/-/async-1.5.2.tgz</a></p>

<p>Path to dependency file: /package.json</p>

<p>Path to vulnerable library: /node_modules/webpack/node_modules/async/package.json,/app/compilers/react-compiler/node_modules/async/package.json,/node_modules/istanbul/node_modules/async/package.json,/node_modules/extract-text-webpack-plugin/node_modules/async/package.json,/node_modules/handlebars/node_modules/async/package.json</p>

<p>

Dependency Hierarchy:

- extract-text-webpack-plugin-1.0.1.tgz (Root Library)

- :x: **async-1.5.2.tgz** (Vulnerable Library)

<p>Found in HEAD commit: <a href="https://github.com/nvenkatesh1/SCA_test_JS/commit/eb47eeefc02a252a76628fec10a3c26aacb34024">eb47eeefc02a252a76628fec10a3c26aacb34024</a></p>

<p>Found in base branch: <b>master</b></p>

</p>

<p></p>

### Vulnerability Details

<p>

In Async before 2.6.4 and 3.x before 3.2.2, a malicious user can obtain privileges via the mapValues() method, aka lib/internal/iterator.js createObjectIterator prototype pollution.

<p>Publish Date: 2022-04-06

<p>URL: <a href=https://vuln.whitesourcesoftware.com/vulnerability/CVE-2021-43138>CVE-2021-43138</a></p>

</p>

<p></p>

### CVSS 3 Score Details (<b>7.8</b>)

<p>

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Local

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: Required

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: High

- Integrity Impact: High

- Availability Impact: High

</p>

For more information on CVSS3 Scores, click <a href="https://www.first.org/cvss/calculator/3.0">here</a>.

</p>

<p></p>

### Suggested Fix

<p>

<p>Type: Upgrade version</p>

<p>Origin: <a href="https://nvd.nist.gov/vuln/detail/CVE-2021-43138">https://nvd.nist.gov/vuln/detail/CVE-2021-43138</a></p>

<p>Release Date: 2022-04-06</p>

<p>Fix Resolution: async - v3.2.2</p>

</p>

<p></p>

</details> | non_test | extract text webpack plugin tgz vulnerabilities highest severity is autoclosed vulnerable library extract text webpack plugin tgz path to dependency file package json path to vulnerable library node modules webpack node modules async package json app compilers react compiler node modules async package json node modules istanbul node modules async package json node modules extract text webpack plugin node modules async package json node modules handlebars node modules async package json found in head commit a href vulnerabilities cve severity cvss dependency type fixed in remediation available high async tgz transitive n a details cve vulnerable library async tgz higher order functions and common patterns for asynchronous code library home page a href path to dependency file package json path to vulnerable library node modules webpack node modules async package json app compilers react compiler node modules async package json node modules istanbul node modules async package json node modules extract text webpack plugin node modules async package json node modules handlebars node modules async package json dependency hierarchy extract text webpack plugin tgz root library x async tgz vulnerable library found in head commit a href found in base branch master vulnerability details in async before and x before a malicious user can obtain privileges via the mapvalues method aka lib internal iterator js createobjectiterator prototype pollution publish date url a href cvss score details base score metrics exploitability metrics attack vector local attack complexity low privileges required none user interaction required scope unchanged impact metrics confidentiality impact high integrity impact high availability impact high for more information on scores click a href suggested fix type upgrade version origin a href release date fix resolution async | 0 |

1,358 | 5,865,047,597 | IssuesEvent | 2017-05-13 00:19:53 | ansible/ansible-modules-extras | https://api.github.com/repos/ansible/ansible-modules-extras | closed | Install Chocolatey from Internal Source | affects_2.3 feature_idea waiting_on_maintainer windows | Was asked if this was available and it doesn't appear it is from looking at the source. @nitzmahone

##### ISSUE TYPE

- Feature Idea

##### COMPONENT NAME

win_chocolatey

##### ANSIBLE VERSION

##### CONFIGURATION

##### OS / ENVIRONMENT

Windows

##### SUMMARY

Folks would like to be able to have Chocolatey installed from internal sources when using the Ansible module, particularly when they are completely offline.

This would be a good capability added to the module. It is already supported by other config mgmt tools so those could provide good references on how to add it to the chocolatey ansible module.

| True | Install Chocolatey from Internal Source - Was asked if this was available and it doesn't appear it is from looking at the source. @nitzmahone

##### ISSUE TYPE

- Feature Idea

##### COMPONENT NAME

win_chocolatey

##### ANSIBLE VERSION

##### CONFIGURATION

##### OS / ENVIRONMENT

Windows

##### SUMMARY

Folks would like to be able to have Chocolatey installed from internal sources when using the Ansible module, particularly when they are completely offline.

This would be a good capability added to the module. It is already supported by other config mgmt tools so those could provide good references on how to add it to the chocolatey ansible module.

| non_test | install chocolatey from internal source was asked if this was available and it doesn t appear it is from looking at the source nitzmahone issue type feature idea component name win chocolatey ansible version configuration os environment windows summary folks would like to be able to have chocolatey installed from internal sources when using the ansible module particularly when they are completely offline this would be a good capability added to the module it is already supported by other config mgmt tools so those could provide good references on how to add it to the chocolatey ansible module | 0 |

469,804 | 13,526,198,183 | IssuesEvent | 2020-09-15 13:58:04 | carbon-design-system/ibm-dotcom-library | https://api.github.com/repos/carbon-design-system/ibm-dotcom-library | closed | Web component: Button Group Prod QA testing | Airtable Done QA package: web components priority: high | <!-- Avoid any type of solutions in this user story -->

<!-- replace _{{...}}_ with your own words or remove -->

#### User Story

<!-- {{Provide a detailed description of the user's need here, but avoid any type of solutions}} -->

> As a `[user role below]`:

developer using the ibm.com Library `Button Group`

> I need to:

have a version of the component that has been tested for accessibility compliance as well as on multiple browsers and platforms

> so that I can:

be confident that my ibm.com web site users will have a good experience

#### Additional information

<!-- {{Please provide any additional information or resources for reference}} -->

- [Browser Stack link](https://ibm.ent.box.com/notes/578734426612)

- [Browser Standard](https://w3.ibm.com/standards/web/browser/)

- Browser versions to be tested: Tier 1 browsers will be tested with defects created as Sev 1 or Sev 2. Tier 2 browser defects will be created as Sev 3 defects.

- Platforms to be tested, by priority: 1) Desktop 2) Mobile 3) Tablet

- Mobile & Tablet iOS versions: 13.1 and 13.3

- Mobile & Tablet Android versions: 9.0 Pie and 8.1 Oreo

- Browsers to be tested: Desktop: Chrome, Firefox, Safari, Edge, Mobile: Chrome, Safari, Samsung Internet, UC Browser, Tablet: Safari, Chrome, Android

- [Accessibility Checklist](https://www.ibm.com/able/guidelines/ci162/accessibility_checklist.html)

- [Creating a QA bug](https://ibm.ent.box.com/notes/603242247385)

- **See the Epic for the Design and Functional specs information**

- Dev issue (#3492)

- Once development is finished the updated code is available in the [**Web Components Canary Environment**](https://ibmdotcom-web-components-canary.mybluemix.net/?path=/story/overview-getting-started--page) for testing.

- The [**React canary environment**](https://ibmdotcom-react-canary.mybluemix.net/?path=/story/overview-getting-started--page) component should be used for comparison

#### Acceptance criteria

- [ ] Accessibility testing is complete. Component is compliant.

- [ ] All browser versions are tested

- [ ] All operating systems are tested

- [ ] All devices are tested

- [ ] Defects are recorded and retested when fixed | 1.0 | Web component: Button Group Prod QA testing - <!-- Avoid any type of solutions in this user story -->

<!-- replace _{{...}}_ with your own words or remove -->

#### User Story

<!-- {{Provide a detailed description of the user's need here, but avoid any type of solutions}} -->

> As a `[user role below]`:

developer using the ibm.com Library `Button Group`

> I need to:

have a version of the component that has been tested for accessibility compliance as well as on multiple browsers and platforms

> so that I can:

be confident that my ibm.com web site users will have a good experience

#### Additional information

<!-- {{Please provide any additional information or resources for reference}} -->

- [Browser Stack link](https://ibm.ent.box.com/notes/578734426612)

- [Browser Standard](https://w3.ibm.com/standards/web/browser/)

- Browser versions to be tested: Tier 1 browsers will be tested with defects created as Sev 1 or Sev 2. Tier 2 browser defects will be created as Sev 3 defects.

- Platforms to be tested, by priority: 1) Desktop 2) Mobile 3) Tablet

- Mobile & Tablet iOS versions: 13.1 and 13.3

- Mobile & Tablet Android versions: 9.0 Pie and 8.1 Oreo

- Browsers to be tested: Desktop: Chrome, Firefox, Safari, Edge, Mobile: Chrome, Safari, Samsung Internet, UC Browser, Tablet: Safari, Chrome, Android

- [Accessibility Checklist](https://www.ibm.com/able/guidelines/ci162/accessibility_checklist.html)

- [Creating a QA bug](https://ibm.ent.box.com/notes/603242247385)

- **See the Epic for the Design and Functional specs information**

- Dev issue (#3492)

- Once development is finished the updated code is available in the [**Web Components Canary Environment**](https://ibmdotcom-web-components-canary.mybluemix.net/?path=/story/overview-getting-started--page) for testing.

- The [**React canary environment**](https://ibmdotcom-react-canary.mybluemix.net/?path=/story/overview-getting-started--page) component should be used for comparison

#### Acceptance criteria

- [ ] Accessibility testing is complete. Component is compliant.

- [ ] All browser versions are tested

- [ ] All operating systems are tested

- [ ] All devices are tested

- [ ] Defects are recorded and retested when fixed | non_test | web component button group prod qa testing user story as a developer using the ibm com library button group i need to have a version of the component that has been tested for accessibility compliance as well as on multiple browsers and platforms so that i can be confident that my ibm com web site users will have a good experience additional information browser versions to be tested tier browsers will be tested with defects created as sev or sev tier browser defects will be created as sev defects platforms to be tested by priority desktop mobile tablet mobile tablet ios versions and mobile tablet android versions pie and oreo browsers to be tested desktop chrome firefox safari edge mobile chrome safari samsung internet uc browser tablet safari chrome android see the epic for the design and functional specs information dev issue once development is finished the updated code is available in the for testing the component should be used for comparison acceptance criteria accessibility testing is complete component is compliant all browser versions are tested all operating systems are tested all devices are tested defects are recorded and retested when fixed | 0 |

74,083 | 15,303,962,459 | IssuesEvent | 2021-02-24 16:22:32 | elastic/kibana | https://api.github.com/repos/elastic/kibana | opened | Detect and prevent the use of mismatched encryption keys | Team:Security discuss enhancement | Kibana relies on a number of encryption keys. Arguably the most important key is `xpack.encryptedSavedObjects.encryptionKey`, as this controls the encryption/decryption of actions, alerts, and other sensitive user data.

Kibana requires that this key is set to the same value across all instances. If two Kibana instances have different encryption keys, then they will be encrypting saved objects that cannot be decrypted by the other instance.

We should attempt to detect if there is a potential encryption key mismatch, and alert consumers of the ESO plugin so that they can take appropriate action.

One potential solution is to save a "canary" saved object, whose sole purpose is to test that it can be successfully decrypted by the current instance. If we cannot decrypt this object, then it stands to reason that this instance is not properly configured. I expect there are scenarios where we'd need to allow the canary to be forcefully replaced, however. | True | Detect and prevent the use of mismatched encryption keys - Kibana relies on a number of encryption keys. Arguably the most important key is `xpack.encryptedSavedObjects.encryptionKey`, as this controls the encryption/decryption of actions, alerts, and other sensitive user data.

Kibana requires that this key is set to the same value across all instances. If two Kibana instances have different encryption keys, then they will be encrypting saved objects that cannot be decrypted by the other instance.

We should attempt to detect if there is a potential encryption key mismatch, and alert consumers of the ESO plugin so that they can take appropriate action.

One potential solution is to save a "canary" saved object, whose sole purpose is to test that it can be successfully decrypted by the current instance. If we cannot decrypt this object, then it stands to reason that this instance is not properly configured. I expect there are scenarios where we'd need to allow the canary to be forcefully replaced, however. | non_test | detect and prevent the use of mismatched encryption keys kibana relies on a number of encryption keys arguably the most important key is xpack encryptedsavedobjects encryptionkey as this controls the encryption decryption of actions alerts and other sensitive user data kibana requires that this key is set to the same value across all instances if two kibana instances have different encryption keys then they will be encrypting saved objects that cannot be decrypted by the other instance we should attempt to detect if there is a potential encryption key mismatch and alert consumers of the eso plugin so that they can take appropriate action one potential solution is to save a canary saved object whose sole purpose is to test that it can be successfully decrypted by the current instance if we cannot decrypt this object then it stands to reason that this instance is not properly configured i expect there are scenarios where we d need to allow the canary to be forcefully replaced however | 0 |
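
The "canary" idea described in the issue above can be illustrated with a short sketch. It is not Kibana's or the Encrypted Saved Objects plugin's actual implementation: the `Canary` shape, `createCanary`, `canDecryptCanary`, the fixed salt, and the choice of AES-256-GCM from Node's `crypto` module are all assumptions made for illustration. The point is only that an authenticated cipher makes decryption fail loudly when the instance's key differs from the one that wrote the canary.

```typescript
import { createCipheriv, createDecipheriv, randomBytes, scryptSync } from "node:crypto";

// Hypothetical canary record; not the ESO plugin's real data model.
interface Canary {
  iv: string;
  tag: string;
  ciphertext: string;
}

const CANARY_PLAINTEXT = "encryption-key-canary";

// Derive a 32-byte AES key from the configured key string (illustrative only).
function deriveKey(encryptionKey: string): Buffer {
  return scryptSync(encryptionKey, "canary-salt", 32);
}

// Encrypt the known plaintext with the key of the instance that writes the canary.
function createCanary(encryptionKey: string): Canary {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", deriveKey(encryptionKey), iv);
  const ciphertext = Buffer.concat([cipher.update(CANARY_PLAINTEXT, "utf8"), cipher.final()]);
  return {
    iv: iv.toString("base64"),
    tag: cipher.getAuthTag().toString("base64"),
    ciphertext: ciphertext.toString("base64"),
  };
}

// Returns false when this instance's key cannot decrypt the stored canary,
// which suggests a key mismatch between instances.
function canDecryptCanary(canary: Canary, encryptionKey: string): boolean {
  try {
    const decipher = createDecipheriv(
      "aes-256-gcm",
      deriveKey(encryptionKey),
      Buffer.from(canary.iv, "base64"),
    );
    decipher.setAuthTag(Buffer.from(canary.tag, "base64"));
    const plaintext = Buffer.concat([
      decipher.update(Buffer.from(canary.ciphertext, "base64")),
      decipher.final(), // throws if the key or auth tag does not match
    ]);
    return plaintext.toString("utf8") === CANARY_PLAINTEXT;
  } catch {
    return false;
  }
}
```

In this sketch the first instance to start would store the canary and every other instance would call `canDecryptCanary` at startup. The forced-replacement case mentioned in the issue (for example, an intentional key rotation) would amount to overwriting the stored canary with a fresh `createCanary` result.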

112,166 | 17,080,009,330 | IssuesEvent | 2021-07-08 02:47:53 | faizulho/gatsby-starter-docz-netlifycms-1 | https://api.github.com/repos/faizulho/gatsby-starter-docz-netlifycms-1 | opened | CVE-2020-28469 (High) detected in glob-parent-3.1.0.tgz, glob-parent-5.1.1.tgz | security vulnerability | ## CVE-2020-28469 - High Severity Vulnerability

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/vulnerability_details.png' width=19 height=20> Vulnerable Libraries - <b>glob-parent-3.1.0.tgz</b>, <b>glob-parent-5.1.1.tgz</b></p></summary>

<p>

<details><summary><b>glob-parent-3.1.0.tgz</b></p></summary>

<p>Strips glob magic from a string to provide the parent directory path</p>

<p>Library home page: <a href="https://registry.npmjs.org/glob-parent/-/glob-parent-3.1.0.tgz">https://registry.npmjs.org/glob-parent/-/glob-parent-3.1.0.tgz</a></p>

<p>Path to dependency file: gatsby-starter-docz-netlifycms-1/package.json</p>

<p>Path to vulnerable library: gatsby-starter-docz-netlifycms-1/node_modules/watchpack-chokidar2/node_modules/glob-parent/package.json,gatsby-starter-docz-netlifycms-1/node_modules/@nicolo-ribaudo/chokidar-2/node_modules/glob-parent/package.json,gatsby-starter-docz-netlifycms-1/node_modules/webpack-dev-server/node_modules/glob-parent/package.json</p>

<p>

Dependency Hierarchy:

- docz-2.3.1.tgz (Root Library)

- gatsby-theme-docz-2.3.1.tgz

- babel-plugin-export-metadata-2.3.0.tgz

- cli-7.12.10.tgz

- chokidar-2-2.1.8-no-fsevents.tgz

- :x: **glob-parent-3.1.0.tgz** (Vulnerable Library)

</details>

<details><summary><b>glob-parent-5.1.1.tgz</b></p></summary>

<p>Extract the non-magic parent path from a glob string.</p>

<p>Library home page: <a href="https://registry.npmjs.org/glob-parent/-/glob-parent-5.1.1.tgz">https://registry.npmjs.org/glob-parent/-/glob-parent-5.1.1.tgz</a></p>

<p>Path to dependency file: gatsby-starter-docz-netlifycms-1/package.json</p>

<p>Path to vulnerable library: gatsby-starter-docz-netlifycms-1/node_modules/glob-parent/package.json</p>

<p>

Dependency Hierarchy:

- gatsby-3.9.0.tgz (Root Library)

- chokidar-3.4.2.tgz

- :x: **glob-parent-5.1.1.tgz** (Vulnerable Library)

</details>

<p>Found in HEAD commit: <a href="https://github.com/faizulho/gatsby-starter-docz-netlifycms-1/commit/70a9e87b1e68c0bef6964284e0899376209b0f3d">70a9e87b1e68c0bef6964284e0899376209b0f3d</a></p>

<p>Found in base branch: <b>master</b></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> Vulnerability Details</summary>

<p>

This affects the package glob-parent before 5.1.2. The enclosure regex used to check for strings ending in enclosure containing path separator.

<p>Publish Date: 2021-06-03

<p>URL: <a href=https://vuln.whitesourcesoftware.com/vulnerability/CVE-2020-28469>CVE-2020-28469</a></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/cvss3.png' width=19 height=20> CVSS 3 Score Details (<b>7.5</b>)</summary>

<p>

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: None

- Integrity Impact: None

- Availability Impact: High

</p>

For more information on CVSS3 Scores, click <a href="https://www.first.org/cvss/calculator/3.0">here</a>.

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/suggested_fix.png' width=19 height=20> Suggested Fix</summary>

<p>

<p>Type: Upgrade version</p>

<p>Origin: <a href="https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2020-28469">https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2020-28469</a></p>

<p>Release Date: 2021-06-03</p>

<p>Fix Resolution: glob-parent - 5.1.2</p>

</p>

</details>

<p></p>

***

Step up your Open Source Security Game with WhiteSource [here](https://www.whitesourcesoftware.com/full_solution_bolt_github) | True | CVE-2020-28469 (High) detected in glob-parent-3.1.0.tgz, glob-parent-5.1.1.tgz - ## CVE-2020-28469 - High Severity Vulnerability

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/vulnerability_details.png' width=19 height=20> Vulnerable Libraries - <b>glob-parent-3.1.0.tgz</b>, <b>glob-parent-5.1.1.tgz</b></p></summary>

<p>

<details><summary><b>glob-parent-3.1.0.tgz</b></p></summary>

<p>Strips glob magic from a string to provide the parent directory path</p>

<p>Library home page: <a href="https://registry.npmjs.org/glob-parent/-/glob-parent-3.1.0.tgz">https://registry.npmjs.org/glob-parent/-/glob-parent-3.1.0.tgz</a></p>

<p>Path to dependency file: gatsby-starter-docz-netlifycms-1/package.json</p>

<p>Path to vulnerable library: gatsby-starter-docz-netlifycms-1/node_modules/watchpack-chokidar2/node_modules/glob-parent/package.json,gatsby-starter-docz-netlifycms-1/node_modules/@nicolo-ribaudo/chokidar-2/node_modules/glob-parent/package.json,gatsby-starter-docz-netlifycms-1/node_modules/webpack-dev-server/node_modules/glob-parent/package.json</p>

<p>

Dependency Hierarchy:

- docz-2.3.1.tgz (Root Library)

- gatsby-theme-docz-2.3.1.tgz

- babel-plugin-export-metadata-2.3.0.tgz

- cli-7.12.10.tgz

- chokidar-2-2.1.8-no-fsevents.tgz

- :x: **glob-parent-3.1.0.tgz** (Vulnerable Library)

</details>

<details><summary><b>glob-parent-5.1.1.tgz</b></p></summary>

<p>Extract the non-magic parent path from a glob string.</p>

<p>Library home page: <a href="https://registry.npmjs.org/glob-parent/-/glob-parent-5.1.1.tgz">https://registry.npmjs.org/glob-parent/-/glob-parent-5.1.1.tgz</a></p>

<p>Path to dependency file: gatsby-starter-docz-netlifycms-1/package.json</p>

<p>Path to vulnerable library: gatsby-starter-docz-netlifycms-1/node_modules/glob-parent/package.json</p>

<p>

Dependency Hierarchy:

- gatsby-3.9.0.tgz (Root Library)

- chokidar-3.4.2.tgz

- :x: **glob-parent-5.1.1.tgz** (Vulnerable Library)

</details>

<p>Found in HEAD commit: <a href="https://github.com/faizulho/gatsby-starter-docz-netlifycms-1/commit/70a9e87b1e68c0bef6964284e0899376209b0f3d">70a9e87b1e68c0bef6964284e0899376209b0f3d</a></p>

<p>Found in base branch: <b>master</b></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/high_vul.png' width=19 height=20> Vulnerability Details</summary>

<p>

This affects the package glob-parent before 5.1.2. The enclosure regex used to check for strings ending in enclosure containing path separator.

<p>Publish Date: 2021-06-03

<p>URL: <a href=https://vuln.whitesourcesoftware.com/vulnerability/CVE-2020-28469>CVE-2020-28469</a></p>

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/cvss3.png' width=19 height=20> CVSS 3 Score Details (<b>7.5</b>)</summary>

<p>

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: None

- Integrity Impact: None

- Availability Impact: High

</p>

For more information on CVSS3 Scores, click <a href="https://www.first.org/cvss/calculator/3.0">here</a>.

</p>

</details>

<p></p>

<details><summary><img src='https://whitesource-resources.whitesourcesoftware.com/suggested_fix.png' width=19 height=20> Suggested Fix</summary>

<p>

<p>Type: Upgrade version</p>

<p>Origin: <a href="https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2020-28469">https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2020-28469</a></p>

<p>Release Date: 2021-06-03</p>

<p>Fix Resolution: glob-parent - 5.1.2</p>

</p>

</details>

<p></p>

***

Step up your Open Source Security Game with WhiteSource [here](https://www.whitesourcesoftware.com/full_solution_bolt_github) | non_test | cve high detected in glob parent tgz glob parent tgz cve high severity vulnerability vulnerable libraries glob parent tgz glob parent tgz glob parent tgz strips glob magic from a string to provide the parent directory path library home page a href path to dependency file gatsby starter docz netlifycms package json path to vulnerable library gatsby starter docz netlifycms node modules watchpack node modules glob parent package json gatsby starter docz netlifycms node modules nicolo ribaudo chokidar node modules glob parent package json gatsby starter docz netlifycms node modules webpack dev server node modules glob parent package json dependency hierarchy docz tgz root library gatsby theme docz tgz babel plugin export metadata tgz cli tgz chokidar no fsevents tgz x glob parent tgz vulnerable library glob parent tgz extract the non magic parent path from a glob string library home page a href path to dependency file gatsby starter docz netlifycms package json path to vulnerable library gatsby starter docz netlifycms node modules glob parent package json dependency hierarchy gatsby tgz root library chokidar tgz x glob parent tgz vulnerable library found in head commit a href found in base branch master vulnerability details this affects the package glob parent before the enclosure regex used to check for strings ending in enclosure containing path separator publish date url a href cvss score details base score metrics exploitability metrics attack vector network attack complexity low privileges required none user interaction none scope unchanged impact metrics confidentiality impact none integrity impact none availability impact high for more information on scores click a href suggested fix type upgrade version origin a href release date fix resolution glob parent step up your open source security game with whitesource | 0 |

12,005 | 14,738,162,537 | IssuesEvent | 2021-01-07 03:56:43 | kdjstudios/SABillingGitlab | https://api.github.com/repos/kdjstudios/SABillingGitlab | closed | Billing Cycles Process - Improvments and Fixes | anc-process anp-not prioritized ant-enhancement | In GitLab by @kdjstudios on May 11, 2018, 12:40

Hello Team,

This is going to be a list of all the concerns and improvements to ensure an easy-to-use and functional billing cycle process.

- Add staged fees to accounts that will get applied to the same billing cycle. (This is due to ops now having to create the next billing cycle after finalizing in order to create manual invoices) #890

- Add the ability to process a billing cycle with no usage file. This is due to the increased amount of billing cycles that do not need a usage file. #893

- Add the step between create next cycle and upload to allow for manual invoice creation without confusion.

- Reverting/Deleting a cycle concerns

- When performing a revert, will this revert manually created invoices or just the ones created by the upload?

- Which permission level is the delete functionality shown to?

- Does this delete manually created invoices or just ones created by the upload? | 1.0 | Billing Cycles Process - Improvments and Fixes - In GitLab by @kdjstudios on May 11, 2018, 12:40

Hello Team,

This is going to be a list of all the concerns and improvements to ensure an easy-to-use and functional billing cycle process.

- Add staged fees to accounts that will get applied to the same billing cycle. (This is due to ops now having to create the next billing cycle after finalizing in order to create manual invoices) #890

- Add the ability to process a billing cycle with no usage file. This is due to the increased amount of billing cycles that do not need a usage file. #893

- Add the step between create next cycle and upload to allow for manual invoice creation without confusion.

- Reverting/Deleting a cycle concerns

- When performing a revert, will this revert manually created invoices or just the ones created by the upload?

- Which permission level is the delete functionality shown to?

- Does this delete manually created invoices or just ones created by the upload? | non_test | billing cycles process improvments and fixes in gitlab by kdjstudios on may hello team this is going to be a list of all the concerns and improvements to insure an easy to use and functional billing cycle process add staged fees to accounts that will get applied to the same billing cycle this is due to ops now having to create the next billing cycle after finalizing in order to create manual invoices add the ability to process a billing cycle with no usage file this is due to the increased amount of billing cycles that do not need a usage file add the step between create next cycle and upload to allow for manual invoice creation without confusion reverting deleting a cycle concerns when performing a revert will this revert manually created invoices or just one created by the upload is the delete functionality shown to what permission level does this delete manually created invoices or just ones created by the upload | 0 |

129,375 | 10,572,682,896 | IssuesEvent | 2019-10-07 10:07:24 | kubernetes/kubernetes | https://api.github.com/repos/kubernetes/kubernetes | opened | node-kubelet-master tests fail during startup | kind/failing-test | **Which jobs are failing**:

node-kubelet-master in master blocking

https://testgrid.k8s.io/sig-release-master-blocking#node-kubelet-master

**Which test(s) are failing**:

test startup, the tests don't even get run

**Since when has it been failing**:

10/02

**Testgrid link**:

https://testgrid.k8s.io/sig-release-master-blocking#node-kubelet-master

**Reason for failure**:

```

I1002 02:30:10.594] >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

I1002 02:30:10.594] > START TEST >

I1002 02:30:10.594] >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

I1002 02:30:10.595] Start Test Suite on Host

I1002 02:30:10.595]

I1002 02:30:10.595] Failure Finished Test Suite on Host

I1002 02:30:10.595] unable to create gce instance with running docker daemon for image cos-stable-60-9592-84-0. googleapi: Error 404: The resource 'projects/k8s-jkns-ci-node-e2e/zones/us-west1-b/instances/tmp-node-e2e-3039b3ed-cos-stable-60-9592-84-0' was not found, notFound

```

**Anything else we need to know**:

/kind flake

/priority important-soon

/milestone v1.17

/sig node

cc. @droslean @hasheddan @Verolop @epk | 1.0 | node-kubelet-master tests fail during startup - **Which jobs are failing**:

node-kubelet-master in master blocking

https://testgrid.k8s.io/sig-release-master-blocking#node-kubelet-master

**Which test(s) are failing**:

test startup, the tests don't even get run

**Since when has it been failing**:

10/02

**Testgrid link**:

https://testgrid.k8s.io/sig-release-master-blocking#node-kubelet-master

**Reason for failure**:

```

I1002 02:30:10.594] >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

I1002 02:30:10.594] > START TEST >

I1002 02:30:10.594] >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

I1002 02:30:10.595] Start Test Suite on Host

I1002 02:30:10.595]

I1002 02:30:10.595] Failure Finished Test Suite on Host

I1002 02:30:10.595] unable to create gce instance with running docker daemon for image cos-stable-60-9592-84-0. googleapi: Error 404: The resource 'projects/k8s-jkns-ci-node-e2e/zones/us-west1-b/instances/tmp-node-e2e-3039b3ed-cos-stable-60-9592-84-0' was not found, notFound

```

**Anything else we need to know**:

/kind flake

/priority important-soon

/milestone v1.17

/sig node

cc. @droslean @hasheddan @Verolop @epk | test | node kubelet master tests fail during startup which jobs are failing node kubelet master in master blocking which test s are failing test startup the tests don t even get run since when has it been failing testgrid link reason for failure start test start test suite on host failure finished test suite on host unable to create gce instance with running docker daemon for image cos stable googleapi error the resource projects jkns ci node zones us b instances tmp node cos stable was not found notfound anything else we need to know kind flake priority important soon milestone sig node cc droslean hasheddan verolop epk | 1 |