| Column | Type | Values |
|---|---|---|
| problem_id | string | length 18 to 22 |
| source | string (classes) | 1 value |
| task_type | string (classes) | 1 value |
| in_source_id | string | length 13 to 58 |
| prompt | string | length 1.71k to 18.9k |
| golden_diff | string | length 145 to 5.13k |
| verification_info | string | length 465 to 23.6k |
| num_tokens_prompt | int64 | 556 to 4.1k |
| num_tokens_diff | int64 | 47 to 1.02k |
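Each row's `verification_info` cell is a JSON string. In the rows below it carries `golden_diff`, `issue`, and a `before_files` list of `{"content", "path"}` objects. The following is a minimal sketch, assuming only those keys, that lists the files shown in the prompt and the files the reference patch touches. `summarize_row` is a hypothetical helper, not part of any dataset tooling.

```python
import json


def summarize_row(verification_info: str) -> dict:
    """Summarize one verification_info cell (hypothetical helper).

    Assumes the layout visible in this dump: top-level keys "golden_diff",
    "issue" and "before_files" (a list of {"content", "path"} objects).
    """
    info = json.loads(verification_info)
    # Paths of the files that were shown to the model in the prompt.
    before_paths = [f["path"] for f in info.get("before_files", [])]
    # Files the reference patch modifies, read from its "+++ b/<path>" headers.
    patched_paths = [
        line[len("+++ b/"):]
        for line in info["golden_diff"].splitlines()
        if line.startswith("+++ b/")
    ]
    return {"before_paths": before_paths, "patched_paths": patched_paths}


# Toy cell shaped like the first row below (contents shortened).
example = json.dumps({
    "golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n",
    "issue": "toy issue text",
    "before_files": [{"content": "toy file content", "path": "setup.py"}],
})
print(summarize_row(example))
# {'before_paths': ['setup.py'], 'patched_paths': ['setup.py']}
```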

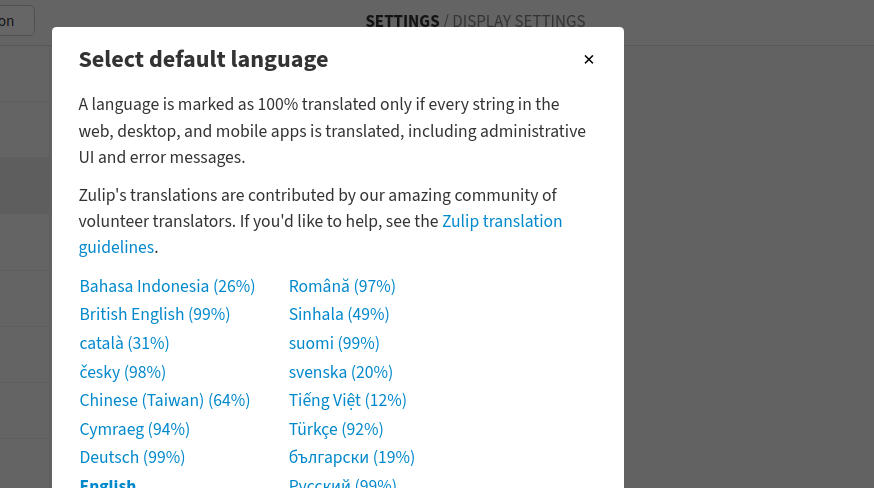

gh_patches_debug_56182 | rasdani/github-patches | git_diff | cookiecutter__cookiecutter-1562 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

prompt.read_user_dict() is broken due to click upgrade from 7.1.2 to 8.0.0

* Cookiecutter version: 1.7.3

* Template project url: -

* Python version: 3.9.5

* Operating System: macOS Catalina 10.15.7

### Description:

Apparently, there is a breaking change in `click==8.0.0` affecting dictionary values in cookiecutter.json

cookiecutter.json example:

```json
{
  "project_name": "",
  "project_policy": {"project_policy_example": "yes"}
}
```

```
% python -m cookiecutter ../Projects/project-configs
devplatform_project_name [infra-dev]:
project_name []: t
project_policy [default]:
Error: Unable to decode to JSON.
```

Looking closer at the cookiecutter.promt, I can see that in `read_user_dict()`, click passes `user_value='default'` to `process_json()`, instead of passing an actual default value from the cookiecutter.json as it was in `click 7.1.2`.

Link to the `process_json()` code: https://github.com/cookiecutter/cookiecutter/blob/master/cookiecutter/prompt.py#L81

As far as I can suppose, that issue could have been introduced by this PR https://github.com/pallets/click/pull/1517/

### Quick local fix

Install click first and specify version older than 8.0.0

```
pip install click==7.1.2
pip install cookiecutter
```

### Quick fix for cookiecutter library

in `setup.py` replace 'click>=7.0' with `'click>=7,<8.0.0'`

### What I've run:

```shell
% python3.9 -m venv test39

% source test39/bin/activate

% python -V
Python 3.9.5


% python -m pip install click==7.1.2
Collecting click==7.1.2
  Using cached click-7.1.2-py2.py3-none-any.whl (82 kB)
Installing collected packages: click
Successfully installed click-7.1.2
(test39) ro.solyanik@macbook-ro Environments % python -m pip install cookiecutter
Collecting cookiecutter
  Using cached cookiecutter-1.7.3-py2.py3-none-any.whl (34 kB)
Collecting six>=1.10
................................................
Installing collected packages: six, python-dateutil, MarkupSafe, urllib3, text-unidecode, Jinja2, idna, chardet, certifi, arrow, requests, python-slugify, poyo, jinja2-time, binaryornot, cookiecutter
Successfully installed Jinja2-3.0.1 MarkupSafe-2.0.1 arrow-1.1.0 binaryornot-0.4.4 certifi-2020.12.5 chardet-4.0.0 cookiecutter-1.7.3 idna-2.10 jinja2-time-0.2.0 poyo-0.5.0 python-dateutil-2.8.1 python-slugify-5.0.2 requests-2.25.1 six-1.16.0 text-unidecode-1.3 urllib3-1.26.4

% python -m cookiecutter ../Projects/project-configs
project_name []: t
project_policy [default]:

% ls t
Makefile README.md t tests

% rm -rf t

% python -m pip install click==8.0.0
Collecting click==8.0.0
  Using cached click-8.0.0-py3-none-any.whl (96 kB)
Installing collected packages: click
  Attempting uninstall: click
    Found existing installation: click 7.1.2
    Uninstalling click-7.1.2:
      Successfully uninstalled click-7.1.2
Successfully installed click-8.0.0

% python -m cookiecutter ../Projects/project-configs
devplatform_project_name [infra-dev]:
project_name []: t
project_policy [default]:
Error: Unable to decode to JSON.
project_policy [default]:
Error: Unable to decode to JSON.
```

</issue>

<code>

[start of setup.py]

1 #!/usr/bin/env python
2 """cookiecutter distutils configuration."""
3 from setuptools import setup
4
5 version = "2.0.0"
6
7 with open('README.md', encoding='utf-8') as readme_file:
8     readme = readme_file.read()
9
10 requirements = [
11     'binaryornot>=0.4.4',
12     'Jinja2>=2.7,<4.0.0',
13     'click>=7.0',
14     'pyyaml>=5.3.1',
15     'jinja2-time>=0.2.0',
16     'python-slugify>=4.0.0',
17     'requests>=2.23.0',
18 ]
19
20 setup(
21     name='cookiecutter',
22     version=version,
23     description=(
24         'A command-line utility that creates projects from project '
25         'templates, e.g. creating a Python package project from a '
26         'Python package project template.'
27     ),
28     long_description=readme,
29     long_description_content_type='text/markdown',
30     author='Audrey Feldroy',
31     author_email='[email protected]',
32     url='https://github.com/cookiecutter/cookiecutter',
33     packages=['cookiecutter'],
34     package_dir={'cookiecutter': 'cookiecutter'},
35     entry_points={'console_scripts': ['cookiecutter = cookiecutter.__main__:main']},
36     include_package_data=True,
37     python_requires='>=3.6',
38     install_requires=requirements,
39     license='BSD',
40     zip_safe=False,
41     classifiers=[
42         "Development Status :: 5 - Production/Stable",
43         "Environment :: Console",
44         "Intended Audience :: Developers",
45         "Natural Language :: English",
46         "License :: OSI Approved :: BSD License",
47         "Programming Language :: Python :: 3 :: Only",
48         "Programming Language :: Python :: 3",
49         "Programming Language :: Python :: 3.6",
50         "Programming Language :: Python :: 3.7",
51         "Programming Language :: Python :: 3.8",
52         "Programming Language :: Python :: 3.9",
53         "Programming Language :: Python :: Implementation :: CPython",
54         "Programming Language :: Python :: Implementation :: PyPy",
55         "Programming Language :: Python",
56         "Topic :: Software Development",
57     ],
58     keywords=[
59         "cookiecutter",
60         "Python",
61         "projects",
62         "project templates",
63         "Jinja2",
64         "skeleton",
65         "scaffolding",
66         "project directory",
67         "package",
68         "packaging",
69     ],
70 )
71

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/setup.py b/setup.py

--- a/setup.py
+++ b/setup.py
@@ -10,7 +10,7 @@
 requirements = [
     'binaryornot>=0.4.4',
     'Jinja2>=2.7,<4.0.0',
-    'click>=7.0',
+    'click>=7.0,<8.0.0',
     'pyyaml>=5.3.1',
     'jinja2-time>=0.2.0',
     'python-slugify>=4.0.0',

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -10,7 +10,7 @@\n requirements = [\n 'binaryornot>=0.4.4',\n 'Jinja2>=2.7,<4.0.0',\n- 'click>=7.0',\n+ 'click>=7.0,<8.0.0',\n 'pyyaml>=5.3.1',\n 'jinja2-time>=0.2.0',\n 'python-slugify>=4.0.0',\n", "issue": "prompt.read_user_dict() is broken due to click upgrade from 7.1.2 to 8.0.0\n* Cookiecutter version: 1.7.3\r\n* Template project url: -\r\n* Python version: 3.9.5\r\n* Operating System: macOS Catalina 10.15.7\r\n\r\n### Description:\r\n\r\nApparently, there is a breaking change in `click==8.0.0` affecting dictionary values in cookiecutter.json\r\ncookiecutter.json example:\r\n```json\r\n{\r\n \"project_name\": \"\",\r\n \"project_policy\": {\"project_policy_example\": \"yes\"}\r\n}\r\n```\r\n \r\n```\r\n% python -m cookiecutter ../Projects/project-configs\r\ndevplatform_project_name [infra-dev]: \r\nproject_name []: t\r\nproject_policy [default]: \r\nError: Unable to decode to JSON.\r\n```\r\n\r\nLooking closer at the cookiecutter.promt, I can see that in `read_user_dict()`, click passes `user_value='default'` to `process_json()`, instead of passing an actual default value from the cookiecutter.json as it was in `click 7.1.2`. 
\r\nLink to the `process_json()` code: https://github.com/cookiecutter/cookiecutter/blob/master/cookiecutter/prompt.py#L81\r\n\r\n\r\nAs far as I can suppose, that issue could have been introduced by this PR https://github.com/pallets/click/pull/1517/\r\n\r\n### Quick local fix\r\nInstall click first and specify version older than 8.0.0\r\n```\r\npip install click==7.1.2\r\npip install cookiecutter\r\n```\r\n\r\n### Quick fix for cookiecutter library\r\nin `setup.py` replace 'click>=7.0' with `'click>=7,<8.0.0'`\r\n\r\n### What I've run:\r\n\r\n```shell\r\n% python3.9 -m venv test39 \r\n \r\n% source test39/bin/activate\r\n\r\n% python -V\r\nPython 3.9.5\r\n\r\n\r\n% python -m pip install click==7.1.2\r\nCollecting click==7.1.2\r\n Using cached click-7.1.2-py2.py3-none-any.whl (82 kB)\r\nInstalling collected packages: click\r\nSuccessfully installed click-7.1.2\r\n(test39) ro.solyanik@macbook-ro Environments % python -m pip install cookiecutter\r\nCollecting cookiecutter\r\n Using cached cookiecutter-1.7.3-py2.py3-none-any.whl (34 kB)\r\nCollecting six>=1.10\r\n................................................\r\nInstalling collected packages: six, python-dateutil, MarkupSafe, urllib3, text-unidecode, Jinja2, idna, chardet, certifi, arrow, requests, python-slugify, poyo, jinja2-time, binaryornot, cookiecutter\r\nSuccessfully installed Jinja2-3.0.1 MarkupSafe-2.0.1 arrow-1.1.0 binaryornot-0.4.4 certifi-2020.12.5 chardet-4.0.0 cookiecutter-1.7.3 idna-2.10 jinja2-time-0.2.0 poyo-0.5.0 python-dateutil-2.8.1 python-slugify-5.0.2 requests-2.25.1 six-1.16.0 text-unidecode-1.3 urllib3-1.26.4\r\n\r\n% python -m cookiecutter ../Projects/project-configs\r\nproject_name []: t\r\nproject_policy [default]: \r\n\r\n% ls t \r\nMakefile README.md t tests\r\n\r\n% rm -rf t\r\n\r\n% python -m pip install click==8.0.0 \r\nCollecting click==8.0.0\r\n Using cached click-8.0.0-py3-none-any.whl (96 kB)\r\nInstalling collected packages: click\r\n Attempting uninstall: click\r\n Found 
existing installation: click 7.1.2\r\n Uninstalling click-7.1.2:\r\n Successfully uninstalled click-7.1.2\r\nSuccessfully installed click-8.0.0\r\n\r\n% python -m cookiecutter ../Projects/project-configs\r\ndevplatform_project_name [infra-dev]: \r\nproject_name []: t\r\nproject_policy [default]: \r\nError: Unable to decode to JSON.\r\nproject_policy [default]: \r\nError: Unable to decode to JSON.\r\n```\n", "before_files": [{"content": "#!/usr/bin/env python\n\"\"\"cookiecutter distutils configuration.\"\"\"\nfrom setuptools import setup\n\nversion = \"2.0.0\"\n\nwith open('README.md', encoding='utf-8') as readme_file:\n readme = readme_file.read()\n\nrequirements = [\n 'binaryornot>=0.4.4',\n 'Jinja2>=2.7,<4.0.0',\n 'click>=7.0',\n 'pyyaml>=5.3.1',\n 'jinja2-time>=0.2.0',\n 'python-slugify>=4.0.0',\n 'requests>=2.23.0',\n]\n\nsetup(\n name='cookiecutter',\n version=version,\n description=(\n 'A command-line utility that creates projects from project '\n 'templates, e.g. creating a Python package project from a '\n 'Python package project template.'\n ),\n long_description=readme,\n long_description_content_type='text/markdown',\n author='Audrey Feldroy',\n author_email='[email protected]',\n url='https://github.com/cookiecutter/cookiecutter',\n packages=['cookiecutter'],\n package_dir={'cookiecutter': 'cookiecutter'},\n entry_points={'console_scripts': ['cookiecutter = cookiecutter.__main__:main']},\n include_package_data=True,\n python_requires='>=3.6',\n install_requires=requirements,\n license='BSD',\n zip_safe=False,\n classifiers=[\n \"Development Status :: 5 - Production/Stable\",\n \"Environment :: Console\",\n \"Intended Audience :: Developers\",\n \"Natural Language :: English\",\n \"License :: OSI Approved :: BSD License\",\n \"Programming Language :: Python :: 3 :: Only\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: 3.8\",\n 
\"Programming Language :: Python :: 3.9\",\n \"Programming Language :: Python :: Implementation :: CPython\",\n \"Programming Language :: Python :: Implementation :: PyPy\",\n \"Programming Language :: Python\",\n \"Topic :: Software Development\",\n ],\n keywords=[\n \"cookiecutter\",\n \"Python\",\n \"projects\",\n \"project templates\",\n \"Jinja2\",\n \"skeleton\",\n \"scaffolding\",\n \"project directory\",\n \"package\",\n \"packaging\",\n ],\n)\n", "path": "setup.py"}]} | 2,233 | 124 |
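The issue above reports that with click 8.0.0 the displayed placeholder string `'default'` reaches `process_json()` instead of the dict from `cookiecutter.json`, so decoding fails. Below is a minimal, click-free Python sketch of that failure and of one possible guard. `process_json_guarded` and the `DEFAULT_DISPLAY` sentinel are hypothetical names for illustration, not cookiecutter's API; the reference fix in this row instead pins `click>=7.0,<8.0.0`.

```python
import json

DEFAULT_DISPLAY = "default"  # hypothetical: the placeholder shown in the prompt


def process_json(user_value):
    """Decode a JSON object typed by the user; reject anything else."""
    try:
        user_dict = json.loads(user_value)
    except ValueError:
        # Same wording as the error shown in the issue output.
        raise ValueError("Unable to decode to JSON.") from None
    if not isinstance(user_dict, dict):
        raise ValueError("Requires JSON dict.")
    return user_dict


def process_json_guarded(user_value, default_value):
    """Hypothetical guard: map the display placeholder back to the real default."""
    if user_value == DEFAULT_DISPLAY:
        return default_value
    return process_json(user_value)


default = {"project_policy_example": "yes"}

# Feeding the placeholder straight into process_json reproduces the error.
try:
    process_json(DEFAULT_DISPLAY)
except ValueError as exc:
    print(exc)  # Unable to decode to JSON.

print(process_json_guarded(DEFAULT_DISPLAY, default))  # {'project_policy_example': 'yes'}
print(process_json_guarded('{"a": 1}', default))       # {'a': 1}
```

Pinning click avoids the behavior change entirely; a guard like this one would instead keep the prompt working when the placeholder is passed through the value processor.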

gh_patches_debug_3807 | rasdani/github-patches | git_diff | quantumlib__Cirq-3574 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Docs build is failing

Since the black formatter merge the RTD builds are failing with some weird pip error:

https://readthedocs.org/projects/cirq/builds/

Need to look into it and resolve it if the error is on our end or report it to the RTD team if it's on their end.

</issue>

<code>

[start of setup.py]

1 # Copyright 2018 The Cirq Developers
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     https://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 import io
16 import os
17 from setuptools import find_packages, setup
18
19 # This reads the __version__ variable from cirq/_version.py
20 __version__ = ''
21 exec(open('cirq/_version.py').read())
22
23 name = 'cirq'
24
25 description = (
26     'A framework for creating, editing, and invoking '
27     'Noisy Intermediate Scale Quantum (NISQ) circuits.'
28 )
29
30 # README file as long_description.
31 long_description = io.open('README.rst', encoding='utf-8').read()
32
33 # If CIRQ_PRE_RELEASE_VERSION is set then we update the version to this value.
34 # It is assumed that it ends with one of `.devN`, `.aN`, `.bN`, `.rcN` and hence
35 # it will be a pre-release version on PyPi. See
36 # https://packaging.python.org/guides/distributing-packages-using-setuptools/#pre-release-versioning
37 # for more details.
38 if 'CIRQ_PRE_RELEASE_VERSION' in os.environ:
39     __version__ = os.environ['CIRQ_PRE_RELEASE_VERSION']
40     long_description = (
41         "**This is a development version of Cirq and may be "
42         "unstable.**\n\n**For the latest stable release of Cirq "
43         "see**\n`here <https://pypi.org/project/cirq>`__.\n\n" + long_description
44     )
45
46 # Read in requirements
47 requirements = open('requirements.txt').readlines()
48 requirements = [r.strip() for r in requirements]
49 contrib_requirements = open('cirq/contrib/contrib-requirements.txt').readlines()
50 contrib_requirements = [r.strip() for r in contrib_requirements]
51 dev_requirements = open('dev_tools/conf/pip-list-dev-tools.txt').readlines()
52 dev_requirements = [r.strip() for r in dev_requirements]
53
54 cirq_packages = ['cirq'] + ['cirq.' + package for package in find_packages(where='cirq')]
55
56 # Sanity check
57 assert __version__, 'Version string cannot be empty'
58
59 setup(
60     name=name,
61     version=__version__,
62     url='http://github.com/quantumlib/cirq',
63     author='The Cirq Developers',
64     author_email='[email protected]',
65     python_requires=('>=3.6.0'),
66     install_requires=requirements,
67     extras_require={
68         'contrib': contrib_requirements,
69         'dev_env': dev_requirements + contrib_requirements,
70     },
71     license='Apache 2',
72     description=description,
73     long_description=long_description,
74     packages=cirq_packages,
75     package_data={
76         'cirq': ['py.typed'],
77         'cirq.google.api.v1': ['*.proto', '*.pyi'],
78         'cirq.google.api.v2': ['*.proto', '*.pyi'],
79         'cirq.protocols.json_test_data': ['*'],
80     },
81 )
82

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/setup.py b/setup.py

--- a/setup.py
+++ b/setup.py
@@ -62,7 +62,7 @@
     url='http://github.com/quantumlib/cirq',
     author='The Cirq Developers',
     author_email='[email protected]',
-    python_requires=('>=3.6.0'),
+    python_requires=('>=3.7.0'),
     install_requires=requirements,
     extras_require={
         'contrib': contrib_requirements,

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -62,7 +62,7 @@\n url='http://github.com/quantumlib/cirq',\n author='The Cirq Developers',\n author_email='[email protected]',\n- python_requires=('>=3.6.0'),\n+ python_requires=('>=3.7.0'),\n install_requires=requirements,\n extras_require={\n 'contrib': contrib_requirements,\n", "issue": "Docs build is failing\nSince the black formatter merge the RTD builds are failing with some weird pip error:\r\n\r\nhttps://readthedocs.org/projects/cirq/builds/\r\n\r\nNeed to look into it and resolve it if the error is on our end or report it to the RTD team if it's on their end.\n", "before_files": [{"content": "# Copyright 2018 The Cirq Developers\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# https://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport io\nimport os\nfrom setuptools import find_packages, setup\n\n# This reads the __version__ variable from cirq/_version.py\n__version__ = ''\nexec(open('cirq/_version.py').read())\n\nname = 'cirq'\n\ndescription = (\n 'A framework for creating, editing, and invoking '\n 'Noisy Intermediate Scale Quantum (NISQ) circuits.'\n)\n\n# README file as long_description.\nlong_description = io.open('README.rst', encoding='utf-8').read()\n\n# If CIRQ_PRE_RELEASE_VERSION is set then we update the version to this value.\n# It is assumed that it ends with one of `.devN`, `.aN`, `.bN`, `.rcN` and hence\n# it will be a pre-release version on PyPi. 
See\n# https://packaging.python.org/guides/distributing-packages-using-setuptools/#pre-release-versioning\n# for more details.\nif 'CIRQ_PRE_RELEASE_VERSION' in os.environ:\n __version__ = os.environ['CIRQ_PRE_RELEASE_VERSION']\n long_description = (\n \"**This is a development version of Cirq and may be \"\n \"unstable.**\\n\\n**For the latest stable release of Cirq \"\n \"see**\\n`here <https://pypi.org/project/cirq>`__.\\n\\n\" + long_description\n )\n\n# Read in requirements\nrequirements = open('requirements.txt').readlines()\nrequirements = [r.strip() for r in requirements]\ncontrib_requirements = open('cirq/contrib/contrib-requirements.txt').readlines()\ncontrib_requirements = [r.strip() for r in contrib_requirements]\ndev_requirements = open('dev_tools/conf/pip-list-dev-tools.txt').readlines()\ndev_requirements = [r.strip() for r in dev_requirements]\n\ncirq_packages = ['cirq'] + ['cirq.' + package for package in find_packages(where='cirq')]\n\n# Sanity check\nassert __version__, 'Version string cannot be empty'\n\nsetup(\n name=name,\n version=__version__,\n url='http://github.com/quantumlib/cirq',\n author='The Cirq Developers',\n author_email='[email protected]',\n python_requires=('>=3.6.0'),\n install_requires=requirements,\n extras_require={\n 'contrib': contrib_requirements,\n 'dev_env': dev_requirements + contrib_requirements,\n },\n license='Apache 2',\n description=description,\n long_description=long_description,\n packages=cirq_packages,\n package_data={\n 'cirq': ['py.typed'],\n 'cirq.google.api.v1': ['*.proto', '*.pyi'],\n 'cirq.google.api.v2': ['*.proto', '*.pyi'],\n 'cirq.protocols.json_test_data': ['*'],\n },\n)\n", "path": "setup.py"}]} | 1,480 | 107 |
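The reference fix for this row raises `python_requires` from `>=3.6.0` to `>=3.7.0`. Installers compare that specifier against the running interpreter before installing the package. The sketch below is a deliberately simplified, hypothetical check that understands only a single `>=X.Y.Z` clause; real tools implement the full PEP 440 specifier grammar (for example via the `packaging` library), so treat this as an illustration of the comparison, not of pip's code.

```python
import sys


def satisfies_min_python(spec, version=None):
    """Return True if `version` meets a single '>=X.Y.Z' requirement.

    Simplified sketch: only one '>=' clause is supported, unlike full PEP 440.
    """
    if not spec.startswith(">="):
        raise ValueError("only '>=X.Y.Z' specifiers are supported in this sketch")
    minimum = tuple(int(part) for part in spec[2:].split("."))
    if version is None:
        version = tuple(sys.version_info[:3])

    width = max(len(minimum), len(version))

    def pad(parts):
        # Pad with zeros so (3, 7) compares like (3, 7, 0).
        return tuple(parts) + (0,) * (width - len(parts))

    return pad(version) >= pad(minimum)


print(satisfies_min_python(">=3.6.0", (3, 6, 9)))  # True
print(satisfies_min_python(">=3.7.0", (3, 6, 9)))  # False
print(satisfies_min_python(">=3.7.0", (3, 7)))     # True
```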

gh_patches_debug_27198 | rasdani/github-patches | git_diff | python-poetry__poetry-1910 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

poetry complains about missing argument when using `--help`

<!--

Hi there! Thank you for discovering and submitting an issue.

Before you submit this; let's make sure of a few things.

Please make sure the following boxes are ticked if they are correct.

If not, please try and fulfill these first.

-->

<!-- Checked checkbox should look like this: [x] -->

- [x] I am on the [latest](https://github.com/sdispater/poetry/releases/latest) Poetry version.

- [x] I have searched the [issues](https://github.com/sdispater/poetry/issues) of this repo and believe that this is not a duplicate.

- [ ] If an exception occurs when executing a command, I executed it again in debug mode (`-vvv` option).

<!--

Once those are done, if you're able to fill in the following list with your information,

it'd be very helpful to whoever handles the issue.

-->

## Issue

<!-- Now feel free to write your issue, but please be descriptive! Thanks again 🙌 ❤️ -->

I don't know whether this is a poetry issue or cleo and if this problem arises in earlier versions.

When I type `poetry add --help` I receive the error message

```
Not enough arguments (missing: "name").
```

Similar for `poetry remove --help`

```
Not enough arguments (missing: "packages").
```

If I append any name I get the help page.

The expected behavior would be, that whenever I use `--help`, the help page should be displayed and mandatory arguments for sub command shouldn't be checked.

Saw this with version 1.0.0b6 and 1.0.0b7

</issue>

<code>

[start of poetry/console/config/application_config.py]

1 import logging
2
3 from typing import Any
4
5 from cleo.config import ApplicationConfig as BaseApplicationConfig
6 from clikit.api.application.application import Application
7 from clikit.api.args.raw_args import RawArgs
8 from clikit.api.event import PRE_HANDLE
9 from clikit.api.event import PreHandleEvent
10 from clikit.api.event import PreResolveEvent
11 from clikit.api.event.event_dispatcher import EventDispatcher
12 from clikit.api.formatter import Style
13 from clikit.api.io import Input
14 from clikit.api.io import InputStream
15 from clikit.api.io import Output
16 from clikit.api.io import OutputStream
17 from clikit.api.io.flags import DEBUG
18 from clikit.api.io.flags import VERBOSE
19 from clikit.api.io.flags import VERY_VERBOSE
20 from clikit.api.io.io import IO
21 from clikit.formatter import AnsiFormatter
22 from clikit.formatter import PlainFormatter
23 from clikit.io.input_stream import StandardInputStream
24 from clikit.io.output_stream import ErrorOutputStream
25 from clikit.io.output_stream import StandardOutputStream
26
27 from poetry.console.commands.command import Command
28 from poetry.console.commands.env_command import EnvCommand
29 from poetry.console.logging.io_formatter import IOFormatter
30 from poetry.console.logging.io_handler import IOHandler
31
32
33 class ApplicationConfig(BaseApplicationConfig):
34     def configure(self):
35         super(ApplicationConfig, self).configure()
36
37         self.add_style(Style("c1").fg("cyan"))
38         self.add_style(Style("info").fg("blue"))
39         self.add_style(Style("comment").fg("green"))
40         self.add_style(Style("error").fg("red").bold())
41         self.add_style(Style("warning").fg("yellow"))
42         self.add_style(Style("debug").fg("black").bold())
43
44         self.add_event_listener(PRE_HANDLE, self.register_command_loggers)
45         self.add_event_listener(PRE_HANDLE, self.set_env)
46
47     def register_command_loggers(
48         self, event, event_name, _
49     ):  # type: (PreHandleEvent, str, Any) -> None
50         command = event.command.config.handler
51         if not isinstance(command, Command):
52             return
53
54         io = event.io
55
56         loggers = ["poetry.packages.package", "poetry.utils.password_manager"]
57
58         loggers += command.loggers
59
60         handler = IOHandler(io)
61         handler.setFormatter(IOFormatter())
62
63         for logger in loggers:
64             logger = logging.getLogger(logger)
65
66             logger.handlers = [handler]
67             logger.propagate = False
68
69             level = logging.WARNING
70             if io.is_debug():
71                 level = logging.DEBUG
72             elif io.is_very_verbose() or io.is_verbose():
73                 level = logging.INFO
74
75             logger.setLevel(level)
76
77     def set_env(self, event, event_name, _):  # type: (PreHandleEvent, str, Any) -> None
78         from poetry.utils.env import EnvManager
79
80         command = event.command.config.handler  # type: EnvCommand
81         if not isinstance(command, EnvCommand):
82             return
83
84         io = event.io
85         poetry = command.poetry
86
87         env_manager = EnvManager(poetry)
88         env = env_manager.create_venv(io)
89
90         if env.is_venv() and io.is_verbose():
91             io.write_line("Using virtualenv: <comment>{}</>".format(env.path))
92
93         command.set_env(env)
94
95     def resolve_help_command(
96         self, event, event_name, dispatcher
97     ):  # type: (PreResolveEvent, str, EventDispatcher) -> None
98         args = event.raw_args
99         application = event.application
100
101         if args.has_option_token("-h") or args.has_option_token("--help"):
102             from clikit.api.resolver import ResolvedCommand
103
104             resolved_command = self.command_resolver.resolve(args, application)
105             # If the current command is the run one, skip option
106             # check and interpret them as part of the executed command
107             if resolved_command.command.name == "run":
108                 event.set_resolved_command(resolved_command)
109
110                 return event.stop_propagation()
111
112             command = application.get_command("help")
113
114             # Enable lenient parsing
115             parsed_args = command.parse(args, True)
116
117             event.set_resolved_command(ResolvedCommand(command, parsed_args))
118             event.stop_propagation()
119
120     def create_io(
121         self,
122         application,
123         args,
124         input_stream=None,
125         output_stream=None,
126         error_stream=None,
127     ):  # type: (Application, RawArgs, InputStream, OutputStream, OutputStream) -> IO
128         if input_stream is None:
129             input_stream = StandardInputStream()
130
131         if output_stream is None:
132             output_stream = StandardOutputStream()
133
134         if error_stream is None:
135             error_stream = ErrorOutputStream()
136
137         style_set = application.config.style_set
138
139         if output_stream.supports_ansi():
140             output_formatter = AnsiFormatter(style_set)
141         else:
142             output_formatter = PlainFormatter(style_set)
143
144         if error_stream.supports_ansi():
145             error_formatter = AnsiFormatter(style_set)
146         else:
147             error_formatter = PlainFormatter(style_set)
148
149         io = self.io_class(
150             Input(input_stream),
151             Output(output_stream, output_formatter),
152             Output(error_stream, error_formatter),
153         )
154
155         resolved_command = application.resolve_command(args)
156         # If the current command is the run one, skip option
157         # check and interpret them as part of the executed command
158         if resolved_command.command.name == "run":
159             return io
160
161         if args.has_option_token("--no-ansi"):
162             formatter = PlainFormatter(style_set)
163             io.output.set_formatter(formatter)
164             io.error_output.set_formatter(formatter)
165         elif args.has_option_token("--ansi"):
166             formatter = AnsiFormatter(style_set, True)
167             io.output.set_formatter(formatter)
168             io.error_output.set_formatter(formatter)
169
170         if args.has_option_token("-vvv") or self.is_debug():
171             io.set_verbosity(DEBUG)
172         elif args.has_option_token("-vv"):
173             io.set_verbosity(VERY_VERBOSE)
174         elif args.has_option_token("-v"):
175             io.set_verbosity(VERBOSE)
176
177         if args.has_option_token("--quiet") or args.has_option_token("-q"):
178             io.set_quiet(True)
179
180         if args.has_option_token("--no-interaction") or args.has_option_token("-n"):
181             io.set_interactive(False)
182
183         return io
184

[end of poetry/console/config/application_config.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/poetry/console/config/application_config.py b/poetry/console/config/application_config.py

--- a/poetry/console/config/application_config.py
+++ b/poetry/console/config/application_config.py
@@ -9,6 +9,7 @@
 from clikit.api.event import PreHandleEvent
 from clikit.api.event import PreResolveEvent
 from clikit.api.event.event_dispatcher import EventDispatcher
+from clikit.api.exceptions import CliKitException
 from clikit.api.formatter import Style
 from clikit.api.io import Input
 from clikit.api.io import InputStream
@@ -101,7 +102,16 @@
         if args.has_option_token("-h") or args.has_option_token("--help"):
             from clikit.api.resolver import ResolvedCommand
 
-            resolved_command = self.command_resolver.resolve(args, application)
+            try:
+                resolved_command = self.command_resolver.resolve(args, application)
+            except CliKitException:
+                # We weren't able to resolve the command,
+                # due to a parse error most likely,
+                # so we fall back on the default behavior
+                return super(ApplicationConfig, self).resolve_help_command(
+                    event, event_name, dispatcher
+                )
+
             # If the current command is the run one, skip option
             # check and interpret them as part of the executed command
             if resolved_command.command.name == "run":

| {"golden_diff": "diff --git a/poetry/console/config/application_config.py b/poetry/console/config/application_config.py\n--- a/poetry/console/config/application_config.py\n+++ b/poetry/console/config/application_config.py\n@@ -9,6 +9,7 @@\n from clikit.api.event import PreHandleEvent\n from clikit.api.event import PreResolveEvent\n from clikit.api.event.event_dispatcher import EventDispatcher\n+from clikit.api.exceptions import CliKitException\n from clikit.api.formatter import Style\n from clikit.api.io import Input\n from clikit.api.io import InputStream\n@@ -101,7 +102,16 @@\n if args.has_option_token(\"-h\") or args.has_option_token(\"--help\"):\n from clikit.api.resolver import ResolvedCommand\n \n- resolved_command = self.command_resolver.resolve(args, application)\n+ try:\n+ resolved_command = self.command_resolver.resolve(args, application)\n+ except CliKitException:\n+ # We weren't able to resolve the command,\n+ # due to a parse error most likely,\n+ # so we fall back on the default behavior\n+ return super(ApplicationConfig, self).resolve_help_command(\n+ event, event_name, dispatcher\n+ )\n+\n # If the current command is the run one, skip option\n # check and interpret them as part of the executed command\n if resolved_command.command.name == \"run\":\n", "issue": "poetry complains about missing argument when using `--help`\n<!--\r\n Hi there! 
Thank you for discovering and submitting an issue.\r\n\r\n Before you submit this; let's make sure of a few things.\r\n Please make sure the following boxes are ticked if they are correct.\r\n If not, please try and fulfill these first.\r\n-->\r\n\r\n<!-- Checked checkbox should look like this: [x] -->\r\n- [x] I am on the [latest](https://github.com/sdispater/poetry/releases/latest) Poetry version.\r\n- [x] I have searched the [issues](https://github.com/sdispater/poetry/issues) of this repo and believe that this is not a duplicate.\r\n- [ ] If an exception occurs when executing a command, I executed it again in debug mode (`-vvv` option).\r\n\r\n<!--\r\n Once those are done, if you're able to fill in the following list with your information,\r\n it'd be very helpful to whoever handles the issue.\r\n-->\r\n\r\n## Issue\r\n<!-- Now feel free to write your issue, but please be descriptive! Thanks again \ud83d\ude4c \u2764\ufe0f -->\r\n\r\nI don't know whether this is a poetry issue or cleo and if this problem arises in earlier versions.\r\n\r\nWhen I type `poetry add --help` I receive the error message\r\n\r\n```\r\nNot enough arguments (missing: \"name\").\r\n```\r\n\r\nSimilar for `poetry remove --help`\r\n\r\n```\r\nNot enough arguments (missing: \"packages\").\r\n```\r\n\r\nIf I append any name I get the help page.\r\n\r\nThe expected behavior would be, that whenever I use `--help`, the help page should be displayed and mandatory arguments for sub command shouldn't be checked.\r\n\r\nSaw this with version 1.0.0b6 and 1.0.0b7\n", "before_files": [{"content": "import logging\n\nfrom typing import Any\n\nfrom cleo.config import ApplicationConfig as BaseApplicationConfig\nfrom clikit.api.application.application import Application\nfrom clikit.api.args.raw_args import RawArgs\nfrom clikit.api.event import PRE_HANDLE\nfrom clikit.api.event import PreHandleEvent\nfrom clikit.api.event import PreResolveEvent\nfrom clikit.api.event.event_dispatcher import 
EventDispatcher\nfrom clikit.api.formatter import Style\nfrom clikit.api.io import Input\nfrom clikit.api.io import InputStream\nfrom clikit.api.io import Output\nfrom clikit.api.io import OutputStream\nfrom clikit.api.io.flags import DEBUG\nfrom clikit.api.io.flags import VERBOSE\nfrom clikit.api.io.flags import VERY_VERBOSE\nfrom clikit.api.io.io import IO\nfrom clikit.formatter import AnsiFormatter\nfrom clikit.formatter import PlainFormatter\nfrom clikit.io.input_stream import StandardInputStream\nfrom clikit.io.output_stream import ErrorOutputStream\nfrom clikit.io.output_stream import StandardOutputStream\n\nfrom poetry.console.commands.command import Command\nfrom poetry.console.commands.env_command import EnvCommand\nfrom poetry.console.logging.io_formatter import IOFormatter\nfrom poetry.console.logging.io_handler import IOHandler\n\n\nclass ApplicationConfig(BaseApplicationConfig):\n def configure(self):\n super(ApplicationConfig, self).configure()\n\n self.add_style(Style(\"c1\").fg(\"cyan\"))\n self.add_style(Style(\"info\").fg(\"blue\"))\n self.add_style(Style(\"comment\").fg(\"green\"))\n self.add_style(Style(\"error\").fg(\"red\").bold())\n self.add_style(Style(\"warning\").fg(\"yellow\"))\n self.add_style(Style(\"debug\").fg(\"black\").bold())\n\n self.add_event_listener(PRE_HANDLE, self.register_command_loggers)\n self.add_event_listener(PRE_HANDLE, self.set_env)\n\n def register_command_loggers(\n self, event, event_name, _\n ): # type: (PreHandleEvent, str, Any) -> None\n command = event.command.config.handler\n if not isinstance(command, Command):\n return\n\n io = event.io\n\n loggers = [\"poetry.packages.package\", \"poetry.utils.password_manager\"]\n\n loggers += command.loggers\n\n handler = IOHandler(io)\n handler.setFormatter(IOFormatter())\n\n for logger in loggers:\n logger = logging.getLogger(logger)\n\n logger.handlers = [handler]\n logger.propagate = False\n\n level = logging.WARNING\n if io.is_debug():\n level = logging.DEBUG\n elif 
io.is_very_verbose() or io.is_verbose():\n level = logging.INFO\n\n logger.setLevel(level)\n\n def set_env(self, event, event_name, _): # type: (PreHandleEvent, str, Any) -> None\n from poetry.utils.env import EnvManager\n\n command = event.command.config.handler # type: EnvCommand\n if not isinstance(command, EnvCommand):\n return\n\n io = event.io\n poetry = command.poetry\n\n env_manager = EnvManager(poetry)\n env = env_manager.create_venv(io)\n\n if env.is_venv() and io.is_verbose():\n io.write_line(\"Using virtualenv: <comment>{}</>\".format(env.path))\n\n command.set_env(env)\n\n def resolve_help_command(\n self, event, event_name, dispatcher\n ): # type: (PreResolveEvent, str, EventDispatcher) -> None\n args = event.raw_args\n application = event.application\n\n if args.has_option_token(\"-h\") or args.has_option_token(\"--help\"):\n from clikit.api.resolver import ResolvedCommand\n\n resolved_command = self.command_resolver.resolve(args, application)\n # If the current command is the run one, skip option\n # check and interpret them as part of the executed command\n if resolved_command.command.name == \"run\":\n event.set_resolved_command(resolved_command)\n\n return event.stop_propagation()\n\n command = application.get_command(\"help\")\n\n # Enable lenient parsing\n parsed_args = command.parse(args, True)\n\n event.set_resolved_command(ResolvedCommand(command, parsed_args))\n event.stop_propagation()\n\n def create_io(\n self,\n application,\n args,\n input_stream=None,\n output_stream=None,\n error_stream=None,\n ): # type: (Application, RawArgs, InputStream, OutputStream, OutputStream) -> IO\n if input_stream is None:\n input_stream = StandardInputStream()\n\n if output_stream is None:\n output_stream = StandardOutputStream()\n\n if error_stream is None:\n error_stream = ErrorOutputStream()\n\n style_set = application.config.style_set\n\n if output_stream.supports_ansi():\n output_formatter = AnsiFormatter(style_set)\n else:\n output_formatter = 
PlainFormatter(style_set)\n\n if error_stream.supports_ansi():\n error_formatter = AnsiFormatter(style_set)\n else:\n error_formatter = PlainFormatter(style_set)\n\n io = self.io_class(\n Input(input_stream),\n Output(output_stream, output_formatter),\n Output(error_stream, error_formatter),\n )\n\n resolved_command = application.resolve_command(args)\n # If the current command is the run one, skip option\n # check and interpret them as part of the executed command\n if resolved_command.command.name == \"run\":\n return io\n\n if args.has_option_token(\"--no-ansi\"):\n formatter = PlainFormatter(style_set)\n io.output.set_formatter(formatter)\n io.error_output.set_formatter(formatter)\n elif args.has_option_token(\"--ansi\"):\n formatter = AnsiFormatter(style_set, True)\n io.output.set_formatter(formatter)\n io.error_output.set_formatter(formatter)\n\n if args.has_option_token(\"-vvv\") or self.is_debug():\n io.set_verbosity(DEBUG)\n elif args.has_option_token(\"-vv\"):\n io.set_verbosity(VERY_VERBOSE)\n elif args.has_option_token(\"-v\"):\n io.set_verbosity(VERBOSE)\n\n if args.has_option_token(\"--quiet\") or args.has_option_token(\"-q\"):\n io.set_quiet(True)\n\n if args.has_option_token(\"--no-interaction\") or args.has_option_token(\"-n\"):\n io.set_interactive(False)\n\n return io\n", "path": "poetry/console/config/application_config.py"}]} | 2,705 | 308 |

gh_patches_debug_2706 | rasdani/github-patches | git_diff | fossasia__open-event-server-4302 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.
<issue>
Custom-forms: Change data.type in custom-form
**I'm submitting a ...** (check one with "x")
- [x] bug report
- [ ] feature request
- [ ] support request => Please do not submit support requests here, instead ask your query in out Gitter channel at https://gitter.im/fossasia/open-event-orga-server

**Current behavior:**
The type attribute is `custom_form` which leads to error 409 while making a request after #4300

**Expected behavior:**
The type attribute should be `custom-form`

@enigmaeth Can you please check?
</issue>

<code>
[start of app/api/custom_forms.py]
1 from flask_rest_jsonapi import ResourceDetail, ResourceList, ResourceRelationship
2 from marshmallow_jsonapi.flask import Schema, Relationship
3 from marshmallow_jsonapi import fields
4 import marshmallow.validate as validate
5 from app.api.helpers.permissions import jwt_required
6 from flask_rest_jsonapi.exceptions import ObjectNotFound
7
8 from app.api.bootstrap import api
9 from app.api.helpers.utilities import dasherize
10 from app.models import db
11 from app.models.custom_form import CustomForms
12 from app.models.event import Event
13 from app.api.helpers.db import safe_query
14 from app.api.helpers.utilities import require_relationship
15 from app.api.helpers.permission_manager import has_access
16 from app.api.helpers.query import event_query
17
18
19 class CustomFormSchema(Schema):
20     """
21     API Schema for Custom Forms database model
22     """
23     class Meta:
24         """
25         Meta class for CustomForm Schema
26         """
27         type_ = 'custom_form'
28         self_view = 'v1.custom_form_detail'
29         self_view_kwargs = {'id': '<id>'}
30         inflect = dasherize
31
32     id = fields.Integer(dump_only=True)
33     field_identifier = fields.Str(required=True)
34     form = fields.Str(required=True)
35     type = fields.Str(default="text", validate=validate.OneOf(
36         choices=["text", "checkbox", "select", "file", "image"]))
37     is_required = fields.Boolean(default=False)
38     is_included = fields.Boolean(default=False)
39     is_fixed = fields.Boolean(default=False)
40     event = Relationship(attribute='event',
41                          self_view='v1.custom_form_event',
42                          self_view_kwargs={'id': '<id>'},
43                          related_view='v1.event_detail',
44                          related_view_kwargs={'custom_form_id': '<id>'},
45                          schema='EventSchema',
46                          type_='event')
47
48
49 class CustomFormListPost(ResourceList):
50     """
51     Create and List Custom Forms
52     """
53
54     def before_post(self, args, kwargs, data):
55         """
56         method to check for required relationship with event
57         :param args:
58         :param kwargs:
59         :param data:
60         :return:
61         """
62         require_relationship(['event'], data)
63         if not has_access('is_coorganizer', event_id=data['event']):
64             raise ObjectNotFound({'parameter': 'event_id'},
65                                  "Event: {} not found".format(data['event_id']))
66
67     schema = CustomFormSchema
68     methods = ['POST', ]
69     data_layer = {'session': db.session,
70                   'model': CustomForms
71                   }
72
73
74 class CustomFormList(ResourceList):
75     """
76     Create and List Custom Forms
77     """
78     def query(self, view_kwargs):
79         """
80         query method for different view_kwargs
81         :param view_kwargs:
82         :return:
83         """
84         query_ = self.session.query(CustomForms)
85         query_ = event_query(self, query_, view_kwargs)
86         return query_
87
88     view_kwargs = True
89     decorators = (jwt_required, )
90     methods = ['GET', ]
91     schema = CustomFormSchema
92     data_layer = {'session': db.session,
93                   'model': CustomForms,
94                   'methods': {
95                       'query': query
96                   }}
97
98
99 class CustomFormDetail(ResourceDetail):
100     """
101     CustomForm Resource
102     """
103
104     def before_get_object(self, view_kwargs):
105         """
106         before get method
107         :param view_kwargs:
108         :return:
109         """
110         event = None
111         if view_kwargs.get('event_id'):
112             event = safe_query(self, Event, 'id', view_kwargs['event_id'], 'event_id')
113         elif view_kwargs.get('event_identifier'):
114             event = safe_query(self, Event, 'identifier', view_kwargs['event_identifier'], 'event_identifier')
115
116         if event:
117             custom_form = safe_query(self, CustomForms, 'event_id', event.id, 'event_id')
118             view_kwargs['id'] = custom_form.id
119
120     decorators = (api.has_permission('is_coorganizer', fetch='event_id',
121                                      fetch_as="event_id", model=CustomForms, methods="PATCH,DELETE"), )
122     schema = CustomFormSchema
123     data_layer = {'session': db.session,
124                   'model': CustomForms}
125
126
127 class CustomFormRelationshipRequired(ResourceRelationship):
128     """
129     CustomForm Relationship (Required)
130     """
131     decorators = (api.has_permission('is_coorganizer', fetch='event_id',
132                                      fetch_as="event_id", model=CustomForms, methods="PATCH"),)
133     methods = ['GET', 'PATCH']
134     schema = CustomFormSchema
135     data_layer = {'session': db.session,
136                   'model': CustomForms}
137
[end of app/api/custom_forms.py]
</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.
<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch> | diff --git a/app/api/custom_forms.py b/app/api/custom_forms.py
--- a/app/api/custom_forms.py
+++ b/app/api/custom_forms.py
@@ -24,7 +24,7 @@
         """
         Meta class for CustomForm Schema
         """
-        type_ = 'custom_form'
+        type_ = 'custom-form'
         self_view = 'v1.custom_form_detail'
         self_view_kwargs = {'id': '<id>'}
         inflect = dasherize

| {"golden_diff": "diff --git a/app/api/custom_forms.py b/app/api/custom_forms.py\n--- a/app/api/custom_forms.py\n+++ b/app/api/custom_forms.py\n@@ -24,7 +24,7 @@\n \"\"\"\n Meta class for CustomForm Schema\n \"\"\"\n- type_ = 'custom_form'\n+ type_ = 'custom-form'\n self_view = 'v1.custom_form_detail'\n self_view_kwargs = {'id': '<id>'}\n inflect = dasherize\n", "issue": "Custom-forms: Change data.type in custom-form\n**I'm submitting a ...** (check one with \"x\")\r\n- [x] bug report\r\n- [ ] feature request\r\n- [ ] support request => Please do not submit support requests here, instead ask your query in out Gitter channel at https://gitter.im/fossasia/open-event-orga-server\r\n\r\n**Current behavior:**\r\nThe type attribute is `custom_form` which leads to error 409 while making a request after #4300 \r\n\r\n**Expected behavior:**\r\nThe type attribute should be `custom-form` \r\n\r\n@enigmaeth Can you please check?\n", "before_files": [{"content": "from flask_rest_jsonapi import ResourceDetail, ResourceList, ResourceRelationship\nfrom marshmallow_jsonapi.flask import Schema, Relationship\nfrom marshmallow_jsonapi import fields\nimport marshmallow.validate as validate\nfrom app.api.helpers.permissions import jwt_required\nfrom flask_rest_jsonapi.exceptions import ObjectNotFound\n\nfrom app.api.bootstrap import api\nfrom app.api.helpers.utilities import dasherize\nfrom app.models import db\nfrom app.models.custom_form import CustomForms\nfrom app.models.event import Event\nfrom app.api.helpers.db import safe_query\nfrom app.api.helpers.utilities import require_relationship\nfrom app.api.helpers.permission_manager import has_access\nfrom app.api.helpers.query import event_query\n\n\nclass CustomFormSchema(Schema):\n \"\"\"\n API Schema for Custom Forms database model\n \"\"\"\n class Meta:\n \"\"\"\n Meta class for CustomForm Schema\n \"\"\"\n type_ = 'custom_form'\n self_view = 'v1.custom_form_detail'\n self_view_kwargs = {'id': '<id>'}\n inflect = dasherize\n\n 
id = fields.Integer(dump_only=True)\n field_identifier = fields.Str(required=True)\n form = fields.Str(required=True)\n type = fields.Str(default=\"text\", validate=validate.OneOf(\n choices=[\"text\", \"checkbox\", \"select\", \"file\", \"image\"]))\n is_required = fields.Boolean(default=False)\n is_included = fields.Boolean(default=False)\n is_fixed = fields.Boolean(default=False)\n event = Relationship(attribute='event',\n self_view='v1.custom_form_event',\n self_view_kwargs={'id': '<id>'},\n related_view='v1.event_detail',\n related_view_kwargs={'custom_form_id': '<id>'},\n schema='EventSchema',\n type_='event')\n\n\nclass CustomFormListPost(ResourceList):\n \"\"\"\n Create and List Custom Forms\n \"\"\"\n\n def before_post(self, args, kwargs, data):\n \"\"\"\n method to check for required relationship with event\n :param args:\n :param kwargs:\n :param data:\n :return:\n \"\"\"\n require_relationship(['event'], data)\n if not has_access('is_coorganizer', event_id=data['event']):\n raise ObjectNotFound({'parameter': 'event_id'},\n \"Event: {} not found\".format(data['event_id']))\n\n schema = CustomFormSchema\n methods = ['POST', ]\n data_layer = {'session': db.session,\n 'model': CustomForms\n }\n\n\nclass CustomFormList(ResourceList):\n \"\"\"\n Create and List Custom Forms\n \"\"\"\n def query(self, view_kwargs):\n \"\"\"\n query method for different view_kwargs\n :param view_kwargs:\n :return:\n \"\"\"\n query_ = self.session.query(CustomForms)\n query_ = event_query(self, query_, view_kwargs)\n return query_\n\n view_kwargs = True\n decorators = (jwt_required, )\n methods = ['GET', ]\n schema = CustomFormSchema\n data_layer = {'session': db.session,\n 'model': CustomForms,\n 'methods': {\n 'query': query\n }}\n\n\nclass CustomFormDetail(ResourceDetail):\n \"\"\"\n CustomForm Resource\n \"\"\"\n\n def before_get_object(self, view_kwargs):\n \"\"\"\n before get method\n :param view_kwargs:\n :return:\n \"\"\"\n event = None\n if 
view_kwargs.get('event_id'):\n event = safe_query(self, Event, 'id', view_kwargs['event_id'], 'event_id')\n elif view_kwargs.get('event_identifier'):\n event = safe_query(self, Event, 'identifier', view_kwargs['event_identifier'], 'event_identifier')\n\n if event:\n custom_form = safe_query(self, CustomForms, 'event_id', event.id, 'event_id')\n view_kwargs['id'] = custom_form.id\n\n decorators = (api.has_permission('is_coorganizer', fetch='event_id',\n fetch_as=\"event_id\", model=CustomForms, methods=\"PATCH,DELETE\"), )\n schema = CustomFormSchema\n data_layer = {'session': db.session,\n 'model': CustomForms}\n\n\nclass CustomFormRelationshipRequired(ResourceRelationship):\n \"\"\"\n CustomForm Relationship (Required)\n \"\"\"\n decorators = (api.has_permission('is_coorganizer', fetch='event_id',\n fetch_as=\"event_id\", model=CustomForms, methods=\"PATCH\"),)\n methods = ['GET', 'PATCH']\n schema = CustomFormSchema\n data_layer = {'session': db.session,\n 'model': CustomForms}\n", "path": "app/api/custom_forms.py"}]} | 1,928 | 105 |
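The 409 in the issue above follows from JSON:API type matching: the specification requires a server to answer `409 Conflict` when a request's resource object `type` does not match what the endpoint accepts, so the schema's `type_` has to equal the dash-cased name clients send. The sketch below illustrates that check in isolation; `dasherize` here is a hypothetical stand-in for `app.api.helpers.utilities.dasherize` (whose implementation is not shown in this row), and `check_type` is a simplified illustration, not the flask-rest-jsonapi code path.

```python
def dasherize(text):
    """Hypothetical stand-in: convert snake_case to dash-case."""
    return text.replace("_", "-")


def check_type(payload, expected_type):
    """Return an HTTP status code: 409 if data.type mismatches, else 200."""
    received = payload.get("data", {}).get("type")
    return 200 if received == expected_type else 409


expected = dasherize("custom_form")  # "custom-form"
print(check_type({"data": {"type": "custom-form"}}, expected))  # 200
print(check_type({"data": {"type": "custom_form"}}, expected))  # 409
```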

gh_patches_debug_30587 | rasdani/github-patches | git_diff | networkx__networkx-2618 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.
<issue>
`networkx.version` shadows any other module named `version` if imported first
Steps to reproduce:

```
$ pip freeze | grep networkx
networkx==1.11
$ touch version.py
$ python -c 'import version; print(version)'
<module 'version' from '/Users/ben/scratch/version.py'>
$ python -c 'import networkx; import version; print(version)'
<module 'version' from '/Users/ben/.virtualenvs/personal/lib/python3.6/site-packages/networkx/version.py'>
```

Reading the code, it looks like the `release` module is adding the networkx package to `sys.path`, importing version and deleting it again?
</issue>

<code>
[start of networkx/release.py]
1 """Release data for NetworkX.
2
3 When NetworkX is imported a number of steps are followed to determine
4 the version information.
5
6  1) If the release is not a development release (dev=False), then version
7  information is read from version.py, a file containing statically
8  defined version information. This file should exist on every
9  downloadable release of NetworkX since setup.py creates it during
10  packaging/installation. However, version.py might not exist if one
11  is running NetworkX from the mercurial repository. In the event that
12  version.py does not exist, then no vcs information will be available.
13
14  2) If the release is a development release, then version information
15  is read dynamically, when possible. If no dynamic information can be
16  read, then an attempt is made to read the information from version.py.
17  If version.py does not exist, then no vcs information will be available.
18
19 Clarification:
20  version.py is created only by setup.py
21
22 When setup.py creates version.py, it does so before packaging/installation.
23 So the created file is included in the source distribution. When a user
24 downloads a tar.gz file and extracts the files, the files will not be in a
25 live version control repository. So when the user runs setup.py to install
26 NetworkX, we must make sure write_versionfile() does not overwrite the
27 revision information contained in the version.py that was included in the
28 tar.gz file. This is why write_versionfile() includes an early escape.
29
30 """
31
32 # Copyright (C) 2004-2017 by
33 # Aric Hagberg <[email protected]>
34 # Dan Schult <[email protected]>
35 # Pieter Swart <[email protected]>
36 # All rights reserved.
37 # BSD license.
38
39 from __future__ import absolute_import
40
41 import os
42 import sys
43 import time
44 import datetime
45
46 basedir = os.path.abspath(os.path.split(__file__)[0])
47
48
49 def write_versionfile():
50     """Creates a static file containing version information."""
51     versionfile = os.path.join(basedir, 'version.py')
52
53     text = '''"""
54 Version information for NetworkX, created during installation.
55
56 Do not add this file to the repository.
57
58 """
59
60 import datetime
61
62 version = %(version)r
63 date = %(date)r
64
65 # Was NetworkX built from a development version? If so, remember that the major
66 # and minor versions reference the "target" (rather than "current") release.
67 dev = %(dev)r
68
69 # Format: (name, major, min, revision)
70 version_info = %(version_info)r
71
72 # Format: a 'datetime.datetime' instance
73 date_info = %(date_info)r
74
75 # Format: (vcs, vcs_tuple)
76 vcs_info = %(vcs_info)r
77
78 '''
79
80     # Try to update all information
81     date, date_info, version, version_info, vcs_info = get_info(dynamic=True)
82
83     def writefile():
84         fh = open(versionfile, 'w')
85         subs = {
86             'dev': dev,
87             'version': version,
88             'version_info': version_info,
89             'date': date,
90             'date_info': date_info,
91             'vcs_info': vcs_info
92         }
93         fh.write(text % subs)
94         fh.close()
95
96     if vcs_info[0] == 'mercurial':
97         # Then, we want to update version.py.
98         writefile()
99     else:
100         if os.path.isfile(versionfile):
101             # This is *good*, and the most likely place users will be when
102             # running setup.py. We do not want to overwrite version.py.
103             # Grab the version so that setup can use it.
104             sys.path.insert(0, basedir)
105             from version import version
106             del sys.path[0]
107         else:
108             # This is *bad*. It means the user might have a tarball that
109             # does not include version.py. Let this error raise so we can
110             # fix the tarball.
111             ##raise Exception('version.py not found!')
112
113             # We no longer require that prepared tarballs include a version.py
114             # So we use the possibly trunctated value from get_info()
115             # Then we write a new file.
116             writefile()
117
118     return version
119
120
121 def get_revision():
122     """Returns revision and vcs information, dynamically obtained."""
123     vcs, revision, tag = None, None, None
124
125     gitdir = os.path.join(basedir, '..', '.git')
126
127     if os.path.isdir(gitdir):
128         vcs = 'git'
129         # For now, we are not bothering with revision and tag.
130
131     vcs_info = (vcs, (revision, tag))
132
133     return revision, vcs_info
134
135
136 def get_info(dynamic=True):
137     # Date information
138     date_info = datetime.datetime.now()
139     date = time.asctime(date_info.timetuple())
140
141     revision, version, version_info, vcs_info = None, None, None, None
142
143     import_failed = False
144     dynamic_failed = False
145
146     if dynamic:
147         revision, vcs_info = get_revision()
148         if revision is None:
149             dynamic_failed = True
150
151     if dynamic_failed or not dynamic:
152         # This is where most final releases of NetworkX will be.
153         # All info should come from version.py. If it does not exist, then
154         # no vcs information will be provided.
155         sys.path.insert(0, basedir)
156         try:
157             from version import date, date_info, version, version_info, vcs_info
158         except ImportError:
159             import_failed = True
160             vcs_info = (None, (None, None))
161         else:
162             revision = vcs_info[1][0]
163         del sys.path[0]
164
165     if import_failed or (dynamic and not dynamic_failed):
166         # We are here if:
167         # we failed to determine static versioning info, or
168         # we successfully obtained dynamic revision info
169         version = ''.join([str(major), '.', str(minor)])
170         if dev:
171             version += '.dev_' + date_info.strftime("%Y%m%d%H%M%S")
172         version_info = (name, major, minor, revision)
173
174     return date, date_info, version, version_info, vcs_info
175
176
177 # Version information
178 name = 'networkx'
179 major = "2"
180 minor = "0"
181
182
183 # Declare current release as a development release.
184 # Change to False before tagging a release; then change back.
185 dev = True
186
187
188 description = "Python package for creating and manipulating graphs and networks"
189
190 long_description = \
191     """
192 NetworkX is a Python package for the creation, manipulation, and
193 study of the structure, dynamics, and functions of complex networks.
194
195 """
196 license = 'BSD'
197 authors = {'Hagberg': ('Aric Hagberg', '[email protected]'),
198            'Schult': ('Dan Schult', '[email protected]'),
199            'Swart': ('Pieter Swart', '[email protected]')
200            }
201 maintainer = "NetworkX Developers"
202 maintainer_email = "[email protected]"
203 url = 'http://networkx.github.io/'
204 download_url = 'https://pypi.python.org/pypi/networkx/'
205 platforms = ['Linux', 'Mac OSX', 'Windows', 'Unix']
206 keywords = ['Networks', 'Graph Theory', 'Mathematics',
207             'network', 'graph', 'discrete mathematics', 'math']
208 classifiers = [
209     'Development Status :: 5 - Production/Stable',
210     'Intended Audience :: Developers',
211     'Intended Audience :: Science/Research',
212     'License :: OSI Approved :: BSD License',
213     'Operating System :: OS Independent',
214     'Programming Language :: Python :: 2',
215     'Programming Language :: Python :: 2.7',
216     'Programming Language :: Python :: 3',
217     'Programming Language :: Python :: 3.4',
218     'Programming Language :: Python :: 3.5',
219     'Programming Language :: Python :: 3.6',
220     'Topic :: Software Development :: Libraries :: Python Modules',
221     'Topic :: Scientific/Engineering :: Bio-Informatics',
222     'Topic :: Scientific/Engineering :: Information Analysis',
223     'Topic :: Scientific/Engineering :: Mathematics',
224     'Topic :: Scientific/Engineering :: Physics']
225
226 date, date_info, version, version_info, vcs_info = get_info()
227
228 if __name__ == '__main__':
229     # Write versionfile for nightly snapshots.
230     write_versionfile()
231
[end of networkx/release.py]
</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.
<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch> | diff --git a/networkx/release.py b/networkx/release.py
--- a/networkx/release.py
+++ b/networkx/release.py
@@ -101,9 +101,9 @@
             # This is *good*, and the most likely place users will be when
             # running setup.py. We do not want to overwrite version.py.
             # Grab the version so that setup can use it.
-            sys.path.insert(0, basedir)
+            #sys.path.insert(0, basedir)
             from version import version
-            del sys.path[0]
+            #del sys.path[0]
         else:
             # This is *bad*. It means the user might have a tarball that
             # does not include version.py. Let this error raise so we can
@@ -152,7 +152,7 @@
         # This is where most final releases of NetworkX will be.
         # All info should come from version.py. If it does not exist, then
         # no vcs information will be provided.
-        sys.path.insert(0, basedir)
+        #sys.path.insert(0, basedir)
         try:
             from version import date, date_info, version, version_info, vcs_info
         except ImportError:
@@ -160,7 +160,7 @@
             vcs_info = (None, (None, None))
         else:
             revision = vcs_info[1][0]
-        del sys.path[0]
+        #del sys.path[0]
 
     if import_failed or (dynamic and not dynamic_failed):
         # We are here if:

| {"golden_diff": "diff --git a/networkx/release.py b/networkx/release.py\n--- a/networkx/release.py\n+++ b/networkx/release.py\n@@ -101,9 +101,9 @@\n # This is *good*, and the most likely place users will be when\n # running setup.py. We do not want to overwrite version.py.\n # Grab the version so that setup can use it.\n- sys.path.insert(0, basedir)\n+ #sys.path.insert(0, basedir)\n from version import version\n- del sys.path[0]\n+ #del sys.path[0]\n else:\n # This is *bad*. It means the user might have a tarball that\n # does not include version.py. Let this error raise so we can\n@@ -152,7 +152,7 @@\n # This is where most final releases of NetworkX will be.\n # All info should come from version.py. If it does not exist, then\n # no vcs information will be provided.\n- sys.path.insert(0, basedir)\n+ #sys.path.insert(0, basedir)\n try:\n from version import date, date_info, version, version_info, vcs_info\n except ImportError:\n@@ -160,7 +160,7 @@\n vcs_info = (None, (None, None))\n else:\n revision = vcs_info[1][0]\n- del sys.path[0]\n+ #del sys.path[0]\n \n if import_failed or (dynamic and not dynamic_failed):\n # We are here if:\n", "issue": "`networkx.version` shadows any other module named `version` if imported first\nSteps to reproduce:\r\n\r\n```\r\n$ pip freeze | grep networkx\r\nnetworkx==1.11\r\n$ touch version.py\r\n$ python -c 'import version; print(version)'\r\n<module 'version' from '/Users/ben/scratch/version.py'>\r\n$ python -c 'import networkx; import version; print(version)'\r\n<module 'version' from '/Users/ben/.virtualenvs/personal/lib/python3.6/site-packages/networkx/version.py'>\r\n```\r\n\r\nReading the code, it looks like the `release` module is adding the networkx package to `sys.path`, importing version and deleting it again?\n", "before_files": [{"content": "\"\"\"Release data for NetworkX.\n\nWhen NetworkX is imported a number of steps are followed to determine\nthe version information.\n\n 1) If the release is not a development 
release (dev=False), then version\n information is read from version.py, a file containing statically\n defined version information. This file should exist on every\n downloadable release of NetworkX since setup.py creates it during\n packaging/installation. However, version.py might not exist if one\n is running NetworkX from the mercurial repository. In the event that\n version.py does not exist, then no vcs information will be available.\n\n 2) If the release is a development release, then version information\n is read dynamically, when possible. If no dynamic information can be\n read, then an attempt is made to read the information from version.py.\n If version.py does not exist, then no vcs information will be available.\n\nClarification:\n version.py is created only by setup.py\n\nWhen setup.py creates version.py, it does so before packaging/installation.\nSo the created file is included in the source distribution. When a user\ndownloads a tar.gz file and extracts the files, the files will not be in a\nlive version control repository. So when the user runs setup.py to install\nNetworkX, we must make sure write_versionfile() does not overwrite the\nrevision information contained in the version.py that was included in the\ntar.gz file. 
This is why write_versionfile() includes an early escape.\n\n\"\"\"\n\n# Copyright (C) 2004-2017 by\n# Aric Hagberg <[email protected]>\n# Dan Schult <[email protected]>\n# Pieter Swart <[email protected]>\n# All rights reserved.\n# BSD license.\n\nfrom __future__ import absolute_import\n\nimport os\nimport sys\nimport time\nimport datetime\n\nbasedir = os.path.abspath(os.path.split(__file__)[0])\n\n\ndef write_versionfile():\n \"\"\"Creates a static file containing version information.\"\"\"\n versionfile = os.path.join(basedir, 'version.py')\n\n text = '''\"\"\"\nVersion information for NetworkX, created during installation.\n\nDo not add this file to the repository.\n\n\"\"\"\n\nimport datetime\n\nversion = %(version)r\ndate = %(date)r\n\n# Was NetworkX built from a development version? If so, remember that the major\n# and minor versions reference the \"target\" (rather than \"current\") release.\ndev = %(dev)r\n\n# Format: (name, major, min, revision)\nversion_info = %(version_info)r\n\n# Format: a 'datetime.datetime' instance\ndate_info = %(date_info)r\n\n# Format: (vcs, vcs_tuple)\nvcs_info = %(vcs_info)r\n\n'''\n\n # Try to update all information\n date, date_info, version, version_info, vcs_info = get_info(dynamic=True)\n\n def writefile():\n fh = open(versionfile, 'w')\n subs = {\n 'dev': dev,\n 'version': version,\n 'version_info': version_info,\n 'date': date,\n 'date_info': date_info,\n 'vcs_info': vcs_info\n }\n fh.write(text % subs)\n fh.close()\n\n if vcs_info[0] == 'mercurial':\n # Then, we want to update version.py.\n writefile()\n else:\n if os.path.isfile(versionfile):\n # This is *good*, and the most likely place users will be when\n # running setup.py. We do not want to overwrite version.py.\n # Grab the version so that setup can use it.\n sys.path.insert(0, basedir)\n from version import version\n del sys.path[0]\n else:\n # This is *bad*. It means the user might have a tarball that\n # does not include version.py. 
Let this error raise so we can\n # fix the tarball.\n ##raise Exception('version.py not found!')\n\n # We no longer require that prepared tarballs include a version.py\n # So we use the possibly trunctated value from get_info()\n # Then we write a new file.\n writefile()\n\n return version\n\n\ndef get_revision():\n \"\"\"Returns revision and vcs information, dynamically obtained.\"\"\"\n vcs, revision, tag = None, None, None\n\n gitdir = os.path.join(basedir, '..', '.git')\n\n if os.path.isdir(gitdir):\n vcs = 'git'\n # For now, we are not bothering with revision and tag.\n\n vcs_info = (vcs, (revision, tag))\n\n return revision, vcs_info\n\n\ndef get_info(dynamic=True):\n # Date information\n date_info = datetime.datetime.now()\n date = time.asctime(date_info.timetuple())\n\n revision, version, version_info, vcs_info = None, None, None, None\n\n import_failed = False\n dynamic_failed = False\n\n if dynamic:\n revision, vcs_info = get_revision()\n if revision is None:\n dynamic_failed = True\n\n if dynamic_failed or not dynamic:\n # This is where most final releases of NetworkX will be.\n # All info should come from version.py. 
If it does not exist, then\n # no vcs information will be provided.\n sys.path.insert(0, basedir)\n try:\n from version import date, date_info, version, version_info, vcs_info\n except ImportError:\n import_failed = True\n vcs_info = (None, (None, None))\n else:\n revision = vcs_info[1][0]\n del sys.path[0]\n\n if import_failed or (dynamic and not dynamic_failed):\n # We are here if:\n # we failed to determine static versioning info, or\n # we successfully obtained dynamic revision info\n version = ''.join([str(major), '.', str(minor)])\n if dev:\n version += '.dev_' + date_info.strftime(\"%Y%m%d%H%M%S\")\n version_info = (name, major, minor, revision)\n\n return date, date_info, version, version_info, vcs_info\n\n\n# Version information\nname = 'networkx'\nmajor = \"2\"\nminor = \"0\"\n\n\n# Declare current release as a development release.\n# Change to False before tagging a release; then change back.\ndev = True\n\n\ndescription = \"Python package for creating and manipulating graphs and networks\"\n\nlong_description = \\\n \"\"\"\nNetworkX is a Python package for the creation, manipulation, and\nstudy of the structure, dynamics, and functions of complex networks.\n\n\"\"\"\nlicense = 'BSD'\nauthors = {'Hagberg': ('Aric Hagberg', '[email protected]'),\n 'Schult': ('Dan Schult', '[email protected]'),\n 'Swart': ('Pieter Swart', '[email protected]')\n }\nmaintainer = \"NetworkX Developers\"\nmaintainer_email = \"[email protected]\"\nurl = 'http://networkx.github.io/'\ndownload_url = 'https://pypi.python.org/pypi/networkx/'\nplatforms = ['Linux', 'Mac OSX', 'Windows', 'Unix']\nkeywords = ['Networks', 'Graph Theory', 'Mathematics',\n 'network', 'graph', 'discrete mathematics', 'math']\nclassifiers = [\n 'Development Status :: 5 - Production/Stable',\n 'Intended Audience :: Developers',\n 'Intended Audience :: Science/Research',\n 'License :: OSI Approved :: BSD License',\n 'Operating System :: OS Independent',\n 'Programming Language :: Python :: 2',\n 'Programming 
Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3',\n 'Programming Language :: Python :: 3.4',\n 'Programming Language :: Python :: 3.5',\n 'Programming Language :: Python :: 3.6',\n 'Topic :: Software Development :: Libraries :: Python Modules',\n 'Topic :: Scientific/Engineering :: Bio-Informatics',\n 'Topic :: Scientific/Engineering :: Information Analysis',\n 'Topic :: Scientific/Engineering :: Mathematics',\n 'Topic :: Scientific/Engineering :: Physics']\n\ndate, date_info, version, version_info, vcs_info = get_info()\n\nif __name__ == '__main__':\n # Write versionfile for nightly snapshots.\n write_versionfile()\n", "path": "networkx/release.py"}]} | 3,116 | 353 |
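The networkx issue in the row above says that `release.py` prepends its own directory to `sys.path`, imports `version`, and then deletes the path entry. A minimal, self-contained sketch of why that pattern shadows a user's own `version.py` follows. It does not use networkx. The temporary directory is a hypothetical stand-in for the networkx package, and `ORIGIN` is a hypothetical marker attribute. The point is that removing the `sys.path` entry does not undo the import: the module stays cached in `sys.modules` under the top-level name `version`.

```python
import importlib
import os
import sys
import tempfile


def demo_shadowing():
    """Mimic the networkx/release.py pattern: temporarily prepend a package
    directory to sys.path, import a generically named module, then remove
    the path entry again."""
    sys.modules.pop("version", None)  # start the demo from a clean import cache
    with tempfile.TemporaryDirectory() as pkg_dir:
        # Hypothetical stand-in for networkx/version.py.
        with open(os.path.join(pkg_dir, "version.py"), "w") as fh:
            fh.write("ORIGIN = 'package-private version.py'\n")

        sys.path.insert(0, pkg_dir)
        importlib.invalidate_caches()  # make the newly written file visible to finders
        try:
            from version import ORIGIN  # registers sys.modules['version']
        finally:
            del sys.path[0]  # the path entry is removed...

        still_on_path = pkg_dir in sys.path
        # ...but a later plain `import version` is answered from sys.modules,
        # so it returns the package-private module, not the caller's own file.
        import version
        return still_on_path, ORIGIN, version.ORIGIN


if __name__ == "__main__":
    print(demo_shadowing())
    sys.modules.pop("version", None)  # tidy up the cached demo module
```

The sketch only reproduces the failure mode described in the issue. It makes no claim about how networkx ultimately fixed it beyond what the golden diff shows.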

gh_patches_debug_37642 | rasdani/github-patches | git_diff | learningequality__kolibri-12059 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Feature Request: Add --manifest-only option to exportcontent

My understanding is that 0.16 will generate a channel manifest during

`kolibri manage exportcontent`

My request is that you add an option that will not do the export of content but only generate the manifest. This manifest could then be used on another remote install to import from network the same set of content.

```[tasklist]

### Tasks

- [ ] Add --manifest-only command line option to the exportcontent management command

- [ ] If this option is selected, generate the manifest, but skip copying any files (channel database files, and content files)

- [ ] Write tests to confirm the --manifest-only behaviour

```

</issue>

<code>

[start of kolibri/core/content/management/commands/exportcontent.py]

1 import logging