Pearl Model (23M-translate): English to Luganda Translation

This is the Pearl Model (23M-translate-v2), a Transformer-based neural machine translation (NMT) model trained from scratch. It is designed to translate text from English to Luganda and contains approximately 23,363,200 parameters. This version features an increased maximum sequence length of 512, deeper encoder/decoder (6 layers each), wider feed-forward networks (PF_DIM 1024), an increased tokenizer vocabulary size (16000), and the Transformer's original learning rate schedule.
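The stated parameter count can be sanity-checked with a little arithmetic. The sketch below is an illustration rather than the author's code, and it rests on several assumptions that the card does not state: a hidden size of 256, bias terms in every linear layer, fixed sinusoidal positional encoding (so no learned position table), and separate embedding and output matrices. The authoritative values are in config.json.

```python
# Back-of-the-envelope parameter count for an encoder-decoder Transformer.
# ASSUMPTIONS (not stated in the model card): hidden size 256, biased linear
# layers, fixed sinusoidal positions (no learned position table), and
# untied embedding / output-projection matrices.

def linear(n_in, n_out):
    """Weights plus bias of a fully connected layer."""
    return n_in * n_out + n_out

def layer_norm(dim):
    """Scale and shift vectors of a layer normalisation."""
    return 2 * dim

def attention(dim):
    """Query, key, value and output projections (independent of head count)."""
    return 4 * linear(dim, dim)

def feed_forward(dim, pf_dim):
    """Position-wise feed-forward block: dim -> pf_dim -> dim."""
    return linear(dim, pf_dim) + linear(pf_dim, dim)

def count_parameters(vocab_size=16000, hidden_dim=256, n_layers=6, pf_dim=1024):
    encoder_layer = (attention(hidden_dim)
                     + feed_forward(hidden_dim, pf_dim)
                     + 2 * layer_norm(hidden_dim))
    decoder_layer = (2 * attention(hidden_dim)      # self- and cross-attention
                     + feed_forward(hidden_dim, pf_dim)
                     + 3 * layer_norm(hidden_dim))
    embeddings = 2 * vocab_size * hidden_dim        # source and target tables
    output_projection = linear(hidden_dim, vocab_size)
    return embeddings + n_layers * (encoder_layer + decoder_layer) + output_projection

total = count_parameters()
print(f"{total:,}")  # 23,363,200 under the assumptions above
```

Under these assumptions the total matches the figure quoted above, which suggests (but does not prove) that the model uses a 256-dimensional hidden state.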

Model Overview

The Pearl Model is an encoder-decoder Transformer architecture implemented entirely in PyTorch.

- Model Type: Sequence-to-Sequence Transformer

- Source Language: English ('english')

- Target Language: Luganda ('luganda')

- Framework: PyTorch

- Parameters: ~23,363,200

- Training: From scratch, using the learning rate schedule from "Attention Is All You Need".

- Max Sequence Length: 512 tokens

- Tokenizer Vocabulary Size: 16000 for both source and target.

Detailed hyperparameters, architectural specifics, and tokenizer configurations can be found in the accompanying config.json file.
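The learning rate schedule from "Attention Is All You Need" warms up linearly and then decays with the inverse square root of the step number: lr = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5). A minimal sketch follows. The values d_model=256 and warmup_steps=4000 are assumptions for illustration (4000 is the paper's default), not settings confirmed by this card.

```python
def transformer_lr(step, d_model=256, warmup_steps=4000):
    """Learning rate at a given optimiser step.

    d_model and warmup_steps are ASSUMED values; check config.json or the
    training script for the ones actually used.
    """
    step = max(step, 1)  # avoid raising zero to a negative power at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

# The rate rises linearly during warm-up, peaks at step == warmup_steps,
# and decays proportionally to 1 / sqrt(step) afterwards.
for s in (1, 1000, 4000, 16000):
    print(s, transformer_lr(s))
```

In PyTorch, a function like this is commonly passed to torch.optim.lr_scheduler.LambdaLR with the optimizer's base learning rate set to 1.0, so that the function's return value becomes the effective rate.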

Intended Use

This model is intended for:

- Translating general domain text from English to Luganda, including longer sentences up to 512 tokens.

- Research purposes in low-resource machine translation, Transformer architectures, and NLP for African languages.

- Serving as a baseline for future improvements in English-Luganda translation.

- Serving as an educational tool for understanding how to build and train NMT models from scratch.

Out-of-scope:

- Translation of highly specialized or technical jargon not present in the training data.

- High-stakes applications requiring perfect fluency or nuance without further fine-tuning and rigorous evaluation.

- Translation into English (this model is unidirectional: English to Luganda).

Training Details

Dataset

The model was trained exclusively on the kambale/luganda-english-parallel-corpus dataset available on the Hugging Face Hub.

- Dataset ID: kambale/luganda-english-parallel-corpus

- Training Epochs Attempted: 50 (Early stopping based on validation loss was used)

- Tokenizers: Byte-Pair Encoding (BPE) tokenizers (vocab size: 16000) were trained from scratch.

  - English Tokenizer: english_tokenizer_v2.json

  - Luganda Tokenizer: luganda_tokenizer_v2.json
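Early stopping on validation loss, as mentioned in the list above, can be sketched as follows. This is a generic illustration, not the training script's implementation: the class name, the patience value, and the loss values are all hypothetical.

```python
class EarlyStopping:
    """Stop training once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # ASSUMED value, not taken from the card
        self.min_delta = min_delta    # minimum decrease that counts as progress
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0       # in practice, also save a checkpoint here
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses, purely for illustration.
made_up_losses = [3.0, 2.5, 2.2, 2.3, 2.25, 2.4, 2.1]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(made_up_losses, start=1):
    if stopper.step(loss):
        stopped_at = epoch
        break

print(stopped_at, stopper.best)
```

With this scheme the number of epochs actually run can be smaller than the number attempted, and the checkpoint kept is the one with the best validation loss.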

Compute Infrastructure

- Hardware: 1x NVIDIA A100 40GB (example)

- Training Time: ~3 hours for 35 epochs

Performance & Evaluation

- Best Validation Loss: 1.803

- Test Set BLEU Score: 39.76

Example Validation Set Translations (from training run):

(Note: BLEU scores are calculated using SacreBLEU on detokenized text with force=True.)

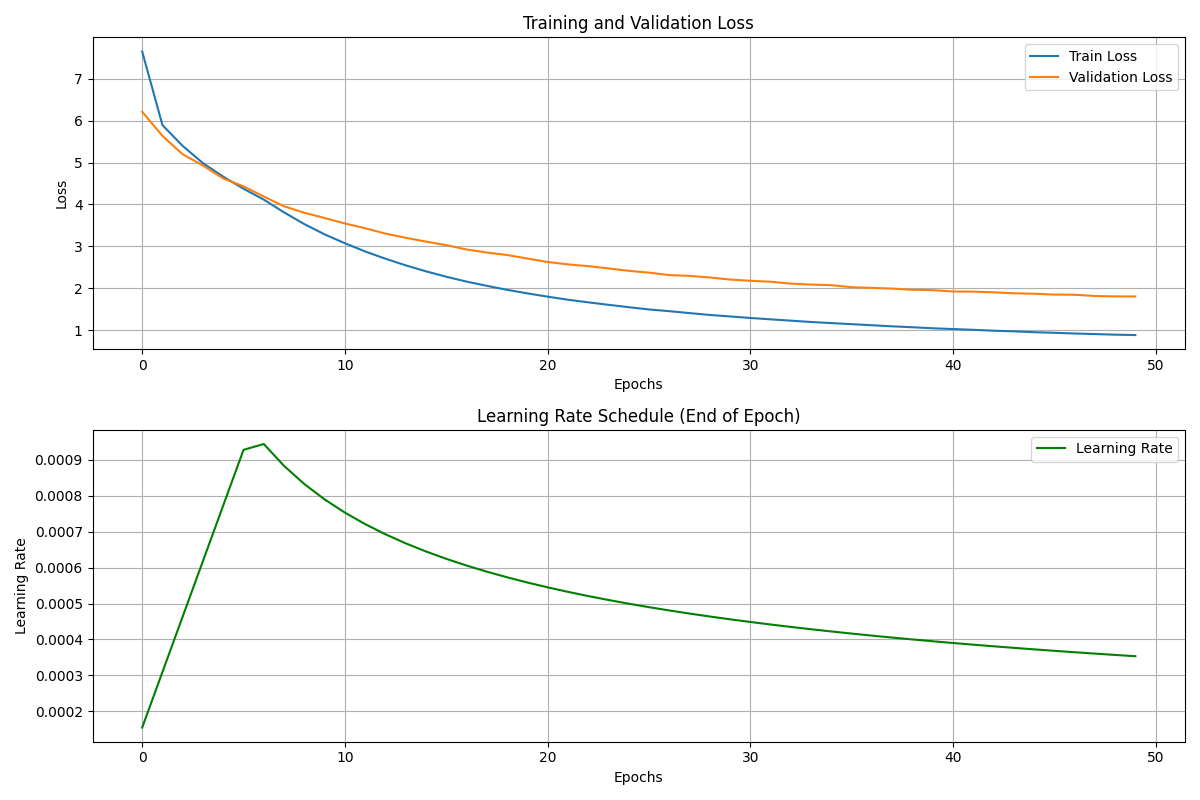

Training & Learning Rate Curves

How to Use

This model is provided with its PyTorch state dictionary, tokenizer files, and configuration. Manual loading is required.

Manual Loading (Conceptual Example)

- Define Model Architecture: Use the Python classes (Seq2SeqTransformer, Encoder, Decoder, etc.) from the training script.

- Load Tokenizers & Config:

    from tokenizers import Tokenizer
    import json
    import torch
    # from your_model_script import Seq2SeqTransformer, Encoder, Decoder, PositionalEncoding, ... (ensure these are defined)

    # Load config
    with open("config.json", 'r') as f:
        config = json.load(f)

    # Load tokenizers
    src_tokenizer = Tokenizer.from_file(config["src_tokenizer_file"])
    trg_tokenizer = Tokenizer.from_file(config["trg_tokenizer_file"])

    # Model parameters from config
    params = config["model_parameters"]
    special_tokens = config["special_token_ids"]
    DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # Rebuild the encoder and decoder with the same hyperparameters used in training
    enc = Encoder(params["input_dim_vocab_size_src"], params["hidden_dim"],
                  params["encoder_layers"], params["encoder_heads"],
                  params["encoder_pf_dim"], params["encoder_dropout"],
                  DEVICE, params["max_seq_length"])
    dec = Decoder(params["output_dim_vocab_size_trg"], params["hidden_dim"],
                  params["decoder_layers"], params["decoder_heads"],
                  params["decoder_pf_dim"], params["decoder_dropout"],
                  DEVICE, params["max_seq_length"])

    # The same pad token ID is passed for both source and target
    model = Seq2SeqTransformer(enc, dec, special_tokens["pad_token_id"],
                               special_tokens["pad_token_id"], DEVICE)

    # Load the trained weights and switch to inference mode
    model.load_state_dict(torch.load(config["pytorch_model_path"], map_location=DEVICE))
    model.to(DEVICE)
    model.eval()

- Inference: Use a translate_sentence function similar to the one in the training notebook.
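The translate_sentence helper lives in the training notebook and is not reproduced here. As a rough sketch of the greedy decoding loop such a helper might use, the example below decodes against a generic step_fn callable. The function name, its signature, and the toy scoring function are hypothetical; in practice step_fn would wrap a forward pass of the loaded model, and the start and end token IDs would come from the configuration.

```python
def greedy_decode(step_fn, sos_id, eos_id, max_len):
    """Greedy decoding: repeatedly append the highest-scoring next token.

    step_fn(prefix) must return one score per vocabulary entry for the
    token that follows `prefix` (a list of token IDs).
    """
    tokens = [sos_id]
    for _ in range(max_len - 1):
        scores = step_fn(tokens)
        next_id = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_id)
        if next_id == eos_id:   # stop as soon as end-of-sequence is produced
            break
    return tokens


def toy_step(prefix):
    """Stand-in for the model: favours token 3, then token 4, then <eos> (ID 1)."""
    script = {1: 3, 2: 4}                      # prefix length -> favoured token
    favoured = script.get(len(prefix), 1)
    return [1.0 if i == favoured else 0.0 for i in range(5)]


result = greedy_decode(toy_step, sos_id=0, eos_id=1, max_len=512)
print(result)  # [0, 3, 4, 1]
```

The resulting token IDs would then be converted back to text with the target tokenizer's decode method.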

Limitations and Bias

- Low-Resource Pair: Luganda is a low-resource language. While the kambale/luganda-english-parallel-corpus is a valuable asset, the overall volume of parallel data is still limited compared to high-resource language pairs. This can lead to:

  - Difficulties in handling out-of-vocabulary (OOV) words or rare phrases.

  - Potential for translations to be less fluent or accurate for complex sentences or nuanced expressions.

  - The model might reflect biases present in the training data.

- Data Source Bias: The characteristics and biases of the kambale/luganda-english-parallel-corpus (e.g., domain, style, demographic representation) will be reflected in the model's translations.

- Generalization: The model may not generalize well to domains significantly different from the training data.

- No Back-translation or Advanced Techniques: This model was trained directly on the parallel corpus without more advanced techniques like back-translation or pre-training on monolingual data, which could further improve performance.

- Greedy Decoding for Examples: Performance metrics (BLEU) are typically calculated using beam search. The conceptual usage examples might rely on greedy decoding, which can be suboptimal.
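The difference between greedy decoding and beam search noted in the last item can be shown on a toy distribution. The sketch below is illustrative only: the function names, the toy probabilities, and the simple length normalization (dividing the summed log-probability by length^alpha, with an assumed alpha of 0.7) are not taken from the training code.

```python
import math

NEG_INF = float("-inf")

def beam_search(step_fn, sos_id, eos_id, beam_size=3, max_len=20, alpha=0.7):
    """Beam search over step_fn(prefix) -> list of log-probabilities."""
    beams = [([sos_id], 0.0)]            # (token IDs, summed log-probability)
    finished = []
    for _ in range(max_len - 1):
        candidates = []
        for tokens, score in beams:
            for tok, log_p in enumerate(step_fn(tokens)):
                candidates.append((tokens + [tok], score + log_p))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            # Hypotheses ending in <eos> leave the beam; the rest keep expanding.
            (finished if tokens[-1] == eos_id else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)               # hypotheses cut off at max_len
    # Length normalization: divide the log-probability by length ** alpha.
    return max(finished, key=lambda h: h[1] / len(h[0]) ** alpha)[0]


def toy_step(prefix):
    """Toy model over the vocabulary [<sos>=0, <eos>=1, a=2, b=3]."""
    if len(prefix) == 1:
        probs = [0.0, 0.0, 0.6, 0.4]     # "a" looks best locally
    elif len(prefix) == 2:
        probs = [0.0, 0.0, 0.5, 0.5] if prefix[-1] == 2 else [0.0, 0.0, 0.95, 0.05]
    else:
        probs = [0.0, 1.0, 0.0, 0.0]     # always end after two tokens
    return [math.log(p) if p > 0 else NEG_INF for p in probs]


greedy = beam_search(toy_step, sos_id=0, eos_id=1, beam_size=1)  # beam of 1 is greedy
beam = beam_search(toy_step, sos_id=0, eos_id=1, beam_size=2)
print(greedy, beam)  # [0, 2, 2, 1] [0, 3, 2, 1]
```

Greedy decoding commits to "a" (probability 0.6) and ends with a sequence of probability 0.30, while a beam of two keeps "b" alive and finds the sequence with probability 0.38.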

Ethical Considerations

- Bias Amplification: Machine translation models can inadvertently perpetuate or even amplify societal biases present in the training data. Users should be aware of this potential when using the translations.

- Misinformation: As with any generative model, there's a potential for misuse in generating misleading or incorrect information.

- Cultural Nuance: Automated translation may miss critical cultural nuances, potentially leading to misinterpretations. Human oversight is recommended for sensitive or important translations.

- Attribution: The training data is sourced from kambale/luganda-english-parallel-corpus. Please refer to the dataset card for its specific sourcing and licensing.

Future Work & Potential Improvements

- Fine-tuning on domain-specific data.

- Training with a larger parallel corpus if available.

- Incorporating monolingual Luganda data through techniques like back-translation.

- Experimenting with larger model architectures or pre-trained multilingual models as a base.

- Implementing more sophisticated decoding strategies (e.g., beam search with length normalization).

- Conducting a thorough human evaluation of translation quality.

Disclaimer

This model is provided "as-is" without warranty of any kind, express or implied. It was trained as part of an educational demonstration and may have limitations in accuracy, fluency, and robustness. Users should validate its suitability for their specific applications.

