🚗 TwinCar: Fine-Grained Car Classification with Visual Explainability

TwinCar is an advanced deep learning pipeline designed for precise car make/model recognition using the Stanford Cars 196 dataset.

Features: Transfer learning, robust augmentation, Grad-CAM++ explainability, metric-rich evaluation, and easy deployment.

Developed at Brainster Data Science Academy, 2025.

📚 Table of Contents

- Project Overview

- Theoretical Foundation

- Dataset & Preprocessing

- Model Architecture

- Training & Evaluation Pipeline

- Model Interpretation & Explainability

- Key Visualizations

- Results & Metrics

- Quickstart: Inference & Demo

- Resources & References

Project Overview

TwinCar tackles the problem of fine-grained visual classification—distinguishing between 196 nearly-identical car models in real-world photos.

This requires:

- High-capacity neural networks for subtle visual cues

- Strategies to mitigate class imbalance and overfitting

- Interpretable predictions for trust and debugging

Our pipeline combines transfer learning, augmentation, and class-balanced sampling with explainable predictions and reproducible logging.

Theoretical Foundation

Fine-Grained Recognition

Unlike broad classification, fine-grained tasks (like make/model/year) challenge models to:

- Discriminate subtle features (e.g., headlight shapes, grille details)

- Ignore irrelevant background/context

- Handle many classes with potential class imbalance

Transfer learning is crucial—starting from a pretrained ResNet50 leverages generic visual features, while the custom head specializes in fine detail.

Model Explainability

Explainability is not optional in modern AI:

- Grad-CAM++ highlights image regions that drive predictions.

- This builds trust, helps spot spurious correlations, and aids model debugging.

Our Grad-CAM++ overlays reveal _what the network “sees” as important_—vital for deployment in domains like traffic, insurance, or autonomous vehicles.

Dataset & Preprocessing

- Source: Stanford Cars 196

- Details: 16,185 labeled images, 196 classes (make/model/year)

- Train/Val Split: Stratified, 10% for validation
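A stratified split holds out the same fraction of every class, so rare car models still appear in validation. The sketch below is a minimal, standard-library illustration of that idea; the function name `stratified_split`, the seed, and the toy labels are assumptions for illustration, not the project's actual code.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_fraction=0.1, seed=42):
    """Return (train_indices, val_indices) with about val_fraction of each class held out."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train_indices, val_indices = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Hold out at least one image per class so every class appears in validation.
        n_val = max(1, round(len(indices) * val_fraction))
        val_indices.extend(indices[:n_val])
        train_indices.extend(indices[n_val:])
    return sorted(train_indices), sorted(val_indices)


# Toy example: 3 classes with 20 images each (the real dataset has 196 classes).
toy_labels = [c for c in range(3) for _ in range(20)]
train_idx, val_idx = stratified_split(toy_labels)
print(len(train_idx), len(val_idx))
```

With 20 images per class and a 10% fraction, two images per class land in validation.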

Preprocessing pipeline:

- Data integrity: remove missing/corrupt files

- Augmentations:

  - Random resized crop

  - Horizontal flip, rotation

  - Color jitter, blur

- Normalization: ImageNet statistics (mean/std)

- Class balancing: Weighted sampling during training
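One common way to implement weighted sampling is to give each image a weight inversely proportional to its class size. The sketch below computes such weights with the standard library only; `inverse_frequency_weights` is a hypothetical helper, and whether TwinCar uses exactly this weighting is an assumption. In PyTorch, a list like this can be passed to `torch.utils.data.WeightedRandomSampler`.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """One sampling weight per image: 1 / (number of images in that image's class)."""
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]


# Toy labels: class 0 has 6 images, class 1 has 2 images.
toy_labels = [0] * 6 + [1] * 2
weights = inverse_frequency_weights(toy_labels)

# The total weight per class is equal, so each class is drawn equally often in expectation.
total_per_class = {
    c: sum(w for w, l in zip(weights, toy_labels) if l == c) for c in set(toy_labels)
}
print(total_per_class)
```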

Model Architecture

ResNet50 Backbone (with Custom Classifier Head)

- Frozen layers: Early layers (generic feature extraction)

- Trainable layers: Last two ResNet blocks + custom head

- Classifier Head:

  - Linear → ReLU → Dropout → Linear (196 logits)

- Optimization:

  - Adam with layer-wise learning rates

  - Cross-Entropy Loss + Label Smoothing (improves calibration)

  - Early Stopping on macro F1

Schematic: Input Image → [Augmentation] → ResNet50 (layers 1–2 frozen) → [Trainable layers 3–4] → Custom Classifier Head (2-layer MLP) → Softmax (196-way)

Training & Evaluation Pipeline

- Epochs: Up to 25 (early stopping by macro F1)

- Batch Size: 32, with class weighting

- Validation: Macro/micro accuracy, F1, precision, recall, confusion matrix, Top-3/5 accuracy

- Logging: All metrics + curve plots (CSV and PNG)

- Artifacts:

  - Model weights (twin_car_best_model_v2.pth)

  - Class mapping (class_mapping.json)

  - Evaluation plots (curves, confusion matrix, Grad-CAM, etc.)
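Early stopping on macro F1 can be sketched as a small helper that tracks the best score and counts epochs without improvement. The class name `EarlyStopping`, the patience of 3, and the F1 values below are made up for illustration; the project's actual patience setting is not stated here.

```python
class EarlyStopping:
    """Stop training when the monitored score has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_score = float("-inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, score):
        """Record this epoch's score; return True if training should stop."""
        if score > self.best_score + self.min_delta:
            self.best_score = score
            self.best_epoch = epoch
            self.bad_epochs = 0  # a real loop would save the checkpoint here
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation macro-F1 values, for illustration only.
fake_macro_f1 = [0.40, 0.55, 0.63, 0.62, 0.64, 0.64, 0.63, 0.64]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, score in enumerate(fake_macro_f1, start=1):
    if stopper.step(epoch, score):
        stopped_at = epoch
        break
print(stopper.best_epoch, stopper.best_score, stopped_at)
```

Because ties do not count as improvement, the best checkpoint here stays at epoch 5 and training stops at epoch 8.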

Model Interpretation & Explainability

Grad-CAM++: See What the Model Sees

Theory:

Grad-CAM++ generates class-discriminative heatmaps highlighting the image regions most important for a model’s decision.
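For reference, the heatmap computation can be written as follows, using the notation of the Grad-CAM++ paper (Chattopadhay et al., 2018): $A^k$ is the $k$-th feature map of the chosen convolutional layer and $Y^c$ is the score for class $c$. This is a summary of the published formulation, not a description of this project's code.

$$
L^c_{ij} = \mathrm{ReLU}\Big(\sum_k w_k^c \, A^k_{ij}\Big),
\qquad
w_k^c = \sum_i \sum_j \alpha_{ij}^{kc} \, \mathrm{ReLU}\Big(\frac{\partial Y^c}{\partial A^k_{ij}}\Big)
$$

$$
\alpha_{ij}^{kc} =
\frac{\dfrac{\partial^2 Y^c}{(\partial A^k_{ij})^2}}
{2\,\dfrac{\partial^2 Y^c}{(\partial A^k_{ij})^2} + \sum_a \sum_b A^k_{ab}\,\dfrac{\partial^3 Y^c}{(\partial A^k_{ij})^3}}
$$

Plain Grad-CAM instead averages the gradients uniformly over all positions $(i, j)$; the pixel-wise weights $\alpha_{ij}^{kc}$ are what let Grad-CAM++ localize objects more completely.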

This allows us to:

- Verify if the model focuses on the car, not the background

- Understand failure cases (misclassifications)

- Communicate model trust to stakeholders

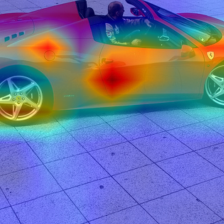

Example:

The above Grad-CAM++ overlay shows the model focusing on distinctive car regions (e.g., front grille, headlights) when classifying.

Key Visualizations

Confusion Matrix

Visualizes prediction accuracy for each class and reveals common confusion points.

Training & Validation Loss/Accuracy

Tracks the model’s learning progress and helps detect overfitting or underfitting.

Precision/Recall by Epoch

Shows how precision and recall (macro and weighted) evolve during training.
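The difference between macro and weighted averaging is easiest to see on a small imbalanced example. The sketch below is a standard-library illustration with made-up labels; `precision_recall` is a hypothetical helper, not the project's evaluation code. Macro averaging treats every class equally, while weighted averaging scales each class by its number of true samples.

```python
from collections import Counter


def precision_recall(y_true, y_pred):
    """Return macro- and support-weighted precision/recall over the classes in y_true."""
    classes = sorted(set(y_true))
    support = Counter(y_true)
    predicted = Counter(y_pred)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    # Per-class precision = TP / predicted-as-class; recall = TP / actual-in-class.
    precision = {c: correct[c] / predicted[c] if predicted[c] else 0.0 for c in classes}
    recall = {c: correct[c] / support[c] for c in classes}

    n = len(y_true)
    return {
        "precision_macro": sum(precision.values()) / len(classes),
        "recall_macro": sum(recall.values()) / len(classes),
        "precision_weighted": sum(precision[c] * support[c] for c in classes) / n,
        "recall_weighted": sum(recall[c] * support[c] for c in classes) / n,
    }


# Toy, imbalanced labels: class 0 is common, class 1 is rare.
y_true = [0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 0]
metrics = precision_recall(y_true, y_pred)
print(metrics)
```

Here the rare class pulls the macro scores down to about 0.67, while the weighted scores stay at 0.75. Weighted recall always equals plain accuracy.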

Top-3/5 Accuracy

Indicates how often the true label is among the top-3 or top-5 predictions—useful for real-world ranking.
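As a minimal sketch of how top-k accuracy is computed, the helper below ranks each sample's class scores and checks whether the true label is among the k highest. The function name and the toy scores are illustrative assumptions.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores in this row.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Toy scores for 3 samples over 4 classes (the real model outputs 196 logits).
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1: ranked 1st
    [0.5, 0.1, 0.3, 0.1],  # true class 2: ranked 2nd
    [0.4, 0.3, 0.2, 0.1],  # true class 3: ranked 4th
]
labels = [1, 2, 3]
top1 = top_k_accuracy(scores, labels, k=1)
top3 = top_k_accuracy(scores, labels, k=3)
print(top1, top3)
```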

Another Grad-CAM++ Example

Demonstrates model interpretability—heatmaps show focus on meaningful car regions.

Results & Metrics

| Metric | Value |
|---|---|
| Train Loss | 0.98 |
| Train Accuracy | 99.7% |
| Val Loss | 1.72 |
| Val Accuracy | 79.1% |
| Val Precision (macro) | 82.4% |
| Val Recall (macro) | 79.1% |
| Val F1 (macro) | 78.5% |
| Cohen’s Kappa | 0.79 |
| MCC | 0.79 |
| Top-3 Accuracy | 90.9% |
| Top-5 Accuracy | 93.4% |

Interpretation:

- The model reaches 79.1% validation accuracy (macro F1 78.5%) on a challenging 196-class fine-grained task; the gap to the 99.7% training accuracy indicates substantial overfitting.

- High Top-3/5 accuracy demonstrates robust ranking, even when the top prediction isn’t always correct.

- Grad-CAM++ shows reliable model focus on class-discriminative features.
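Cohen's kappa and MCC both correct raw accuracy for agreement expected by chance. The sketch below computes them from label lists using the standard multiclass formulas; `kappa_and_mcc` is a hypothetical helper and the toy labels are made up, so the numbers do not reproduce the table above.

```python
from collections import Counter
from math import sqrt


def kappa_and_mcc(y_true, y_pred):
    """Cohen's kappa and the multiclass Matthews correlation coefficient."""
    n = len(y_true)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    classes = set(true_counts) | set(pred_counts)

    # Sum over classes of (times the class occurs) * (times the class is predicted).
    agreement = sum(true_counts[c] * pred_counts[c] for c in classes)

    # Kappa: observed accuracy corrected for the accuracy expected by chance.
    p_observed = correct / n
    p_expected = agreement / n**2
    kappa = (p_observed - p_expected) / (1 - p_expected)

    # Multiclass MCC: same numerator, normalized by the spread of each marginal.
    true_sq = sum(v**2 for v in true_counts.values())
    pred_sq = sum(v**2 for v in pred_counts.values())
    denominator = sqrt((n**2 - pred_sq) * (n**2 - true_sq))
    mcc = (correct * n - agreement) / denominator if denominator else 0.0
    return kappa, mcc


y_true = [0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 0]
kappa, mcc = kappa_and_mcc(y_true, y_pred)
print(round(kappa, 3), round(mcc, 3))
```

When the predicted class distribution matches the true one, as in this toy example, the two scores coincide. That is consistent with the nearly identical kappa and MCC values reported above.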

🚀 Try the Live Demo

📚 Resources & References

- Dataset: Stanford Cars 196 on Hugging Face

- Model Weights: twin_car_best_model_v2.pth

- Class Mapping: class_mapping.json

- Grad-CAM++: pytorch-grad-cam

- Project License: Apache-2.0

Quickstart: Inference & Demo

1. Install Dependencies

```bash
pip install -r requirements.txt
# The pytorch-grad-cam library is published on PyPI as "grad-cam"
pip install grad-cam gradio
```

2. Run Inference

```python
import json

import torch
from PIL import Image
from torchvision import models, transforms

# Rebuild the architecture: ResNet50 backbone with the custom 2-layer head
model = models.resnet50(weights=None)
model.fc = torch.nn.Sequential(
    torch.nn.Linear(model.fc.in_features, 512),
    torch.nn.ReLU(),
    torch.nn.Dropout(0.2),
    torch.nn.Linear(512, 196),
)
model.load_state_dict(torch.load("twin_car_best_model_v2.pth", map_location="cpu"))
model.eval()

# Preprocess: resize and normalize with ImageNet statistics
transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
])

# Convert to RGB so grayscale or RGBA images also yield 3 channels
img = Image.open("your_image.jpg").convert("RGB")
input_tensor = transform(img).unsqueeze(0)

# Predict the most likely class index
with torch.no_grad():
    output = model(input_tensor)
pred = output.argmax(1).item()

# Map the class index to its name
with open("class_mapping.json") as f:
    class_map = json.load(f)
print("Predicted class:", class_map[str(pred)])
```

Model tree for kikogazda/TwinCar-196-v2: base model timm/resnet50.a1_in1k