Original model: https://huggingface.co/ByteDance-Seed/BAGEL-7B-MoT

`ema-FP8.safetensors` contains the float8_e4m3fn weights of that model.
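For reference, float8_e4m3fn stores each weight in one byte: 1 sign bit, 4 exponent bits (bias 7) and 3 mantissa bits. It has no infinities ("fn" = finite), so the largest finite value is 448. The pure-Python decoder below illustrates the format. It is a sketch for understanding the dtype, not code used by app-fp8.py. In PyTorch the same dtype is `torch.float8_e4m3fn`.

```python
import math


def decode_e4m3fn(byte: int) -> float:
    """Decode one float8_e4m3fn byte: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits.

    The "fn" variant has no infinities; only S.1111.111 encodes NaN.
    """
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0x0F and mantissa == 0x07:
        return math.nan
    if exponent == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias.
        return sign * (mantissa / 8) * 2.0 ** (1 - 7)
    # Normal: implicit leading 1.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


print(decode_e4m3fn(0x7E))  # largest finite value: 448.0
```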

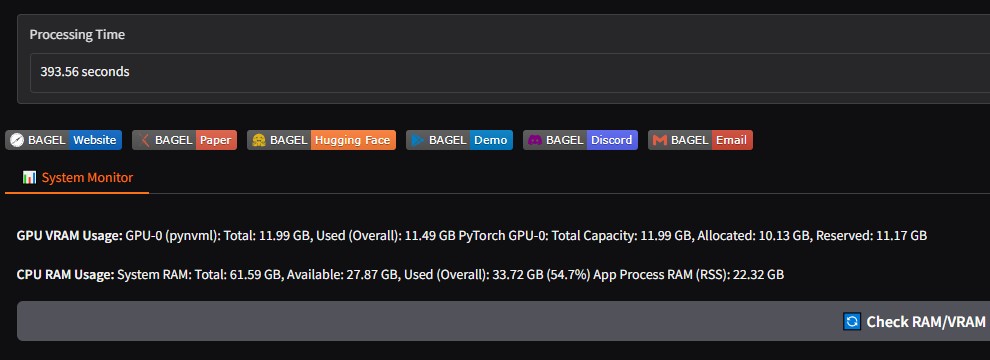

Benchmark spec: RTX 4090 (24 GB VRAM) + 60 GB RAM

Default settings, 25 timesteps.

| Feature | Time (s) | GPU VRAM usage | CPU RAM usage |
|---|---|---|---|
| 📝 Text to Image | 128.90 | 16.18 GB | 14.22 GB |
| 🖌️ Image Edit | 138.67 | 15.08 GB | 14.21 GB |
| 🖼️ Image Understanding | 102.68 | 15.08 GB | 13.66 GB |
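The install steps add `pynvml` for checking VRAM stats. Below is a minimal sketch of reading current GPU memory use with it. How app-fp8.py itself collects the numbers in the table is not shown here, and converting to GiB (1024³ bytes) is an assumption, since the table only says GB. Note that NVML reports device-wide usage, including other processes.

```python
def bytes_to_gib(num_bytes: int) -> float:
    """Convert a byte count to GiB (1024**3 bytes)."""
    return num_bytes / 1024**3


def gpu_memory_used_gib(device_index: int = 0) -> float:
    """Return memory currently used on one NVIDIA GPU, in GiB, via NVML."""
    import pynvml  # imported lazily so bytes_to_gib works without pynvml installed

    pynvml.nvmlInit()
    try:
        handle = pynvml.nvmlDeviceGetHandleByIndex(device_index)
        info = pynvml.nvmlDeviceGetMemoryInfo(handle)
        return bytes_to_gib(info.used)
    finally:
        pynvml.nvmlShutdown()
```

Call `gpu_memory_used_gib(0)` while a generation is running to compare with the table.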

Support

Runs with less than 12 GB of GPU memory (RAM + VRAM is about 31 GB in total).

* With 12 GB of VRAM, CPU offloading makes generation about 1.5x slower than on a 24 GB card.
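As a rough worked example, applying the ~1.5x factor to the 24 GB benchmark gives estimated 12 GB timings (about 193 s for text to image). These are extrapolations from the numbers above, not measurements. Treating "about 31 GB" as a hard minimum for RAM + VRAM is also an assumption, and the helper names are illustrative.

```python
# Rough 12 GB estimates derived from the 24 GB benchmark table (not measured).
BENCHMARK_24GB_SECONDS = {
    "Text to Image": 128.90,
    "Image Edit": 138.67,
    "Image Understanding": 102.68,
}
SLOWDOWN_12GB = 1.5  # "about 1.5x slower" per this README


def estimate_12gb_seconds(seconds_24gb: float, slowdown: float = SLOWDOWN_12GB) -> float:
    """Scale a 24 GB timing by the approximate CPU-offload slowdown."""
    return seconds_24gb * slowdown


def fits_memory_budget(vram_gb: float, ram_gb: float, required_total_gb: float = 31.0) -> bool:
    """Assumed rule of thumb: RAM + VRAM should total at least ~31 GB."""
    return vram_gb + ram_gb >= required_total_gb


for feature, seconds in BENCHMARK_24GB_SECONDS.items():
    print(f"{feature}: ~{estimate_12gb_seconds(seconds):.0f} s on 12 GB (estimate)")
```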

How to Install:

Create a new conda environment:

- `git clone https://github.com/bytedance-seed/BAGEL.git`
- `cd BAGEL`
- `conda create -n bagel python=3.10 -y`
- `conda activate bagel`

Install:

- PyTorch 2.5.1 with CUDA 12.4:
  `pip install torch==2.5.1 torchvision==0.20.1 --index-url https://download.pytorch.org/whl/cu124`
- flash-attention from a prebuilt wheel:
  `pip install flash_attn-2.7.0.post1+cu12torch2.5cxx11abiFALSE-cp310-cp310-linux_x86_64.whl`

More wheels: https://github.com/Dao-AILab/flash-attention/releases. The flash_attn wheel must match your Python, PyTorch, and CUDA versions.

- Edit `requirements.txt` to comment out the flash_attn pin (change `flash_attn==2.5.8` to `#flash_attn==2.5.8`), then run `pip install -r requirements.txt`.
- `pip install gradio pynvml` (pynvml is used to check VRAM stats.)
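Because a mismatched flash_attn wheel is an easy mistake, here is a small sketch that parses the tags in a wheel filename like the one above and compares them with your environment. The filename pattern is inferred from that single example and may not cover every release name. `parse_wheel_name`, `wheel_mismatches` and `check_against_current_env` are hypothetical helpers, not part of flash-attention, and `torch._C._GLIBCXX_USE_CXX11_ABI` is a private PyTorch attribute.

```python
import re
import sys

# Pattern inferred from names like
# flash_attn-2.7.0.post1+cu12torch2.5cxx11abiFALSE-cp310-cp310-linux_x86_64.whl
WHEEL_RE = re.compile(
    r"^flash_attn-(?P<version>[^+]+)\+cu(?P<cuda>\d+)torch(?P<torch>\d+\.\d+)"
    r"cxx11abi(?P<abi>TRUE|FALSE)-(?P<python>cp\d+)-cp\d+-(?P<platform>.+)\.whl$"
)


def parse_wheel_name(filename: str) -> dict:
    """Split a flash_attn wheel filename into its build tags."""
    match = WHEEL_RE.match(filename)
    if match is None:
        raise ValueError(f"unrecognized flash_attn wheel name: {filename}")
    return match.groupdict()


def wheel_mismatches(filename, python_version, torch_version, cuda_version, cxx11_abi):
    """Return human-readable mismatches between wheel tags and an environment (empty = OK)."""
    tags = parse_wheel_name(filename)
    problems = []
    py_tag = f"cp{python_version[0]}{python_version[1]}"
    if tags["python"] != py_tag:
        problems.append(f"Python: wheel {tags['python']}, env {py_tag}")
    # Compare PyTorch major.minor only, ignoring local suffixes such as "+cu124".
    torch_mm = ".".join(torch_version.split("+")[0].split(".")[:2])
    if tags["torch"] != torch_mm:
        problems.append(f"PyTorch: wheel {tags['torch']}, env {torch_mm}")
    # The wheel tag carries only the CUDA major version (e.g. "cu12").
    if tags["cuda"] != cuda_version.split(".")[0]:
        problems.append(f"CUDA: wheel cu{tags['cuda']}, env {cuda_version or 'none'}")
    if (tags["abi"] == "TRUE") != cxx11_abi:
        problems.append(f"C++11 ABI: wheel {tags['abi']}, env {cxx11_abi}")
    return problems


def check_against_current_env(filename: str) -> list:
    """Read versions from the installed PyTorch (requires torch)."""
    import torch

    return wheel_mismatches(
        filename,
        sys.version_info[:2],
        torch.__version__,
        torch.version.cuda or "",  # None on CPU-only builds
        bool(torch._C._GLIBCXX_USE_CXX11_ABI),
    )
```

An empty list from `check_against_current_env("flash_attn-...whl")` means the tags line up with the active environment.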

Models & Settings:

- Download https://huggingface.co/ByteDance-Seed/BAGEL-7B-MoT (everything except `ema.safetensors`) and `ema-FP8.safetensors`, and arrange them like this:

folders
├── BAGEL
│   └── app-fp8.py
└── BAGEL-7B-MoT
    └── ema-FP8.safetensors
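To catch path mistakes before launching, here is a small sketch that checks the layout above. The function name is illustrative. It only checks the files named in this README; the rest of the BAGEL-7B-MoT download (configs, tokenizer files, etc.) is not verified.

```python
from pathlib import Path


def check_layout(root: Path) -> list[str]:
    """Return issues with the folder layout described above (empty list = OK).

    `root` is the parent folder that contains both BAGEL and BAGEL-7B-MoT.
    """
    issues = []
    app = root / "BAGEL" / "app-fp8.py"
    model_dir = root / "BAGEL-7B-MoT"
    if not app.is_file():
        issues.append(f"missing {app}")
    if not (model_dir / "ema-FP8.safetensors").is_file():
        issues.append(f"missing {model_dir / 'ema-FP8.safetensors'}")
    if (model_dir / "ema.safetensors").exists():
        issues.append("ema.safetensors is not needed; ema-FP8.safetensors replaces it")
    return issues
```

For example, `print(check_layout(Path("/root/your_path")))`.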

Open `app-fp8.py` in a text editor (Notepad, VS Code, etc.) and set `model_path` to your own path:

`parser.add_argument("--model_path", type=str, default="/root/your_path/BAGEL-7B-MoT")`
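Since `model_path` is an argparse option, passing `--model_path` on the command line should also work instead of editing the default, assuming app-fp8.py parses `sys.argv` in the usual way (not verified here). The standalone sketch below reproduces only that one argument to show the behaviour; `/data/BAGEL-7B-MoT` is a made-up path.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument("--model_path", type=str, default="/root/your_path/BAGEL-7B-MoT")

# Without the flag, the default from the script is used.
default_args = parser.parse_args([])

# With the flag, the command-line value overrides the default,
# e.g. `python app-fp8.py --model_path /data/BAGEL-7B-MoT`.
override_args = parser.parse_args(["--model_path", "/data/BAGEL-7B-MoT"])

print(default_args.model_path)
print(override_args.model_path)
```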

- Set your hardware limits:

`cpu_mem_for_offload = "16GiB"`

`gpu_mem_per_device = "24GiB"  # default: 24GiB. On a 4090 you can set a lower value such as 16GiB, but it will be slower.`
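These strings use the same "24GiB"-style notation as the `max_memory` argument of Hugging Face Accelerate (for example `infer_auto_device_map`), a dict keyed by GPU index plus `"cpu"`. It is an assumption that app-fp8.py passes them there, so check the script. The sketch below builds such a mapping and converts the sizes to bytes for sanity checks; `size_to_bytes` and `build_max_memory` are hypothetical helpers.

```python
import re

_UNITS = {"KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "KB": 10**3, "MB": 10**6, "GB": 10**9}


def size_to_bytes(size: str) -> int:
    """Parse strings such as "16GiB" or "24GB" into a byte count."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*(KiB|MiB|GiB|KB|MB|GB)\s*", size)
    if match is None:
        raise ValueError(f"unrecognized size: {size!r}")
    value, unit = match.groups()
    return int(float(value) * _UNITS[unit])


def build_max_memory(gpu_mem_per_device: str, cpu_mem_for_offload: str, num_gpus: int = 1) -> dict:
    """Build an Accelerate-style max_memory dict: one entry per GPU index plus "cpu"."""
    max_memory = {i: gpu_mem_per_device for i in range(num_gpus)}
    max_memory["cpu"] = cpu_mem_for_offload
    return max_memory


cpu_mem_for_offload = "16GiB"
gpu_mem_per_device = "24GiB"
max_memory = build_max_memory(gpu_mem_per_device, cpu_mem_for_offload)
print(max_memory)  # {0: '24GiB', 'cpu': '16GiB'}
```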

- Use the GPU more efficiently:

`NUM_ADDITIONAL_LLM_LAYERS_TO_GPU = 5`

Use 5 for 24 GB of VRAM; with 32 GB you can try values above 5. By default 10 LLM layers are kept on the GPU; with this setting a 4090 holds 15.
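One plausible reading of this setting is that the first 10 + N decoder layers stay on the GPU and the rest are offloaded to CPU. The sketch below builds such a per-layer placement in the `{module_name: device}` style used by Accelerate device maps. The `llm.layers.{i}` names, the 28-layer count and the "first layers on GPU" policy are assumptions for illustration, not taken from app-fp8.py.

```python
DEFAULT_LLM_LAYERS_ON_GPU = 10  # default per this README
NUM_ADDITIONAL_LLM_LAYERS_TO_GPU = 5


def layer_placement(num_layers: int,
                    extra_on_gpu: int = NUM_ADDITIONAL_LLM_LAYERS_TO_GPU,
                    base_on_gpu: int = DEFAULT_LLM_LAYERS_ON_GPU) -> dict:
    """Map hypothetical layer names to a device: first layers on GPU 0, the rest on CPU."""
    on_gpu = min(num_layers, base_on_gpu + extra_on_gpu)
    return {
        f"llm.layers.{i}": (0 if i < on_gpu else "cpu")
        for i in range(num_layers)
    }


placement = layer_placement(num_layers=28)  # 28 layers is an assumed count
gpu_layers = sum(1 for device in placement.values() if device == 0)
print(f"{gpu_layers} layers on GPU, {len(placement) - gpu_layers} offloaded to CPU")
```

Raising `NUM_ADDITIONAL_LLM_LAYERS_TO_GPU` moves more layers to the GPU, which is why larger cards can afford a higher value.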

How to Use:

- `cd BAGEL`
- `conda activate bagel`
- `python app-fp8.py`
- Open http://127.0.0.1:7860 in your browser.
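If the page does not load, a quick way to see whether anything is listening on port 7860 is a plain TCP connect. The sketch uses only the standard library; `is_port_open` is a hypothetical helper name.

```python
import socket


def is_port_open(host: str = "127.0.0.1", port: int = 7860, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

Run `print(is_port_open())` while app-fp8.py is running; `False` means the app is not (yet) serving on that port.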