---
title: README
emoji: 👁
colorFrom: purple
colorTo: green
sdk: static
pinned: false
---

Hugging Face Smol Models Research

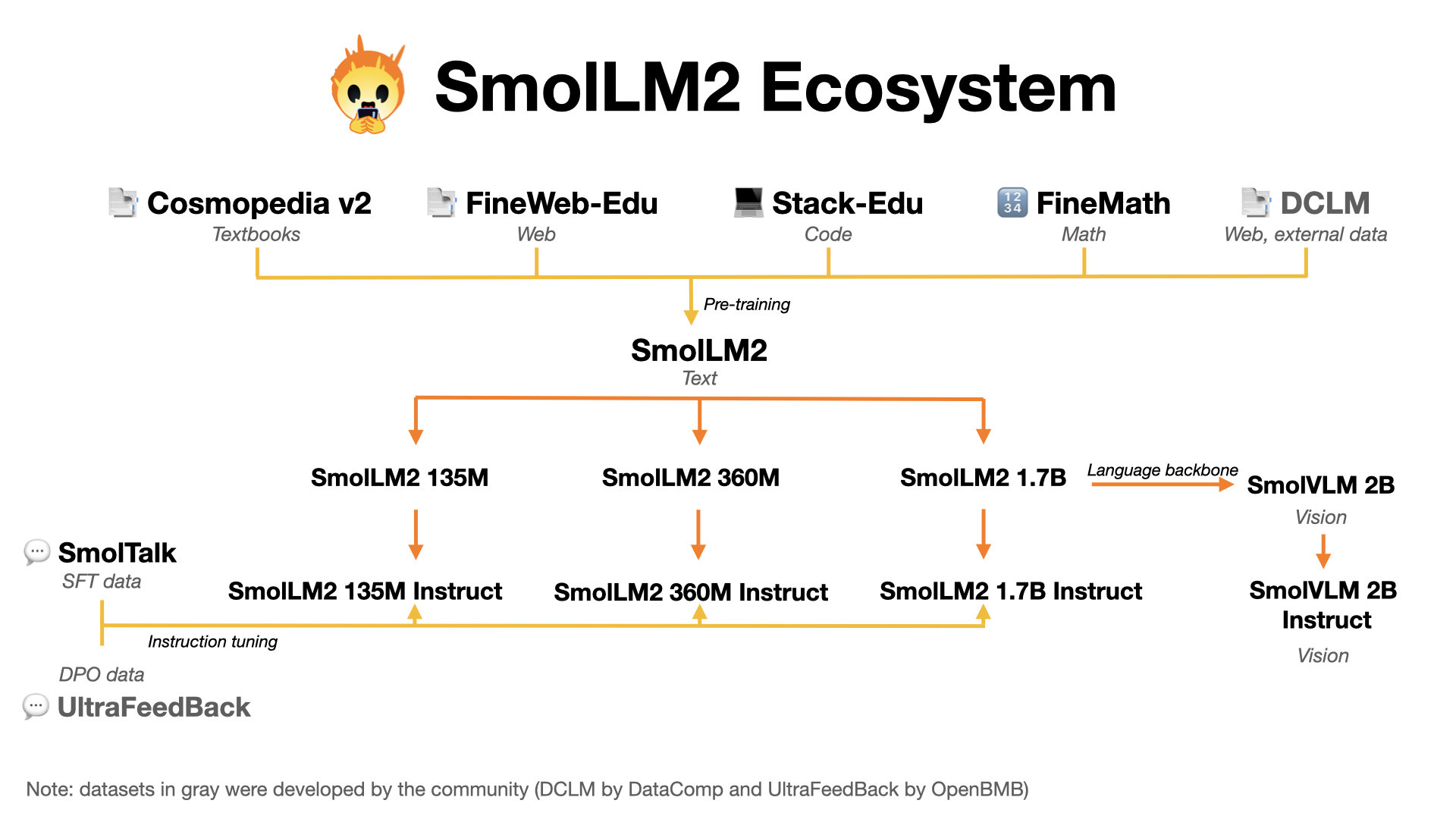

This is the home for smol models (SmolLM & SmolVLM) and high-quality pre-training datasets. We released:

- FineWeb-Edu: a version of the FineWeb dataset filtered for educational content. Paper available here.

- Cosmopedia: the largest open synthetic dataset, with 25B tokens and 30M samples. It contains synthetic textbooks, blog posts, stories, and posts generated by Mixtral. Blog post available here.

- SmolLM-Corpus: the pre-training corpus of SmolLM, consisting of Cosmopedia v0.2, FineWeb-Edu dedup, and Python-Edu. Blog post available here.

- FineMath: the best public math pre-training dataset, with 50B tokens of mathematical and problem-solving data.

- Stack-Edu: the best open code pre-training dataset, with educational code in 15 programming languages.

- SmolLM2 models: a series of strong small models in three sizes: 135M, 360M, and 1.7B parameters.

- SmolVLM2: a family of small video and vision models in three sizes: 2.2B, 500M, and 256M parameters. Blog post available here.

News 🗞️

- HuggingSnap: turn your iPhone into a visual assistant using SmolVLM2. App Store, Source code

- Stack-Edu: 125B tokens of educational code in 15 programming languages. Dataset