Error converting gemma3n

Hi there!

New to this community 🤗

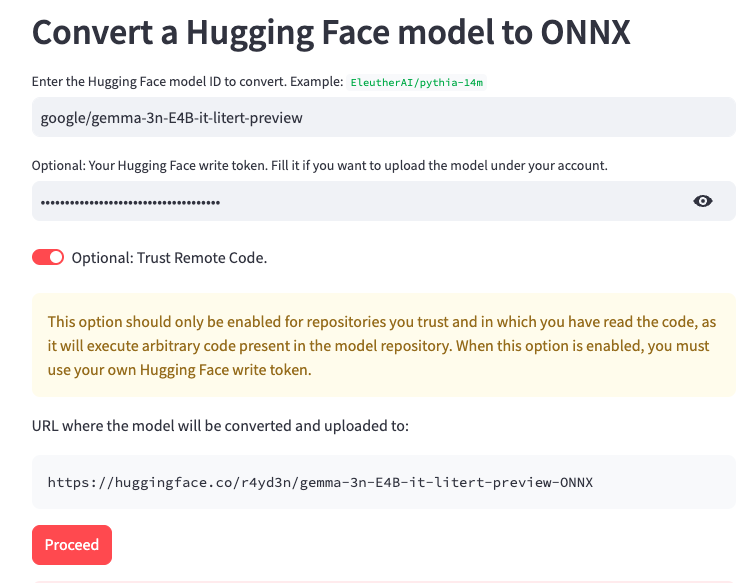

I was trying to use the Convert to ONNX Space (https://huggingface.co/spaces/onnx-community/convert-to-onnx) to convert google/gemma-3n-E4B-it-litert-preview.

It isn't accessible without an access token since it's a gated model, so I provided one after I was granted access.

The converter no longer returns a 403 error, but it throws the following one, which is kind of weird. Any thoughts on what may be happening here would be much appreciated!

Conversion failed:

    Traceback (most recent call last):
      File "/usr/local/lib/python3.10/runpy.py", line 196, in _run_module_as_main
        return _run_code(code, main_globals, None,
      File "/usr/local/lib/python3.10/runpy.py", line 86, in _run_code
        exec(code, run_globals)
      File "/home/user/app/transformers.js/scripts/convert.py", line 456, in <module>
        main()
      File "/home/user/app/transformers.js/scripts/convert.py", line 242, in main
        raise e
      File "/home/user/app/transformers.js/scripts/convert.py", line 235, in main
        tokenizer = AutoTokenizer.from_pretrained(tokenizer_id, **from_pretrained_kwargs)
      File "/usr/local/lib/python3.10/site-packages/transformers/models/auto/tokenization_auto.py", line 963, in from_pretrained
        return tokenizer_class_fast.from_pretrained(pretrained_model_name_or_path, *inputs, **kwargs)
      File "/usr/local/lib/python3.10/site-packages/transformers/tokenization_utils_base.py", line 2036, in from_pretrained
        raise EnvironmentError(
    OSError: Can't load tokenizer for 'google/gemma-3n-E4B-it-litert-preview'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'google/gemma-3n-E4B-it-litert-preview' is the correct path to a directory containing all relevant files for a GemmaTokenizerFast tokenizer.

Hi @r4yd3n, welcome to the community!

As far as I can see, gemma-3n-E4B-it-litert-preview hasn't been converted to Hugging Face's model format (which is why AutoTokenizer can't find the tokenizer files), so it still requires Google's custom script to run.

Give it a bit more time. After it's compatible with the HF format, it will be easier for the ONNX team to implement the conversion and for the Transformers.js team to update the conversion script. Once that's done, we'll be able to update https://huggingface.co/spaces/onnx-community/convert-to-onnx to convert it.
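A quick way to check this before running the converter is to list the repo's files and see whether it has what Transformers expects. Here is a minimal sketch, assuming that a loadable repo has `config.json` plus at least one tokenizer file at its root. The helper names (`looks_transformers_compatible`, `check_hub_repo`) and the file list are hypothetical; only `huggingface_hub.list_repo_files` is a real library call.

```python
from typing import Iterable, Optional

# Assumption: a repo that AutoConfig/AutoTokenizer can load from its root usually
# contains config.json plus at least one of these tokenizer files. This is a
# heuristic for illustration, not an exhaustive list of what Transformers accepts.
TOKENIZER_FILES = {"tokenizer.json", "tokenizer.model", "tokenizer_config.json"}
CONFIG_FILE = "config.json"


def looks_transformers_compatible(filenames: Iterable[str]) -> bool:
    """Return True if the repo root has config.json and at least one tokenizer file."""
    # Keep only root-level files; from_pretrained reads the repo root by default.
    root_files = {name for name in filenames if "/" not in name}
    return CONFIG_FILE in root_files and bool(root_files & TOKENIZER_FILES)


def check_hub_repo(repo_id: str, token: Optional[str] = None) -> bool:
    """List a (possibly gated) Hub repo and apply the heuristic above."""
    # Imported lazily so the pure check above works without huggingface_hub installed.
    from huggingface_hub import list_repo_files

    # For gated repos, pass the same access token you gave the converter.
    files = list_repo_files(repo_id, token=token)
    return looks_transformers_compatible(files)
```

For example, `check_hub_repo("google/gemma-3n-E4B-it-litert-preview", token=os.environ["HF_TOKEN"])` (after `import os`) would list the gated repo using your token. A `False` result would be consistent with the "Can't load tokenizer" error in the first post. Treat it as a heuristic only: a repo can pass this check and still fail conversion for other reasons.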

@Felladrin, thanks so much for the insight; that makes sense now. A big thank you to the community for hosting the convert tool!

I'm seeing a similar issue with Llama, although there are many converted versions anyway.

Any recommendations?

ValueError: Asked to export a llama model for the task text2text-generation, but the Optimum ONNX exporter only supports the tasks feature-extraction, feature-extraction-with-past, text-generation, text-generation-with-past, text-classification for llama. Please use a supported task. Please open an issue at https://github.com/huggingface/optimum/issues if you would like the task text2text-generation to be supported in the ONNX export for llama.

Hi @ddiddi,

Meta Llama hasn't officially released a text2text-generation model, so I guess you were trying to convert a custom model. Could you provide a link to that model?
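For the Llama case, the error message itself lists the tasks the Optimum ONNX exporter accepts for llama. The sketch below, assuming the checkpoint is a standard decoder-only Llama, remaps a `text2text-generation` request to `text-generation-with-past` before calling Optimum's `main_export`. The helper names and the remapping rule are illustrative assumptions, not part of Optimum or the convert-to-onnx Space.

```python
from typing import Set

# Tasks the Optimum ONNX exporter listed as supported for llama, copied from the
# ValueError in this thread. Other Optimum versions may support a different set.
LLAMA_ONNX_TASKS: Set[str] = {
    "feature-extraction",
    "feature-extraction-with-past",
    "text-generation",
    "text-generation-with-past",
    "text-classification",
}


def choose_export_task(requested: str, supported: Set[str]) -> str:
    """Return a task the exporter accepts, remapping seq2seq requests for decoder-only models."""
    if requested in supported:
        return requested
    # text2text-generation targets encoder-decoder (seq2seq) models. Assumption: the
    # checkpoint is a standard decoder-only Llama, so its generation task is
    # text-generation; the "-with-past" variant also exports the KV-cache inputs.
    if requested == "text2text-generation" and "text-generation-with-past" in supported:
        return "text-generation-with-past"
    raise ValueError(f"Task {requested!r} is not in the supported set: {sorted(supported)}")


def export_llama_to_onnx(model_id: str, output_dir: str, requested: str = "text2text-generation") -> None:
    """Export a Llama checkpoint with a task Optimum supports (requires optimum installed)."""
    # Imported lazily so choose_export_task can be used without optimum installed.
    from optimum.exporters.onnx import main_export

    main_export(model_id, output=output_dir, task=choose_export_task(requested, LLAMA_ONNX_TASKS))
```

The command-line equivalent would be `optimum-cli export onnx --model <model_id> --task text-generation-with-past <output_dir>`. If the custom model turns out to be an encoder-decoder architecture rather than Llama, this remapping doesn't apply, which is why a link to the model matters.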