Model Card for FUSION

This is the checkpoint after Stage 1 and Stage1.5 training of FUSION-X-Phi3.5-3B.

Model Details

Model Description

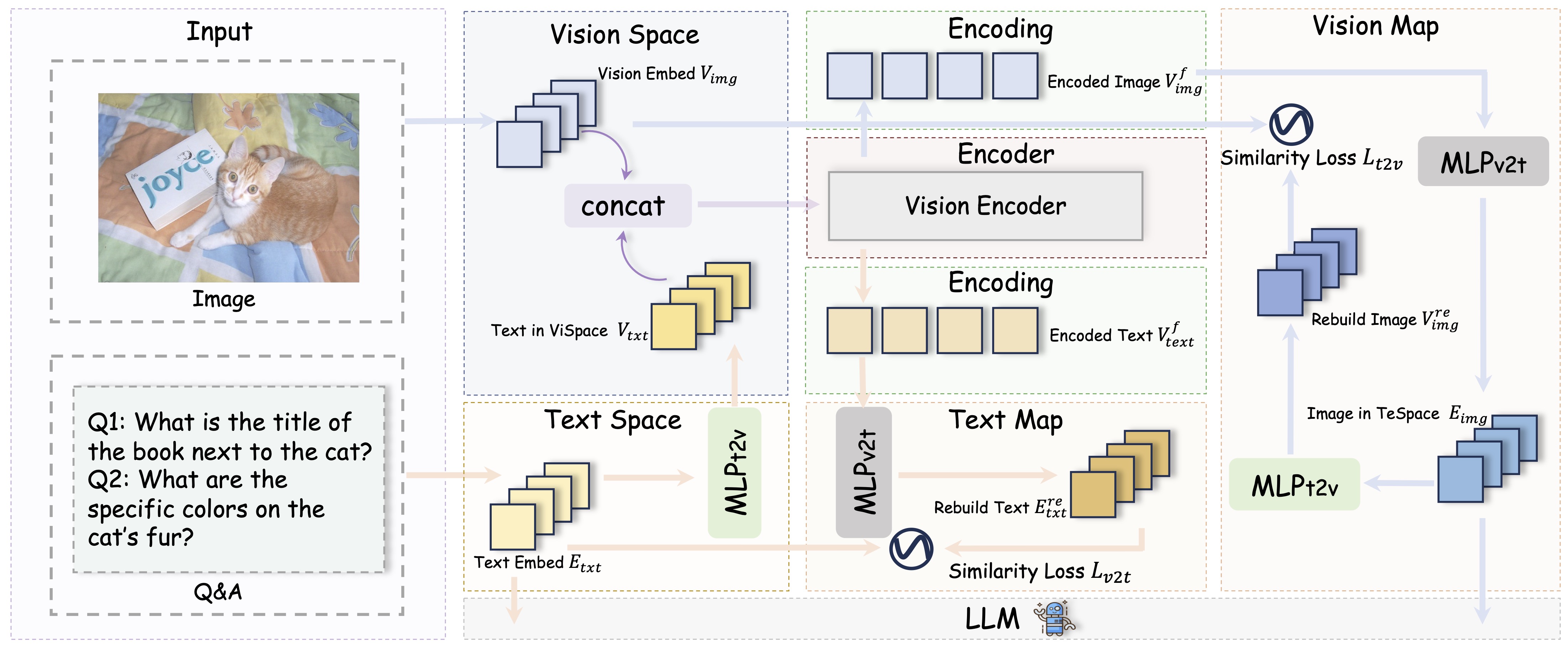

FUSION is a family of multimodal large language models that adopts a fully integrated vision-language architecture, enabling comprehensive and fine-grained cross-modal understanding. In contrast to prior approaches that primarily perform shallow or late-stage modality fusion during the LLM decoding phase, FUSION achieves deep, dynamic integration across the entire vision-language processing pipeline.

To enable this, FUSION utilizes Text-Guided Unified Vision Encoding, which incorporates textual context directly into the vision encoder. This design allows for pixel-level vision-language alignment and facilitates early-stage cross-modal interaction.

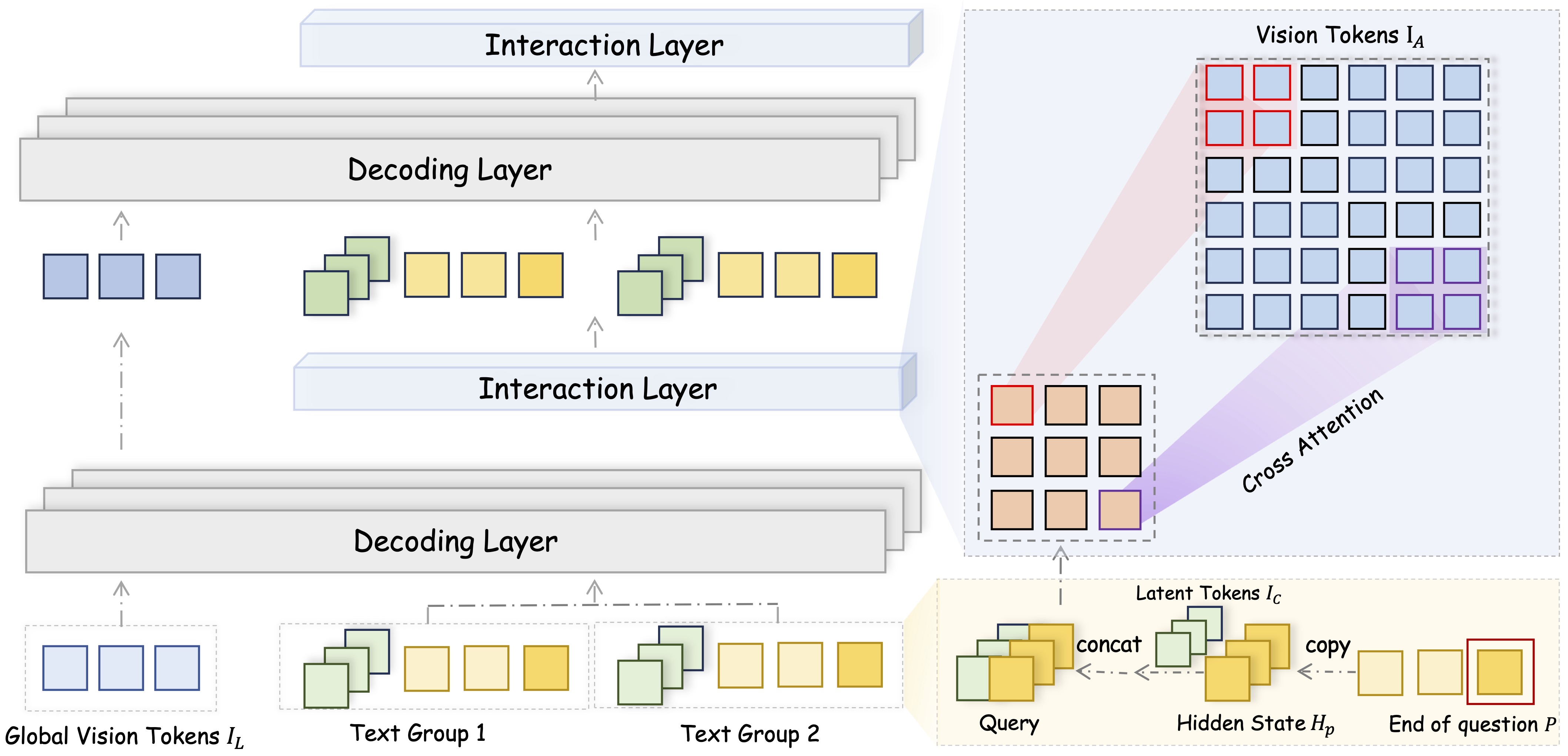

During decoding, FUSION employs Context-Aware Recursive Alignment Decoding strategy. This component dynamically aggregates and refines visual features based on the evolving textual context at each decoding step, allowing the model to capture question-level semantics with high precision.

To further enhance alignment and reduce the semantic gap between modalities, FUSION integrates Dual-Supervised Semantic Mapping Loss, which provides simultaneous supervision in both visual and textual embedding spaces. This dual-path guidance strengthens the consistency and semantic coherence of the fused representations.

Base Model

LLM: microsoft/Phi-3.5-mini-instruct

Vision Encoder: google/siglip2-giant-opt-patch16-384

Training Details

Training Strategies

FUSION is trained with a three-stage training framework, ensuring comprehensive alignment and integration between visual and linguistic modalities.

- Stage1: Foundational Semantic Alignment: We pretrain the vision encoder using extensive image-caption datasets to establish precise semantic alignment be- tween visual and textual representations.

- Stage1.5: Contextual Multimodal Fusion: In contrast to Stage 1, this intermediate stage incorporates various types of QA data along with image-caption pairs. This phase is designed to enhance the model’s adaptability in aligning vision and language representations across a broad spectrum of scenarios.

- Stage2: Visual Instruction Tuning: At this stage, we expose the model to various visual tasks, enabling it to answer downstream vision-related questions effectively.

Training Data

- 10M FUSION Alignment Data For Stage1

- 12M FUSION Curated Instruction Tuning Data For Stage1.5 and Stage2

Performance

Where to send questions or comments about the model:

https://github.com/starriver030515/FUSION/issues

Paper or resources for more information

- https://github.com/starriver030515/FUSION

- Coming soon~

- Downloads last month

- 0

Model tree for starriver030515/FUSION-X-Phi3.5-3B-Stage1.5

Base model

google/siglip2-giant-opt-patch16-384