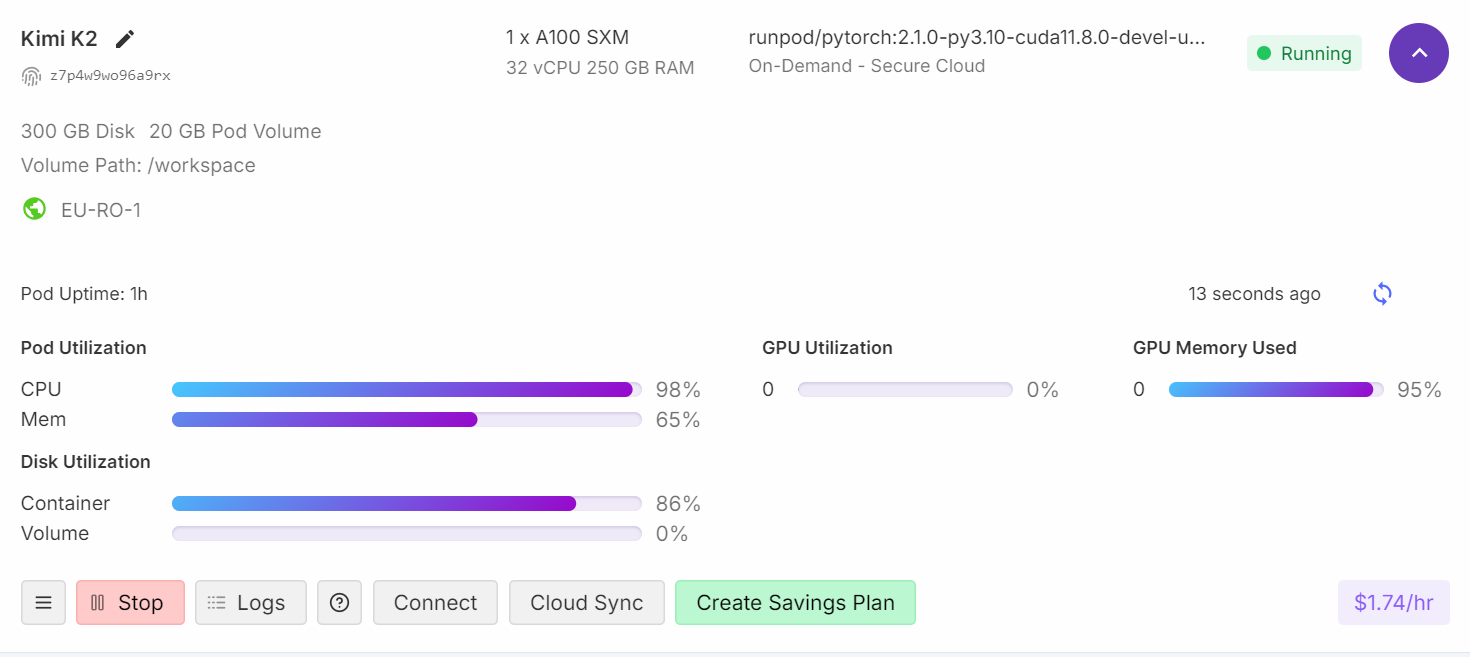

Slow Token Generation on A100

#13 opened by kingabzpro

What am I doing wrong? GPU memory usage is at 95%, but GPU compute utilization is 0% and CPU load is 99%.

Script:

```shell
./llama.cpp/llama-cli \
  --model /unsloth/Kimi-K2-Instruct-GGUF/UD-TQ1_0/Kimi-K2-Instruct-UD-TQ1_0-00001-of-00005.gguf \
  --cache-type-k q4_0 \
  --threads -1 \
  --n-gpu-layers 99 \
  --temp 0.6 \
  --min_p 0.01 \
  --ctx-size 16384 \
  --seed 3407 \
  -ot ".ffn_(up|down)_exps.=CPU" \
  --prompt "Hey"
```

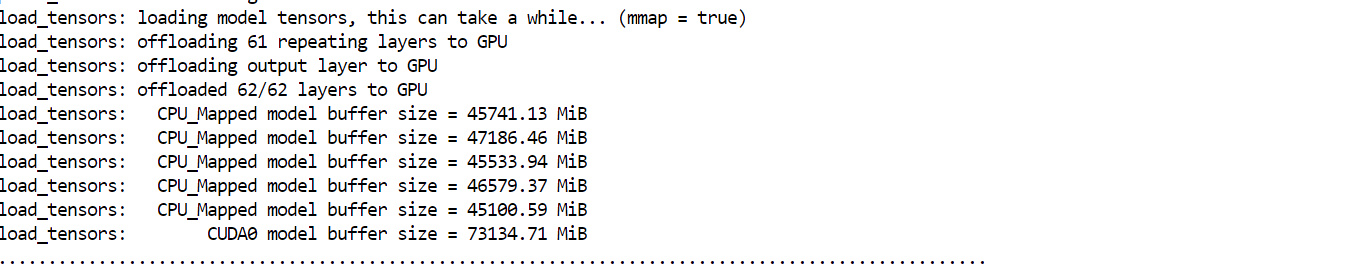
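To see what the `-ot` (`--override-tensor`) pattern in the command selects, the sketch below runs the same regular expression over a few tensor names with `grep -E`. The tensor list is hypothetical, written in the `blk.N.ffn_*_exps` naming used for MoE expert tensors in llama.cpp GGUF files. The sketch also assumes llama.cpp matches the pattern as an unanchored regex search over each tensor name.

```shell
#!/bin/sh
# Pattern copied from the -ot option in the question (the part before "=CPU").
pattern='.ffn_(up|down)_exps.'

# Hypothetical tensor names for one layer; real names come from the GGUF file.
tensors='blk.0.attn_norm.weight
blk.0.ffn_gate_exps.weight
blk.0.ffn_up_exps.weight
blk.0.ffn_down_exps.weight
blk.0.ffn_up_shexp.weight'

# grep -E looks for the pattern anywhere in each line (unanchored),
# mirroring the assumed search-style matching of -ot.
printf '%s\n' "$tensors" | grep -E "$pattern"
```

Under that assumption, only the routed up and down expert tensors are pinned to the CPU. The gate experts (`ffn_gate_exps`), the shared experts (`*_shexp`), and all other tensors remain eligible for GPU offload through `--n-gpu-layers 99`.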

Your system RAM usage looks too high. Those separate CPU buffers are inefficient; this happens when you forget to disable memory mapping with `--no-mmap`.

Also consider using ik_llama.cpp with `-fmoe` and `-mla 3` to improve speed and reduce memory usage.

Adding `--no-mmap` didn't improve the speed. I think I will try ik_llama later.