Is Llama-4-Scout-17B-16E-Instruct-GGUF a multimodal model that supports image input?

Hi! Thank you for this great model.

I’m trying to use the Llama-4-Scout-17B-16E-Instruct-UD-Q2_K_XL.gguf model for image+text input, like LLaVA. Specifically, I want to describe images with a prompt like this:

prompt = [{

"role": "user",

"content": [

{"type": "image_url", "image_url": {

"url": data_uri

}},

{"type": "text", "text": "Please describe this image."},

]

}]

I’m using llama-cpp-python with a Llava15ChatHandler, and passing in a clip_model_path. The image gets encoded successfully, but the model returns completely irrelevant answers (e.g., calling a dog photo "a tourist center").

Also,I inspected the GGUF metadata and found no indicators that this model is multimodal — no vision tower configuration or image-related fields are present.

general.name

general.architecture

...

llama4.context_length

llama4.embedding_length

...

tokenizer.chat_template

So I’m wondering:

Is this model actually multimodal? If not yet multimodal, are there plans to release a future version with image support?

Or is it currently text-only, and not designed to process the <|image|> token or CLIP embeddings?

If this model is even intended to be used with image inputs via llama-cpp-python, or if a compatible handler might be needed in the future?

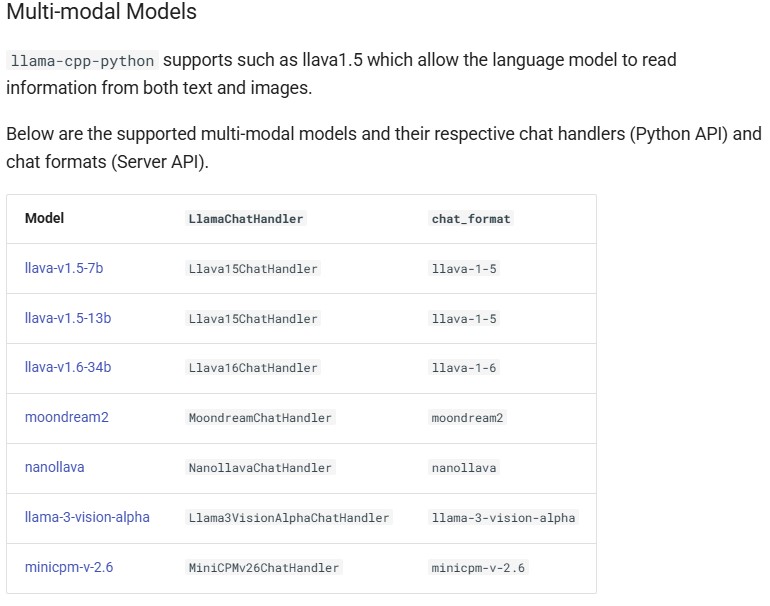

Additionally, I also checked the llama-cpp-python documentation and the list of built-in LlamaChatHandlers (e.g., Llava15ChatHandler, Llava16ChatHandler, etc.), and I couldn’t find any specific handler for Llama 4 Scout models.

Thank you!

Yes it is multimodal with our new update - please update llama.cpp