This is NOT a fine-tune.

This is the original OpenAI CLIP ViT-L/14@336 Text Encoder, converted to HuggingFace 'transformers' format. All credits to the original authors.

Why?

- It's a normal "CLIP-L" Text Encoder and can be used as such.

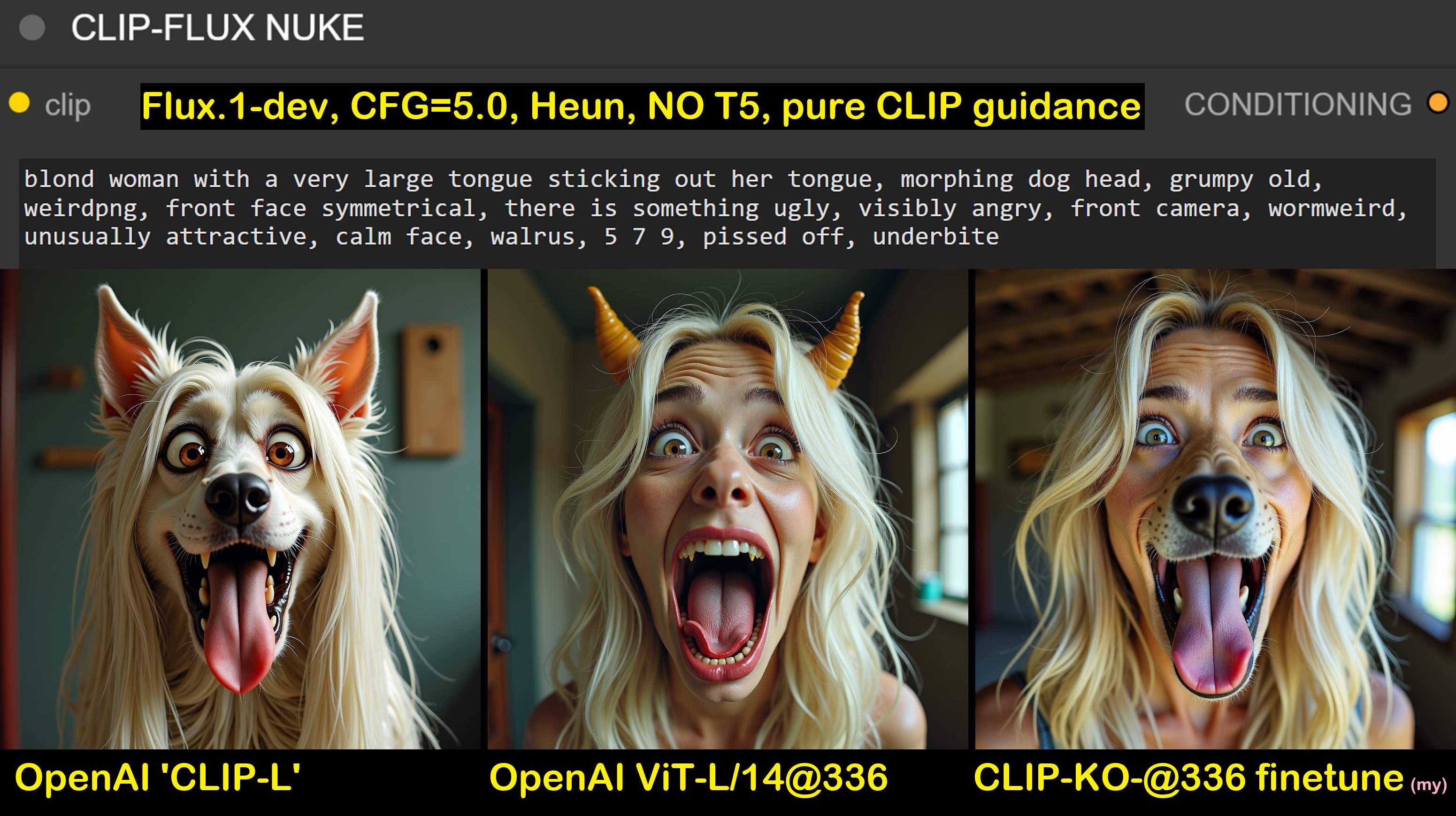

- See below (Flux.1-dev, CLIP only guidance, CFG 3.5, Heun).

- For my fine-tuned KO-CLIP ViT-L/14@336 -> see here

Inference Providers

NEW

This model isn't deployed by any Inference Provider.

🙋

Ask for provider support

Model tree for zer0int/clip-vit-large-patch14-336-text-encoder

Base model

openai/clip-vit-large-patch14-336