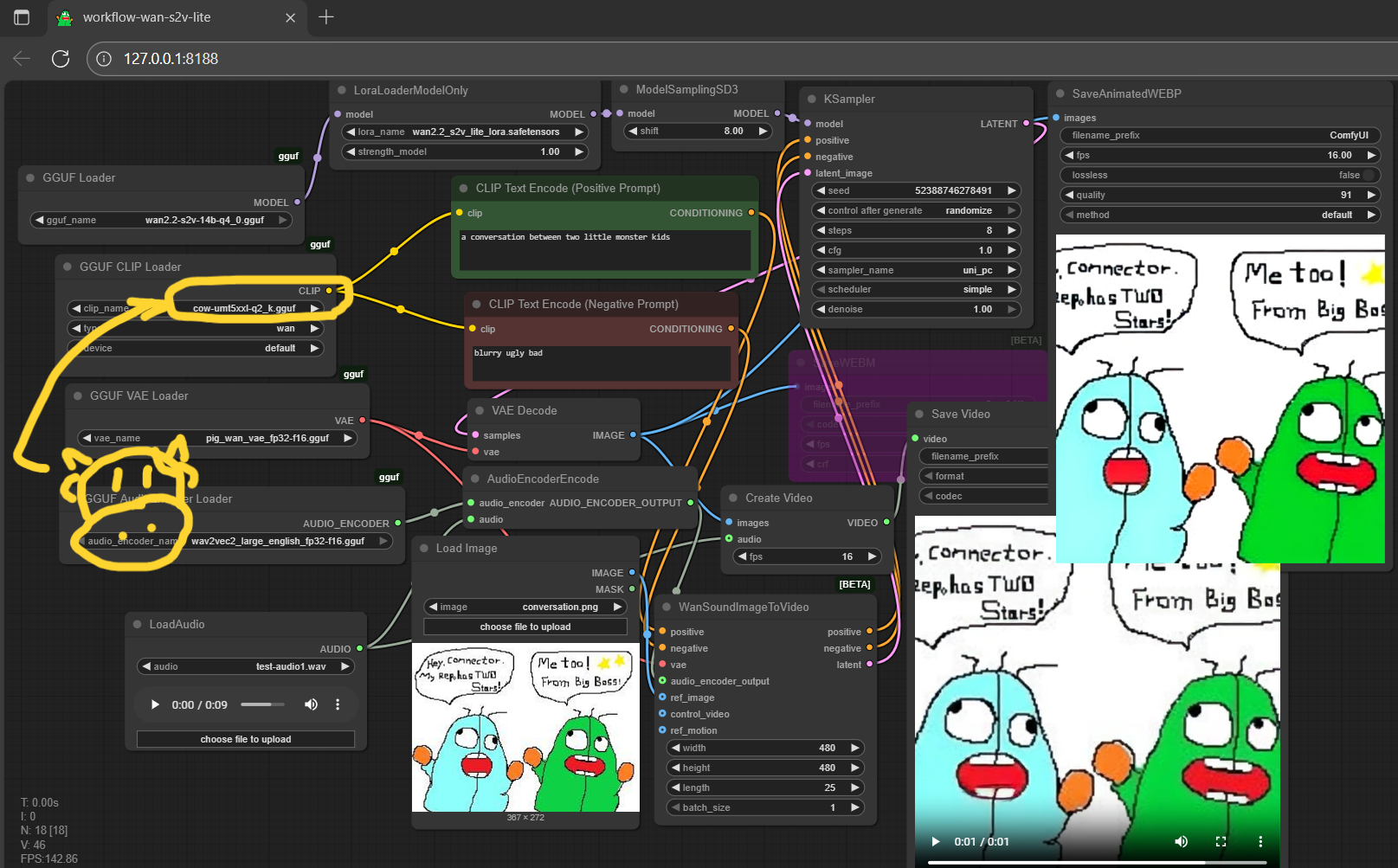

🐮cow architecture gguf encoder

- don't need to rebuild tokenizer from metadata ⏳🥊

- don't need separate tokenizer file 🐱🥊

- no more oom issues (possibly) 💫💻🥊

eligible model examples

- use cow-mistral3small 7.73GB for flux2-dev

- use cow-gemma2 2.33GB for lumina

- use cow-umt5base 451MB for ace-audio

- use cow-umt5xxl 3.67GB for wan-s2v or any wan video model
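the pairings above can be sketched as a small lookup; this is only an illustration, not part of any node or library. `COW_ENCODERS` and `pick_encoder` are hypothetical names, and treating every model name that starts with `wan` as a wan video model is an assumption made for the sketch.

```python
# Hypothetical lookup built from the list above.
# Encoder names and file sizes are copied from this card; nothing else is implied.
COW_ENCODERS = {
    "flux2-dev": ("cow-mistral3small", "7.73GB"),
    "lumina": ("cow-gemma2", "2.33GB"),
    "ace-audio": ("cow-umt5base", "451MB"),
    "wan-s2v": ("cow-umt5xxl", "3.67GB"),
}


def pick_encoder(model: str) -> tuple[str, str]:
    """Return (encoder name, file size) for a target model name."""
    key = model.lower()
    if key in COW_ENCODERS:
        return COW_ENCODERS[key]
    # Assumption: any name starting with "wan" is a wan video model,
    # which the card pairs with cow-umt5xxl.
    if key.startswith("wan"):
        return COW_ENCODERS["wan-s2v"]
    raise KeyError(f"no cow encoder listed for {model!r}")


if __name__ == "__main__":
    for name in ("flux2-dev", "lumina", "ace-audio", "wan-s2v"):
        encoder, size = pick_encoder(name)
        print(f"{name}: {encoder} ({size})")
```

a plain dict keeps the pairing readable and easy to extend when more cow encoders appear.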

the example workflow above is from wan-s2v-gguf; the cow encoder is a specially designed clip, and even the lowest q2 quant still works very well; upgrade your node for cow-encoder support🥛🐮 and do drink more milk

- Prompt: a conversation between cgg and connector

reference
